Posts posted by AlexA

-

Interesting way of looking at it Ned, thanks for the insight.

-

Hi Guys,

Tinkering with my FPGA code today, I ran into a question which I'd never really contemplated before. Say I have an unsigned FXP number with word length = 1 and integer word length = 2. LabVIEW tells me that this gives a maximum of 2 with a delta of 2, so the number can either be 0 or 2.

This makes no intuitive sense to me. The way I understood it, the word length is the total number of bits used to capture the number, and the integer word length is the number of bits dedicated to capturing the integer portion. In other words, it kind of defines the "magnitude" of the number that can be captured, and the remaining bits give the "precision" to which the number is captured. So how is it even possible for the "integer word length" to be greater than the "word length"?
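For what it's worth, the numbers LabVIEW reports are consistent with treating the stored bits as a plain integer scaled by 2^(integer word length - word length). Here is a minimal Python sketch of that arithmetic. The function name `unsigned_fxp_range` is made up for illustration (it is not a LabVIEW API), and the scaling rule is my assumption about how the type works:

```python
from fractions import Fraction

def unsigned_fxp_range(word_length, integer_word_length):
    """Return (minimum, maximum, delta) for an unsigned fixed-point type.

    Assumption: value = stored_integer * 2**(integer_word_length - word_length),
    where stored_integer is an unsigned integer of word_length bits.
    """
    delta = Fraction(2) ** (integer_word_length - word_length)
    max_stored = 2 ** word_length - 1   # largest unsigned integer in word_length bits
    return Fraction(0), max_stored * delta, delta

print(unsigned_fxp_range(1, 2))   # the case from this post
print(unsigned_fxp_range(8, 4))   # 4 integer bits and 4 fractional bits
```

Under that assumption, word length 1 with integer word length 2 means the single stored bit has a weight of 2, which gives exactly the two values 0 and 2.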

Insight much appreciated.

Cheers,

Alex

-

Hi Daklu,

Grrr, I know what you mean, I just lost a post.

Anyway, I was saying that I've figured out that new slave loops should be children of your parent class with different execution loops (blindingly obvious in retrospect). I've struck something strange though. The children don't seem to actually inherit the parent's data type (the messenger queues). The same messenger queue types need to be placed into the child data.ctl file manually, or else the child's execution loop, which I've copied from your parent and exchanged the object type from parent to child, is broken.

From my own experimentation and understanding, children should always inherit all the data fields of their parents, even if they're not visible on the child data.ctl front panel, correct me if I'm wrong.

Here are some pictures showing what I mean.

With inheritance checked in the class properties but the data not manually moved onto the child data.ctl file:

With inheritance checked and the data moved in manually:

Your thoughts?

Cheers,

Alex

-

As per Daklu's request in this thread, copy and pasting discussion on the slave loop idea.

Hey Daklu,

Yeah it is probably possible. Right now I'm sipping my morning coffee and going over your Slave Loops concept. I feel that it's probably very similar to what I'm trying to do with my code but implemented in an OOP sense. Hopefully I can draw some parallels and a light will click. I'm particularly interested in the idea of launching and shutting down a slave process as needed.

I've gone through a really torturous route of wrapping each process inside a message handler framework, with an FGV pertinent to each process which is solely responsible for passing the stop message from the message handler to its process. The "process-nested-inside-message-handler" is in turn launched by a master message handler which handles input from the user to determine what tests should take place. I just had a blinding realisation as I wrote this that I could have handled this much simpler with a 0 time out queue in each process that listened for a stop message and if timed out did whatever the process was trying to do. One of the key assumptions that led me down my torturous path was that I must have a default case that handles messages which don't correspond to real messages. *Realisation* In fact, I could just use the timeout line on the queue to nest another case structure and still handle the default (incorrect message) case, gah, so much time wasted.
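To make the zero-timeout idea concrete, here is a rough sketch in Python rather than LabVIEW (my actual code is graphical). The name `run_process`, the "Stop" string and the `max_iterations` guard are all made up for illustration:

```python
import queue

def run_process(commands, do_work, max_iterations=1000):
    """Continuous process that checks for a stop message on every pass.

    commands: queue.Queue carrying string messages (hypothetical format).
    do_work:  callable holding whatever the process normally does.
    """
    work_done = 0
    unknown = []
    for _ in range(max_iterations):        # guard so the sketch always ends
        try:
            message = commands.get_nowait()   # the zero-timeout dequeue
        except queue.Empty:
            do_work()                         # timeout case: do the real work
            work_done += 1
            continue
        if message == "Stop":
            break                             # stop message: leave the loop
        unknown.append(message)               # default case: not a real message
    return work_done, unknown
```

The point is that the timeout branch and the default (incorrect message) branch are separate, so the process can keep working and still catch bad messages.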

From looking at your slave loops template posted in this thread, am I right in assuming that if you wanted a different slave behaviour (a new plug in if you will), that you would merely make a new execute method in the SlaveLoop class? Or would you instantiate a whole new child class of SlaveLoop.lvclass with its own execute method?

Following on from my confession of my own wrangling efforts, how would you formulate one of your slave loops that needed to be a continuous process but also accept stop commands, would you use the timeout method I thought of above, or something else?

What would you do if you wanted to be able to close and reopen your slave loop without locking your code up? What I'm getting at here is, what is the OOP equivalent to launching a sub-vi using a property node with "Wait Until Done" set false?

Thank you for the opportunity to pick your brains! Your posts are always amazingly informative, even if it does take me months for things to finally click into place...

-

Not at all, pasting now.

-

Mr. Manners I'd just like to reanimate this thread to second your commitment to learning how to LVOOP. I've just spent the last week adjusting and readjusting plugin type VIs over and over, having to manually implement the same changes across each VI every time I uncovered a new bug in my implementations. Ugghh, now if only I could wrap my head around how to think about the launch methods for classes and equate that with running Sub-VIs programmatically, I think I'll be able to crack something important!

I need to set aside some time to sit down and try and DO, not just scratch my head over what's possible and not possible. If only I had more time!

-

Hahahaha, Thanks Daklu. I do appreciate the work that's gone into LapDog, and it does seem like a really elegant and light messaging system. Unfortunately re-engineering my code (again) is being swiftly pushed down the priority list in favour of moving onto other more pressing projects. Hopefully I can come back to it at some point, and I'll definitely consider using it as the back bone of my future projects.

-

Hmmm, I've read over the thread associated with LapDog's release on the Lava forums, and after playing with setting up and sending messages around using LapDog, I can understand the appeal of "strict" security implicit in using message classes to send data around, but it seems that it's a safer OOP duplication of variant type messaging?

Please bear in mind that the above is in the context of my very poor understanding of OOP, as such there may be some innate power of OOP that I've missed when playing around (more than likely).

-

Ok, it seems it's working now. I honestly have no idea what it was doing yesterday but I assume some sort of corruption on download or something like that. Anyway, I'll have a play around and see what I can make of it.

-

Hey Daklu,

Thanks for the insight. I've had a look at Actor Framework but concurred with someone who described it as not being suitable to drop into an already established project without massive re-engineering. I wanted to have a look at LapDog, but when I downloaded it, it didn't seem to run correctly. Double clicking or running the .vip package will cause the VIPM package handler window to open but almost instantly close again.

I was trying to install it for LV 2011 if that makes any difference.

Once again, thanks for your insight on re-entrancy!

Alex

-

Sorry about the second post, I had to refresh page so can't edit my OP.

I've managed to narrow down what's causing the lock. When the "Dequeue Message.VI" is placed on a block diagram in two separate while loops and asked to operate on two different queues in your typical asynchronous fashion, it will lock. So I guess I've answered my own question (it seems the only way is to make it re-entrant), but now I'm left wondering why this should be the case. Also, is the answer (while avoiding re-entrancy) to make the message handler sub-VIs into methods on a message class? I don't know why I'm scared of re-entrancy, I just feel like maybe it will introduce some bugs that I can't predict (due to my lack of knowledge).
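One way to picture the lock, assuming a non-reentrant sub-VI behaves like a function that only one caller can be inside at a time: if loop A is sitting inside it waiting on an empty queue, loop B can't get in, even though B's queue has a message ready. A rough Python sketch of that model (the lock and all the names are stand-ins I made up, not anything from LabVIEW):

```python
import queue
import threading
import time

# Stands in for the single shared instance of a non-reentrant sub-VI.
_shared_instance = threading.Lock()

def dequeue_non_reentrant(q, timeout):
    # Only one caller may be "inside" at a time.
    with _shared_instance:
        return q.get(timeout=timeout)

def dequeue_reentrant(q, timeout):
    # Every caller gets its own instance, so nobody waits on anybody else.
    return q.get(timeout=timeout)

def time_second_caller(dequeue, first_timeout=1.0):
    """Return how long loop B waits for a message that is already queued
    while loop A is blocked on an empty queue."""
    queue_a, queue_b = queue.Queue(), queue.Queue()
    queue_b.put("message for B")

    def loop_a():
        try:
            dequeue(queue_a, first_timeout)   # nothing ever arrives here
        except queue.Empty:
            pass

    thread_a = threading.Thread(target=loop_a)
    thread_a.start()
    time.sleep(0.2)                           # give loop A time to get inside
    started = time.monotonic()
    dequeue(queue_b, timeout=5.0)
    elapsed = time.monotonic() - started
    thread_a.join()
    return elapsed
```

In this model the non-reentrant version makes loop B wait until loop A's dequeue times out, while the re-entrant version returns straight away. With no timeout wired at all, loop A would never leave, which would look exactly like a lock.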

On a related question. If I take the approach of making the message handling code OO, would the resulting memory footprint be similar to multi-clone re-entrant execution? Or somehow similar to single memory instance re-entrant execution? (Which I'll admit I haven't yet tested).

-

I recently went through my code wrapping all my dequeue prims inside Sub-VIs which I've called Dequeue Message.VI. It's a simple wrapper that handles some basic errors such as queue reference loss and pretties up the unbundle by name code a little bit.

I noticed that messages enqueued weren't getting through to the dequeue. So I poked around a bit and discovered that the reference inside the Dequeue Message.VI was different to that outside. My best guess was that somehow, because I was using multiple instances of this Sub-VI concurrently, when debugging I was looking at a different instance of the Sub-VI. Even so, they shouldn't be "losing" their message, so my best guess was that somehow the multiple instances were causing the Sub-VI to lock. Which sounds like bs even to myself, as I was under the impression that every time you dropped a Sub-VI into code, the compiler effectively inlined another copy of that code. In other words, from all my understanding, it should be possible to drop my Dequeue Message.VI into any number of loops and each dropped copy be independent of the other.

Anyway, just to see what happened, I changed the execution of "Dequeue Message.VI" to allow re-entrant execution and allocate different memory for each clone. Lo and behold, it worked.

Can anyone explain to me why this is so? Also, if I've done something cripplingly obvious that means I don't have to allow re-entrant execution, please let me know.

Edit:

I've pulled the code apart and created a scratch pad type test where I just passed a message from one loop to the other. It behaves as expected, even with the dequeue hidden inside a sub VI. I'm completely stumped why, in my main set of code it's hanging at the dequeue...

-

Hi JMak,

As dj mentioned above, learn to love the queue! I didn't really like the JKI template as it locked the UI, and I didn't really intuitively grasp how to work with it to asynchronously launch plugins. There are many different variations of the producer-consumer, or master-slave, architecture. At its most basic, it's just two while loops communicating via a queue.
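If it helps to see the shape of it in text form, here is a bare-bones sketch in Python (LabVIEW itself is graphical, so this is only an analogy). The doubling step and the `None` stop marker are arbitrary choices for the example:

```python
import queue
import threading

def producer(q, items):
    # First loop: push data onto the queue, then a stop marker.
    for item in items:
        q.put(item)
    q.put(None)                       # None means "no more data"

def consumer(q, results):
    # Second loop: wait on the queue and handle whatever arrives.
    while True:
        item = q.get()
        if item is None:
            break
        results.append(item * 2)      # stand-in for real processing

def run(items):
    q = queue.Queue()
    results = []
    threads = [
        threading.Thread(target=producer, args=(q, items)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

The two loops never call each other directly; everything they share goes through the queue.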

Good luck, I think you're taking the first big step into Labview programming.

-

Hmm, had a little play and it appears the compiler is too smart for its own good. If you put the VIs in a constant wired case structure (opposing side), they will NOT be included.

-

Yeah, I've already dropped a suggestion regarding having to deploy an RT project as a startup. Will drop another one regarding Dynamic VIs. The solution I've come up with in the mean time is to call each Dynamic VI once in a normal fashion (sub-vi directly placed on block diagram) and shut it down immediately as part of the host code start-up, this forces the VI to be deployed as a dependency which can subsequently be launched dynamically.

-

Hmmmmmm, I've had a tiny breakthrough with my problem. Thanks to you guys above but your answers didn't really help my problem. Mads, I think the answer might have been somewhere in your post but my brain did flips trying to follow your example.

So, the problem was, when a LabVIEW Real-Time VI is run in Development mode (i.e. just by pressing the arrow), any sub-VIs which are called dynamically (via a "Start Asynchronous Call") will NOT be deployed in the correct sense.

The only way I could find to get Asynchronously Called VIs to launch in development mode was to manually deploy them. As in, go to the project window, right click them all and select deploy. This opens up their windows, and as long as the windows aren't closed, they will be recognised using the App.Dir property plus their name and subsequently launched correctly via the main VI's dynamic launch process.

I'm really not sure why this is, but I think it's a bug in LabVIEW not recognising the potential dependencies of Asynchronously Called VIs during deployment.

Now, to see if there is a programmatic way to deploy these VIs so I can just hit Run in my Windows host code.

-

Hey Swenp, thanks for the advice. In my explorations I created a build that had the sub-VIs included under the "always included" box, but it didn't seem to help. If I include them in a build via the "always included" box, how should I path to them? Simply via the App.Dir plus name method? In which case, what was I missing previously?

-

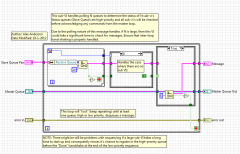

Hey guys, thanks heaps for your help! You've really seeded my mind with some new avenues to think along. For anyone interested, this is the poll N queues message handler I came up with.

As I noted in my previous post and in the block diagram, this is not a very good method unless you don't care at all what order the sub VI's start in.

Thanks again for the help, will update when I've solidified a method.

-

Hey guys,

I've stumbled across a problem when trying to use the "Application path" constant along with the name of a sub-VI to obtain a reference for dynamic launching (using the asynchronous call and forget VI).

Putting the Sub-VI in the application folder, or alternatively a special "Sub-VI" folder, then using the "Application Path" constant plus the name of the Sub-VI to obtain a reference works fine on a Windows PC. When deployed to real-time you get "Error 7", sub-VI not found. So I'm wondering, where exactly does a dynamically launched sub-VI go when it's deployed to real-time, and how do I build a path to it programmatically for the purposes of dynamic launching?

Thanks in advance,

Alex

-

The thing is, if a single status queue is used for the master to action on statuses from the subVIs, that queue must be the same queue that is driving that master's state machine (else you have the problem of the master loop reading two queues anyway, one for statuses and one for commands).

That feels wrong to me. I'm not sure why, but I feel like the purity of communication should be 2 way only; for sub modules to tie into a master's command queue to get their own statuses across feels dirty.

I've actually come up with a message handler that "locks" like a queue with no timeout wired, but manages to poll N different high-priority queues and one low-priority queue (the master's command queue). I'll link a png of it when I get back to work tomorrow, but basically it polls N high-priority queues and, if there is nothing in them, polls a low-priority queue. If there's nothing in that queue either, then it reloops until a message is found on at least one queue.
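In text form, the polling logic looks roughly like this Python sketch. It is only an approximation of the block diagram: the function name, the returned tuple and the short sleep between passes are my own choices for the example, whereas the real thing uses timeouts on the queue polls:

```python
import queue
import time

def wait_for_message(high_priority_queues, low_priority_queue, poll_interval=0.01):
    """Block until any queue has a message; high-priority queues win.

    Returns (priority, index, message), where index is the position of the
    high-priority queue that answered, or None for the low-priority queue.
    """
    while True:
        for index, q in enumerate(high_priority_queues):
            try:
                return ("high", index, q.get_nowait())
            except queue.Empty:
                continue                       # nothing here, try the next one
        try:
            return ("low", None, low_priority_queue.get_nowait())
        except queue.Empty:
            time.sleep(poll_interval)          # nothing anywhere: re-loop
```

From the caller's side it behaves like a single blocking dequeue, even though it is really cycling through N + 1 queues.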

Considering the use case where the user asks to launch 3-4 modules in sequence, there is a danger (handled by setting sufficiently long timeouts on the queue polls in the message handler) that somehow the module launcher control (master) queue will progress right through its requested launch sequence before any of the Sub VIs actually manage to fire up and return their statuses. As long as at least one Sub VI gets its statuses and handshaking requests into one of the N high-priority queues, it's likely that all of them will. I can't really think of a more elegant way to handle sequences like that.

So to be clear on how I have been passing sequences of commands that require a confirmation. The UI code will take an event and turn it into the correct sequence of Sub VI launches which are sent to a Sub VI handling loop, at the end of this sequence it loads the specific command "Done" which is a handshaking command, what that does is that when the Sub VI handling loop dequeues "Done", it loads its own "Done" command back onto a return queue which goes to the UI loop, which is waiting at a dequeue.
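Sketched in Python, the "Done" handshake looks something like this. The function names and the "Launch ..." strings are invented for the example, and the handler here exits after echoing "Done" purely so the sketch terminates (the real loop keeps running):

```python
import queue
import threading

def subvi_handler(commands, replies, launched):
    # Sub VI handling loop: work through the sequence in order.
    while True:
        command = commands.get()
        if command == "Done":
            replies.put("Done")        # echo the handshake back to the UI loop
            break                      # sketch only: a real handler keeps looping
        launched.append(command)       # stand-in for actually launching a Sub VI

def ui_launch_sequence(sequence):
    commands, replies, launched = queue.Queue(), queue.Queue(), []
    handler = threading.Thread(target=subvi_handler,
                               args=(commands, replies, launched))
    handler.start()
    for command in sequence:
        commands.put(command)
    commands.put("Done")               # handshake marker ends the sequence
    reply = replies.get(timeout=5)     # UI loop waits here at a dequeue
    handler.join()
    return reply, launched
```

Note that this only confirms the handler has dequeued everything up to "Done". It says nothing about whether each launched Sub VI has finished starting up, which is exactly the gap I'm struggling with.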

Do you have any recommendations for handshaking logic if you use only a single status report queue? I couldn't figure out how to make the Sub VI handler wait for a handshake from the subs after each one it tried to launch if there was already a sequence of launch requests in the master queue (hope I'm being clear). The best I came up with when working with a single return queue for all Subs as well as commands from the higher level stuff, was to allow requests from Sub-VIs to be enqueued at the opposite end, so as each Sub VI fired up it loaded its own requests onto the top of the queue. The problem with this is that there's still a foreseeable chain of events where a Sub-VI's start-up routine takes so long that before it can load its request onto the queue, the queue finishes its sequence and returns command to the top level code. In fact, I seemed to see this case frequently with a certain launch sequence. I really don't like the idea of incorporating "Wait x seconds" in a launch sequence as it feels crude and superfluous if I can just come up with the right handshaking logic.

So, once again, to crystallise my ponderings, when working in an environment which has been specifically coded to be asynchronous, what is the best way to ensure that a strict sequence of events is followed?

Edit: The more I think about it, the more dirty my new code feels to me. Some of the launched sub-modules need to pass data between each other, so there's a strong potential for a consumer to request a queue before a producer has published it, requiring re-request code. Ugggh, if I could just think how to ensure that each Sub-VI gets all the way through its launch sequence before the next one is launched (WITHOUT using crude "wait x seconds" code) I'd be set.

Edit 2 (2 minutes after edit 1): I suppose I could format the commands such that they can be parsed for specific information (like the command string could read "Status:Request Data" from a Sub VI and "VI Control:Launch X" from a master level command). That way perhaps commands could be dequeued in some sort of order then requeued if they don't match what's...required...at the time? Hmmm, have to ponder the possibilities a bit more. I wish I wasn't under PhD time pressure, I'm enjoying LabVIEW more than muscle bath design at the moment!
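A quick sketch of what that parsing could look like, in Python for lack of a text form of LabVIEW. The "Category:Detail" split and the helper names are just my first guess at a format, nothing I've settled on, and instead of literally dequeuing and requeuing it picks the first matching command and leaves the rest where they are:

```python
from collections import deque

def parse_command(text):
    """Split "Category:Detail" into its two parts."""
    category, _, detail = text.partition(":")
    return category.strip(), detail.strip()

def take_next(pending, wanted_category):
    """Remove and return the first pending command in wanted_category.

    Commands in other categories stay queued in their original order.
    Returns None if nothing matches.
    """
    for index, text in enumerate(pending):
        if parse_command(text)[0] == wanted_category:
            del pending[index]         # safe: we return straight after deleting
            return text
    return None

pending = deque(["VI Control:Launch X", "Status:Request Data", "VI Control:Launch Y"])
print(take_next(pending, "Status"))
print(list(pending))
```

Here the status request gets handled ahead of the launch commands, and the launch commands keep their order for later.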

-

Hi guys,

I've had to re-do some code I've been working on as it needed to be deployed to a real-time system. I'm taking this opportunity to make some architectural changes because some of the inter-process signalling felt really dirty. Here's a brief outline of what I'm doing.

Host machine VI controls Real-Time VI via network stream.

Real-Time VI takes messages sent by network stream and performs some "program control" type logic (telling other mediator loops to launch sub-VIs, launch an FPGA based VI, etc.).

I'm currently wrestling with the practical implementation of an abstraction I want to use (because it feels right). That is, I want each dynamic sub-VI to be launched with its own pair of messaging queues, one to receive commands from its master, the other to send status reports back to the master.

The problem I'm having is I can't think of a really elegant way for the Sub-VI launcher to monitor "n" status queues.

To give some more context, the Sub-VI launcher loop has its own pair of messaging queues which connect it to the Real-Time VI's master loop, that is, the loop that handles commands from the host machine via the network. Previously the sub-VI launcher loop has presented its own command queue (that is, the command queue used by the Real-Time master loop to tell it to launch VIs) to listen for and handle status updates from the Sub-VIs. This has run me into trouble when trying to elegantly handle shut-down orders, as suddenly the sub-VI launcher loop receives a stack of status updates from a raft of different Sub-VIs and must shut them down in the correct order and then report to the master loop so further action can be taken.

I feel as if somehow abstracting communication between the Sub-VI launcher and its progeny to an individual messenger pair per child will allow me to perform sequences of shut-down events elegantly.
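To show what I mean by a messenger pair per child, here is a rough sketch in Python instead of LabVIEW. The `Child` class, the child names and the "Running"/"Stop"/"Stopped" strings are all hypothetical:

```python
import queue
import threading

class Child:
    """Stand-in for a dynamically launched sub-VI with its own queue pair."""

    def __init__(self, name):
        self.name = name
        self.commands = queue.Queue()   # launcher -> child
        self.status = queue.Queue()     # child -> launcher
        self.thread = threading.Thread(target=self._run)
        self.thread.start()

    def _run(self):
        self.status.put("Running")
        while self.commands.get() != "Stop":
            pass                        # ignore everything except "Stop"
        self.status.put("Stopped")

def shut_down_in_order(children, order, timeout=5):
    """Stop each named child in turn, waiting for its own confirmation."""
    stopped = []
    for name in order:
        child = children[name]
        child.commands.put("Stop")
        while child.status.get(timeout=timeout) != "Stopped":
            pass                        # skip earlier reports such as "Running"
        child.thread.join()
        stopped.append(name)
    return stopped
```

Because each child reports on its own status queue, the launcher only has to listen to the one child it is currently shutting down, so the order is enforced by construction rather than by sorting through a pile of mixed status updates.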

So, to synthesise, how can a master-loop elegantly monitor all its dynamically launched children and still respond to commands from its master?

Thanks for any insight you guys give,

Alex

Edit: A final note, I would prefer to stay away from an OOP solution for now. I've tried in the past to look into it but I just don't have the time to study it at the minute. So if you could confine answers to standard LV "state-machine" type architecture (a while loop enclosing a case structure), that would be much appreciated.

-

So the situation I'm faced with is as follows. I have a PXI crate with an FPGA card in it that has multiple DIO connectors. Each one of these connectors is wired out to a laser interferometer card's hardware interface. One connector corresponds to one channel on the laser interferometer. We have code that takes the input to each DIO, formats it, then presents each channel's information on a "To-Host DMA".

The PXI crate is connected to a Real-Time box. We (my workmate and myself) want to be able to work from two different Windows machines, connect to the Real-Time box and subsequently each read a different To-Host DMA for the information from different channels.

Is there a graceful way to share FPGA resources like this between multiple projects? If not, what would be the best way to get this information (each individual channel) out of the FPGA, into the RT box and subsequently access it from the different machines?

Regards and thanks for your help in advance,

Alex

-

Hey Ravi,

Thank you very much! I didn't know queues behaved like that (error input true to the queue returns a blank element). That's really weird, why not just have it return nothing, i.e. no dequeue and pass the error cluster?

Once again, thanks for your help!

Kind regards,

Alex

-

The idea of throughput and handshaking (posted in Embedded)

Hi again guys,

I've had my FPGA code running for a while now, but I'm hesitant to trust it, because I don't really understand the concept of handshaking and throughput. Say I have the following block of code:

My question is, if the multiply takes 1 cycle/sample to compute (and this goes for all blocks that say they take 1 cycle/sample), does it return its result on the exact same cycle that it receives a valid point (i.e. when divide's "ready for input" goes true and a deviated bin element is dequeued from the FIFO)?

OR

Does it take another cycle and thus, I should always load the result of multiply (and any other high throughput maths) into a shift register to be absorbed by divide on the following loop iteration?
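To pin down the two possibilities I'm asking about, here is a toy simulation in Python. It does not model LabVIEW's high throughput maths nodes (I don't know which behaviour they have, hence the question); it only shows what divide would see with and without a shift register in between. The multiply-by-2 is an arbitrary stand-in:

```python
def run_loop(samples, use_shift_register):
    """Simulate a loop where multiply feeds divide, one sample per iteration.

    Returns the value presented to divide on each iteration.
    """
    register = None                      # shift register starts uninitialised
    seen_by_divide = []
    for sample in samples:
        product = sample * 2             # stand-in for the multiply block
        if use_shift_register:
            seen_by_divide.append(register)   # divide gets last iteration's product
            register = product                # stored for the next iteration
        else:
            seen_by_divide.append(product)    # divide gets this iteration's product
    return seen_by_divide

print(run_loop([1, 2, 3], use_shift_register=False))
print(run_loop([1, 2, 3], use_shift_register=True))
```

With the shift register, everything divide sees is one iteration late and the first iteration has nothing valid, so the handshaking signals would need to be delayed along with the data.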

Thanks for your help.

Alex