Posts: 1,986

Days Won: 183

Posts posted by drjdpowell

-

You've solved your problem, but this is the solution I just did:

-

- Popular Post

I've put 0.4 on VIPM.io.

-

- Popular Post

I'm working on the next JSONtext functionality: features to improve support for JSON config files.

See https://forums.ni.com/t5/JDP-Science-Tools/BETA-version-of-JSONtext-1-6/td-p/4146235

-

I reported that in 2018: CAR 605085. I don't think they are planning on fixing it. Must use C strings under the hood.

-

You could ignore the error, with NaN as default pressure and temperature. Or you could read flow first, and only get pressure/temperature if flow isn't "<unset>".
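As a rough illustration of those two options in Python (not LabVIEW or JSONtext code), assuming a hypothetical config with `flow`, `pressure` and `temperature` keys, where `"<unset>"` marks a value that has not been configured:

```python
import json
import math

UNSET = "<unset>"  # sentinel string, as mentioned in the post


def read_number(cfg, key, default=math.nan):
    """Option 1: ignore the conversion error and fall back to NaN."""
    try:
        return float(cfg[key])
    except (KeyError, TypeError, ValueError):
        return default


def read_channel(cfg):
    """Option 2: read flow first; only read the rest if flow is set."""
    if cfg.get("flow") == UNSET:
        return None
    return {k: read_number(cfg, k) for k in ("flow", "pressure", "temperature")}


# Hypothetical config content, for illustration only
cfg = json.loads('{"flow": "<unset>", "pressure": "<unset>", "temperature": 21.5}')
```

The first option keeps the calling code simple, at the cost of NaN values flowing downstream; the second makes the "not configured" case explicit.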

-

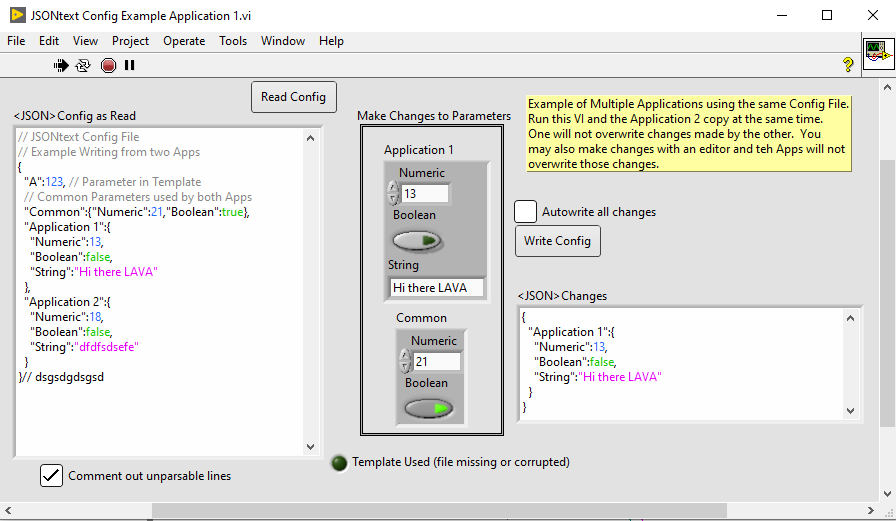

BTW, one other use case I've had is "Multiple applications read/write to a common config file", which requires careful thought about preventing one App from overwriting changes made by another App.

-

48 minutes ago, Antoine Chalons said:

I started thinking about variant attributes to handle them (text and position) but it quickly gets tricky so for now I've accepted to either only read or lose comments.

I think you just need an extra string for each item to hold end-of-line comments, plus a "no item" data type to allow full-line comments. See how the NI INI Library does it.
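A minimal Python sketch of that idea, assuming a hypothetical INI-like syntax with `key = value` lines and `;` comments. The names `Item`, `parse_line` and `format_item` are made up for illustration, and this is not how the NI INI Library is actually implemented:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    key: Optional[str] = None  # None marks a "no item" line (full-line comment or blank)
    value: str = ""
    comment: str = ""          # the extra string that holds the comment text


def parse_line(line: str) -> Item:
    # Simplistic: assumes ';' never appears inside a value
    body, sep, comment = line.partition(";")
    comment = comment.strip() if sep else ""
    if "=" not in body:
        return Item(comment=comment)
    key, _, value = body.partition("=")
    return Item(key.strip(), value.strip(), comment)


def format_item(item: Item) -> str:
    tail = f" ; {item.comment}" if item.comment else ""
    if item.key is None:
        return tail.strip()
    return f"{item.key} = {item.value}{tail}"
```

Because every line becomes an `Item`, writing the list back out in order reproduces both kinds of comment.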

-

There are actually multiple different use cases of config files, and multiple ways to implement those use cases.

My most common use case is "Computer writes config; Human reads and may modify; Computer reads config". The way I do this is mostly:

- Read config file into Application data structures.

- Later, convert Application data structures into config file.

Comments don't exist in the Application data structures, so comments get dropped.
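In Python terms, this first method might look like the sketch below. It uses the standard `json` module rather than JSONtext, and the `Settings` fields are hypothetical:

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Settings:  # hypothetical application data structure
    rate_hz: float = 10.0
    name: str = "pump A"


def read_config(text: str) -> Settings:
    # Config file -> application data; nothing else is retained
    return Settings(**json.loads(text))


def write_config(settings: Settings) -> str:
    # Application data -> config file; regenerated from scratch
    return json.dumps(asdict(settings), indent=2)
```

Since the file is regenerated purely from `Settings`, anything not represented there, comments included, cannot survive a round trip.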

Another way (used by the NI/OpenG INI libraries and, I'm guessing, your TOML stuff) is:

- Read config file into intermediate structure

- Query structure to set Application data structures

- Update intermediate structure with changed values from Application data

- Convert intermediate structure back to a config file

Here, the intermediate structure can remember the comments in the initial file and thus preserve them. But, IMO, this is also less clean and more complex than the first method, as you have an additional thing to carry around, and the potential to forget to do that "update" part.
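The four steps of this second method can be sketched in Python, assuming a hypothetical INI-like format with `key = value` lines and `;` comments. The function names are illustrative, not the NI/OpenG API:

```python
def load(text):
    """Intermediate structure: every line kept verbatim, plus a key -> line index map."""
    lines = text.splitlines()
    index = {}
    for i, line in enumerate(lines):
        body = line.split(";", 1)[0]
        if "=" in body:
            index[body.split("=", 1)[0].strip()] = i
    return lines, index


def get(doc, key):
    """Query the structure to set application data."""
    lines, index = doc
    body = lines[index[key]].split(";", 1)[0]
    return body.split("=", 1)[1].strip()


def set_value(doc, key, value):
    """Update the structure with a changed value, keeping the line's comment."""
    lines, index = doc
    body, sep, comment = lines[index[key]].partition(";")
    name = body.split("=", 1)[0].strip()
    tail = f" ;{comment}" if sep else ""
    lines[index[key]] = f"{name} = {value}{tail}"


def dump(doc):
    """Convert the structure back to config-file text."""
    return "\n".join(doc[0])
```

The `doc` tuple is the "additional thing to carry around": if the application changes a value but never calls `set_value`, the written file silently keeps the old one.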

Currently, with the latest version of JSONtext (now available!), I haven't really developed this second, comment-preserving method. I am thinking more of another, less common but important, use case: "Human writes (possibly from a template); Computer reads", where the computer never writes (this is @bjustice's use case, I think). Here, the human writes comments (or the Template can be extensively commented).

But I do intend to support keeping comments. It will probably involve applying changes from the new JSON into the original config-file JSON, preserving comments (and other formatting). But that is for the future.

Note added later: the config-file JSON with Comments is now available in JSONtext 1.6.5. See https://forums.ni.com/t5/JDP-Science-Tools/BETA-version-of-JSONtext-1-6/m-p/4146235#M39

-

23 minutes ago, bjustice said:

Those cyan VIs are from the JDP utility VIPM package

Note: that package also has RFC3339-compliant Datetime format VIs, if you haven't already done Timestamps.
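For readers unfamiliar with the format, here is a small Python sketch of RFC 3339 timestamps using only the standard library. The helper names are hypothetical and unrelated to the VIs in that package:

```python
from datetime import datetime, timezone


def to_rfc3339(dt: datetime) -> str:
    # RFC 3339 needs an explicit UTC offset, so naive datetimes are rejected
    if dt.tzinfo is None:
        raise ValueError("timestamp needs a timezone")
    text = dt.astimezone(timezone.utc).isoformat(timespec="milliseconds")
    return text.replace("+00:00", "Z")


def from_rfc3339(text: str) -> datetime:
    # datetime.fromisoformat() before Python 3.11 does not accept a trailing "Z"
    return datetime.fromisoformat(text.replace("Z", "+00:00"))
```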

-

1 hour ago, Francois Normandin said:

The SMO Process has a "passive watchdog" that monitors a single-element queue and gracefully exits if the asynch process completes normally... but it will stop the whole asynch thread if the queue reference goes idle.

Messenger Library uses the same watchdog mechanism, although I just trigger normal shutdown via an "Autoshutdown" message; I don't call STOP. I would have thought 500 ms is too short a time to wait before such a harsh method.

-

A "queue" is a first-in-first-out mechanism. Don't be confused by specific implementations; the LabVIEW Event system is just as much a queue** as the LabVIEW "Queue".

**Specifically, the "event registration refnum" is an event queue.

-

As thols says, the best practice is to NOT share things between loops, but if you do, I'd suggest a DVR.

-

9 hours ago, X___ said:

Have you talked with NI about that?

Like most problems, once I had a workaround, I no longer spent any time thinking about it.

-

- Popular Post

I've encountered a black IMAQ image display in EXEs, solved by unchecking the box to allow running in a later runtime version. Don't know if that is related to your problem.

-

There are quite a lot of other message-passing frameworks that you might want to have a look at. DQMH, (my own) Messenger Library, Workers and Aloha are on the Tools Network, for example. AF and the QMH template are not the only things out there.

-

2 hours ago, Filipe Barbosa said:

I have been getting error d91 (Hex 0x5B) The data type of the variant is not compatible with the data type wired to the type input on the From JSON.vi.

https://bitbucket.org/drjdpowell/jsontext/issues/80/2d-array-of-variants-not-converting

Will be fixed in 1.5.2

-

Can you attach the VI you show? Never mind, I've reproduced it.

-

Your attribute Values need to be valid JSON. You are inputting just strings. Convert your strings to JSON first.
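The distinction is easy to see in Python's standard `json` module (used here only as an illustration; JSONtext has its own VIs for this). The helper `is_valid_json` is made up for the example:

```python
import json

raw = "hello"              # a bare string is not valid JSON
encoded = json.dumps(raw)  # '"hello"': quoted and escaped, so it is valid JSON


def is_valid_json(text):
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False
```

Note that some bare strings, such as `123` or `true`, happen to parse as JSON, but as a number or boolean rather than as a string, which is another reason to convert explicitly.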

-

Do you check for an error coming out of SQLite Close? SQLite will not close and throw an error if unfinalized Statements exist on that connection. The unclosed connection would then continue to exist till your app is closed.

-

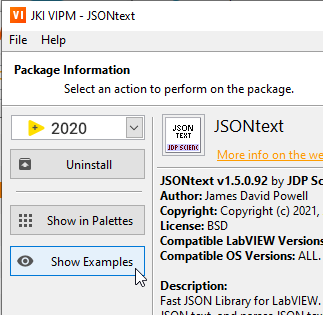

Examples are in <LabVIEW>\examples\JDP Science\JSONtext, accessible through VIPM:

The JSONpath example might help.

-

Have you looked at the examples?

-

- Popular Post

On 3/15/2021 at 2:39 PM, drjdpowell said:

I have been coming round to supporting comments in JSONtext, at least for ignoring comments on reading (which is quite simple to implement, I think). And possibly features to be more forgiving of common User error, such as missing commas.

I've just implemented this and posted a beta: https://forums.ni.com/t5/JDP-Science-Tools/BETA-version-of-JSONtext-1-5-0/m-p/4136116

Handles comments like this:

    // Supports Comments
    {
      "a":1  // like this
      "b":2  /*or this style of multiline comment*/
      "c": 3 /*oh, and notice I'm forgetting some commas
               A new line will serve as a comma, similar to HJSON*/
      "d":4, // except I've foolishly left a trailing one at the end
    }

-

- Popular Post

I have been coming round to supporting comments in JSONtext, at least for ignoring comments on reading (which is quite simple to implement, I think). And possibly features to be more forgiving of common User error, such as missing commas.

-

8 hours ago, ShaunR said:

Just be aware

Can't tell if that is just NI being overcautious, or if there is an actual reason for that. Python 2 was sunsetted at the start of this year, so no one should use that. I'm guessing NI's testing was done against 3.6, as the latest available version when they originally developed the Python node.

Using JSONText to parse a poorly formatted JSON string

in LabVIEW General

Posted · Edited by drjdpowell

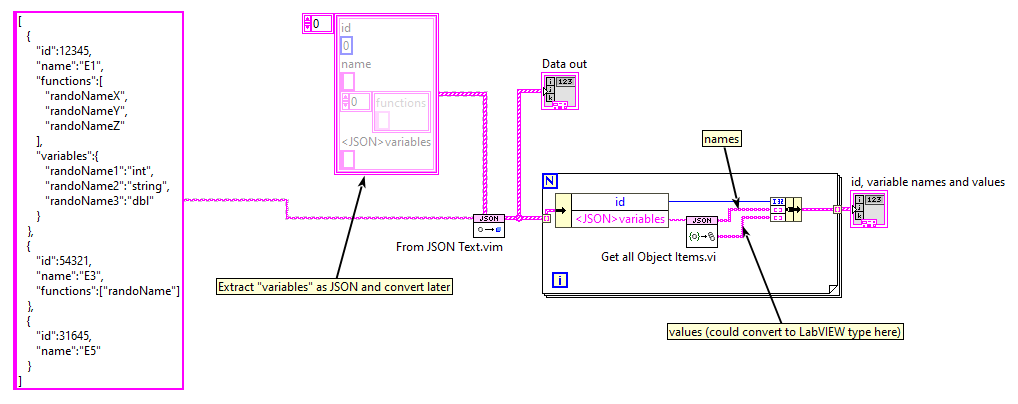

Just a comment, but I have noticed that people very often deal with JSON by looking for the "monster cluster" that completely converts the JSON into a monolithic LabVIEW structure. I suggest people think a bit more modularly in terms of "subJSON". The first question I would ask you if I were working with you on your actual project is why do you need (at this code level, at least) to convert your variables from their perfectly reasonable JSON format to an array of clusters?
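To make the "subJSON" idea concrete, here is a Python sketch (standard `json` module, hypothetical document and module names): the top level only splits the document into per-module JSON strings, and each module parses just its own piece.

```python
import json


def split_top_level(text):
    """Return each top-level member re-serialised as its own JSON string ("subJSON")."""
    return {key: json.dumps(value) for key, value in json.loads(text).items()}


def configure_pump(sub_json):
    # Only this module knows (or cares about) the shape of its own section
    cfg = json.loads(sub_json)
    return cfg["rate_hz"]


# Hypothetical document, for illustration only
doc = '{"pump": {"rate_hz": 10}, "logger": {"path": "run.log", "extra": [1, 2]}}'
parts = split_top_level(doc)
```

Here `configure_pump(parts["pump"])` never sees the logger section, so changes to one module's JSON do not ripple through a shared "monster" type.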