Everything posted by drjdpowell

-

One could make two subVIs, "Open FP after delay" that outputs the notifier, and "Close FP" that inputs the notifier (as well as the error out of the rest of the code). Then it would be easy to add to any VI and be quite clear. Internals of the two VIs would need to be more complex, with an async call of a third subVI with the actual wait on notification, and some method of making sure "Open..." is finished before "Close..." actually closes the front panel. -- James [I'd try and write it if the graphics card on my work laptop hadn't up and died two days ago! "Seven working days to fix" ]
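Since a LabVIEW diagram can't be pasted as text, here is a rough Python sketch of the same idea. Everything in it is an assumption for illustration: a `threading.Event` stands in for the notifier, a worker thread stands in for the asynchronously called third subVI, and `open_panel` / `close_panel` are hypothetical callbacks.

```python
import threading


class PanelHandle:
    """Stands in for the notifier wired from 'Open FP after delay' to 'Close FP'."""

    def __init__(self):
        self.cancel = threading.Event()  # the "notifier"
        self.opened = False
        self.worker = None


def open_fp_after_delay(delay_s, open_panel):
    """Start a background wait; open the panel only if the delay expires first."""
    handle = PanelHandle()

    def waiter():
        # wait() returns False on timeout, i.e. the main code is still running.
        if not handle.cancel.wait(timeout=delay_s):
            open_panel()
            handle.opened = True

    handle.worker = threading.Thread(target=waiter, daemon=True)
    handle.worker.start()
    return handle


def close_fp(handle, close_panel):
    """Cancel the pending open, or close the panel if it was already opened."""
    handle.cancel.set()
    handle.worker.join()  # make sure "Open..." has finished before closing
    if handle.opened:
        close_panel()
```

The `join()` is the "some method of making sure Open is finished" part: the close side never acts while the open side is halfway through.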

-

Variant vs Native Datatypes

drjdpowell replied to SteveChandler's topic in Object-Oriented Programming

I don't need it that complex, but I was looking into using one of the Open Source (i.e., free) middleware messaging systems such as RabbitMQ or ZeroMQ. Why reinvent the wheel? Unfortunately, there are no LabVIEW wrappers and I don't have the skills to create them (anyone have any experience with using these from LabVIEW?). Instead, I'm thinking of just doing a simple Messenger using the TCP primitives. -- James -

Variant vs Native Datatypes

drjdpowell replied to SteveChandler's topic in Object-Oriented Programming

I agree! In fact, I started to immediately think of "Priority Queues" as an example of a queue extension that didn't seem that useful to me. The design should be such that messages are handled promptly. In fact, I've never seen much advantage in "Preview" or "Flushing" of the queue. That's one of the reasons that my own messaging system abstracts Queues, User Events and the like as "Messengers"; I'm happy to ignore the extra features of Queues over User Events. I was thinking about an eventual design of a "Network Messenger" when I wrote my previous post, and distributed computing where the network may have temporary disruptions. Or a computer may have to reboot or something. It was in that context that I was interested in what happens to queued messages building up. I certainly don't want to add extra bells and whistles to the raw queue primitives. I've been trying a different tactic of standardizing the dynamic call, with the launching of all VIs being identical and accomplished with the same "Launch" subVI. Every dynamic VI sits behind a "Messenger" and receives "Messages". Thus I have to only deal with the complexity once. I think I can easily add async dialog boxes to this design without ever having to revisit the details of dynamic launching. The inputs are "valid", they just forget to change them from the default, or change them and forget to change them back. -- James -

I have finally uploaded the code (still very unfinished) in my other thread. See the example "Test Active Object.vi". -- James

-

Self-addressed stamped envelopes

drjdpowell replied to drjdpowell's topic in Application Design & Architecture

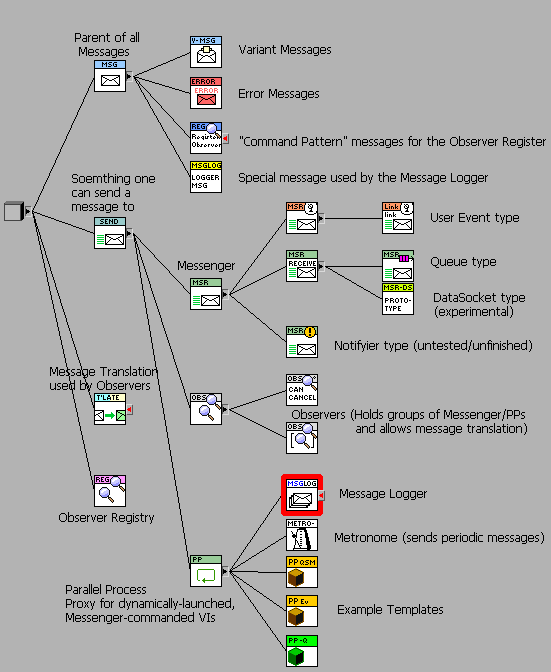

I've always been meaning to post this code library, once it is meaningfully "finished", but that may be a LONG time. So here is a very "beta", "lightly documented", and "less than entirely untested" version as of today. Test Messenger library.zip NOTE: newer version (2011) in a later post. Contains a "Messenging" library and a set of examples that are up-to-date versions of the ones I posted here and in my Parallel Process thread. Dependencies are the OpenG toolkit (Data Tools, mostly) and the JKI statemachine, both available with VI Package Manager. LabVIEW 8.6.1. Here is a Class Diagram with labels: There is a Palette Menu for the library, but if that doesn't work there is also a "Tree.vi" of the most useful VIs. Any comments appreciated, -- James -

Completely stumped by the behaviour of user event

drjdpowell replied to AlexA's topic in Application Design & Architecture

'Cause you hit the button again. Stopping the loop doesn't stop the event queue inherent to the event structure. It locks the front panel, as instructed, until the event is handled. That it will never be handled because the loop is stopped is immaterial. -

Completely stumped by the behaviour of user event

drjdpowell replied to AlexA's topic in Application Design & Architecture

First thing to do is put a Wait in the upper loop, which is currently running at max CPU and starving other processes. That might have strange effects. -

Basic OO question - Overrides & Call Parent Method

drjdpowell replied to MartinMcD's topic in Object-Oriented Programming

It serves as a starting point for adding functionality. For example, if your "Car" needs to always have its headlights on when the engine is on, you would override "Start Engine" and add turning on of the lights to it, while calling the parent method to actually start the engine. -
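In a text language the same pattern looks roughly like this. It is a minimal Python sketch in which `super().start_engine()` plays the role of the Call Parent Method node; the attribute names are made up for illustration.

```python
class Vehicle:
    def __init__(self):
        self.engine_running = False

    def start_engine(self):
        self.engine_running = True


class Car(Vehicle):
    def __init__(self):
        super().__init__()
        self.headlights_on = False

    def start_engine(self):
        # "Call Parent Method": the parent still does the actual starting.
        super().start_engine()
        # The override only adds the extra behaviour.
        self.headlights_on = True
```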

Basic OO question - Overrides & Call Parent Method

drjdpowell replied to MartinMcD's topic in Object-Oriented Programming

To extend the analogy let's replace your numerics with something vehicles have. A vehicle has an engine, and a method to "Start Engine". You've created a "car", which being a vehicle has an engine that can be started, and you've given it a second engine (!) and were surprised when the "Start Engine" method started the first engine. OK, so, why did you add a second engine? -- James BTW. The "parent/child" terminology of OO is actually confusing; "Car" is not a child of "Vehicle", it is a type of vehicle. -
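A rough Python analogue of the "second engine", offered as a sketch only: Python's double-underscore attributes are used here to imitate the way a LabVIEW child class's private data is separate from its parent's, and the method names are hypothetical.

```python
class Vehicle:
    def __init__(self):
        self.__engine = "stopped"  # stored as _Vehicle__engine

    def start_engine(self):
        self.__engine = "running"  # always the Vehicle's engine

    def engine_state(self):
        return self.__engine


class Car(Vehicle):
    def __init__(self):
        super().__init__()
        self.__engine = "stopped"  # a second engine, stored as _Car__engine

    def car_engine_state(self):
        return self.__engine


car = Car()
car.start_engine()  # inherited method: starts the first engine only
```

After the call, `car.engine_state()` reports the inherited engine running while `car.car_engine_state()` still reports the duplicate as stopped, which is the surprise described above.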

Self-addressed stamped envelopes

drjdpowell replied to drjdpowell's topic in Application Design & Architecture

I believe so. It's "garbage collection", where LabVIEW frees up the resources of the VI. -

Self-addressed stamped envelopes

drjdpowell replied to drjdpowell's topic in Application Design & Architecture

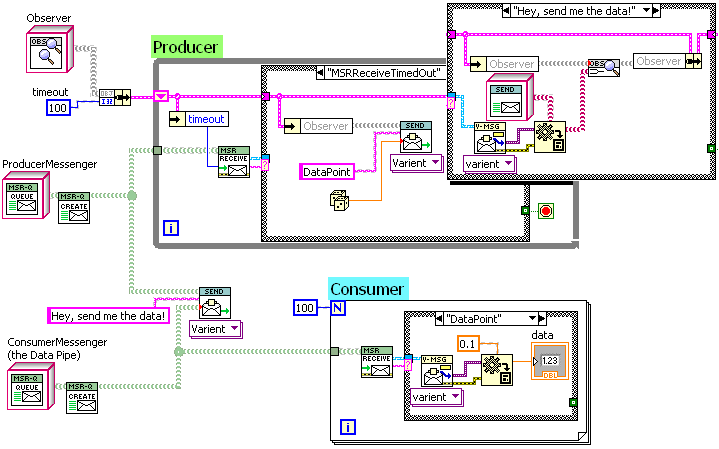

Alex, you can guess my advice: do away with the over-complexity of dynamic launching and the like. But ignoring that... Whatever way you do this, it is better to think of the consumer making the data pipeline and getting that reference to the producer, rather than the other way round. The consumer is dependent on the queue, the producer is not. If I were designing something like this with my messaging design shown above, the producer would send its data to an "ObserverSet", which by default is empty (data sent nowhere). The consumer would create a "messenger" (queue) and send it to the producer in a "Hey, send me the data!" message (alternatively, the higher-level part of the program that creates both consumer and producer would create the queue and the message). The producer would add the provided messenger to the ObserverSet, allowing data piping to commence. In the below example, the consumer code registers its own queue with the producer and is piped 100 data points. The Actor Framework is rather advanced. You might want to look at "LapDog" though, as it is a much simpler application of LVOOP that one can "get" more easily (I am surprised AQ thinks the Actor Framework is simpler). -- James -
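For readers who think in text code, the registration flow can be sketched in Python. This is an illustration under assumptions, not the actual library: `queue.Queue` stands in for a messenger, and the class layout and the `"send_me_the_data"` message name are invented for the example.

```python
import queue


class ObserverSet:
    """Holds the messengers that want the data; empty by default."""

    def __init__(self):
        self._messengers = []

    def add(self, messenger):
        self._messengers.append(messenger)

    def send(self, message):
        for messenger in self._messengers:
            messenger.put(message)


class Producer:
    def __init__(self):
        self.observers = ObserverSet()

    def handle(self, message):
        kind, payload = message
        if kind == "send_me_the_data":
            # The payload is the consumer's own messenger.
            self.observers.add(payload)

    def produce(self, count):
        for i in range(count):
            self.observers.send(("data", i))


producer = Producer()
producer.produce(3)  # nobody registered yet: the data goes nowhere

inbox = queue.Queue()  # the consumer creates the pipeline...
producer.handle(("send_me_the_data", inbox))  # ...and hands it to the producer
producer.produce(100)  # the consumer is now piped 100 data points
```

The producer never creates or owns a queue, so it runs unchanged with zero, one, or many consumers.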

Hi Ravi, Darren's VI seems to be missing from the R4 zip file. -- James

-

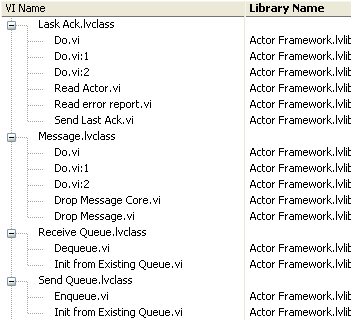

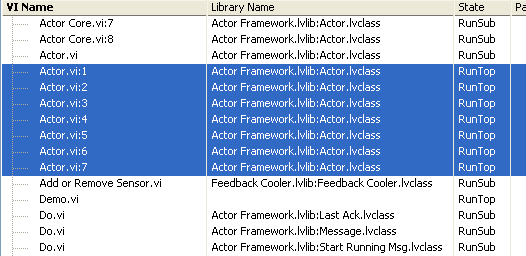

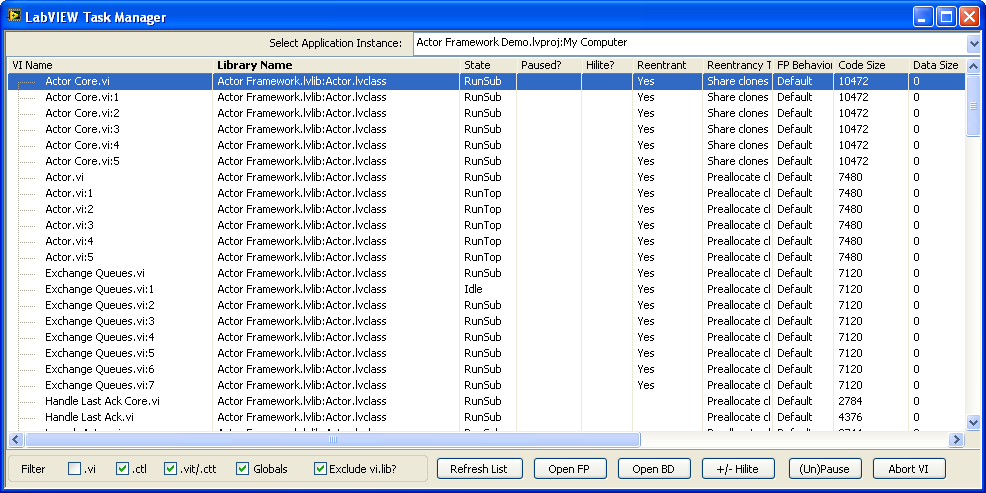

You might want to instead have a numeric control of the clone number to search up to. Zero would mean disabled, the default could be 100 or so, and Users could increase the number if they were working with higher-numbered clones. BTW, an idea to improve the tool is to group VIs by class/library, like this: Not sure if that's easy to do, but it would greatly improve readability for LVOOP projects that tend to have large numbers of identically named VIs (as well as not particularly descriptive names such as "Do.vi"). -- James

-

Ravi, here, if you would like to use it, is a "Find Clones" VI that searches for clones up to some number. It can be inserted into the list of VIs after filtering. Find Clones.vi

-

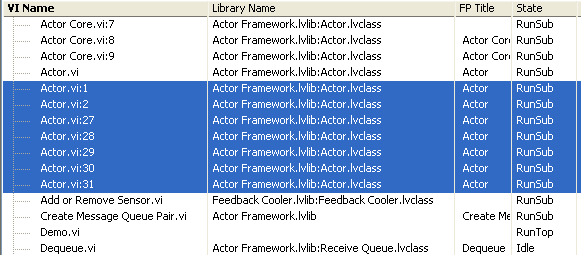

I downloaded the 2011 Actor Framework example (that uses Async Call by Ref) and it keeps increasing the numbers (even though the clones leave memory between runs) but sequentially. Rather strangely though, the numbers 1 and 2 get reused. Curiouser and curiouser... For the older software, with the "Run VI" method, the numbers don't increase.

-

Not necessarily. If the original clones have left memory when the new ones are created, the numbering seems to start at the lowest available number. It's only when clones stay in memory for some reason (Front Panel still open, for example) that the numbers start marching forward. I was having the issue of Actor.vi clones staying in memory until I saved everything in LV2011 (you're right, it is an older version of your framework that I am running) after which all the clone numbers start from 1 regardless of how many times I restart the application. I don't fully understand the issue, and unfortunately I don't fully understand why LabVIEW sometimes keeps clones in memory.

-

I don't know your Actor Framework very well, but when playing around with it using the Task Manager I found that the dynamically launched "Actor.vi" clones showed up as "RunTop" and I was able to Abort them from the task manager. Running "Pretty UI" with 2 sensors added.

-

Here's a quick hack where I test for clones, looking at AQ's Active Object Demo: Searching for clones up to xxxxx:100 doesn't take much time (up to xxxxx:10000 had a delay of about 5 seconds for this project). One could decrease the likelihood of missing clones while still being fast(ish) by a search procedure such as: search up to 100; if that finds clones, also search 101 to 1,000; if that finds more clones, search 1,001 to 10,000. -- James
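The staged search can be written down as a short Python sketch. The `clone_exists` predicate is hypothetical (in LabVIEW it would be something like trying to open a reference to the clone by name), and the `name:number` naming simply follows the "xxxxx:100" form used above.

```python
def find_clones(vi_name, clone_exists, stages=(100, 1000, 10000)):
    """Return clone numbers found, widening the search only while clones keep turning up."""
    found = []
    start = 1
    for limit in stages:
        hits = [n for n in range(start, limit + 1)
                if clone_exists(f"{vi_name}:{n}")]
        found.extend(hits)
        if not hits:
            break  # nothing in this range, so skip the more expensive ranges
        start = limit + 1
    return found
```

The trade-off is the one already noted: a project whose only clones are numbered above 100 would be missed, in exchange for the common case costing just 100 checks.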

-

How fast can they be checked? Because in my experience, they start at 1 and count up, so in the majority of cases trying every number up to, say, 100 will find them all (I think I got the count up to 50-odd a couple of times). If you create your message queue in the calling VI and pass it to the dynamic callee, then when the caller goes idle it invalidates the queue, which can be used to trigger shutdown of the callee. No good if you need the callee to be fully independent of the caller, but most of my dynamic VI's are "owned" by the process that starts them. -- James

-

Simultaneous breakpoint custom probes?

drjdpowell replied to Aristos Queue's topic in Development Environment (IDE)

The only alternate idea I can think of is to add the debug feature to whatever framework you are using to either dynamically launch the VIs, or to pass messages. The launching subVI could keep a list of references to the VIs that it launches (for access by the debug tool). Or if there is a common "enqueue" or "send message" subVI, that could be commanded by the debug tool to pause its own caller. But surely there must be a way to get a reference to all running VIs, including clones? -- James -

Variant vs Native Datatypes

drjdpowell replied to SteveChandler's topic in Object-Oriented Programming

Darn "delete" browser button ate my first post! My rewrite will be entirely different in focus because my initial post wasn't that interesting anyway. Ah yes, the synchronous call to the unpredictable "User" process, I have been bitten by that before. I've written dialog boxes that beep loudly or that auto-cancel after a short while. I've toyed with the idea of making an asynchronous dialog box that works with command-pattern-style messages (since what is a dialog box but a way of sending a message and getting a response) but haven't put the effort in. Your multi-loop design is certainly robust against such issues, but how do you handle information that the UI Input loop might need? What if you have a dialog box that needs to be configured using your "DeltaZ" value, for example? My Users are always asking for dialog boxes that redisplay all the configuration controls for them so that they can realize what they forgot to set. I don't see how your UI input loop could implement such a dialog. Just as an aside, has anyone heard of a design for a message-passing system where messages are flagged by what should be done if they are undeliverable for a significant time? Most of my messages convey current state information, and are rendered obsolete in a few seconds when the next message of that type arrives. Having the queue fill up with obsolete messages and crash the program seems silly. The ideal queueing system would know this and only save the last version for most messages. One could use a size-limited lossy queue, but unfortunately some messages are of the type that must be delivered. -- James -
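To make the "only save the last version" idea concrete, here is one possible shape for such a buffer, as a Python sketch. It is not an existing library; the class name, the `must_deliver` flag, and the message names in the usage are all assumptions.

```python
import itertools
from collections import OrderedDict


class CoalescingMailbox:
    """Buffers undelivered messages; state messages keep only their latest value."""

    def __init__(self):
        self._pending = OrderedDict()
        self._serial = itertools.count()

    def post(self, name, data, must_deliver=False):
        if must_deliver:
            # Every must-deliver message gets its own slot and is never dropped.
            key = ("once", next(self._serial))
        else:
            # A newer state message makes the pending one of the same name obsolete.
            key = ("state", name)
            self._pending.pop(key, None)
        self._pending[key] = (name, data)

    def drain(self):
        """Hand over everything pending, oldest first, once delivery is possible again."""
        messages = list(self._pending.values())
        self._pending.clear()
        return messages

    def __len__(self):
        return len(self._pending)
```

With this, a thousand undelivered "Temperature" updates occupy one slot, while each must-deliver message keeps its own, so memory grows only with the messages that really have to arrive.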

Simultaneous breakpoint custom probes?

drjdpowell replied to Aristos Queue's topic in Development Environment (IDE)

That was my first thought, but I don't think that list includes dynamically-launched clones (which AQ will be using, I think). At least, it didn't seem to work under LabVIEW 8.6 when I tried it briefly. -- James -

Variant vs Native Datatypes

drjdpowell replied to SteveChandler's topic in Object-Oriented Programming

Ah, I see, you have "internal to the UI component" messages in addition to messages such as "GoToCenterPosition" that are sent out of the component. Personally (illustrating alternate possible styles of programming that might use LapDog) I would probably try and write such a UI in a way that combines your top three loops in a single event-driven UI loop (using a User Event instead of a queue). This would eliminate "Inp:LoadBtnReleased" messages entirely. Your way is more flexible, I imagine, and allows full use of Queue functionality (so far, I'm happy with the limits of User Events). -- James BTW: is that a timed loop that reads a global variable? This is not your preferred architecture I would hazard to guess? -

Variant vs Native Datatypes

drjdpowell replied to SteveChandler's topic in Object-Oriented Programming

My development group of one is very good at standardizing. The advantage of having a "VarMessage" as a standard part of the library is that you could add it to the enqueue polymorphic VI (in analogy to how you currently allow data-less messages), simplifying the wiring for those who use Variant messages. One can easily extend the polymorphic VI oneself, but then one has a customized LapDog library which makes upgrading to a new LapDog version trickier. Command Messages are different, I think, because they are inherently non-reusable (unless I'm mistaken, one would have a different tree of command messages for each application). A VarMessage might also be an easier way in to LapDog messaging for those used to text-variant messages. My experience is limited to much smaller projects than yours, and they are scientific instruments where one does need direct control of many things. And "abstraction layers" seem less attractive if you're the only person on both sides of the layer. Also, I was more imagining a bottom-up approach, where the meaningful process variables are propagated up into the UI control labels. And one isn't constrained to do this; one has the flexibility to abstract things as needed. Currently, for example, I'm implementing control of a simple USB2000 spectrometer. I've written it as an active object that exposes process variables such as "IntegrationTime". In my simple test UI, I just dragged the process variables into the UI diagram, turned them to controls and wired them to send messages in the generic variants way I described in my examples. In the actual UI, which is a previously written program from a few years ago, the IntegrationTime message is sent programmatically based on other UI settings. Making a specific IntegrationTimeMessage class would have made writing the test UI much more work, without gaining me anything in the actual UI. BTW, you don't send "MyButtonClicked" messages, surely? Isn't that exactly the kind of UI-level concepts ("Button", "Clicked") you don't want propagating down into functional code? I certainly see the advantages of the "one message, one class" approach. I'm just arguing for variants as a better generic approach over "one simple data-type, one class". -- James -
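A loose Python analogy of the generic-variant style, for illustration only: it is not LapDog code, `Any` stands in for a LabVIEW variant, and the `Spectrometer` class and its "Averages" variable are made up ("IntegrationTime" is the only name taken from the post).

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class VarMessage:
    """One generic message type: a name plus a payload of any type (the 'variant')."""
    name: str
    data: Any = None


class Spectrometer:
    """Hypothetical active object exposing process variables by name."""

    def __init__(self):
        self.variables = {"IntegrationTime": 0.1, "Averages": 1}

    def handle(self, msg):
        if msg.name not in self.variables:
            raise KeyError(f"unknown process variable: {msg.name}")
        # Convert the variant payload back to the type of the existing variable.
        expected_type = type(self.variables[msg.name])
        self.variables[msg.name] = expected_type(msg.data)
```

A test UI can then send `VarMessage("IntegrationTime", 2)` straight from a control's label and value, with no per-variable message class to write first.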

Yes. Yes. Huh?!? What's this got to do with a default state? The last time I looked at your code, the QSM structure was set up such that it always enqueued another state. Normally, the QSM doesn't do this. When there is no further state in the queue, the dequeue just waits until a new command comes in (or it hits the defined timeout). Thus, these QSM designs sometimes have "timeout" states, but they never have "idle" states, nor is "default" used for anything other than typos. OK, should have read your whole post before starting to reply. So, yes, that's better. But as I've pointed out before, you're developing your QSM design in the middle of trying to get up to speed on dynamic launching AND debugging what I'm sure is a complex FPGA/imaging project. Doing all that at once is fraught with difficulty. -- James
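The usual QSM loop, written out as a Python sketch rather than a diagram. The state names "acquire" and "exit" are invented for the example, and `queue.Queue.get` with a timeout stands in for the Dequeue Element primitive.

```python
import queue


def run_qsm(commands, timeout_s):
    """Run until an "exit" state arrives; return the list of states handled."""
    handled = []
    while True:
        try:
            # Blocks until a command arrives: no "idle" state, no re-enqueueing.
            state = commands.get(timeout=timeout_s)
        except queue.Empty:
            state = "timeout"

        if state == "acquire":
            handled.append("acquire")
        elif state == "timeout":
            handled.append("timeout")  # optional periodic housekeeping
        elif state == "exit":
            handled.append("exit")
            return handled
        else:
            # The default case exists only to catch misspelled state names.
            raise ValueError(f"unknown state: {state!r}")
```

Nothing in the loop enqueues a next state by itself; when the queue is empty the loop just sits in `get` until something arrives or the timeout fires.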