JoeQ

Members

Posts: 70

Days Won: 1

JoeQ last won the day on January 29 2016

Profile Information

Gender: Not Telling

LabVIEW Information

Version: LabVIEW 2011

Since: 1986

JoeQ's Achievements

Newbie (1/14)

Reputation: 1

-

LabVIEW EXE Running on a $139 quad-core 8" Asus Vivotab tablet

JoeQ replied to smarlow's topic in LabVIEW General

How is the tablet holding up? I tried running LV on one. It can read the tablet's GPS sensor, but everything else comes from the USB. https://www.youtube.com/watch?v=6PpAJeAurr4

-

Making phase noise measurements the old way using LabVIEW 6. https://www.youtube.com/watch?v=GP6B_ImnoII

-

Article that mentions the LabVIEW toolkit. http://evaluationengineering.com/articles/201208/the-uncertainty-about-jitter.php

-

LeCroy JTA2 vs Labview..... https://www.youtube.com/watch?v=Bg45FuoeHZk

-

Video showing a 1970s VNA being run with LabVIEW. This equipment has no CPU, so everything had to be calculated by hand. Having the PC crunch the numbers makes this old antique easy to use. LabVIEW starts about halfway into it....

-

My company seems "stuck" on 2011... benefits to upgrading?

JoeQ replied to Autodefenestrator's topic in LabVIEW General

Actually I agree with you. There is no way I could do in 6.1i what I can in 2014. I typically only use LabVIEW to automate some sort of test, maybe collect and display some data. In a few rare cases I have used it in place of writing a full-on app. It's been a great tool for engineering work. I can get a lot done in a short time. I do not typically reuse my code. Most of it is fairly simple and the tests are unique enough that there is little to gain.

Any features they add that attempt to "help me" draw go to waste on me. I know where I want things and how I want them wired. If they want to help me in this area, make it fast and stable. I would take 10 bug fixes of my choice over an autogrow feature! No one will say LabVIEW has not improved over the years, but some areas are really lackluster for the time it has been on the market.

You mention the UI. I was amazed when I found out that the simple edit commands that Windows supports were not supported in the tables, list boxes, or any other function I looked at. For me, I just use the basics when it comes to the UI. I'm sure you have seen some of my panels. 3D graphs are about as fancy as I get. Any time I have tried to get too fancy, all I do is waste a lot of time. If I had one UI feature that I could add, it would be to solve the font problem once and for all! I don't care how. Make your own custom LabVIEW fonts for all I care. Just make it a standard.

As impressed as I am with how advanced the majority of the libraries have become, I am still amazed at just how limited the Ethernet support is and how much I have to make direct calls to Winsock. If I were stuck with 2011 I would have been fine with it, except that the free jitter toolkit is just so slick.

-

My company seems "stuck" on 2011... benefits to upgrading?

JoeQ replied to Autodefenestrator's topic in LabVIEW General

I must not be the average LabVIEW user. I would guess there are fewer than 10 features that I use that were added from 6.1i to 2014! Believe me, I cringe every time we decide to upgrade because I know some library will be broken or they will have dropped support for some NI hardware I use. Problems can be something as simple as the serial ports no longer working (and they still don't, because we all know how hard it is to use Windows to talk to a serial port) or something like the cPCI bus no longer working (after MANY hours on my part and narrowing it to a single file, they were able to find that problem). It takes me a fair amount of time to qualify a new version when it comes out. I will say that at least moving from 2011 to 2014 did not impede my efforts other than the time to install it.

That said, if you read my previous posts you may have the idea that I can't see any value in using LabVIEW. This is certainly not the case at all. It has saved me countless hours over the years. It has also caused me to have a few more gray hairs. Add to the list: they include the Jitter Analysis Toolkit for free (and it works)!

-

https://www.youtube.com/watch?v=4rJcEVj8OYo&feature=youtu.be Video showing some of the features of the Jitter Analysis Toolkit.

-

If I understand the data you show, 2011 is still working even with 2014 installed. Based on this, I do not believe a clean PC is going to change anything. What does the profile tool show?

-

I was guessing it would be the low-level interface, but it sounds like this is not the case. It is likely something in how you handle the CAN communications on your side. If I understand your graphs, you are at 20% with the CAN and at 2% without. CAN is a VERY slow bus: 500 kbit/s, maybe 1 Mbit/s? To see 20%, I suspect you have the code structured so that it stalls while waiting for CAN data.
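If the 20% comes from a loop that keeps polling for CAN data without yielding, one alternative is to block on the receive buffer with a timeout. Below is a rough sketch in Python (LabVIEW being graphical); `consume_frames`, the `None` sentinel, and the use of `queue.Queue` as a stand-in for the CAN driver's receive buffer are all assumptions for illustration, not anything from the actual project.

```python
import queue


def consume_frames(rx_queue, handle, timeout_s=0.1):
    """Process frames until a None sentinel arrives; return how many were handled.

    rx_queue is a hypothetical stand-in for the CAN receive buffer.
    """
    handled = 0
    while True:
        try:
            # Blocking get: the thread sleeps while the bus is idle
            # instead of spinning in a tight polling loop.
            frame = rx_queue.get(timeout=timeout_s)
        except queue.Empty:
            continue  # nothing arrived within the timeout; wait again
        if frame is None:  # hypothetical shutdown sentinel
            return handled
        handle(frame)
        handled += 1
```

The timeout only bounds how long a single wait lasts; between frames the loop costs close to nothing, which is what you would expect for a bus this slow.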

-

Not too surprised. Again, are 2011 and 2014 installed on the same PC for both tests?

-

I am curious: when you ran the test, did you have just 2011 installed for the first part and then install 2014 to run the second part? Or was 2014 already installed when you ran both tests? The reason I ask is that I wonder if this was something in the way they talk with your specific hardware. If they can mess up something as simple as an RS232 port, it's not impossible that they messed up your CAN interface. It will be interesting to hear what you find was the cause.

-

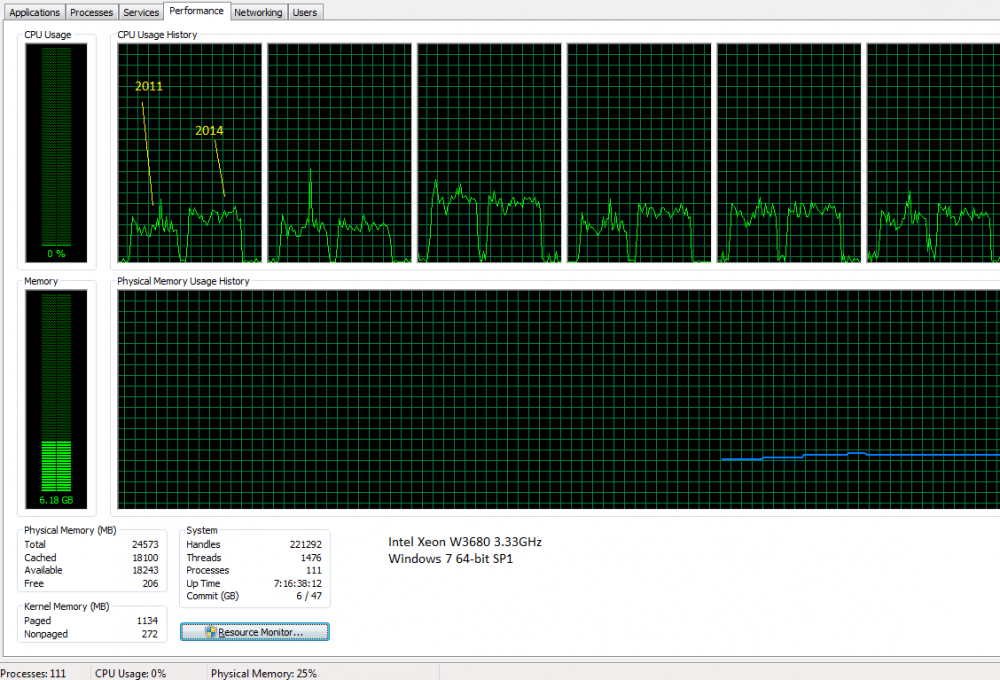

I loaded the project I last worked on into 2011 and then 2014 while recording the CPU usage. Both use about 25% of the processing power. I really have not seen anything slow down or take more processing power. I wonder what you are doing that you see this sort of change.

-

Wasted day at the track thanks to LV 2011, One more bug...

JoeQ replied to JoeQ's topic in LabVIEW General

Playing with an open-source C code generator to build the model for my simulator.

-

Real-time acquisition and plotting of large data

JoeQ replied to wohltemperiert's topic in LabVIEW General

Sub 1 Mbit then. This should be no problem for storage. If the boards are able to put out data in, say, a raw 24-bit mode, it would be better to store it in that format and post-process it to double. For your speeds, it doesn't matter.

Typically I don't care about the GUI when I am collecting data. Maybe I just need some sort of sanity check. So I may take a segment of data, say 1000 samples, and do a min/max on it, then send just those two data points to the GUI. I don't like to throw out data or average, as it is just too misleading when looking at the data. Again, think about how peak detect on a DSO works. I may, for example, change the sample size for the min/max as the user zooms in. This min/max data path is normally separate from the rest of the data collection. If the GUI stalls for half a second it may not be a problem, but missing collected data is normally not something I can have.

For your rates, you should be able to do most anything and get away with it. The system I mentioned previously has about a 500X higher sustained data rate and can't drop data. It's a little tight but workable without any special hardware. I still use that old Microsoft CPU Stress program to stress my LabVIEW apps. Launch a few instances of it and you can get a good idea of how your design is going to hold up.
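The min/max reduction described above could look roughly like this. It is a sketch in Python rather than LabVIEW, and `min_max_decimate` and `segment_size` are names made up for the example.

```python
from typing import List, Sequence, Tuple


def min_max_decimate(samples: Sequence[float],
                     segment_size: int) -> List[Tuple[float, float]]:
    """Reduce samples to one (min, max) pair per segment for display only.

    The full-rate data is assumed to be stored elsewhere, untouched.
    """
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    pairs = []
    for start in range(0, len(samples), segment_size):
        segment = samples[start:start + segment_size]  # last one may be short
        pairs.append((min(segment), max(segment)))
    return pairs
```

With a segment of 1000 samples the GUI gets two points per 1000 collected, and a single-sample spike still shows up in the max, where an average would flatten it. Shrinking `segment_size` as the user zooms in gives the finer view.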