Posts posted by Neville D

-

There seems to be a bug in the Modbus CRC calculation VI in the NI library. Replace it with the attached VI, which I got from Steve Brooks on the NI website.
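For anyone who wants to check a CRC VI against a known reference, the Modbus RTU CRC-16 is short enough to reproduce in text. The sketch below is plain Python, not the attached VI, and it says nothing about what the bug in the NI library actually is. It follows the standard algorithm (initial value 0xFFFF, reflected polynomial 0xA001, CRC appended low byte first); the function names are my own.

```python
def crc16_modbus(data: bytes) -> int:
    """Return the Modbus RTU CRC-16 of data as an integer."""
    crc = 0xFFFF                      # standard Modbus initial value
    for byte in data:
        crc ^= byte                   # fold the next byte into the low half
        for _ in range(8):            # process one bit at a time, LSB first
            if crc & 0x0001:
                crc = (crc >> 1) ^ 0xA001   # reflected form of polynomial 0x8005
            else:
                crc >>= 1
    return crc


def append_crc(frame: bytes) -> bytes:
    """Append the CRC to a frame, low byte first as Modbus RTU requires."""
    crc = crc16_modbus(frame)
    return frame + bytes([crc & 0xFF, crc >> 8])
```

The usual check string `b"123456789"` should give 0x4B37. If a CRC VI returns something else for those nine bytes, it is worth a closer look.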

-

Maybe you can borrow an equivalent one from your local NI rep. If I remember correctly, that is a pretty old card that has been replaced by a newer version with more bits, but the function calls etc. should be the same.

-

Hi all,

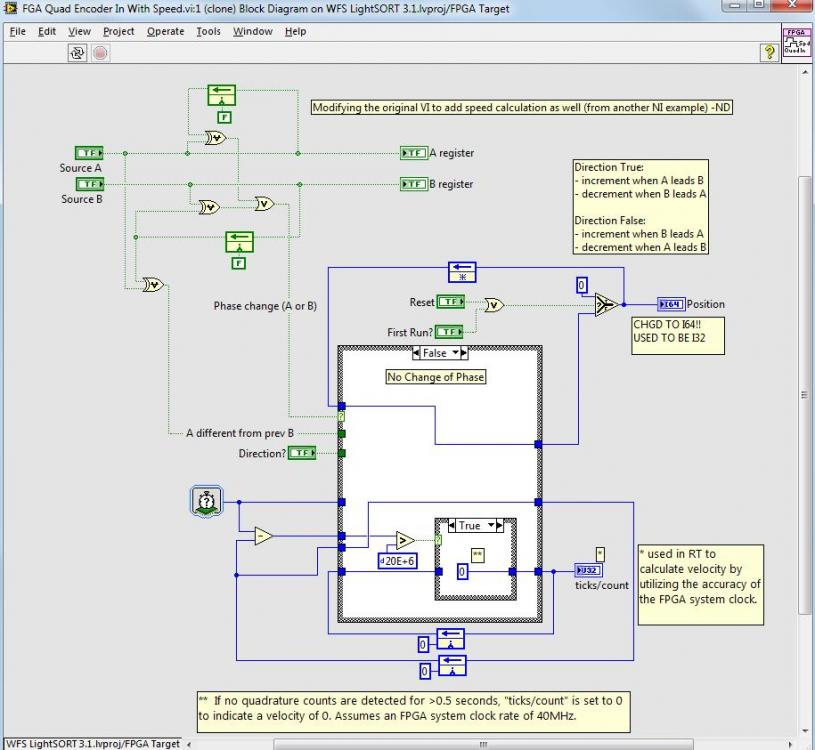

I am working on my first reconfigurable I/O project using a PXI 7811R with LabVIEW RT. I am building a quadrature encoder counter from a couple of the NI examples, and was wondering if there are any caveats to changing it to use an I64 as the counter output. The NI example uses a 32-bit integer for the count.

In my version, I changed the count output to an I64 and added a speed output (counting ticks between pulses) as well.

It seems to work fine, but I would appreciate any cautions that experienced FPGA users might have.

Neville.

PS. Cross posted to info-LabVIEW as well.
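Since the FPGA diagram can't be pasted as text, here is a rough Python model of the logic described above: a 4x quadrature decoder with a signed 64-bit count and a "ticks between edges" output for speed. It is only a sketch of the general technique, not the NI example or the actual FPGA code. The class and function names are made up, and the direction convention (B leading A counts up) is an assumption.

```python
# Count change for each (previous_state << 2) | current_state,
# where state = (A << 1) | B. Invalid double-bit jumps count as 0.
_DELTA = [0, +1, -1, 0, -1, 0, 0, +1, +1, 0, 0, -1, 0, -1, +1, 0]


def wrap_i64(value):
    """Wrap an unbounded Python int into the signed 64-bit range."""
    value &= 0xFFFFFFFFFFFFFFFF
    return value - (1 << 64) if value >= (1 << 63) else value


class QuadratureCounter:
    def __init__(self):
        self.count = 0               # position, behaves like an I64
        self.prev_state = 0
        self.ticks_since_edge = 0    # clock ticks since the last counted edge
        self.last_period_ticks = 0   # ticks between the last two counted edges

    def clock(self, a, b):
        """Call once per sample-clock tick with the current A and B levels."""
        state = (a << 1) | b
        delta = _DELTA[(self.prev_state << 2) | state]
        self.ticks_since_edge += 1
        if delta:
            self.count = wrap_i64(self.count + delta)
            self.last_period_ticks = self.ticks_since_edge
            self.ticks_since_edge = 0
        self.prev_state = state
        return self.count
```

Speed is inversely proportional to `last_period_ticks`, so the period counter needs its own handling for a stopped encoder, where the period grows without bound.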

-

How many shared variables are you using? I have around 70 or so communicating with a PXI LVRT target, with no problems. What kind of target do you have? Hosting large numbers of shared variables on smaller/older targets (cFP or FP) might be an issue.

I haven't investigated this but:

You could put password-protected indicators on a tab page and hide/display the entire tab control, or just that particular page, on the RT front panel. Again, I don't know if those tab properties will work on RT. They might.

-

Why don't you add the password functionality in the calling Host VI instead of adding it to the RT panel? When the user presses a button to open the panel, force them to enter a password before actually opening the panel.

-

Have you tried using continuous samples instead of finite samples?

-

Thanks Jon.

-

Hi,

Does anyone know the link to download the NIWeek 2011 presentations?

-

Have you tried a mass compile of the entire project?

-

Currently all my editor has to do is generate an error and the old NI editor comes up. To call the new NI editor you would need to change the code to dynamically call it and close down mine.

Hi guys,

I downloaded Mark's excellent Icon Editor a while back, and don't remember what slight changes I made, but in my case, clicking on the "NI Icon Editor" button calls the NEW NI Icon Editor. I really hate it, so I just press the ABORT button on Mark's Icon Editor, and that calls up the OLD NI Editor in the few cases where I need to modify an icon and keep the pictures previously set.

As an aside, Mark, it would be great if you could add a feature to edit the Icon History and delete some icons. The use case is this: say you build an icon and plonk the VI down on a block diagram, then find the icon is hard to read because of the colours chosen. I frequently go in, adjust (lighten) the colours a bit, and use that. But the icon history now has two versions of the same colour scheme, each slightly different, which can trip you up if you re-use the wrong one the next time around.

Neville.

-

Roo,

I would suggest going through the examples, now that you have tried the Express VI. You can also right-click the Express VI and open it up to see what the code is doing inside. The Express VI is just to get you started. In general, it's not a good idea to use it in an application, since it contains a lot of code that you may not need in your specific case, slowing down your application.

Try getting one camera up and running as you want it, then duplicate the loop for two cameras.

N.

-

Neville

Great post.

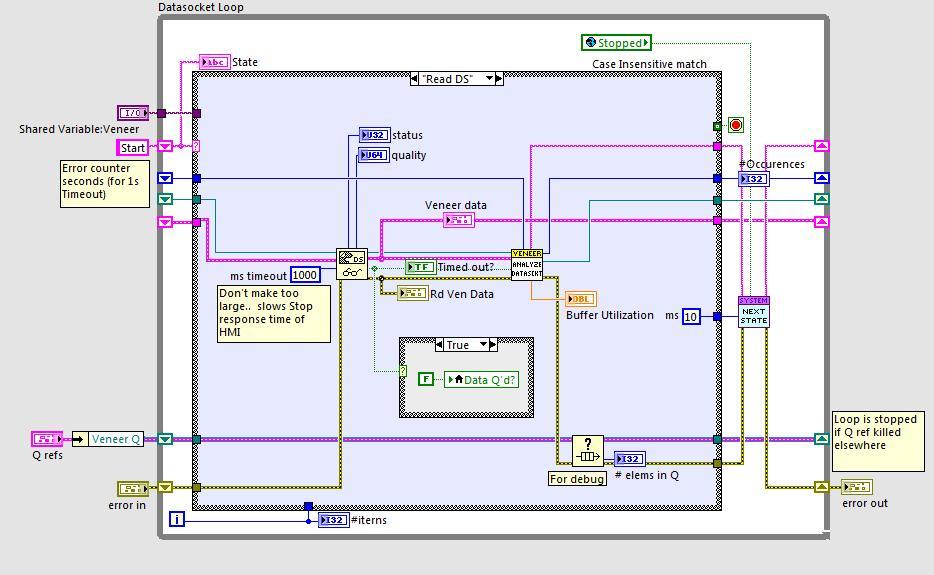

Is it possible for you to share what you are doing in "Analyze Datasocket" VI?

Paracha

Paracha,

That VI's operation is not relevant to the DataSocket communication illustrated. It's just some housekeeping on the data acquired.

N.

-

I have a similar issue with NSVs and RT.

If the RT application is executed from the development environment, everything works fine, but if the application is built into an rtexe and set as startup, the application is broken.

If the rtexe was built with debug enabled and I connect to it, the panel displays a broken arrow but no information about what caused the application to break.

I've tried the delay before accessing any shared variable, but it is still the same.

The only thing that seems to solve my issue is to replace all static NSVs with programmatic access. Lucky for me, I only have 5-10 static NSVs in the application.

/J

Hmm.. not sure what version you are using but I have used 8.6.1, 2009 and 2010 with static and dynamically called Shared variables, with no problems. I have about 80 of them and 3 or so are read dynamically in the PC Host. The SVE is hosted on the RT PXI target.

Neville.

-

Hi,

I tried launching the RT Execution Trace Toolkit and was asked to add the NI Service Locator to the exclusion list of my computer's firewall.

It appears that for LV 2010, the NI Service Locator is included within SystemWebserver.exe, located here: C:\Program Files\National Instruments\Shared\NI WebServer.

I added SystemWebserver.exe to the exclusion list of the firewall, but I still get the message asking me to add the NI Service Locator to the exclusion list when launching the Execution Trace Tool.

Any hint?

I'm using LabVIEW 2010 under Win7.

Regards,

Guillaume

I remember having the exact same problem, but I don't remember what I did to fix it.. have you tried just ignoring the warning and trying to work with it anyway? Maybe a reboot?

Neville.

-

That's one idea. However, I'm still starting and stopping tasks to accomplish this.

I'm using an old SCXI system for all analog measurements - thermocouples, 4-wire RTDs, current inputs. I average measured samples to increase accuracy, especially on the 4-wire RTDs. The RTDs happen to be connected to a mechanically multiplexed module. The NI manual suggests scanning multiple samples from one channel before switching to the next channel to avoid wearing out the mechanical multiplexer. The channel switching is actually audible. Imagine switching a mechanical multiplexer 100 times/second - it would wear out quickly.
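In text form, the scanning order the manual recommends looks roughly like the sketch below: take a whole burst from one channel, average it, and only then move on, so the relay switches once per channel rather than once per sample. This is plain Python with a hypothetical `read_sample(channel)` callable standing in for the real read. It is not DAQmx code and does not answer the question of how to do this without starting and stopping tasks.

```python
from statistics import mean


def scan_averaged(channels, read_sample, samples_per_channel=100):
    """Read a burst from each channel in turn and return {channel: average}."""
    results = {}
    for channel in channels:             # the multiplexer switches once per channel
        burst = [read_sample(channel) for _ in range(samples_per_channel)]
        results[channel] = mean(burst)   # averaging reduces noise on slow signals
    return results
```

With 8 channels and 100 samples each, that is 8 relay operations per scan instead of 800 for an interleaved scan.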

I've seen this recommended scanning method mentioned multiple times in NI documentation, but there doesn't seem to be any obvious and convenient way to implement it using channels configured in MAX without starting/stopping tasks. I'm just hoping I'm missing something.

Maybe this is further incentive to stop using MAX...

I'm still not clear as to what you are trying to accomplish/improve. Maybe a screenshot of the specific part of your code would help.

Reading all those temperature channels means your sample rate can be very slow without affecting accuracy. So what if you have to start and stop the task? You will not be running the loops at kHz speeds anyway, so the few ms without data while the task is being set up are not that important, considering your loop should be running around once a second or slower.

And why bother with MAX? Just configure the channels as you need in the code itself. You don't really need MAX except when troubleshooting initial wiring etc. You can also save the channel configuration generated in your code to MAX if you need to.

Split the "slow" and fast channels into two or more separate tasks, read them in their respective software-timed loops (either while loops or timed loops), write the acquired data to a queue to process in a separate loop, and you're done. Maybe add a stop button to stop and clean up when finished.
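The layout described above is the usual producer/consumer pattern. LabVIEW diagrams don't paste as text, so here is a minimal sketch in Python with threads and a queue. The loop periods, read counts and names are illustrative only, and the `put` call stands in for a real task read.

```python
import queue
import threading
import time


def acquire(name, period_s, n_reads, out_q):
    """Software-timed acquisition loop: one reading per period."""
    for i in range(n_reads):
        out_q.put((name, i))          # stand-in for a real task read
        time.sleep(period_s)
    out_q.put((name, None))           # sentinel: this producer has finished


def process(in_q, n_producers, results):
    """Consumer loop: drain the queue until every producer has finished."""
    finished = 0
    while finished < n_producers:
        name, value = in_q.get()
        if value is None:
            finished += 1
        else:
            results.append((name, value))


def run():
    q = queue.Queue()
    results = []
    producers = [
        threading.Thread(target=acquire, args=("fast", 0.001, 20, q)),
        threading.Thread(target=acquire, args=("slow", 0.01, 3, q)),
    ]
    consumer = threading.Thread(target=process, args=(q, len(producers), results))
    for t in producers + [consumer]:
        t.start()
    for t in producers + [consumer]:
        t.join()
    return results
```

Each acquisition loop runs at its own rate and never waits on the processing; the sentinel plays the role of the stop button and clean-up.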

N.

-

I've used network variables and network streams plenty, but network variables just don't seem reliable enough and network streams hang when the connection is lost.

In my experience, they are quite reliable; most bugs have been traced to code other developers have written, rather than any issue with the shared variables themselves. Couple of points:

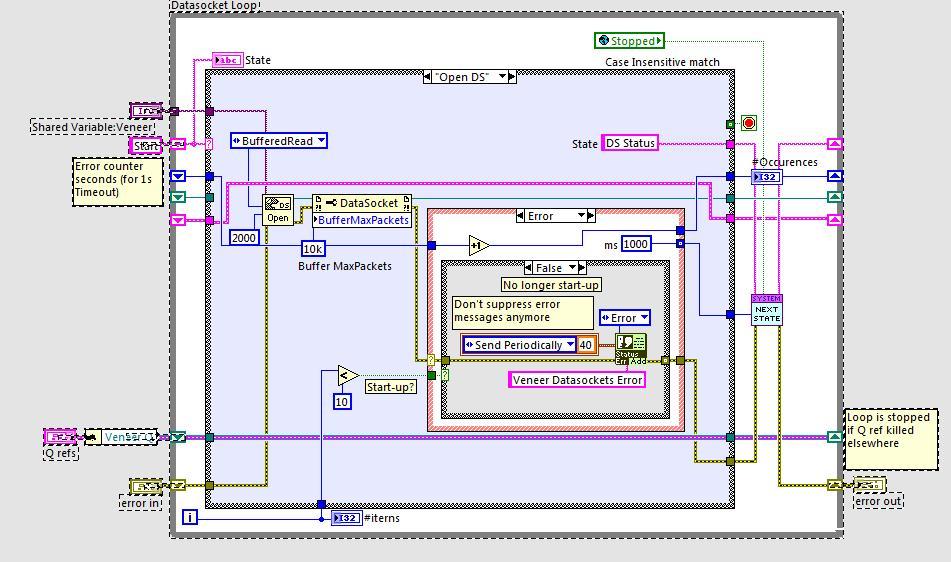

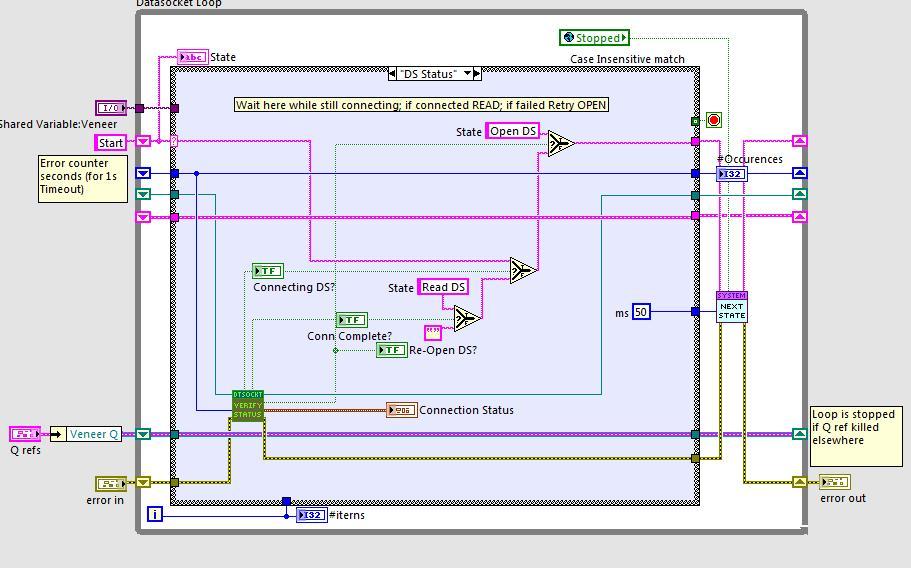

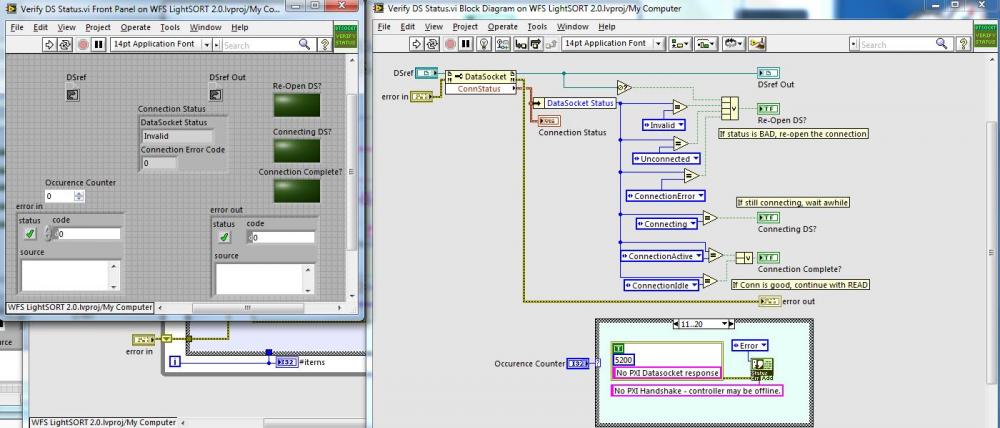

1 "Important" (regularly updated, critical) shared variables are read using the DataSocket API: I connect to the SV engine on the RT target and then monitor the status of my connection. If it goes down, I reconnect, kind of like a TCP loop. The values read are then queued. Before I implemented the reads this way, SVs would go down when the RT target was rebooted, and they wouldn't "reconnect" without restarting the HMI (PC) application.

2 Once the connection is re-established, the other static shared variables seem to connect without a problem. Runtime for built executables (between reboots) can be 8 or 9 days.

3 The shared variables are hosted on the RT target and number about 70 or so; some are complex clusters, others are SGLs, DBLs, and even an IMAQ image flattened to a string. Some values are buffered, others are not.
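The reconnect logic from point 1 can be sketched in text roughly as follows. This is generic Python, not the DataSocket API: `connect`, `ConnectionLost` and `FakeTarget` are hypothetical stand-ins, and the fake target simply fails on its second read to mimic a reboot.

```python
import queue
import time


class ConnectionLost(Exception):
    """Raised by the (hypothetical) reader when the link to the target drops."""


def monitor(connect, values, n_values, retry_delay_s=0.0):
    """Queue n_values readings, reconnecting whenever the link drops."""
    conn = None
    while n_values > 0:
        try:
            if conn is None:
                conn = connect()        # open (or re-open) the connection
            values.put(conn.read())     # hand the reading to the consumer
            n_values -= 1
        except ConnectionLost:
            conn = None                 # discard the stale connection
            time.sleep(retry_delay_s)   # back off before reconnecting


class FakeTarget:
    """Stand-in for the RT target: the second read fails, like a reboot."""

    def __init__(self):
        self.reads = 0

    def read(self):
        self.reads += 1
        if self.reads == 2:
            raise ConnectionLost()
        return self.reads


target = FakeTarget()
readings = queue.Queue()
monitor(lambda: target, readings, n_values=3)
print([readings.get() for _ in range(3)])   # [1, 3, 4]
```

The reading lost during the "reboot" is simply skipped; the loop carries on as soon as the connection comes back, without restarting the application.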

Here are some screenshots:

Neville.

-

Look up "Producer Consumer" architecture in the LabVIEW help.

Neville.

-

Can you describe your application in a bit more detail?

If you are scanning digital channels, maybe you could use change detection on the channels?

How about setting up the channels that don't need to be scanned multiple times in one task and the other channels in separate tasks?

Neville.

-

In the past, having graphs/charts transparent and overlaid caused the drawing updates on panels to be drastically slowed down when LV tries to draw and then redraw the layers above. Try to avoid transparency or else make sure transparent elements are not overlaid on each other.

But I agree, panel drawing routines, especially for charts/graphs, have been a performance bottleneck for ages. In this day of streaming video, and of using video cards as co-processors for FFTs, having an application fall to its knees when plotting data is a bit ridiculous.

NI really need to work on improving performance in this area.

N.

-

that's a pretty interesting idea but it's not scalable enough for us. For example right now we have to purchase eye trackers, with PXI we could just plug in a video acquisition board and write our own eye tracker software. Things like that seem very hard without a true bus system.

You could get an eye tracker with a PCI interface and mount it in the ETS (real-time) PC. Really, it's exactly the same as PXI, minus the star trigger bus. But PXI is a more robust (though expensive, when you factor in chassis + controller) solution.

Requirements for using a desktop PC as a LabVIEW Real time target

One option would be to put the processing in DLLs and call those from LabVIEW RT, but I'm not sure that works (it does in 'normal' LabVIEW).

Yes, that will work with LV-RT as well. We have written C DLLs and called them in RT before.

As an aside, I notice that there is a "Real-Time Execution Trace Toolkit" for LabWindows as well (in the latest LW 2010 SP1 Release Notes).

Have fun!

N.

-

- real-time. I'll try to explain: must be able to acquire 16 channels of analog data at 20 kHz/16 bit, together with (i.e. synchronized to the uSec) 32 digital TTL inputs sampled at the same rate, every 2 mSec with a maximum jitter of a couple of uSec.

In other words, all channels of the external analog/digital signal must make it into the software exactly 1 mSec later, represented as arrays of 20 samples per channel.

- a low-latency link to an external PC running Windows/Linux/Mac OS. Ideally, if the external PC were polling that link, it should be able to react to any data on it within a mSec. I'm not sure that is even possible on a non-real-time OS, but right now we poll the parallel port on XP and it seems to do that fine without too much jitter (it never seems higher than 5 mSec or so).

- we must be able to write at least the signal processing in C++, with a compiler providing support for at least TR1. Ideally the entire application would be written in Visual Studio.

- line-by-line debugging of the code. Again, ideally, this would be debugging with Visual Studio.

At first glance much of this seems possible with PXI running NI's real-time OS, programmed using LabWindows. But the site is a bit vague about what can and cannot be done using LabWindows, plus I'm not sure how the debugging works.
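For a sense of scale, the numbers in the requirement work out as in the short Python calculation below. Two things in it are assumptions: the 32 digital lines are taken to be packed as one 32-bit word per sample, and the block length is taken as 1 mSec, because 20 samples per channel at 20 kHz is 1 mSec of data (a 2 mSec block would hold 40 samples).

```python
SAMPLE_RATE_HZ = 20_000      # per channel, from the requirement above
ANALOG_CHANNELS = 16
BYTES_PER_ANALOG_SAMPLE = 2  # 16-bit samples
DIGITAL_LINES = 32           # assumed packed as one 32-bit word per sample
BLOCK_MS = 1                 # block length implied by "20 samples per channel"

samples_per_block = SAMPLE_RATE_HZ * BLOCK_MS // 1000
analog_bytes_per_s = SAMPLE_RATE_HZ * ANALOG_CHANNELS * BYTES_PER_ANALOG_SAMPLE
digital_bytes_per_s = SAMPLE_RATE_HZ * DIGITAL_LINES // 8
total_bytes_per_s = analog_bytes_per_s + digital_bytes_per_s

print(samples_per_block)    # 20
print(analog_bytes_per_s)   # 640000
print(digital_bytes_per_s)  # 80000
print(total_bytes_per_s)    # 720000
```

At roughly 0.7 MB/s the raw throughput is modest; the hard part of the requirement is the latency and jitter, not the data rate.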

Are the 16 channels all acquired simultaneously at the same rate, and starting together? I think that is totally possible. However if you want to acquire them at different rates, then you would have to break them out on different cards since each card has only one sample clock.

The PXI is equipped with an internal trigger bus as well as RTSI lines, so you should be able to route your start trigger to a separate DIO card to start the DIO operations with the AI task.

You could also use a PC as a real-time platform, provided it meets certain specifications (Ethernet chipset and such). The setup might take a bit of time, but once established, it behaves just like a PXI platform (but cheaper, and you need an RT runtime licence). It probably will not have the PXI's star-trigger bus, which might be required in your application. Again, I am not sure if LabWindows RT will run on the PC, but if LV-RT can, I am assuming it is possible with LW-RT as well. You can then buy NI's PCI versions of DAQ cards and use those in the LW-RT environment on the PC.

Also, the PXI controller comes in an extended temperature option and usually stands up quite well to harsh environments.

There is a LabWindows real-time module as well, which I am almost certain should run on PXI. I have never used it, so I have no idea of its debug capabilities.

http://www.ni.com/lwcvi/realtime/

Some points learned through experience:

1 When you have a choice between "low-cost" NI hardware and more expensive, go with the more expensive. You will almost certainly need some functionality (more DMA channels etc.) in the more expensive card as you go further along your development.

2 Pay careful attention to the number of modules. ALWAYS get the 8-slot PXI chassis at the very minimum (you might need the 13-slot). I have been bitten in the past by getting the 4-slot or the 5-slot and then being stuck with $1500 hardware that can't be upgraded or easily replaced without ending up with unused hardware.

3 Get the latest/greatest/fastest PXI controller you can (from NI since you need the RT support). Getting older hardware is a recipe for lots of pain and heartache.

If you go with PXI, you always have the option of using LabVIEW RT as well, if you think of going that route. The debug tools and communication tools there are excellent.

I have just recently finished debugging a PXI RT system with Counter, DI, DO, and image acquisition using the RT Execution Trace Toolkit. It's great (once you understand how it works, with the very spartan documentation available).

The network streams API would be perfect for deterministic communication as well.

Good luck and keep us apprised of your progress.

Neville.

-

I am basically using an analog input to acquire some pulses, from which I want to calculate the number of ticks and, further, the angle and number of rotations.

If I were using a counter card, life would be easy, but I currently don't have one.

Can anybody suggest easy ways of deriving the ticks from the incoming waveform?

Cheers ,

T

What hardware are you using? Most NI multifunction hardware has a couple of counters already built in on the card.

N.
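If it does have to be done in software, one common approach is threshold detection with hysteresis on the acquired waveform: count a tick each time the signal rises through a high threshold after having been below a low one. The Python sketch below is a minimal illustration. The threshold values and `ticks_per_rev` are assumptions that depend on the sensor, and it counts in one direction only.

```python
def count_rising_edges(samples, low, high):
    """Count low-to-high transitions using two thresholds (hysteresis)."""
    count = 0
    armed = False                 # True once the signal has been below `low`
    for value in samples:
        if armed and value >= high:
            count += 1            # a full low-to-high swing: one tick
            armed = False
        elif value <= low:
            armed = True
    return count


def ticks_to_rotation(ticks, ticks_per_rev):
    """Return (whole rotations, angle in degrees within the current turn)."""
    rotations, remainder = divmod(ticks, ticks_per_rev)
    return rotations, 360.0 * remainder / ticks_per_rev
```

The two thresholds stop noise around a single level from being counted as extra ticks. The analog sample rate has to be comfortably above the pulse rate, which is one reason a hardware counter is the easier route.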

-

- real-time. I'll try to explain: must be able to acquire 16 channels of analog data at 20 kHz/16 bit, together with (i.e. synchronized to the uSec) 32 digital TTL inputs sampled at the same rate, every 2 mSec with a maximum jitter of a couple of uSec.

In other words, all channels of the external analog/digital signal must make it into the software exactly 1 mSec later, represented as arrays of 20 samples per channel.

- a low-latency link to an external PC running Windows/Linux/Mac OS. Ideally, if the external PC were polling that link, it should be able to react to any data on it within a mSec. I'm not sure that is even possible on a non-real-time OS, but right now we poll the parallel port on XP and it seems to do that fine without too much jitter (it never seems higher than 5 mSec or so).

- we must be able to write at least the signal processing in C++, with a compiler providing support for at least TR1. Ideally the entire application would be written in Visual Studio.

- line-by-line debugging of the code. Again, ideally, this would be debugging with Visual Studio.

At first glance much of this seems possible with PXI running NI's real-time OS, programmed using LabWindows. But the site is a bit vague about what can and cannot be done using LabWindows, plus I'm not sure how the debugging works.

Are the 16 channels all acquired simultaneously at the same rate, and starting together? I think that is totally possible. However if you want to acquire them at different rates, then you would have to break them out on different cards since each card has only one sample clock.

The PXI is equipped with an internal trigger bus as well as RTSI lines, so you should be able to route your start trigger to a separate DIO card to start the DIO operations with the AI task.

Do you have any proprietary/non-NI hardware you will be using? I am not sure how that would translate to the PXI platform.

You could also use a PC as a real-time platform, provided it meets certain specifications (Ethernet chipset and such; check out "ETS" on NI's website). The setup might take a bit of time and research, but once established, it behaves just like a PXI platform (but cheaper, and you need an RT runtime licence). It probably will not have the PXI's star-trigger bus, which might be required in your application, but you can use the RTSI bus. Again, I am not sure if LabWindows RT will run on the PC, but if LV-RT can, I am assuming it is possible with LW-RT as well. You can then buy NI's PCI versions of DAQ cards and use those in the LW-RT environment on the PC. With this setup you can easily and cheaply upgrade the PC to the latest/fastest processor down the road. There is no need for a chassis for the DAQ cards either.

However, the PXI controller comes in an extended temperature option, usually stands up quite well to harsh environments, and works right away painlessly with RT.

There is a LabWindows real-time module as well, which I am almost certain should run on PXI. I have never used it, so I have no idea of its debug capabilities.

http://www.ni.com/lwcvi/realtime/

Some points learned through experience:

1 When you have a choice between "low-cost" NI hardware and more expensive, go with the more expensive. You will almost certainly need some functionality (more DMA channels etc.) in the more expensive card as you go further along your development.

2 Pay careful attention to the number of modules. ALWAYS get the 8-slot PXI chassis at the very minimum (you might need the 13-slot). I have been bitten in the past by getting the 4-slot or the 5-slot and then being stuck with $1500 hardware that can't be upgraded or easily replaced without ending up with unused hardware.

3 Get the latest/greatest/fastest PXI controller you can (from NI since you need the RT support). Getting older hardware is a recipe for lots of pain and heartache.

If you go with PXI, you always have the option of using LabVIEW RT as well, if you think of going that route. The debug tools and communication tools there are excellent.

I have just recently finished debugging a PXI RT system with Counter, DI, DO, and image acquisition using the RT Execution Trace Toolkit. It's great (once you understand how it works, with the very spartan documentation available).

The network streams API would be perfect for deterministic communication as well.

Good luck and keep us apprised of your progress.

Neville.

-

Also, I am wondering why my subpanel invoke looks different from yours (it does not have the reference input, although the version is 2010). Can you send me the file of your example instead of an image?

Thank you

Farid,

Jon has posted a VI snippet. Download it and you should be able to run the code. Search the examples in LabVIEW for "subpanel".. it should bring up some useful stuff.

N.

Wasted day at the track thanks to LV 2011, One more bug...

in LabVIEW General

Posted

I recall reading about USB adapters with the FTDI chipset being the most reliable. Try to get one that has that. I recently bought a USB-RS485 adapter from

http://www.rs232-converters.com/USB_to_serial_adapters/USB_to_rs232_rs485_rs422_ttl_converter.htm

It works fine.