Posts: 752

Days Won: 2

Posts posted by Neville D

-

QUOTE (Matthew Zaleski @ Mar 24 2009, 03:32 PM)

Did I misread the provided NI link to RT Target System Replication? It seems to imply that I could image the drive in my cRIO, drivers and all, then replicate it to other cRIOs. The tricky part would be the INI file controlling the IP address. Assuming I misread that, then yes, I'd love to be able to somehow script the whole device driver install for NI-MAX. I now have theoretically identical systems scattered worldwide. Explaining NI-MAX to someone half a world away isn't my idea of fun, hehe.

You can "replicate" the software setup of a target back to itself (for example, if the hard drive was corrupted), but not to other targets of the same type.

Upgrading to LV-RT 8.6 on one target and copying that to another target is not allowed.

N.

-

QUOTE (jlokanis @ Mar 24 2009, 12:21 PM)

Quick tip: don't place a large data structure in an attribute of a variant that you store in a single-element queue and access from several points in your app. If you do, then every time you preview the queue element to read it, you will make a copy of the whole shebang, even if you are only interested in another attribute of the variant structure that is a simple scalar. This will eventually blow up on you.

So is the copy made because of the queue preview, or because it's an attribute of the variant?

(Just to be clear.)

N.

-

Do you have a lot of targets in your project?

Where does it slow down during loading: in your VIs or in the vi.lib VIs?

N.

-

Or you could save to file at a reduced sample rate.

Or use filtering to save phenomena of interest (peak values) at a reduced sample rate.

Basically, you need to analyse your requirements BEFORE you start collecting terabytes of data. If there is a "problem", how much time are you willing to spend wading through multiple hard drives' worth of data?
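To put numbers on "analyse your requirements first", here is a small Python sketch that estimates raw logging volume and shows one way to keep only peak values at a reduced rate: the largest absolute value in each block of samples. The channel count, sample rate and sample size in the demo are hypothetical, and this is an illustration, not a LabVIEW implementation.

```python
from typing import List


def data_volume_bytes(channels: int, sample_rate_hz: float,
                      bytes_per_sample: int, seconds: float) -> float:
    """Raw storage for continuous logging (no file headers, no compression)."""
    return channels * sample_rate_hz * bytes_per_sample * seconds


def peak_per_block(samples: List[float], block: int) -> List[float]:
    """Keep only the largest absolute value in each block of samples.

    A trailing partial block is kept too, so no peak is silently dropped.
    """
    if block <= 0:
        raise ValueError("block must be positive")
    return [max(abs(s) for s in samples[i:i + block])
            for i in range(0, len(samples), block)]


if __name__ == "__main__":
    # Hypothetical setup: 8 channels at 100 kS/s, 2 bytes/sample, 1 hour.
    gb = data_volume_bytes(8, 100_000, 2, 3600) / 1e9
    print(f"{gb:.2f} GB per hour")
    # One peak per 1000 samples would cut that volume by a factor of 1000.
    print(peak_per_block([1.0, -5.0, 2.0, 3.0, 0.0, -1.0], 3))
```

Whether peak values are the right "phenomena of interest" depends on the application; a block mean or RMS would use the same structure.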

Neville

-

I seem to remember trying something similar a few years ago, but I wasn't able to make it work either. It had something to do with the fact that some modules are smarter than others (i.e. you can query certain information from some modules but not from the simpler ones).

Maybe a quick call to NI is in order?

N.

-

Cat,

History and jokes aside, I was re-reading all the earlier posts (the ones actually related to your issue), and it seems to me there are a lot of good ideas there. Forget about the toolkit and the $ your boss spent to get it for you. Use those tried-and-true methods, and I'm sure you can isolate where the memory leak is occurring.

Track the states and log them to file (as I pointed out). Use Wireshark to track TCP transactions to find out whether the communication is the cause (as someone else pointed out).

Build a "test harness" set of VIs with parts of the code left out, and run that (for example, no TCP, just reading from file).

Put case structures in suspect VIs, run with certain parts of the code deactivated, and see if the leak still occurs.

Run test cases on multiple PCs to speed up debugging.
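As a rough text-language analogue of "track the states, log them to file", the Python sketch below writes one line per state with a timestamp and the currently traced memory, using the standard `tracemalloc` module. The class and state names are made up for illustration; in LabVIEW the equivalent would be logging the state name plus a memory figure from your own state machine. A leak shows up as the memory column climbing for one state while the others stay flat.

```python
import io
import time
import tracemalloc
from typing import TextIO


class StateLogger:
    """Write one tab-separated line per state: timestamp, state, traced bytes."""

    def __init__(self, out: TextIO) -> None:
        self.out = out
        if not tracemalloc.is_tracing():
            tracemalloc.start()

    def log(self, state: str) -> int:
        current, _peak = tracemalloc.get_traced_memory()
        self.out.write(f"{time.time():.3f}\t{state}\t{current}\n")
        self.out.flush()  # keep the log useful even if the app later crashes
        return current


if __name__ == "__main__":
    buf = io.StringIO()  # use open("states.log", "a") for a real log file
    logger = StateLogger(buf)
    leaked = []
    for _ in range(3):
        logger.log("Acquire")
        leaked.append(bytearray(100_000))  # simulated leak in "Process"
        logger.log("Process")
    print(buf.getvalue(), end="")
```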

Fancy tools are all well and good, but good old-fashioned debugging is drudgery; basically it is "99% perspiration and 1% inspiration".

PS: A few weeks ago I tried using the Real-Time Trace Toolkit on a vision application running on a quad-core RT target, with multiple timed and regular While Loops doing processing and TCP/IP communication over GigE, and I couldn't make head or tail of the information. I gave up on that and instead ran a series of tests with and without the timed loops to compare.

PS2: Post back with the results of your debugging for more help from the community.

Neville.

-

Mike has covered most of the basics, but check the gain on your board. "Low-cost" USB devices usually have low gain and are not ideal for thermocouples (signals in µV) or other tiny signals. A DAQ device with isolated inputs will also help, but again, it will be expensive.

For future reference, read this.
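A quick way to see why gain matters: one ADC code spans (range ÷ 2^bits), and a type K thermocouple produces only about 41 µV/°C near room temperature (an approximate figure). The Python sketch below does that arithmetic for two hypothetical input ranges; on a ±10 V range at 16 bits, one code is about 305 µV, i.e. roughly 7.4 °C. It only covers quantization; noise and offset errors make things worse, not better.

```python
def lsb_volts(v_min: float, v_max: float, bits: int) -> float:
    """Voltage step of one ADC code over the selected input range."""
    return (v_max - v_min) / 2 ** bits


def degrees_per_lsb(lsb: float, sensitivity_v_per_degc: float) -> float:
    """Temperature change corresponding to one ADC code."""
    return lsb / sensitivity_v_per_degc


# Approximate type K sensitivity near room temperature (about 41 uV/degC).
TYPE_K_V_PER_DEGC = 41e-6

if __name__ == "__main__":
    for v_min, v_max in [(-10.0, 10.0), (-0.1, 0.1)]:  # hypothetical ranges
        lsb = lsb_volts(v_min, v_max, 16)
        print(f"+/-{v_max} V, 16 bit: {lsb * 1e6:.2f} uV per code, "
              f"{degrees_per_lsb(lsb, TYPE_K_V_PER_DEGC):.3f} degC per code")
```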

Neville.

-

QUOTE (JeroenM @ Mar 19 2009, 01:46 PM)

I already mask everything that is not needed for the analysis. The main problem is that the calibration is not what I want: the round picture must be calibrated to a square, so that it looks like a normal photo without distortion. The dots in the middle of the calibrated image are not round like the rest of the dots.

You mean you want the image dewarped? I don't think there are any built-in routines to dewarp an image; you will have to write your own. The calibration functions are meant to extract measurements in real-world units, so your measurements will be correct, but the picture won't "look" flat.

We have done this in the past. Dewarping is quite processor-intensive, and for a machine vision app it's only eye candy: it looks great, but serves no measurement purpose. Use the calibration routines for measurement.
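If you do decide to write your own dewarping, the core is an inverse mapping: for every pixel of the corrected image, compute where it came from in the distorted image and sample there. The Python sketch below assumes a simple one-parameter radial model, r_d = r_u(1 + k·r_u²), with nearest-neighbour sampling; a strong fisheye lens may need a different model, and k would have to be fitted from your calibration grid. The per-pixel loop also shows why dewarping is processor-intensive.

```python
from typing import List

Image = List[List[int]]


def dewarp(src: Image, k: float) -> Image:
    """Undo radial distortion by inverse mapping (nearest-neighbour sampling).

    Assumed model: r_d = r_u * (1 + k * r_u**2), radii normalised so the
    image corner is at r = 1. k < 0 models barrel distortion, k > 0
    pincushion. Output pixels whose source falls outside the image become 0.
    """
    h, w = len(src), len(src[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    norm = (cx ** 2 + cy ** 2) ** 0.5 or 1.0  # avoid dividing by 0 for 1x1
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = (x - cx) / norm, (y - cy) / norm
            scale = 1 + k * (dx * dx + dy * dy)
            sx = round(cx + dx * scale * norm)
            sy = round(cy + dy * scale * norm)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = src[sy][sx]
    return out
```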

Read the first few chapters of the NI Vision Concepts Manual for more info.

Neville.

-

QUOTE (neBulus @ Mar 20 2009, 04:02 AM)

So it's not an edge case after all. Then our shared experience of having to fill this hole, left exposed by the project's way of managing distributed LV apps, highlights the fact that the project can benefit from ... "Thinking outside the project".

Ben

Yes, I have been harping on about this to NI engineers for a while, at every opportunity I get.

jgcode, I am using the System Replication tool as part of my remote utility to deal with multiple targets, but like I said, it took a while to get everything running smoothly. Really, the project should somehow let you download the same code to a number of targets with a single "build & run as startup" option.

Another pet peeve of mine is the inability to update NI software on a remote target without NI-MAX. Customers don't know or care about MAX; they would like a CD (sent by us) that they could run to upgrade their NI RT hardware to the latest and greatest versions.

Neville.

-

I'm not sure what your issue is. You can save the calibration and reuse it later; you don't have to calibrate every time. You can also apply calibrations to images with a Region of Interest marked out. In that case, Vision is smart enough to calibrate only the ROI part of the image, which speeds things up.

So look for some feature of interest, mark it with a ROI rectangle, and then apply whatever processing you need; you will get results in calibrated real-world coordinates.
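The idea of calibrating once, saving the result, and applying it only to points inside an ROI can be sketched as follows. This Python example assumes a purely linear calibration (uniform mm-per-pixel scale plus an origin) stored as JSON; the class, field names and file format are hypothetical, and NI Vision's own calibration files and nonlinear corrections are not modelled.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple

Point = Tuple[float, float]
Roi = Tuple[float, float, float, float]  # (left, top, right, bottom)


@dataclass
class LinearCalibration:
    """Hypothetical pixel-to-world mapping: world = origin + pixel * scale.

    Real vision calibrations can also correct perspective and lens
    distortion; only a uniform scale is modelled here.
    """
    mm_per_px_x: float
    mm_per_px_y: float
    origin_x_mm: float = 0.0
    origin_y_mm: float = 0.0

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text: str) -> "LinearCalibration":
        return cls(**json.loads(text))

    def to_world(self, px: float, py: float) -> Point:
        return (self.origin_x_mm + px * self.mm_per_px_x,
                self.origin_y_mm + py * self.mm_per_px_y)


def calibrated_points_in_roi(points: List[Point], roi: Roi,
                             cal: LinearCalibration) -> List[Point]:
    """Convert only the pixel coordinates that fall inside the ROI."""
    left, top, right, bottom = roi
    return [cal.to_world(x, y) for x, y in points
            if left <= x < right and top <= y < bottom]


# Usage: calibrate once, write cal.to_json() to a file, and reload it with
# LinearCalibration.from_json(...) at startup instead of recalibrating.
```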

Neville.

-

QUOTE (neBulus @ Mar 19 2009, 05:53 AM)

What I don't like about the project... The tools in the project are only in the project. Say I have an app that has to talk to 500 cFP units. Without bending over backwards (which I did), you will need a project with 500 cFP nodes configured to be able to load the same software on each, but with different IP addresses and config files. Yes, this is an edge case.

No, this is definitely NOT an edge case. I have an app with 12 PXI targets all running the same code at a customer site, and I had to build the EXEs individually and download them one by one.

I built my own tool to FTP the EXE to all targets simultaneously, but that took a fair bit of work.
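A tool like that can be sketched in Python with the standard `ftplib` and `concurrent.futures` modules: upload the same file to every target in parallel and collect per-target errors instead of stopping at the first failure. The host addresses, login credentials and remote path are placeholders (check what your targets' FTP server expects), and this is a sketch, not the tool described above.

```python
from concurrent.futures import ThreadPoolExecutor
from ftplib import FTP
from typing import Callable, Dict, Iterable, Optional


def ftp_upload(host: str, local_path: str, remote_path: str,
               user: str = "anonymous", password: str = "") -> None:
    """Upload one file to one target over FTP in binary mode."""
    with FTP(host, timeout=30) as ftp:
        ftp.login(user, password)
        with open(local_path, "rb") as f:
            ftp.storbinary(f"STOR {remote_path}", f)


def deploy_to_all(hosts: Iterable[str], upload: Callable[[str], None],
                  max_workers: int = 16) -> Dict[str, Optional[str]]:
    """Call upload(host) for every host in parallel.

    Returns host -> None on success or an error string on failure, so one
    unreachable target does not stop the others from being updated.
    """
    def attempt(host: str) -> Optional[str]:
        try:
            upload(host)
            return None
        except Exception as exc:  # collect the failure, keep going
            return f"{type(exc).__name__}: {exc}"

    host_list = list(hosts)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(host_list, pool.map(attempt, host_list)))


# Usage (addresses, file names and credentials are placeholders):
# results = deploy_to_all(
#     ["192.168.1.11", "192.168.1.12"],
#     lambda h: ftp_upload(h, "startup.rtexe", "<remote path on target>"))
# for host, error in results.items():
#     print(host, "OK" if error is None else error)
```

Passing the upload function in as a parameter keeps the parallel/error-collecting part testable without a real FTP server.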

Also, the more targets you add, the longer the project takes to load, even though each target has pretty much the same info. Making changes to these projects also seems to take longer (e.g. making a copy of the project).

N.

-

QUOTE (Aristos Queue @ Mar 18 2009, 03:07 PM)

Another solution would be to have the UI be VI A and the state machine be VI B, and instead of calling VI B as a subVI, call it using a VI Reference and the Run method; then, when the user hits the STOP button, you call the Abort method of the VI reference. Your app as a whole keeps running, but that state machine stops. Is that more or less dirty? Can it be made acceptable somehow?

Aborting camera applications on RT leaves the IMAQdx driver hanging, and the camera cannot be accessed until the system is rebooted, so I would say this is a bad idea.

N.

-

QUOTE (ASTDan @ Mar 18 2009, 02:28 PM)

"NI introduced autopopulating folders in LabVIEW 8.5 to automatically reflect the contents of folders on disk." (from the web)

Amid all this project goodness, one annoying thing: with auto-populating folders, every time you open the project it shows an asterisk (*) for unsaved changes, even when there are none.

Just a caution.

N.

-

QUOTE (ASTDan @ Mar 18 2009, 01:08 PM)

The auto-populating thing really cheeses me. I create little test VIs all the time during development to test out ideas before I integrate them into the main code. These show up in my project when they don't belong, and I have to go into the project to delete them.

Well, don't auto-populate folders if that annoys you. I usually put test VIs under a Test or Experimental folder and leave them in the project until I absolutely no longer need them.

Avoiding cross-linking and the ability to work with different RT targets are big advantages of going with projects.

Neville.

-

QUOTE (aaronb @ Mar 18 2009, 08:46 AM)

http://lavag.org/old_files/monthly_03_2009/post-2680-1237402388.jpg

I notice you have 7.1.1. That SHOULD allow you to save Extract Single Tone (or any other VI from vi.lib) as a new VI. Do this before making any changes, and save it to a different location so that your changes don't get erased by the next LV update!

I have 8.6, so my changes are a lot more work; plus, you wouldn't be able to open an 8.6 VI in 7.1.1 anyway.

N.

-

Great! Thanks Paul.

N.

-

Coercion dots, especially on large data types like arrays, can be a problem at run time, because the memory manager may have to be invoked to allocate a buffer for the converted data. This can hurt loop performance, especially if the arrays are large. To be safe, convert the data explicitly to the required type, using the appropriate function from the Numeric » Conversion palette.

N.

-

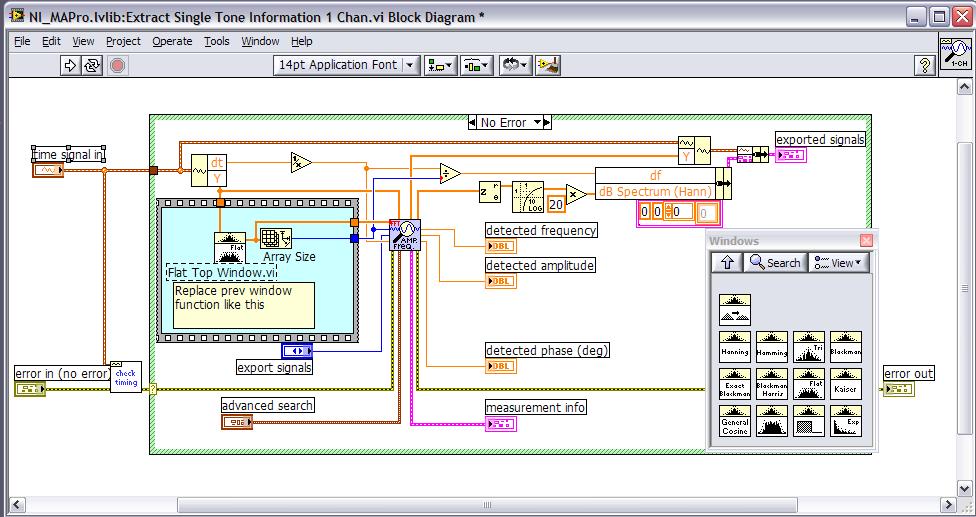

It seems you can't modify VIs in vi.lib anymore. ARGGGHH!! Why does NI have to make everything more difficult with every new release?

Anyway, open up Extract Single Tone Information 1 Chan.vi and manually build an exact copy of it using Extract Single Tone Information from Hann Spectrum.vi.

Make a copy of ma_FFT with Hanning.vi, but change the WINDOW typedef constant into a control and switch it from Hanning to flat top.

Put this modified VI into your copy of the first VI and you should be done.

These are the mechanics of it, but you can only tell with actual data whether changing the windowing is going to give you "correct" results.
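One way to check with data is to compare amplitude estimates. The Python sketch below (not NI's VI) windows a test tone, takes the largest DFT bin and corrects for the window's coherent gain. For a tone halfway between bins, the Hann window underestimates the amplitude by about 15% (its scalloping loss), while the flat top window stays within a fraction of a percent, at the cost of a wider main lobe, i.e. poorer frequency resolution. The flat top coefficients are the commonly published five-term set, and the test signal is synthetic.

```python
import cmath
import math
from typing import Callable, List

Window = Callable[[int], List[float]]


def hann(n: int) -> List[float]:
    """Periodic (DFT-even) Hann window."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def flat_top(n: int) -> List[float]:
    """Periodic five-term flat top window (commonly published coefficients)."""
    a = (0.21557895, 0.41663158, 0.277263158, 0.083578947, 0.006947368)
    return [sum((-1) ** k * a[k] * math.cos(2 * math.pi * k * i / n)
                for k in range(5))
            for i in range(n)]


def tone_amplitude(x: List[float], window: Window) -> float:
    """Peak amplitude of a single tone from the largest DFT bin.

    The bin magnitude is scaled by 2 / sum(w) to undo the window's coherent
    gain. No interpolation between bins is done, so the result shows the
    window's scalloping loss directly.
    """
    n = len(x)
    w = window(n)
    xw = [xi * wi for xi, wi in zip(x, w)]
    peak = max(abs(sum(v * cmath.exp(-2j * math.pi * k * i / n)
                       for i, v in enumerate(xw)))
               for k in range(n // 2 + 1))
    return 2 * peak / sum(w)


if __name__ == "__main__":
    n = 256
    # Unit-amplitude tone exactly halfway between bins 10 and 11 (worst case).
    x = [math.cos(2 * math.pi * 10.5 * i / n) for i in range(n)]
    print("Hann:    ", round(tone_amplitude(x, hann), 4))
    print("Flat top:", round(tone_amplitude(x, flat_top), 4))
```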

FYI, this VI was once available as a download from the NI website and could be modified at will. Maybe you can find an older version there, which might be quicker to modify.

Neville.

-

QUOTE (Aristos Queue @ Mar 16 2009, 09:45 AM)

But I have worked with various hardware teams over the years, and I've discovered that the further you get from signal processing and the closer you get to industrial control, the less you need to understand the hardware. Once you have an API that provides control over a motor and another API that acquires a picture from a camera, then it's all math and software to figure out how to spin the motor such that the robot arm moves to a specific spot in the image and picks up the target object. You still need to understand the limitations that the hardware places on the software -- memory limits, data type restrictions, processor speed, available parallelism -- but those are restrictions within which you can design the software without understanding analog electricity itself.

I tend to disagree. It is equally important (if not more so) to really understand hardware limitations. Look at the number of questions on LAVA where people ask about the capabilities of NI DAQ hardware. It is straightforward to crank out code once you understand the DAQmx API, but far harder to figure out which card to use, or whether the card you have can do what you require of it.

Selecting inappropriate hardware (or using hardware incorrectly, e.g. not accounting for ground loops or common-mode voltages) because of a lack of application-specific knowledge can be an expensive mistake.

All your other points are very valid though.

N.

-

Is this a homework assignment?

-

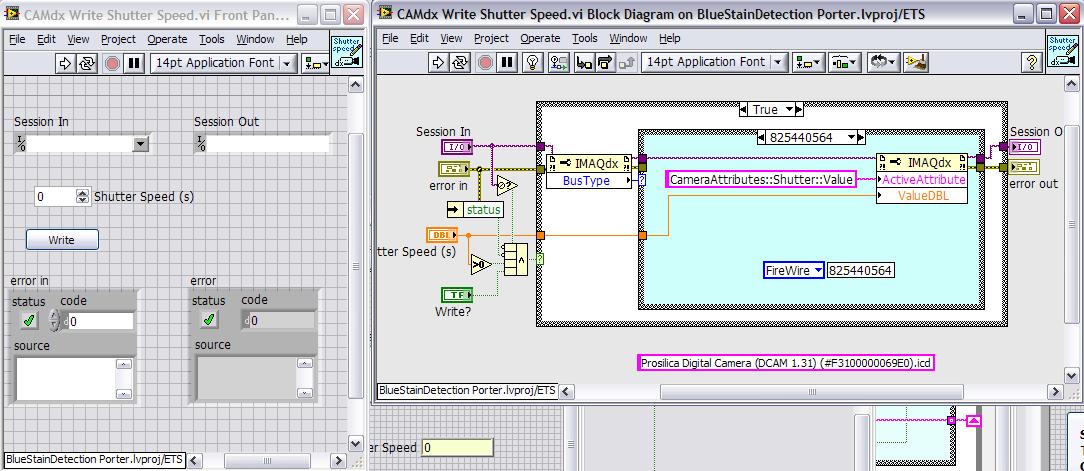

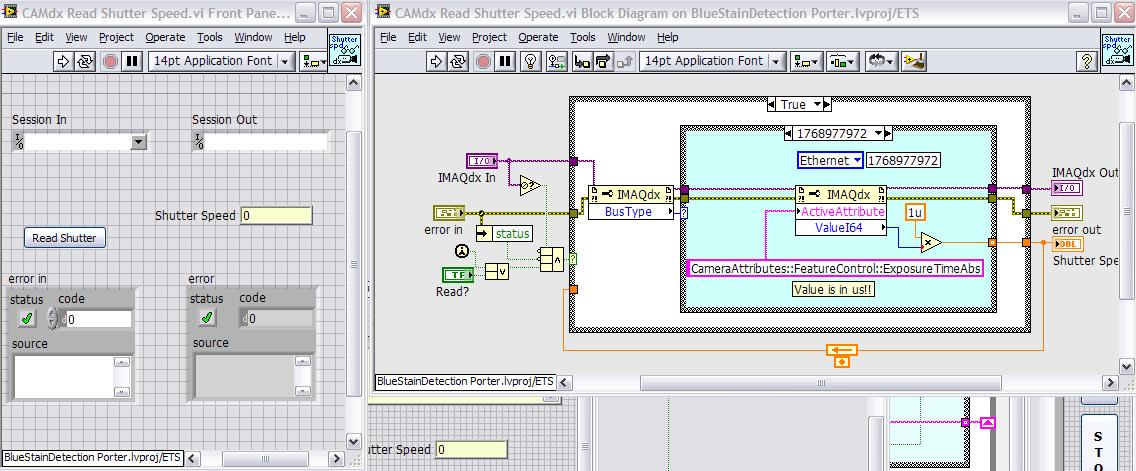

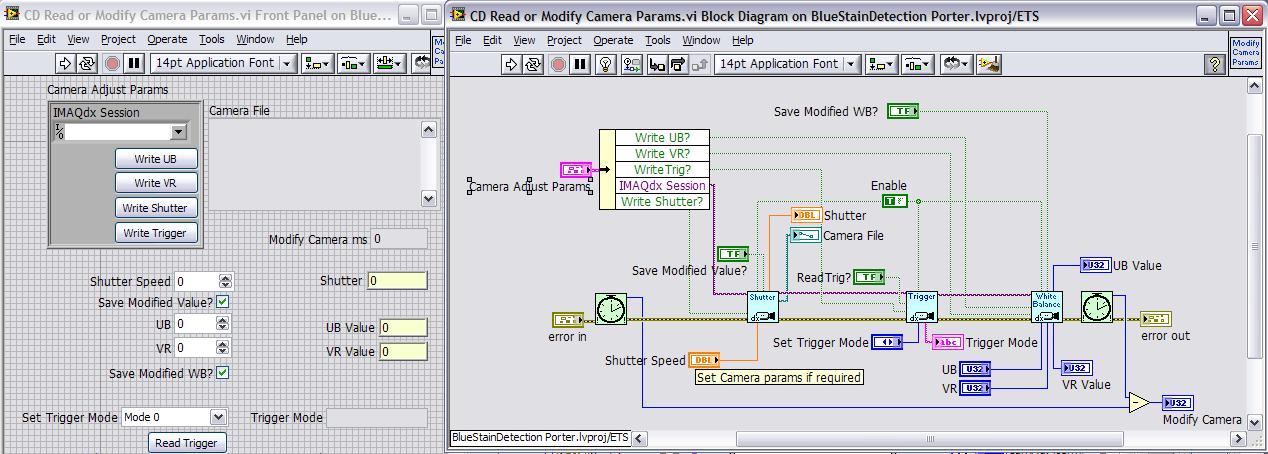

I took a look. It should work. Maybe you are updating too many camera parameters at one time.

I would write subVIs for every camera property you are changing and then activate only those subVIs whose properties have actually changed.

There's no point writing Width and Height if only PacketSize has changed, and no point reading everything back out either.
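The "only write what changed" idea is independent of the camera driver. Here is a minimal Python sketch: the cache remembers the last value written per attribute and calls a write callback only for attributes whose value differs. The `write` callback and the attribute names are hypothetical stand-ins for whatever actually sets camera properties (in LabVIEW, the shift-register cache described below).

```python
from typing import Any, Callable, Dict, List

_MISSING = object()  # sentinel: attribute never written yet


class CameraSettingsCache:
    """Remember the last value written for each camera attribute and only
    send attributes whose value actually changed.

    `write` is a hypothetical callback standing in for whatever really sets
    an attribute on the camera; it is not a real driver API.
    """

    def __init__(self, write: Callable[[str, Any], None]) -> None:
        self._write = write
        self._cache: Dict[str, Any] = {}

    def apply(self, settings: Dict[str, Any]) -> List[str]:
        """Write changed attributes in order; return their names."""
        changed = [name for name, value in settings.items()
                   if self._cache.get(name, _MISSING) != value]
        for name in changed:
            self._write(name, settings[name])
            self._cache[name] = settings[name]  # cache only after the write succeeded
        return changed

    def invalidate(self) -> None:
        """Forget everything, e.g. after reconnecting to the camera."""
        self._cache.clear()
```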

This is how I do it:

WRITE:

Cache your camera values in a shift register on the first iteration, then update the cached values as you change parameters. The fact that you are timing out indicates that something is not able to keep up: either the camera can't keep up with too many changes, or possibly the loop rate is too fast.

READ (with cache):

BusType is used only to detect whether the camera is FireWire or GigE, so that the appropriate command is sent. The feedback node stores the last value read, so as not to overload the camera with unnecessary commands.

Here's what sending commands looks like (run in a loop):

Neville.

-

Also read this.

-

QUOTE (orko @ Mar 6 2009, 02:53 PM)

I'm not sure what you mean by combining ROIs and how that would apply here. My U16 image is always going to be the same size as my RGB, so I'm thinking that I probably won't be able to benefit from cordoning off an ROI.

Say, for example, you had 3 rectangles that you wanted to keep in an image, ignoring the rest of the image (i.e. a mask).

You can define each of these as a ROI using Rectangle to ROI, make an array of ROIs, then use Group ROI's to get one composite ROI.

Next, use ROI to Mask to transform it into a mask. It's very fast: you don't touch the pixels in the image until the very last step, and even then only a relatively small number of them.
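The same sequence in plain Python, as an illustration only: the grouping step works purely on rectangle coordinates, and pixels are touched only when the combined mask is rasterized and applied. Rectangles are assumed to be (left, top, right, bottom) with exclusive right/bottom edges; the function names are hypothetical and are not NI Vision functions.

```python
from typing import Iterable, List, Tuple

Rect = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive
Grid = List[List[int]]


def rects_to_mask(rects: Iterable[Rect], width: int, height: int) -> Grid:
    """Group several rectangles into one 0/1 mask (1 = pixel to keep)."""
    mask = [[0] * width for _ in range(height)]
    for left, top, right, bottom in rects:
        # Clip each rectangle to the image, then mark its pixels.
        for y in range(max(top, 0), min(bottom, height)):
            for x in range(max(left, 0), min(right, width)):
                mask[y][x] = 1
    return mask


def apply_mask(image: Grid, mask: Grid) -> Grid:
    """Zero every pixel outside the mask; keep the others unchanged."""
    return [[p if m else 0 for p, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]
```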

N.

-

Make a mask such that

U16 pixels non-zero => mask pix=1 (pixel of interest)

U16 pixels zero => mask pix=0 (not interested)

Apply this mask to the U16 image, then merge the resulting masked image with your U32 image. Hopefully both images are the same size? (What does "merge" mean in your case? Do you want the U16 image to display on top of your image, hiding the original?) Displaying with varying amounts of transparency is quite complex (see the attached examples) and may not be very fast.
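A minimal sketch of the mask-and-merge logic, written in Python for single-channel (grayscale) images stored as nested lists: the mask is 1 wherever the overlay is non-zero, and those pixels are blended onto the base with an opacity `alpha` (1.0 hides the original completely). Handling a U32 RGB base would mean applying the same blend per colour channel; that, and the function names, are assumptions for illustration.

```python
from typing import List

Gray = List[List[int]]


def nonzero_mask(overlay: Gray) -> Gray:
    """1 where the overlay pixel is non-zero (of interest), 0 elsewhere."""
    return [[1 if p else 0 for p in row] for row in overlay]


def merge(base: Gray, overlay: Gray, alpha: float = 1.0) -> Gray:
    """Blend overlay onto base, but only where the overlay is non-zero.

    alpha = 1.0 hides the base completely under the overlay; smaller values
    make the overlay partially transparent.
    """
    if len(base) != len(overlay) or any(
            len(b) != len(o) for b, o in zip(base, overlay)):
        raise ValueError("images must be the same size")
    mask = nonzero_mask(overlay)
    return [[round(alpha * o + (1 - alpha) * b) if m else b
             for b, o, m in zip(brow, orow, mrow)]
            for brow, orow, mrow in zip(base, overlay, mask)]
```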

Other approaches:

Overlays will work faster than merges (which involve actually manipulating pixels). You could transform your U16 image into a bitmap and just overlay the bitmap.

ROI tools are very powerful (though not very well documented). If you can define a ROI, you can manipulate it a lot faster. For example, you can go from a ROI to a mask and back. You can combine ROIs as well.

Why is it U16? Won't U8 do? Does this overlay change, or is it constant from image to image (like a logo, for example)? If it is constant, convert it to a BMP once at the start and then just overlay that BMP. That's what I do to display our logo over the processed image on a monitor.

Attached are two interesting examples of merging with transparency. One displays an image of a brain with a tumour overlaid on it. It's fairly complex, but it works. I don't remember where I downloaded them from.

Neville.

Deploy RT and PC VI's - PXI Webserver trouble

in Real-Time


Are you able to connect to the remote panel? (Operate>Connect to Remote Panel).

What web server port are you using? The default is 80, which for us is a proxy port, so I usually change it to 90.

I forget whether VI Server has anything to do with it, but try enabling VI Server on the remote target as well.

Check the ni-rt.ini file at C:\ on the target to make sure all your settings are correct.
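To rule out basic connectivity before digging into settings, you can check whether the target's web server port accepts TCP connections at all. A minimal Python sketch using the standard `socket` module; the address in the usage comment is a placeholder, and 90 is just the port mentioned above. An open port only shows that something is listening, not that remote panels are configured correctly.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, name not resolved...
        return False


# Usage (placeholder address for the RT target):
# for port in (80, 90):
#     print(port, port_open("192.168.1.10", port))
```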

N.