Posts posted by Neville D

-

-

I'm working on a large LabVIEW toolkit that will need several families of icons, somewhat math-related. Is there a book, link, software package that would be helpful not so much in creating the icons, but designing pictures that relate to the VI functions? I'm aware of the icon section on IDNET.

There is something on the NI website.. search for Icon Art Glossary or something like that.. they have an html doc that can pull up a whole bunch of icons which you can then modify to your taste.

Neville.

PS Let me know if you can't find it.. I downloaded it a while back, and I can dig it up from my stuff if required.

-

Has anyone had any experience getting LabVIEW RT to run on single board computers? I've had some work and some simply not load at all. The latest is an SBC with an Intel 845GV chipset. It loads from floppy and even sees the hard drive in LabVIEW, but it will not load from the hard drive. I can load it from the format disk, and it appears to see that LabVIEW RT is there, because it says "Loading LabVIEW Real-Time....." but it never accesses the hard drive and eventually says "Could not find OS in root directory." I've tried different formats of the drive, different partition sizes, BIOS settings galore, etc., all with no luck.

It may just be the chipset does not work with LabVIEW RT, but according to Ardence, there is no issue with Pharlap; so, it would be LabVIEW specific. In the past, I've had great success with the Intel 815E chipset, but I'm changing the form factor to a PCISA based backplane and so I need to find a compatible SBC.

Please note, that we are using the built in 8255X based ethernet connection. I've tried it with the built in Ethernet and an Intel Pro 100/S with the same results.

Is the Hard drive formatted for FAT32?

How about the boot sequence? Is it set to A drive and then C drive?

I think the chipset might be a problem. As far as I remember they support a chipset that is not commercially available anymore. You should check with NI on this.

Also, if you are using LV-RT 7.x, you need to download lvalarms.dll from your desktop to the target using the FTP tool in NI-MAX. There is a bug in the lvalarms.dll that gets built in. Check the KnowledgeBase for detailed info about where to find the lvalarms.dll file on your PC and where to place it on your target.

-

Since when? This is not true. NI's web server supports Mozilla browsers as well. Where did you get that information from?

I stand corrected!

Sorry.

Neville.

I wouldn't go and add DataSocket to the complexity of the system. A simple TCP/IP server in the RT app, similar to the DataServer/Client example, will probably work much more reliably and not add much overhead to your app. While I haven't done that on RT systems yet, I often add some TCP/IP server functionality to other apps to allow them to be monitored from all kinds of clients.

Rolf Kalbermatter
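For readers who have not seen the pattern, here is a minimal sketch of the "small TCP/IP monitoring server" idea in Python rather than LabVIEW. It is not the DataServer/Client example itself. The class names, the `latest_value` attribute, and the 8-byte big-endian double wire format are all assumptions made for illustration.

```python
import socket
import socketserver
import struct
import threading


class MonitorHandler(socketserver.BaseRequestHandler):
    """Sends the server's most recent value to each client that connects."""

    def handle(self):
        # Hypothetical wire format: one 8-byte big-endian double per connection.
        value = self.server.latest_value
        self.request.sendall(struct.pack(">d", value))


class MonitorServer(socketserver.ThreadingTCPServer):
    """TCP server that exposes a single value for remote monitoring."""

    allow_reuse_address = True
    daemon_threads = True
    latest_value = 0.0  # updated by the main application loop


def read_value(host, port):
    """Client side: connect, read exactly 8 bytes, decode the double."""
    with socket.create_connection((host, port), timeout=2.0) as sock:
        data = b""
        while len(data) < 8:
            chunk = sock.recv(8 - len(data))
            if not chunk:
                raise ConnectionError("server closed the connection early")
            data += chunk
    return struct.unpack(">d", data)[0]


def demo():
    """Start the server on a free local port, publish a value, read it back."""
    server = MonitorServer(("127.0.0.1", 0), MonitorHandler)
    port = server.server_address[1]
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        server.latest_value = 3.25  # the application would update this
        return read_value("127.0.0.1", port)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

The server thread runs alongside the main application, so monitoring clients can connect or disconnect without the application having to know about them.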

In my opinion, throwing in a write or a read DS function is a lot easier & quicker than writing a TCP/IP client server. That is the idea behind the DataSocket stuff.

Unless, you have the TCP/IP Client Server already written from another project..

N.

-

Looks like NI just swallowed another little fish!

-

NOTE that it doesn't save the VI(s) so the breakpoints will come back when the VI(s) are reloaded.

Thank you,

-Khalid

Hi Khalid,

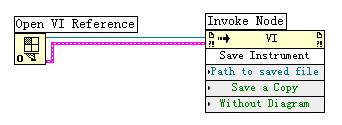

I realized that, and my utility will save the changed VI by invoking the "Save instrument" method.

Try it out!

Regards,

Neville

-

Doesn't hang, no error message. Just runs right through and never accesses the devices.

Interface is GPIB0 in MAX.

Very strange. I don't believe it has anything to do with the exe specifically. Try switching off one of the instruments and seeing if your code generates an error. It HAS to.

What does it do when it "runs right through"? If it runs through without generating any errors, I am wondering what your code actually does.

Neville.

-

Thanks. I am only using three devices besides the USB-GPIB interface. In my program, they are addressed as GPIB0::16, GPIB0::18, and GPIB0::19 in the VISA constant.

Seems simple enough to me, just can't get it to run the devices.

Are you getting any error message from your code? Where is it hanging?

Is your interface GPIB0 or is it GPIB1 (plugging or replugging the USB device might cause it to use another device number perhaps).

It has got to be something simple.. it's not a big deal to build/install/run exe's.

Neville.

-

First time creating a Standalone Executable with LabVIEW 7.1. Went through the steps in the app builder. Created the .exe and loaded it on the test PC. Needed RTE. Loaded that. The app ran but did not see the USB-GPIB interface. Loaded that. MAX now sees all of my devices. Program runs but does not access devices. Burned up 4 CDRs so far, what am I missing?

Thanks!

Is your VISA alias on the new machine correctly set up?

i.e. if you are accessing com port 1 as "COM1", then on the new system COM1 needs to be linked to Serial port#1.

Same concept applies to the VISA aliases. If your DMM is accessed as "DMM", define it to be the instrument you require.

Or else just use "GPIB::5" in the VISA control or constant.

Try to put an indicator on the front panel that shows you what the VISA string being used by the exe is.
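To make the resource-string point concrete, here is a small Python sketch that splits a GPIB resource string into board and address, so you can see at a glance whether the string points at GPIB0 or GPIB1. It does not talk to any hardware or to VISA. The function name `parse_gpib_resource` is hypothetical, and the only VISA behaviour it relies on is that an omitted board number means board 0.

```python
import re

# GPIB[board]::primary[::secondary][::INSTR]; board defaults to 0 when omitted.
_GPIB_PATTERN = re.compile(
    r"^GPIB(?P<board>\d*)::(?P<primary>\d+)"
    r"(?:::(?P<secondary>\d+))?(?:::INSTR)?$",
    re.IGNORECASE,
)


def parse_gpib_resource(resource):
    """Return the board, primary and secondary address of a GPIB resource string.

    Raises ValueError for anything that is not a literal GPIB resource string,
    for example a VISA alias such as "DMM" (aliases are resolved by VISA on
    each machine, so they cannot be decoded from the text alone).
    """
    match = _GPIB_PATTERN.match(resource.strip())
    if match is None:
        raise ValueError(f"not a literal GPIB resource string: {resource!r}")
    secondary = match.group("secondary")
    return {
        "board": int(match.group("board") or 0),
        "primary": int(match.group("primary")),
        "secondary": int(secondary) if secondary is not None else None,
    }


if __name__ == "__main__":
    for name in ("GPIB0::16", "GPIB::5", "GPIB1::18::INSTR"):
        print(name, parse_gpib_resource(name))
```

If the string shown by the exe decodes to a board number that MAX does not list on the target PC, that mismatch is the first thing to fix.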

Remember, once you have built the exe and installed the RTE, on the next iteration, simply copy over the new exe and it should run (you don't have to go through the install process again).

Neville.

-

Thanks guys, I wasn't sure if that property would overwrite an existing VI, but it seems to work.

Here is a utility that I wrote to get rid of breakpoints and then save the resulting project.

http://forums.lavausergroup.org/index.php?...findpost&p=7003

Enjoy!

Neville.

-

Try adding this VI to your Project folder in LabVIEW (c:\program files\national instruments\labview 7.1\project). Restart LabVIEW and you will have "Remove All Breakpoints..." in the Tools menu of your VI.

Hi Khalid, and Lavezza,

I took the liberty of adding Lavezza's code with a few mods to an existing tool that I had worked on with E. Blasberg.

It helps you to find used and unused VI's in a project, kill unused VI's that you select, and now, remove all breakpoints in the project.

Throw this llb in your /project folder and then restart LV. Use from the Tools menu (Find used & unused VI's)

Thanks to all who participated.

Here it is!

Neville

-

Hi All,

I am trying to write a utility that finds all breakpoints, removes them and then saves the changes.

Anybody know how I can programmatically save the changed VI?

I already got the breakpoint stuff working.

Thanks,

Neville.

-

So LabVIEW disappears from the task manager in less than a second or does the vi window just close?

It removes the vi's from memory fast on my machine and closes those, but it seems like LabVIEW is trying to cleanup loose ends with the operating system after that.

No, it looks like everything closed out pretty fast. Just a comment, publishing data (Real or booleans) is a more efficient (faster) way to go than the simple method of right-clicking on a control & publishing it to the DS server.

Neville.

-

You could use Mozilla Firefox for this. With tabbed browsing, you can open a complete folder of links in different tabs at once.

The NI Web server only supports Internet Explorer.

DataSocket is the way to go. I have communicated with about 16 RT targets with buffered data using DataSocket. Usually works well, though sometimes the DataSocket server inexplicably crashes at startup.

Neville.

-

I found that it didn't matter if the server is running or not. Attached is a vi that opens the server, the controls connect and then the server is closed and labview is exited. Note the time it takes for labview to close down on the task bar (or in task manager). On my system it takes about ten seconds. I can also remove the server open and close and it does the same thing.

Thanks

I tried your VI and everything works pretty fast. Close < 1s.

Do you have enough memory in your PC?

I have a laptop with 1Gig of RAM (just for comparison).

Neville.

-

You can also define a constant 2d array by putting an array object on your block diagram then placing an I32 number in it. Drag the index control downwards to make it 2d, then drag the edges of the array to increase the number of visible elements, then type in your values.

Neville.

-

Alright, last question on this topic, which I seem to have butchered. I am trying to incorporate a boolean control array along with the listbox, where each control corresponds to a specific index in the listbox. Ideally I would like the array to scroll along with the scrolling of the listbox, so the controls line up, so to speak. I didn't have any luck finding events or properties that can be manipulated.

Any suggestions? Attached is the listbox vi and the array of clusters vi.

Hi Rohit,

The listbox has a property whereby you can display a little symbol next to each entry. Instead of having a complicated series of moving boolean arrays, just display a "check" symbol for true and a "x" for false.

Right-click on an item in the listbox and select Visible Items > Symbols. You can also access this property through the property nodes.

And yes, you can add a scroll-bar to the array by right-clicking on the array>Advanced>Customize and then edit the array control to replace the up and down arrows with a scrollbar.

Neville.

-

Hello. Relative newcomer to LV 7.1. I have a series of cases that I select with a U16 ring control. In the interest of creating an international program, what I would like to be able to do is to have the user just click on a picture on my front panel and that would select the case that I need to run? I was able to do this with LabWindows, Is this easy to do in LV?

Thanks

Use the Text & Pict Ring control from the Ring & Enum palette.

Neville.

-

Thanks for the information. I often wonder why we are given programming tools then warned not to use them.

Thanks,

Randall

I think it helps to know the limitations of the tools you have at hand. The idea of the references etc. is to clean up your top-level diagram, and bury property manipulations in a sub-VI.. a VERY useful function, but it has its cost.

Neville.

-

Hi everybody, is there a way to pause a Timer Loop in LV 7.1 or stop and start it again? If someone knows how to do that please let me know.

Thanks

AV

What do you mean by Timer loop?

You can use the "timed loop" structure which can be stopped and restarted as well.

Neville.

-

Thank you for your reply!!

At the same time, I am thinking about creating an executable file instead of running a vi. I would like to know whether running an executable file will be faster than running a vi. (I mean increasing MHz now to the order of 10MHz. It is said that because the exe file does not need compilation anymore but the vi does, is it true?)

Ayumi

Yes, the executable will run slightly faster, since diagrams are not saved, and Front panels of lower level VI's are not saved, and debugging functions are disabled.

I doubt if you will get an order of magnitude speed improvement though...

The VI is also "compiled". Every time you save a VI, it does a quick compile.. you will see the compile message only if you have a large number of VI's you are saving at the same time.

N.

-

Neville,

Thank you for your reply!

1. I am trying to send out pulses at highest frequency possible. (5V TTL)

2. I am using LabVIEW 6.1

3. PCI-6534 and I use electric cable to connect the I/O board and the CRO to read the signal

4. As seen in the attached vi, you can change the frequency of the signal output; the highest frequency which can be achieved is 1.25MHz with this vi. I would like to know whether it is because of the speed of my computer or because of other factors.

Thank you very much for your attention!

Ayumi

Hi Ayumi,

After digging around in the NI Knowledge Base (www.ni.com/kb), I found some answers. Check out these links:

http://digital.ni.com/public.nsf/allkb/4FC...6256D8900563E45

http://digital.ni.com/public.nsf/websearch...6256b3a0062bff6

http://digital.ni.com/public.nsf/allkb/7DF...6256DF900785211

http://digital.ni.com/public.nsf/allkb/560...6256C5500711C2B

http://digital.ni.com/public.nsf/allkb/D41...6256FE8000F28FD

http://digital.ni.com/public.nsf/allkb/CE8...6256AE600663BD3

http://digital.ni.com/public.nsf/allkb/AB4...6256C010061EA7B

http://digital.ni.com/public.nsf/allkb/5D2...62565D7005DEA42

http://digital.ni.com/public.nsf/allkb/23F...6256CD70055095E

The bottom line is that the speed of your computer is also an issue when trying to do high-speed DIO. Another factor is the speed and efficiency of the DAQ driver. It looks like you are using "Traditional NI-DAQ". NI-DAQmx, the new driver, is far faster and uses a multi-threaded architecture, which might buy you further speed as well. But it can't be used with LV 6.1. You would have to upgrade to LV 7.0 or later (7.1.1 is the latest version).

Take a look at the speed benchmarks in the above links.

Hope this helps.

Neville.

-

-

If the time taken to execute the code is very small (<10ms), the overhead required to call the counters might affect your readings.

In this case,

1 Get start time from tick counter.

2 Perform a known number of operations in a loop

3 Get stop time from another tick counter

4 Calculate the avg. time for the operation using the #operations and the elapsed time.
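The four steps above can be sketched in text form as follows. This is Python rather than LabVIEW, with `time.perf_counter` standing in for the tick counter; the function names and the sample operation are made up for illustration.

```python
import time


def average_time_per_call(operation, iterations=100_000):
    """Average seconds per call of `operation`, measured over many iterations.

    Timing the whole loop once keeps the cost of reading the clock from
    dominating the measurement when a single call is very fast.
    """
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    start = time.perf_counter()          # 1. get start time
    for _ in range(iterations):          # 2. perform a known number of operations
        operation()
    stop = time.perf_counter()           # 3. get stop time
    return (stop - start) / iterations   # 4. elapsed time / number of operations


def sample_operation():
    """Placeholder workload; replace with the code you want to time."""
    return sum(range(50))


if __name__ == "__main__":
    avg = average_time_per_call(sample_operation)
    print(f"average: {avg * 1e6:.3f} us per call")
```

The result still includes the loop overhead itself, so for very small operations it is an upper bound rather than an exact figure.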

Neville.

-

Thank you for your reply!

Now I am trying to make an output signal at the highest possible frequency, and the result was 125000Hz using the example named Const Pattern Output.vi (available with LabVIEW). Over 125000Hz it shows the following error message:

"Because of system and/or bus-bandwidth limitations, the driver could not write data fast enough to keep up with the device throughput; the onboard device reported an underflow error. This error may be returned erroneously when an overrun has occurred."

But the PCI-6534 should have a maximum of 20MHz. Is it limited by Windows?? Will it be better if I use Linux instead?

Thank you for your attention!

Ayumi

Ayumi,

can you PLEASE provide more complete information. It is impossible to follow what you are doing with little bits and pieces.

1 Explain your application

2 State your Software Version (including DAQ driver)

3 Describe your hardware & setup (triggering etc.)

4 Post your example code

5 State your exact problem

I cannot find your particular example in my LV version 7.1.1.

Neville.

Naming a plot on waveform graph's plot legend

in LabVIEW General

Posted

Hi Abe,

Use the "active plot" property to select which plot you are working with, then change the name of that plot. Active plot just accepts plots numbered 0,1,... etc.

Neville.