mzu

Members · 55 posts

Everything posted by mzu

-

I think I'll just post the sequence of actions that recreates the described situation:

1. Launch LabVIEW.
2. File->New Project.
3. RMB->New Class; accept the default name ("Class 1" in my case).
4. My Computer->RMB->New Simulation Subsystem.
5. Drag 2 instances of the class to the front panel of "Subsystem 1".
6. Change one of them to an indicator.
7. Connect them on the BD.
8. Icon->RMB->Show Connector.
9. Link the control with the topmost left terminal.
10. Link the indicator with the topmost right terminal.
11. In the Project Explorer, move the subsystem inside the class.
12. Input terminal->RMB->This Connection Is->Dynamic Dispatch Input.
13. Output terminal->RMB->This Connection Is->Dynamic Dispatch Output.
14. LabVIEW thinks for 2 seconds.
15. Look at the Run arrow: it becomes broken.

Can anybody confirm? As soon as you change the connection back to, say, Required or Recommended and recompile the VI, it starts to work normally. If you close the project, LabVIEW shuts down by itself without going to the start dialog.

-

Dear audience, I am trying to design an LVOOP wrapper around a simulation (a Control & Simulation Loop). The idea is to have a base class that defines the interface to a number of simulations (basic actions, etc.), and derived classes that define the particular simulation to execute. I wanted the Control & Simulation Loop and some auxiliary code to be part of the base class implementation, so that I can set up the simulation parameters in the base class methods. So I want to define a dynamic dispatch simulation subsystem. As soon as I define the input and output terminals as "dynamic dispatch", the Run arrow breaks with "Error compiling a VI". I am using LabVIEW 2010f4, 32-bit. Is it a feature or a bug? Any suggested workarounds?
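LVOOP dynamic dispatch plays the same role as a table of function pointers in C. A rough C analogy of the intended design, assuming a hypothetical `Simulation` type whose base part owns the loop and its parameters while each "derived class" supplies only the model step (none of these names come from LabVIEW):

```c
#include <stddef.h>

/* Hypothetical C analogy of the LVOOP design, not LabVIEW code. */
typedef struct Simulation Simulation;

/* The "base class interface": one dynamic-dispatch slot per method. */
typedef struct {
    /* Returns the new state after one time step of length dt. */
    double (*step)(const Simulation *self, double state, double dt);
} SimulationVTable;

struct Simulation {
    const SimulationVTable *vt; /* selects the "derived class" */
    double dt;                  /* base-class simulation parameters */
    size_t n_steps;
};

/* Base-class method: the loop is shared, only step() is dispatched. */
static double simulation_run(const Simulation *s, double initial_state)
{
    double state = initial_state;
    for (size_t i = 0; i < s->n_steps; ++i)
        state = s->vt->step(s, state, s->dt);
    return state;
}

/* One "derived class": a constant-rate source, dx/dt = 1. */
static double ramp_step(const Simulation *self, double state, double dt)
{
    (void)self; /* this model needs no per-instance data */
    return state + dt;
}

static const SimulationVTable RAMP_VT = { ramp_step };
```

In this analogy `simulation_run` never changes; adding a new simulation means adding one more `step` function and its table.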

-

Load nd2 (Nikon) file to image assistant / vision functions

mzu replied to mzu's topic in Machine Vision and Imaging

Those were 30 movies, ~1 GB each. Actually, there is a solution: Nikon has published an SDK at http://www.nisdk.net/ (registration and verification required). They give you a DLL that you can use in your projects, and they also supply the manual.

-

There is an analytical solution for that. Just choose a proper coordinate system: origin at the midpoint of AB, x axis aligned with the vector (xb-xa, yb-ya). In that system your intersection points have x = 0 and y = ±sqrt(r^2 - (|AB|/2)^2). Rotating and shifting the coordinate system back gives what you need.

-

Load nd2 (Nikon) file to image assistant / vision functions

mzu replied to mzu's topic in Machine Vision and Imaging

Justin, thank you for your reply. I tried ImageMagick. Unfortunately, identify.exe tells me "no decode delegate for this image format", which probably means the format is not supported. I checked inside the ImageJ ND2 reader plugin (http://rsbweb.nih.go...nd2-reader.html). It all comes down to an interface with a Windows DLL, nd2sdkwrapperi6d.dll. This DLL is written using JNI to provide some methods for one of the Java classes inside the ImageJ plugin. My limited knowledge of JNI tells me that this DLL will be callable only from within the JVM (the first parameter of each JNI-interfaced function is a pointer to an internal JVM structure). The easiest way around all that, as I see it now, is to write a Java wrapper around the existing ImageJ plugin, launch a separate JVM via System Exec, and then, say, establish TCP/IP communication over localhost to get the image data out of it. For the limited number of images (~30) that I have, it may be overkill... Thank you for the offer to send you a file, but each of my files is about 380 MB, not an easy file to send...

-

Is there any easy way to open nd2 files (Nikon Elements, microscopy) in NI Vision Assistant, or to use them with any of the Vision functions? Currently I open them in ImageJ, save them as a series of TIFFs, and only then open them with LabVIEW. Before I start writing my own importer, I wonder whether any toolkit does that. A Google search for "nd2 LabVIEW" produces nothing... (xpost: http://forums.ni.com/t5/Machine-Vision/Any-easy-way-to-load-nd2-files-Nikon-microscopy-to-Vision/td-p/1392658, http://www.labviewportal.eu/viewtopic.php?f=129&t=2803)

-

Unwrap Phase.vi may be your solution.

-

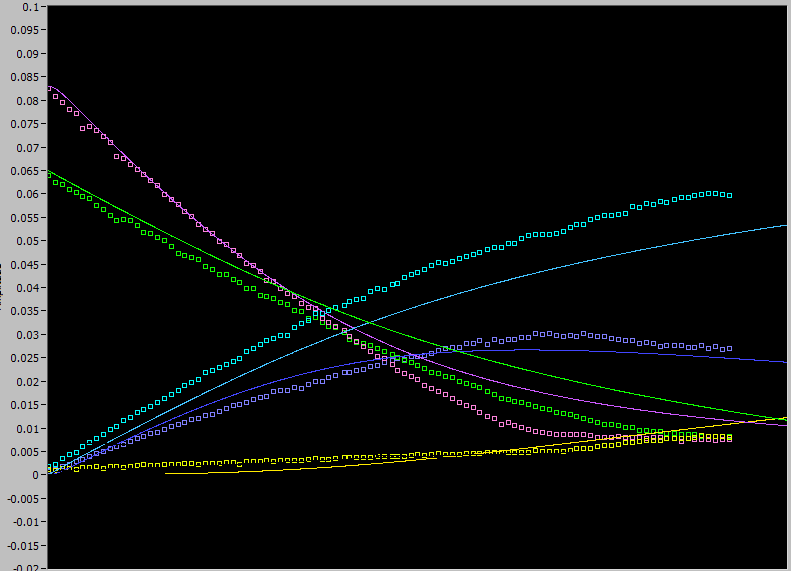

There is a model of a physical process formalized in the LabVIEW simulation toolkit. It takes a set of parameters and produces time dependencies of observables. I have experimental data, the same time dependencies of the observables, but measured on a real system. I would like to find the set of parameters for which the model curves fit the experimental ones. I have an idea of the initial values of the parameters. A sample of the data is shown in the figure: points are experimental data, curves are the results of manual fitting using the above-mentioned model. Had there been one curve, it would have been easy: I would have used NonlinearCurveFit.vi and supplied it with a reference to a model-curve-generating VI. What can I do with multiple curves? I would really like to avoid porting the fitting algorithm from Numerical Recipes. I would also like to avoid writing a wrapper (OriginC <-> LabVIEW) to do a linked fit in OriginLab Origin. I can use Matlab, of course, but it would require writing another wrapper. Is there a quick way of doing it without leaving LabVIEW? (xpost: http://forums.ni.com/ni/board/message?board.id=170&thread.id=472607) (xpost: http://www.labviewportal.eu/viewtopic.php?f=87&t=1794)

-

It looks like it is complaining about the absence of Traditional NI-DAQ. Figure out the proper version of Traditional NI-DAQ from here: http://digital.ni.com/public.nsf/allkb/97D574BB1D1EEC918625708100596848, then download and install what you need.

-

The other option would be to use Microsoft Visual Studio (VS) Automation, which is able to parse C and C++ at the source-code level. I used it with some degree of success in my CIN Wizard for VS.2003 some time ago. Since LabVIEW is becoming more and more Microsoft-centric...

-

Or to open a VI from another location ...

-

From what I noticed, it happens when the current working directory of any program is set to the USB drive. The Open File dialog apparently does change it. I just open some file from my hard drive...

-

Tomi, thank you for this awesome piece of code. I am trying to compare this approach with a "conventional" one, where I wrap the "Run VI" method of VI Server in a base LVThread class and implement all other parallel tasks as children of LVThread. I really like your approach:

1. It works in the Run-Time Engine. I do not have to specify the front panel of every child of LVThread should I want to pass arguments to the tasks.
2. It works even in the Mobile Module: there is no need to use "Get LV Class Path", which is unavailable for Mobile.

However, you cannot asynchronously stop (Abort VI) a hung worker, can you? I understand that "Abort VI" should be used only in limited circumstances, but do you see any way to overcome that?

-

If not an RTB, then a Picture indicator? Or a Picture indicator with an XControl wrapped around it?

-

Gary, this is pretty much the same deal. In case you need a CIN (using LabVIEW older than 8.2, etc.), you're welcome to use my CIN Wizard for VS.2003: http://code.google.c...dvs2003labview/

-

For a wonderful example of when C is much faster, see this forum topic.

-

According to the LabVIEW documentation: "Assuming the underlying code is the same, the calling speed is the same whether you use a Call Library Function Node or a CIN." After version 8.2, there is almost no incentive to write a CIN instead of a DLL. There was a beautiful explanation as to why: http://expressionflow.com/2007/05/09/external-code-in-labview-part1-historical-overview/, http://expressionflow.com/2007/05/19/external-code-in-labview-part2-comparison-between-shared-libraries-and-cins (for some reason the links did not paste correctly).
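To illustrate the DLL route: a DLL function is just an exported C function with no LabVIEW-specific entry points. A minimal sketch, assuming the Call Library Function Node passes the array as an "Array Data Pointer" and the length as an I32 (the function name is hypothetical):

```c
#include <stdint.h>

/* Only Windows needs an explicit export attribute. */
#if defined(_WIN32)
#define DLL_EXPORT __declspec(dllexport)
#else
#define DLL_EXPORT
#endif

/* Plain C function: sums n doubles starting at x. Nothing in it knows
   about LabVIEW; the node configuration describes how to call it. */
DLL_EXPORT double sum_f64(const double *x, int32_t n)
{
    double acc = 0.0;
    for (int32_t i = 0; i < n; ++i)
        acc += x[i];
    return acc;
}
```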

-

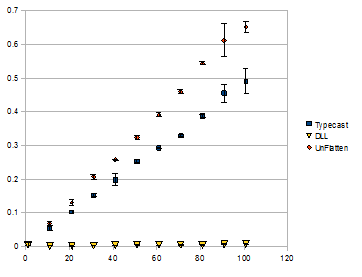

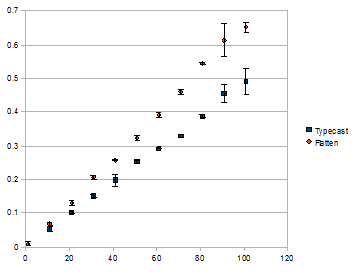

Adam, wouldn't all those checks be independent of the array size? For example, checking that the length equals the size of the area allocated for the handle, etc. Both the Type Cast primitive and the Unflatten From String primitive know the type at "compile time" (whatever that means for LabVIEW). I get your point. What kind of conversion, then: only a byte swap? Copying? Something else?

-

I agree, but there is a slight linear dependence. Why? Maybe it is the parameter passing inside the VI that contains the DLL call; maybe it is overhead of the testing method.

-

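rolfk, I made a DLL according to your recipe. Please find the attached VI and DLL. It is 2 orders of magnitude faster on my computer. The code inside the DLL is quite simple:

```c
#include "extcode.h" /* LabVIEW header: LStrHandle, int32, int16 */

/* I16 array handle as generated by LabVIEW for the Call Library Function
   Node (the element field name may differ in the generated header). */
typedef struct {
    int32 dimSize;
    int16 Numeric[1];
} TD1;
typedef TD1 **TD1Hdl;

/* Swaps the string handle and the I16 array handle, so no data is copied.
   Both types start with an int32 count, so after the swap the array's
   dimSize holds the string's byte count and must be halved. */
void TypeCast(LStrHandle *arg1, TD1Hdl *arg2)
{
    void *tmp = *arg1;
    *arg1 = (LStrHandle)*arg2; /* swap the handles */
    *arg2 = (TD1Hdl)tmp;
    (**arg2)->dimSize >>= 1;   /* adjust array size: bytes -> I16 elements */
}
```

DLLTypecast.zip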

-

Thank you, Rolf; the issue is even more complex. Checking the string length and the other checks are O(1) operations. What Unflatten From String does is O(N), where N is the amount of data. But I guess we will never know unless the LabVIEW source code leaks out someday...

-

Thank you for your reply. This is exactly what I need: a C-style typecast. In LabVIEW this kind of typecast swaps bytes (probably because in early versions of LabVIEW flattened data was always big-endian). So I thought that using a direct, specific operation with an explicit instruction "do not swap bytes" would save time. Nope. Also, note that the time difference between those 2 approaches is O(number of bytes), so "Unflatten From String" actually does some operation on every byte (word) of the string it operates on.
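What a "C-style typecast" means here, as a C sketch: the bytes are reinterpreted with a single copy and no per-element work. It yields the right values for little-endian data only on a little-endian host such as x86; the function name is illustrative.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* "C-style typecast": reinterpret the raw bytes as int16 values with one
   memcpy and no per-element byte swapping. Correct for little-endian data
   only when the host is little-endian (x86). A trailing odd byte is
   ignored. Returns the number of values written to out. */
static size_t bytes_as_i16_native(const uint8_t *bytes, size_t nbytes, int16_t *out)
{
    size_t n = nbytes / 2;
    memcpy(out, bytes, n * sizeof *out);
    return n;
}
```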

-

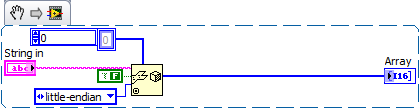

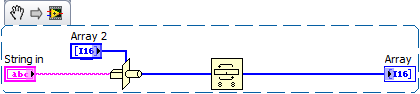

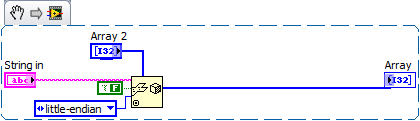

Dear audience, there is a certain very long string (1-100 MB). It contains 16-bit integers in little-endian format. I need to convert them to a proper I16 array. I am using Windows on x86. Two solutions come to my mind:

1. Use Type Cast to an I16 array and then swap the bytes, since Type Cast assumes big-endian order.
2. Use Unflatten From String, specifying the right byte order.

In the actual BDs there is a constant instead of the control for array2 (this is what LV2009 changed when I created the snippet). One might think that avoiding the extra pair of byte swaps in the second case would make it faster. Not really; see the attached graph. It has the size of the string along the X axis (in MB) and the time required to do the conversion along the Y axis (in ms). The dependence is quite linear, so something extra is done on each byte of the data in the case of Unflatten From String. What is it? Why is number 2 slower? It does not depend on the LabVIEW version (8.6 vs 2009), nor on 32/64 bit. (crosspost from http://www.labviewpo...t=1367&start=0)
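The two strategies written as plain C loops for comparison (function names are illustrative). Both are a single pass over the data, so both cost O(N); the sketch says nothing about what LabVIEW does internally, it only shows that option 1's big-endian read plus byte swap gives the same result as option 2's direct little-endian read.

```c
#include <stddef.h>
#include <stdint.h>

static uint16_t swap16(uint16_t v)
{
    return (uint16_t)((v << 8) | (v >> 8));
}

/* Option 1: read each byte pair as big-endian (what Type Cast assumes),
   then swap the two bytes of every value. */
static void decode_be_then_swap(const uint8_t *b, size_t n, int16_t *out)
{
    for (size_t i = 0; i < n; ++i) {
        uint16_t be = (uint16_t)((b[2 * i] << 8) | b[2 * i + 1]);
        out[i] = (int16_t)swap16(be);  /* two's complement reinterpretation */
    }
}

/* Option 2: read each byte pair directly as little-endian. */
static void decode_le(const uint8_t *b, size_t n, int16_t *out)
{
    for (size_t i = 0; i < n; ++i)
        out[i] = (int16_t)(uint16_t)(b[2 * i] | (b[2 * i + 1] << 8));
}
```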

-

For one of my projects I did this on Windows XP with the /3GB switch. The NI article does not mention one thing: you also have to modify labview.exe (google for IMAGE_FILE_LARGE_ADDRESS_AWARE) to let it access the whole memory. (I am talking about LabVIEW 7.1.1.) Yes, I was able to get more memory than I was used to. However, certain drivers became unstable, and I had to disable some built-in devices on the motherboard as well as change add-on cards.
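For the labview.exe part: the flag lives in the Characteristics field of the COFF file header of the PE image. Microsoft's `editbin /LARGEADDRESSAWARE` is the usual tool for setting it. The C sketch below only shows where the bit sits, working on an in-memory copy of the file; reading and writing the file (and backing it up first) are left out, and the function names are illustrative.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define IMAGE_FILE_LARGE_ADDRESS_AWARE 0x0020u

static uint32_t read_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Offset of the COFF Characteristics field, or 0 if buf is not a PE image. */
static size_t pe_characteristics_offset(const uint8_t *buf, size_t len)
{
    size_t pe;
    if (len < 0x40 || buf[0] != 'M' || buf[1] != 'Z')
        return 0;                        /* no DOS "MZ" header */
    pe = read_le32(buf + 0x3C);          /* e_lfanew: offset of "PE\0\0" */
    if (pe > len || len - pe < 24 || memcmp(buf + pe, "PE\0\0", 4) != 0)
        return 0;
    /* 4-byte signature, then Machine(2) NumberOfSections(2) TimeDateStamp(4)
       PointerToSymbolTable(4) NumberOfSymbols(4) SizeOfOptionalHeader(2) */
    return pe + 4 + 18;
}

/* Sets the flag in place. Returns 1 if it was already set, 0 if it was
   just set, -1 if buf does not look like a PE image. */
static int set_large_address_aware(uint8_t *buf, size_t len)
{
    size_t off = pe_characteristics_offset(buf, len);
    uint16_t ch;
    if (off == 0)
        return -1;
    ch = (uint16_t)(buf[off] | (buf[off + 1] << 8));
    if (ch & IMAGE_FILE_LARGE_ADDRESS_AWARE)
        return 1;
    ch |= IMAGE_FILE_LARGE_ADDRESS_AWARE;
    buf[off] = (uint8_t)(ch & 0xFFu);
    buf[off + 1] = (uint8_t)(ch >> 8);
    return 0;
}
```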