eaolson

Posts: 261

Posts posted by eaolson

-


QUOTE(Michael_Aivaliotis @ Feb 15 2007, 04:13 PM)

Don't know if this is a bug or a feature request: Can the List Folder function accept multiple wildcard patterns?

Edit: I know the OpenG List Folder VI handles this, I was just wondering why the NI one doesn't.

I'd just like to point out that the semicolon is a valid character in a filename, at least in Windows. So this would actually reduce the functionality of the list folder function. It seems to me it would ideally accept an array of patterns, rather than a single delimited string.
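A minimal Python sketch of the delimiter problem. Python's `fnmatch` stands in for List Folder's pattern matching, and the file names are invented for illustration. Keeping each pattern as its own array element lets a semicolon stay literal; splitting a delimited string breaks any pattern that contains one.

```python
from fnmatch import fnmatchcase


def matches_any(name: str, patterns: list) -> bool:
    # True if the name matches at least one wildcard pattern.
    return any(fnmatchcase(name, p) for p in patterns)


def filter_names(names: list, patterns: list) -> list:
    return [n for n in names if matches_any(n, patterns)]


names = ["data;2007.txt", "data.txt", "notes.log", "image.png"]

# Array of patterns: each pattern is kept whole, so ';' is just a character.
print(filter_names(names, ["data;*.txt", "*.log"]))
# ['data;2007.txt', 'notes.log']

# Semicolon-delimited string: splitting turns "data;*.txt" into "data" and "*.txt".
print(filter_names(names, "data;*.txt;*.log".split(";")))
# ['data;2007.txt', 'data.txt', 'notes.log']
```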

-

The reason the typecast solution works is that property/invoke nodes don't do type-checking at run time (they rely on LabVIEW's type checking of wires in the editor); they only check to see if the input object has the properties/methods that are being accessed/called. And the StringConstant VI Server object does have a Text attribute, but it simply hasn't been exposed yet in VI Server. This is akin to duck typing: if a StringConstant has a Text attribute, then reading the Text attribute should work (even if the StringConstant is masquerading as a String control, as long as nobody checks to make sure that it is really a String).

Interesting. I've only fiddled with scripting a little bit. It looks like the pre-cast StringConstant refnum and the post-cast String refnum are the same. So does the Type Cast primitive manipulate the actual data (i.e., the thing the refnum is pointing to) in any way, or does it just cause the block diagram editor to interpret the data type of the wire differently?

It is possible to get yourself in trouble with this trick. For example, a String has the Default Value property, while a StringConstant doesn't. Trying to modify the Default Value of the StringConstant this way throws error 1058, "Specified property not found" at run time.
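The duck-typing behavior can be sketched in Python as a rough analogy. The two classes, the `property_node_read`/`property_node_write` helpers, and the `PropertyNotFound` exception (standing in for error 1058) are hypothetical models, not VI Server APIs. Python's `typing.cast` plays the role of Type Cast here: it returns the very same object and only changes what a static checker believes its type is.

```python
from typing import cast


class PropertyNotFound(Exception):
    """Stand-in for LabVIEW error 1058, "Specified property not found"."""


class StringConstant:
    # Hypothetical model: a constant has Text but no DefaultValue.
    def __init__(self, text: str):
        self.Text = text


class StringControl:
    # Hypothetical model: a control has both Text and DefaultValue.
    def __init__(self, text: str, default: str = ""):
        self.Text = text
        self.DefaultValue = default


def property_node_read(obj, name: str):
    # Checks only that the attribute exists at run time, never the class.
    if not hasattr(obj, name):
        raise PropertyNotFound(name)
    return getattr(obj, name)


def property_node_write(obj, name: str, value) -> None:
    if not hasattr(obj, name):
        raise PropertyNotFound(name)
    setattr(obj, name, value)


const = StringConstant("hello")
as_string = cast(StringControl, const)  # same object, different declared type

print(property_node_read(as_string, "Text"))  # hello
try:
    property_node_write(as_string, "DefaultValue", "x")
except PropertyNotFound as err:
    print("error 1058:", err)  # error 1058: DefaultValue
```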

-

The color picker was given the ability to restrict colors to the 8-bit resolution. We toyed with decimating the colors to show which ones were discrete, but it just looked odd.

I noticed the whole "Icons have a limited palette" thing a few months ago, and found it incredibly frustrating. I selected my color, went back to the icon editor, started using it, and my icon just didn't look right. So I went back to the color picker, thinking I'd grabbed the wrong color, started using it again, and it still didn't look right. I finally managed to find something that looked acceptable, and gave up trying to figure out what was going on. The frustrating part was that the color picker dangles all those colors in front of you, but whisks them away when you get back to the editor, without mentioning that it had changed your selection. It's not mentioned in the documentation, either.

Why the 256ish color limit in the icons, anyway? Does it just harken back to archaic times when we had 8-bit monitors and, darn it, we were glad to have that?

-

"Note The front panel must include a National Instruments copyright notice. Refer to the National Instruments Software License Agreement ... about the requirements for any About dialog box you create for your LabVIEW application."

That sounds like NI is trying to assert ownership of the copyright of any compiled VI. Surely not?

-

I have a hunch that the 8-bit colorspace LabVIEW is using might be some kind of very standard one, but I don't know enough about this kind of thing to just come out and claim that. And then for icons they're just using a further subset of it.

It looks like they're pretty much using the web-safe, 216-color palette. There's a fantastic representation of it here.

Which makes me think: would it be possible to exchange the color picker in the icon editor with something more useful, much like how Mike Balla's Icon Editor can be dropped into the right location to use instead of the default one? There's not a lot of reason to have a color picker with millions of colors if it only lets you use a few of them.
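Assuming the icon palette really is the web-safe set (the post only says "pretty much"), this Python sketch shows why a picked color can silently change. Each channel is limited to six levels, 0x00, 0x33, 0x66, 0x99, 0xCC and 0xFF, giving 6 x 6 x 6 = 216 colors, and anything else has to be snapped to a nearby level. Nearest-level rounding per channel is an assumption; LabVIEW's actual mapping may differ.

```python
WEB_SAFE_LEVELS = (0x00, 0x33, 0x66, 0x99, 0xCC, 0xFF)


def nearest_level(channel: int) -> int:
    # Pick the web-safe level closest to an 8-bit channel value (0-255).
    return min(WEB_SAFE_LEVELS, key=lambda level: abs(level - channel))


def to_web_safe(rgb: tuple) -> tuple:
    return tuple(nearest_level(c) for c in rgb)


palette = {(r, g, b) for r in WEB_SAFE_LEVELS
           for g in WEB_SAFE_LEVELS for b in WEB_SAFE_LEVELS}
print(len(palette))  # 216

picked = (0x12, 0x80, 0xF0)      # what the color picker offered
shown = to_web_safe(picked)      # what the icon would actually get
print("#%02X%02X%02X" % shown)   # #0099FF
```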

-

Well, you don't have to worry, of course, but if you choose a license that is not compatible with most mainstream open-source licenses, it prevents people who want to use such a license for their own software from making use of your VIs. Of course that is your decision and your call to make, but thinking about these issues is not entirely superfluous AFAICS.

I'm no lawyer, but I don't really see what the problem is. Creative Commons Attribution 2.5 allows you to (a) copy and distribute the work and (b) make derivative works. In exchange you (a) attribute the work and (b) distribute the CC license. The BSD license basically allows you to do the same with the same restrictions. Functionally, they don't seem to be significantly different.

One thing I've never understood is where a derivative work starts and stops. If I take, say, one of the OpenG string VIs, and modify the functionality a bit and rearrange the terminals, clearly it's a derivative work and it falls under the OpenG BSD license. If I then call that modified OpenG VI in an original application to, say, fly the Space Shuttle, does that mean my application as a whole is a derivative work or not? Under the old LGPL license, it seems it would be if the application and all its sub-VIs were compiled to an EXE. (I assume that's why the transition to BSD is under way.) But LabVIEW seems to sort of compile on the fly, so would it be a derivative work when it's running under the development environment? When it's loaded into memory but not running? When it's just sitting on the disk?

-

That's an excellent idea - I wonder how difficult it would be to implement the same with a joystick for rough motion control?

I doubt it would be too difficult, but they're slightly different. If you think about moving something with a mouse, the intuitive way of thinking about it (in my opinion, your brain may vary) is that the object moves in the same direction as the mouse movement and when the mouse stops moving, the object stops moving. Speed of the object should be related to the speed of the mouse movement. For a joystick, the tilt direction controls the direction of the object's motion, and as you tilt the joystick further it moves faster and faster. If you push the joystick all the way forward, the object moves and keeps moving until the joystick goes back to its center position. Basically, a mouse controls position; a joystick controls velocity.
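The position-versus-velocity distinction can be sketched in a few lines of Python. The gain, maximum speed, and time step are arbitrary illustrative values, not anything taken from the author's VIs.

```python
def mouse_step(position: float, mouse_delta: float, gain: float = 1.0) -> float:
    # Position control: the object moves only while the mouse moves.
    return position + gain * mouse_delta


def joystick_step(position: float, tilt: float,
                  max_speed: float = 10.0, dt: float = 0.1) -> float:
    # Velocity (rate) control: tilt in [-1, 1] sets the speed, so a held
    # tilt keeps the object moving until the stick returns to center.
    tilt = max(-1.0, min(1.0, tilt))
    return position + max_speed * tilt * dt


def run(step, inputs, start: float = 0.0) -> list:
    position, trace = start, []
    for u in inputs:
        position = step(position, u)
        trace.append(position)
    return trace


print(run(mouse_step, [5, 5, 0, 0]))     # [5.0, 10.0, 10.0, 10.0]
print(run(joystick_step, [1, 1, 1, 0]))  # [1.0, 2.0, 3.0, 3.0]
```

Holding the mouse still (a delta of 0) freezes the object, while holding the stick at full tilt keeps adding distance every time step.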

The nice thing about the serial mouse is that the protocol is simple, well-documented, and implemented by a number of manufacturers. There's a three-button one, as well. I don't think the same is true for joysticks.
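The post doesn't say which serial mouse protocol the VIs decode. Assuming the common Microsoft two-button format (typically 1200 baud, 7 data bits, no parity, 1 stop bit), each report is three bytes. The first byte has bit 6 set as a sync marker, plus the two button bits and the top two bits of each movement value; the next two bytes carry the low six bits of X and Y. A Python sketch of a decoder that resynchronizes on that marker:

```python
def to_signed8(value: int) -> int:
    # Movement values are 8-bit two's complement.
    return value - 256 if value & 0x80 else value


def decode_packets(stream: bytes):
    """Yield (left, right, dx, dy) tuples from a raw serial byte stream."""
    packet = []
    for byte in stream:
        if byte & 0x40:          # sync bit set: first byte of a new packet
            packet = [byte]
        elif packet:             # continuation byte of a packet in progress
            packet.append(byte)
        # Bytes seen before any sync byte, or extra bytes after a complete
        # packet, leave `packet` empty and are skipped.
        if len(packet) == 3:
            b1, b2, b3 = packet
            left = bool(b1 & 0x20)
            right = bool(b1 & 0x10)
            dx = to_signed8(((b1 & 0x03) << 6) | (b2 & 0x3F))
            dy = to_signed8(((b1 & 0x0C) << 4) | (b3 & 0x3F))
            yield left, right, dx, dy
            packet = []


# A stray byte, then one packet: left button down, dx = +5, dy = -3.
print(list(decode_packets(bytes([0x12, 0x6C, 0x05, 0x3D]))))
# [(True, False, 5, -3)]
```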

-

Just for clarity, could you provide a simple way to do this? I.e., can you just plug the mouse into a serial port after the computer is booted? I haven't messed with serial mice in a long time (can you still buy one?), so my knowledge here is limited. But an example of how to do this important stipulation of your code would be in order so people can make use of it.

Plugging the mouse in after the computer boots is exactly how I do it. I've always had the vague impression that hot-swapping peripherals was not such a great idea (USB being an exception), but have not had any problems doing it to date.

The reason I wrote these VIs is because I wanted some user input to a program running on a PXI system running LabVIEW Real-Time. Since there is neither mouse nor USB support, this was the best solution I could come up with. There, of course, leaving the mouse/trackball attached to the COM port all the time is just fine.

Serial mice are still out there, if barebones. I picked up a $4 one for testing. Most industrial trackballs seem to have a serial version available, as well.

This is my first contribution to the CR, and pretty much the first thing I've released for "public consumption." Comments and suggestions would be greatly welcomed.

-


Is there something specific that you needed from the website?

No, I only noticed it because I clicked on the wrong bookmark by accident. I guess I just wanted confirmation that DataAct had not gone out of business and that, if I ever needed a fresh installer for dqGOOP, I wasn't up a creek.

-

I've been unable to get to the DataAct website for a couple of days now. The domain name hasn't expired, but the DNS doesn't resolve. I'm hoping this is temporary. Does anyone know?

-

HUGE differences: a pressure cooker is NOT expected to explode... and leaves no microwave background radiation.

Ah, but what if you put the pressure cooker in the microwave?

-

The explanation with the dematerialization like the SF movies is not possible because of Heisenberg

-

If I recall correctly, closing the FP will terminate the executable if it is the main VI.

Ton

I did not realize this method of starting a background application did not work if it was compiled into an executable. Using a launcher (separate VI that opens a reference, runs the VI with Wait Until Done = F and Auto Dispose Ref = T, then closes itself) also doesn't seem to work with a compiled application.

This is a bit tangential to the original topic, but is there any way to have an executable application running in the background and not visible without using the FP.State property? (I'm still trapped in the land of 7.1.1, so I don't have access to it.)

-

Yes. I strongly recommend never using the FP.Open property. It is "old style" and the FP.State was added to supplant it. Passing "false" to FP.Open is an ambiguous situation. If the window is already hidden and you pass false, did you intend for us to close the window entirely? This is just one of many ambiguous situations. The behavior in all these cases is well defined, but in all of these cases you have to figure out what decision LV R&D made. The old style still exists because of the huge number of toolkits that use it.

I asked because RagingGoblin posted a link to an NI example using FP.Open, but the image he attached used FP.State. I wasn't sure if there was a specific reason for the discrepancy, or if he was just taking advantage of a version 8 feature that wasn't available when that was written.

(I swear I wrote this yesterday, but it's not here today.)

-

Is there much of a difference between hiding the application by setting the FP.State property to Hidden and closing the front panel by setting the FP.Open property to False?

-

Ah, looks like this was fixed in 8.20.

-

I posted this over at the NI forums, but thought I would bring it up here as well.

The configuration file VIs Read Key (Path) and Write Key (Path) don't seem to work as expected (at least not as I was expecting them to) on an RT target.

When working on my WinXP PC with these two VIs, paths are translated into what appears to be a device-independent format before being written to disk. The path C:\dir\file.txt becomes /C/dir/file.txt when writing, and vice versa when reading. On my RT target, however, that same path is written to disk as C:\dir\file.txt, unchanged from the native format.

The translation of a native path to and from the device-independent format appears to be the responsibility of Specific Path to Common Path and Common Path to Specific Path. These both use the App.TargetOS property to determine the operating system. In the case structures of these two VIs, however, there is no case for PharLap or RTX. (My RT hardware is running PharLap; I don't know enough about RTX to comment.) This means that the results of String to Path or Path to String are used without translating between the device-independent format and the native path format.

This isn't a problem if you create a configuration file on one machine and use it on that same machine. I noticed this only when transferring a config file from my PC to the RT target, where the target would not open the file paths it loaded from the configuration file.

This occurs on 7.1.1 and 8.0. I don't have 8.20 to see if it happens there, too. It also affects the OpenG variant configuration functions, because they eventually call the LabVIEW configuration VIs.
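The translation described in this post can be modeled in Python. The function names mirror the LabVIEW VIs but are hypothetical re-implementations, and the `target_os` strings are assumptions rather than actual App.TargetOS values. Because only "Windows" gets a case, a path read on a target reporting "PharLap" falls through unchanged, which is the failure described above.

```python
import ntpath

# OS names that get the Windows-style conversion. Per the post, the real VIs
# have no PharLap case; adding "PharLap" here would model the obvious fix.
WINDOWS_LIKE = {"Windows"}


def specific_to_common(native: str, target_os: str) -> str:
    """Convert C:\\dir\\file.txt to /C/dir/file.txt on Windows-like targets."""
    if target_os in WINDOWS_LIKE:
        drive, rest = ntpath.splitdrive(native)  # ("C:", "\\dir\\file.txt")
        parts = [p for p in rest.split("\\") if p]
        return "/" + "/".join([drive.rstrip(":")] + parts)
    return native  # no matching case: passed through untranslated


def common_to_specific(common: str, target_os: str) -> str:
    """Convert /C/dir/file.txt to C:\\dir\\file.txt on Windows-like targets."""
    if target_os in WINDOWS_LIKE:
        drive, *parts = common.strip("/").split("/")
        return drive + ":\\" + "\\".join(parts)
    return common


written_on_pc = specific_to_common("C:\\dir\\file.txt", "Windows")
print(written_on_pc)                                 # /C/dir/file.txt
print(common_to_specific(written_on_pc, "Windows"))  # C:\dir\file.txt
print(common_to_specific(written_on_pc, "PharLap"))  # /C/dir/file.txt (unusable)
```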

-

3) Set a high goal and work for it. My first big one was writing an Ethernet sniffer. By the time I was done I understood networking, file I/O, driver interfaces...

Is anyone else waiting with bated breath for the next coding challenge for that very reason?

-

It seems like both variants and flattened strings allow one to pass data in and out of a VI without needing to know what the underlying datatype is. Is there a reason to prefer one over the other in this instance (e.g. speed, memory size)?

There are other applications, I'm sure: Variants have attributes (but I've never really seen the point of those); and TCP communication requires the use of strings. Variants carry with them information about the datatype, but you still need something of the appropriate datatype to use Variant to Data.
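A rough Python analogy for the trade-off: a flattened string is just bytes, so the receiver has to supply the type, and supplying the wrong one silently yields garbage; a variant carries a type tag, but the receiver still has to name the type it wants. The `(type name, value)` tuple is a hypothetical stand-in for a variant, not LabVIEW's actual representation; the big-endian `>d` format reflects LabVIEW's default byte order for flattened data.

```python
import struct


def flatten_dbl(x: float) -> bytes:
    # Big-endian IEEE 754 double: 8 bytes, no type information attached.
    return struct.pack(">d", x)


def unflatten(data: bytes, fmt: str):
    # The caller must already know the format; nothing in `data` says what it is.
    return struct.unpack(fmt, data)[0]


def to_variant(value):
    # Hypothetical variant: the value travels with a tag naming its type.
    return (type(value).__name__, value)


def variant_to_data(variant, wanted: type):
    # The caller still supplies the target type, but in this model a
    # mismatch is detected instead of being silently misread.
    tag, value = variant
    if tag != wanted.__name__:
        raise TypeError(f"variant holds {tag}, not {wanted.__name__}")
    return value


raw = flatten_dbl(1.5)
print(len(raw), unflatten(raw, ">d"))  # 8 1.5
print(unflatten(raw, ">q"))            # wrong type: a meaningless integer

v = to_variant(1.5)
print(variant_to_data(v, float))       # 1.5
```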

-

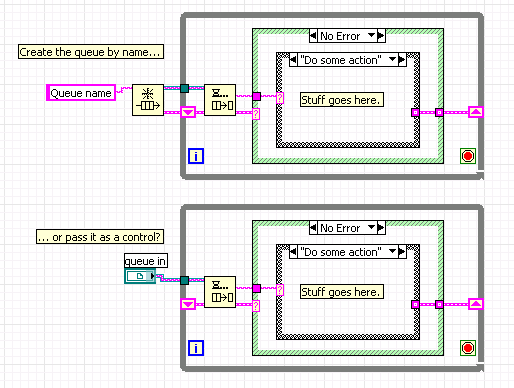

An unnamed queue cannot be accessed by any other VI.

By this, do you mean that another VI can not obtain a refnum for an unnamed queue created elsewhere (i.e., with Obtain Queue)? I have no problem creating an unnamed queue in a top level VI and passing that queue's refnum to a subVI for use there.

-

I like using producer/consumer type patterns. For example, one loop watches for user input, then sends a command to a waiting loop to actually process that input. Often, it's necessary for a sub VI to use one of the queues, and I'm just wondering if there is a preferred method of passing the queue to the sub VI. Usually, I pass the queue refnum itself (generated by Obtain Queue) as an input to the sub VI. That way, the queue is created only once, in the top-level VI, and released there as well. Any loop in a sub VI waiting on the queue would then quit.

I think I remember reading somewhere that this may not be the most desirable way for the sub VI to get the queue. Each sub VI could instead obtain its own copy of the queue if it knows its name. The refnums generated this way are different, I believe, even though they actually point to the same queue.

Is one of these two methods generally preferred over the other?

I'm still using 7.1.1, and there is a bug when obtaining multiple copies of the same queue by name, but I believe that only rears its ugly head when getting many thousands of copies, as in a loop.

This would apply equally well to notifiers, I assume.
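A Python analogy for the two approaches, with `queue.Queue` standing in for a LabVIEW queue. `QueueRef`, `obtain_queue`, and the `STOP` sentinel are hypothetical. Named lookups return a new handle to the same underlying queue (matching the belief above that the refnums differ), an unnamed queue is reachable only through the handle you pass around, and the sentinel approximates a waiting dequeue erroring out when the top-level VI releases the queue.

```python
import queue
import threading
from typing import Dict, Optional

_registry: Dict[str, queue.Queue] = {}
_registry_lock = threading.Lock()

STOP = object()  # stands in for "queue released": the consumer loop exits


class QueueRef:
    """Hypothetical refnum: a distinct handle wrapping a possibly shared queue."""

    def __init__(self, q: queue.Queue):
        self.q = q


def obtain_queue(name: Optional[str] = None) -> QueueRef:
    if name is None:
        return QueueRef(queue.Queue())  # unnamed: only this handle reaches it
    with _registry_lock:
        q = _registry.setdefault(name, queue.Queue())
    return QueueRef(q)  # new handle each call, same underlying queue


def consumer(ref: QueueRef, results: list) -> None:
    # The "sub VI": receives the refnum as an input and waits on it.
    while True:
        item = ref.q.get()
        if item is STOP:
            break
        results.append(item.upper())


a, b = obtain_queue("commands"), obtain_queue("commands")
print(a is b, a.q is b.q)  # False True

main_ref = obtain_queue()  # created once, at the "top level"
results: list = []
worker = threading.Thread(target=consumer, args=(main_ref, results))
worker.start()
for cmd in ("start", "stop"):
    main_ref.q.put(cmd)
main_ref.q.put(STOP)  # "release" from the top level
worker.join()
print(results)  # ['START', 'STOP']
```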

-

I've encountered a situation exactly as described in the NI page that you talked about. We create a listener and then do a wait on listener. On closing the connection we only close the Listener ID, not both the Listener ID and the Connection ID, and we've been wondering why we then have to shut down LV completely before we can establish a new connection to that port!

Why not just use TCP Listen as opposed to Create/Wait? It seems to largely do the same thing, but you can close the TCP connection ID it returns and recreate it later with no problems.
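A Python sockets sketch of the clean lifecycle these two posts are circling: the listener and each accepted connection are separate handles, and both get closed before the same port is listened on again. This uses BSD sockets rather than LabVIEW's TCP VIs, so it illustrates the listener/connection split rather than reproducing the LabVIEW behavior. `SO_REUSEADDR` is set only so the quick rebind isn't blocked by the TIME_WAIT state the closed connection leaves behind.

```python
import socket
import threading


def open_listener(port: int) -> socket.socket:
    # Roughly the "create listener" step: bind and listen on a local port.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", port))
    listener.listen(1)
    return listener


def one_exchange(listener: socket.socket) -> bytes:
    port = listener.getsockname()[1]

    def client() -> None:
        with socket.create_connection(("127.0.0.1", port)) as c:
            c.sendall(b"ping")
            c.recv(16)  # wait for the echo before closing

    t = threading.Thread(target=client)
    t.start()
    conn, _ = listener.accept()  # roughly "wait on listener": a new connection handle
    with conn:                   # closes the connection handle on exit
        data = conn.recv(16)
        conn.sendall(data)
    t.join()
    return data


listener = open_listener(0)      # port 0: let the OS pick a free port
port = listener.getsockname()[1]
first = one_exchange(listener)
listener.close()                 # close the listener as well as the connection

listener = open_listener(port)   # the same port can be listened on again
second = one_exchange(listener)
listener.close()
print(first, second)             # b'ping' b'ping'
```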

-

I don't understand Dave and Toby's objections. From what I can figure out, the object wire behaves as a reference

I'm pretty sure it's by-value, at least according to the white paper. If the wire forks and goes into two different sub-VIs, you've now got two copies of the original object.

The only missed boat I see here is that it would have made sense to have the standard Property Node be able to read and write the cluster elements of the object. I don't think that would be hard for the LV developers to implement.

Wouldn't that violate the whole point of data encapsulation? At that point, you might as well just use a cluster.
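A Python analogy for both points, using a frozen dataclass so that every "modification" returns a new value, much as a wire fork gives each branch its own copy. The leading underscore on `_count` marks it as private by convention, with `count()` as the accessor; a generic property node that read and wrote `_count` directly would bypass that accessor, which is the encapsulation concern. `Counter` is a hypothetical example class.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Counter:
    _count: int = 0  # "private" data, reached only through methods

    def increment(self) -> "Counter":
        # By-value: return a new object instead of mutating this one.
        return replace(self, _count=self._count + 1)

    def count(self) -> int:
        return self._count


original = Counter().increment()
branch_a = original.increment().increment()  # one branch of a forked wire
branch_b = original                          # the other branch is unaffected
print(branch_a.count(), branch_b.count(), original.count())  # 3 1 1
```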

List Folder accept multiple wildcard patterns

in LabVIEW Feature Suggestions


QUOTE(Michael_Aivaliotis @ Feb 15 2007, 05:39 PM)

You also can't use ; when searching for files in Windows. It seems to ignore the character completely.

So I guess the question becomes: which is better, a function that works in every case, or one that prevents you from doing certain things but gives you some extra functionality in exchange?