eaolson

-

Posts

261 -

Posts posted by eaolson

-

QUOTE(CTITech @ Feb 21 2008, 01:08 PM)

The application is medical and mil, so I need precision. So I'm open for options. :headbang:

The 9215 is a voltage-measurement board, correct? Could you put a precision, known resistor in series with your unknown, apply some voltage across both, measure the voltage drop across the known resistor (giving you current) and then measure the voltage drop across the unknown (R=V/I)?
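In case it helps, here is the arithmetic behind that suggestion as a minimal Python sketch. The function name and the example readings are made up for illustration; nothing here is specific to the 9215 or to any driver.

```python
def unknown_resistance(v_known: float, v_unknown: float, r_known: float) -> float:
    """Return the unknown resistance in ohms from two voltage-drop readings.

    v_known   -- voltage drop measured across the known (reference) resistor
    v_unknown -- voltage drop measured across the unknown resistor
    r_known   -- value of the reference resistor in ohms
    """
    if r_known <= 0:
        raise ValueError("r_known must be positive")
    if v_known == 0:
        raise ValueError("no current is flowing through the known resistor")
    current = v_known / r_known   # same current flows through both: I = V_known / R_known
    return v_unknown / current    # R = V_unknown / I


# Hypothetical readings: 1.000 V across a 100 ohm reference, 2.500 V across the unknown.
print(unknown_resistance(1.000, 2.500, 100.0))
```

The accuracy of the result is limited by the tolerance of the reference resistor and by the two voltage readings, so the reference is where the precision has to come from.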

-

QUOTE(jdunham @ Feb 19 2008, 04:14 PM)

Sorry, your code isn't accurate. Your graph reads in milliseconds, and you are assuming that your 20ms excursion is divided evenly among all 1,000,000 iterations of your empty FOR loop. It's much more likely that just a few of those iterations are taking a large part of the excursion.

OK, that's a valid point. But because the ms timer is limited in resolution to 1 ms, I had to do a bunch of iterations and average. And this is for an empty loop. Since that's boring and you'll want to do something inside the loop, that will slow it down considerably.

And since the OP was talking about QNX, this is all probably a moot point.
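To put numbers on the averaging problem, here is a small Python sketch using invented per-iteration durations (not measurements from my machine). It assumes a hypothetical run where almost every iteration takes 5 ns and four iterations are each held up for 5 ms.

```python
def summarize(durations_ns):
    """Return (mean, maximum) of a list of per-iteration durations in ns."""
    mean = sum(durations_ns) / len(durations_ns)
    return mean, max(durations_ns)


# Hypothetical run: 1,000,000 iterations, all 5 ns except four that were
# held up for 5 ms each (20 ms of excursion in total).
durations = [5] * 999_996 + [5_000_000] * 4
mean_ns, worst_ns = summarize(durations)
print(f"mean = {mean_ns:.1f} ns, worst = {worst_ns} ns")
```

Averaged over the whole run, the excursion looks like a few tens of nanoseconds per iteration, while the worst single iteration is milliseconds long. A 1 ms timer wrapped around the whole loop can't tell those two cases apart.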

-

QUOTE(BobHamburger @ Feb 19 2008, 12:01 PM)

I concur with what Chris is saying here. If you're running on a Windows machine, the rule of thumb is that you can't reliably get loops to run any faster than around 30 mSec.

I have to disagree with this. Running just a quick check, I can get an empty For loop to run at 5-7 nanoseconds, with occasional excursions to about 20 ns.

-

QUOTE(tmunsell @ Feb 14 2008, 01:05 PM)

I think I understand what you are saying..... Please bear with me, since I am new to Labview (and programming in general), but I've been reading a lot about it lately in the book "LabVIEW for Everyone". What I'm trying to do is set up the two loops to operate simultaneously as part of the main app. I tried separating the loops by putting the channel read subVIs inside the second loop, but when I ran the app, only the main loop ran. Nothing in the second loop worked.... no data flow at all.

That's because you have wires leaving the main loop and going to the second loop. No data leaves a loop on a wire until the loop stops. I bet if you look again at your program with Highlighting turned on, there will be a single iteration of the second loop after you press the stop button.

QUOTE

Do you know where I can find some examples that I can learn from? I need to configure the second loop so that the data displayed on the front panel indicators for 4 of the channels (Vib1-4) have an adjustable time delay, but all the other channels in the main loop must run in real-time. I've been trying to find an easy way to do this (like a time delay subVI that will go inline with the data flow for those particular channels), but haven't had much luck. I'll read up on queues and see if I can understand how they work.

Take a look at the General Notifier Example in the LabVIEW examples (Help : Find Examples). It's very similar to what you're trying to do.

Time delay-wise, you might just convert your desired delay to milliseconds and feed that value to Wait Until Next ms Multiple. Put that in the second loop. There is also an OpenG version that can use an error cluster to enforce dataflow.
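Since a block diagram can't be pasted as text, here is a rough text-language analogy in Python of two loops running at the same time and passing data through a queue instead of a wire. It is only a sketch of the pattern: the function names, the 10 ms delay, and the `None` stop marker are all my own choices, not anything from the LabVIEW example.

```python
import queue
import threading
import time


def acquire(out_q, n_samples):
    """Main loop: produce samples and hand each one over immediately."""
    for i in range(n_samples):
        out_q.put(i)      # data leaves the loop on every iteration
    out_q.put(None)       # stop marker: tells the second loop to finish


def display(in_q, delay_s, shown):
    """Second loop: runs concurrently, showing each sample after a delay."""
    while True:
        sample = in_q.get()
        if sample is None:
            break
        time.sleep(delay_s)   # adjustable delay for the slow channels
        shown.append(sample)


q = queue.Queue()
shown = []
consumer = threading.Thread(target=display, args=(q, 0.01, shown))
consumer.start()
acquire(q, 5)
consumer.join()
print(shown)
```

The point is that the first loop never waits on the second one. It hands each value to the queue and keeps going, which is what a wire leaving the loop can't do.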

-

QUOTE(Holograms2 @ Feb 14 2008, 06:35 AM)

Problem is that I can't seem to find the Imaq fit ellipse function or Imaq fit circle functions. I'm guessing if I have an older version it'll be a tad more complicated to do this.

You still haven't said which version of LabVIEW or Vision you're using. For me (8.2) it's under Vision and Motion : Machine Vision : Analytic Geometry. For some reason both functions are called IMAQ Fit Ellipse 2 and IMAQ Fit Circle 2.

QUOTE

Just been told that best way would be to plot a Gaussian graph of x components, and then y components and work that way rather than using an ellipse fitting function.

I'm not quite sure what you mean by this. "Components" of what?

-

QUOTE(crelf @ Feb 12 2008, 09:49 AM)

I hope this will not be seen as sedition, but if you're not using Vision, you might want to check out ImageJ (http://rsb.info.nih.gov/ij/). It's an open-source image processing program specifically intended for this sort of thing. Not being written in LabVIEW, it is, of course, inferior, but it has its uses.

-

QUOTE(dblk22vball @ Feb 6 2008, 01:12 PM)

I was thinking that LabVIEW and some sort of vision should be able to do this, however I have not been able to find any information on this type of analysis. I did download the Vision assistant from NI, but could not get it to "detect" the water droplets properly (could be I am not 100% familiar with the suite yet). I was just doing a simulated analysis, not with any live equipment (camera).

Could you maybe fit the smallest ellipse that fits all your water droplets inside it, and compare that with your target?

-

QUOTE(Justin Goeres @ Jan 16 2008, 11:34 AM)

Which reminds me, I think there should be a key command for Open Detailed Help for Whatever is in the Context Help Right Now.

Don't forget that you can right-click just about anything and there's usually a Help option that takes you to the same place as the Detailed Help link. Context help doesn't even have to be open.

-

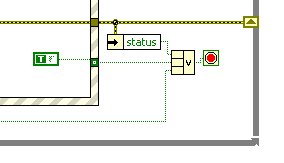

I'm cleaning up a quick-and-dirty app I wrote which is turning out to be something more useful than I originally expected. What I'm thinking of turning this into would have one loop running continuously doing data acquisition, and periodically a user-fired event would save the data. Data will be stored in an array. So I'm thinking of having a single-element queue to store the array. The DAQ loop dequeues the array, appends the new element, and re-queues the array. The save loop can get a snapshot of the array at any time by previewing the queue element and saving it to disk. Attached is sort of a sketch of what I'm thinking of.

My concern is that in the dequeue-append-enqueue process, the Dequeue node has a buffer allocation on the output, I assume making a copy of what's in the queue. If the array gets large, I'm worried that will slow things down. On the other hand, I notice that neither the append nor the enqueue has a buffer allocation. So maybe I'm misunderstanding where things are getting copied, and the queue is just pushing a pointer to the array around behind the scenes. Which is it? Or is there a better way of structuring this so the DAQ loop and the Save loop each have access to the same array?

(Right now, everything's in a single state machine and the user can't save the data without stopping the data acquisition.)
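For anyone who would rather read the idea as text, here is the dequeue-append-enqueue pattern as a Python sketch. It only shows the access pattern and says nothing about where LabVIEW allocates buffers, which is the actual question. Python's `queue.Queue` has no preview operation, so the snapshot step below takes the list out, copies it, and puts it straight back; all the function names are mine.

```python
import queue


def make_store():
    """Create a one-slot queue that always holds the current list."""
    q = queue.Queue(maxsize=1)
    q.put([])
    return q


def append_sample(q, sample):
    """DAQ loop step: dequeue, append, re-enqueue."""
    data = q.get()       # the slot is empty while we hold the list,
    data.append(sample)  # so the other loop blocks until we put it back
    q.put(data)


def snapshot(q):
    """Save loop step: take the list, copy it, and put it straight back."""
    data = q.get()
    copy = list(data)
    q.put(data)
    return copy


store = make_store()
for value in (1.0, 2.0, 3.0):
    append_sample(store, value)
saved = snapshot(store)
append_sample(store, 4.0)
print(saved, snapshot(store))
```

Because the slot is empty while one loop holds the list, the single-element queue doubles as a lock: the save loop can never see a half-updated array.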

-

QUOTE(crelf @ Jan 15 2008, 02:12 PM)

So you're not an auto-tool user, are you?

Actually, I am.

Yes, it took a bit of getting used to and when I need to go back to the Paint tool, I can never remember what combination of Shift, Alt, or Ctrl it is, but it works really well.

That being said, if the auto-tool took two seconds for the current tool to fade away and another two seconds for the next tool to fade into view, that feature would quickly get folded, spindled, and mutilated.

I'm just saying that good graphic design does not good UI make. (Can you say "Genie effect?")

-

QUOTE(Aristos Queue @ Jan 15 2008, 11:17 AM)

There are some interesting aspects to this UI. Thoughts?

I read Joel Spolsky's User Interface Design for Programmers not long ago. As I recall, one of his pointers is to realize that mouse action is imprecise. Not everyone's hands are as steady as everyone else's.

I also don't like the fad of every sub-menu swooshing into view and then swooshing away when I'm done with it. (Macs, I'm looking at you.) It's disorienting, unnecessary, and time is spent waiting for the menu to finish animating into position.

One thing I really didn't like about that example site is that it wasn't always clear what was a control and what wasn't. Sometimes I would put the mouse over some text that didn't look frobbable, and something else would smoothly animate into view.

The LabVIEW pop-up palette acts this way and I often find it annoying. Right click to pop up the palette. Look through palette options. "No, wait, I didn't want that one and now the sub menu is blocking the option I really do want." So now I have to move the mouse off to the side, wait for the sub menu to disappear, select the correct sub menu, and repeat.

-

QUOTE(Michael_Aivaliotis @ Dec 18 2007, 08:25 PM)

I was thinking of installing a tagging system on LAVA, before NI decided to release it on their forums, but decided not to because I don't find it useful myself. Perhaps I'm wrong and you all really want it. Well, do you?

I don't think it would be very useful. Tagging seems to be used mostly as a way to categorize things, especially blog posts, which don't generally have a categorization scheme in place. There is already a categorization process here on LAVA, in the form of which forum and which sub-forum something is posted to. I can see myself going to Technorati and looking at tag clouds just to see what people are talking about this week, but I don't see myself wanting to go to the LAVA forums (or the NI forums, frankly) and wonder "What sort of things have people been asking questions about lately?"

-

I should have known that someone would have already had this idea in this crowd. Heh.

-

QUOTE(Justin Goeres @ Dec 14 2007, 06:18 PM)

The 8.2.1 upgrade is free. I'd say that a critical bug in a basic comparison function would be a pretty good argument for applying that update. While you're at it (if it's a political issue) just tell the person holding the purse strings that it's only really fixed in 8.5 -- 8.2.1 was just a patch.

My inability to upgrade is not technical or financial, but political. Alas.

-

QUOTE(pdc @ Dec 14 2007, 12:11 PM)

I use the In Range and Coerce Function to coerce the N terminal of a for loop but the output is never coerced. Also if the N terminal is in the range for the In Range and Coerce Function, the In Range? output needs two runs to turn on.

It gets even freakier. For me, in 8.20, the In Range? output in the bottom loop comes out as (incorrect) False on the first run, and True on subsequent runs. Now, toggle the value of "Include upper limit" for the In Range and Coerce node in the top loop, and the next run gives False again for the bottom loop. Subsequent runs give True.

Wish I could upgrade. Grump.
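For comparison, here is what I would expect the node to do, written out as a Python sketch. This is my reading of the intended behavior, not NI's implementation: in particular, the default limit settings and the assumption that the "include" flags only affect the In Range? output (with the value always clamped to the limits) are mine.

```python
def in_range_and_coerce(x, lower, upper, include_lower=True, include_upper=False):
    """Return (coerced value, in-range flag) for x against [lower, upper].

    Assumption: the include flags change only the in-range test;
    the coerced value is always clamped to the limits themselves.
    """
    above_lower = x >= lower if include_lower else x > lower
    below_upper = x <= upper if include_upper else x < upper
    coerced = min(max(x, lower), upper)
    return coerced, above_lower and below_upper


# The same inputs should give the same answer on every call,
# with no dependence on earlier runs or on any other node.
for _ in range(3):
    print(in_range_and_coerce(5, 0, 10), in_range_and_coerce(15, 0, 10))
```

Whatever the exact limit rules are, the output should depend only on the current inputs. A result that changes between the first and second run, or when a different node is edited, is not something a pure comparison should ever do.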

-

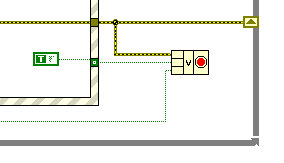

I don't know how many times I've needed to stop a while loop based on several booleans, as well as an error structure. It's a little thing, but it's kind of annoying:

I'd love to see some way to combine the Compound Arithmetic node (in boolean mode) with the Stop/Continue node. That would be especially useful if it could be used in conjunction with error clusters as well, rather than having to unbundle the status element first. You can already wire up the error cluster directly to the Stop node, but often that's not quite enough.

Something like this:

-

QUOTE(neB @ Dec 12 2007, 08:14 AM)

If our dreams are the product of our brains and our brains are composed of elements that must adhere to quantum mech, doesn't that dictate that dreams have to adhere with quantum mech?

Well, think of it this way: down at the nitty gritty level, all computers have to comply with certain electrical engineering principles. Is it meaningful to say your LabVIEW program adheres to Ohm's Law? Yes, the electrons flowing through the little metal wires do, but you can't use it to describe the software made up by those electrons. Analogously, even though the interaction of the elements in your brain is "quantum," the mind isn't.

My dreams are all spaghetti code.

-

QUOTE(alfa @ Dec 7 2007, 08:54 AM)

Sigh. I told myself I wasn't going to get involved in this thread anymore, but the growing misuse (http://scienceblogs.com/insolence/2006/06/your_friday_dose_of_woo_its_no.php) of scientific terms like "quantum" rather bugs me. Not only is saying a dream is quantized ridiculous (what's the smallest possible increment of a dream?), but saying that the Schrödinger equation applies to dreams is complete gibberish. You can't apply mathematical concepts to an abstract concept like that of a dream. It's like asking someone to take the logarithm of a pineapple.

-

QUOTE(george seifert @ Dec 10 2007, 01:30 PM)

Thanks. I missed that one. Unfortunately both methods introduce some funky artifacts in the image if you scale by something other than a power of 2.

Have you tried fiddling with the Interpolation Type option in IMAQ Resample? Zero Order is the default and will be the fastest, but will also be the worst. Bi-linear and Cubic may give you better results, but will be slower.
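To show why the interpolation type matters for odd scale factors, here is a one-dimensional Python sketch. It is not IMAQ Resample and makes no claim about how that VI works internally; it just stretches a four-sample ramp to seven samples with zero-order (nearest sample) and linear interpolation.

```python
def resample(samples, new_len, linear=False):
    """Resample a 1-D list to new_len points (new_len >= 2).

    linear=False picks the nearest input sample (zero order);
    linear=True blends the two neighbouring input samples.
    """
    out = []
    scale = (len(samples) - 1) / (new_len - 1)
    last = len(samples) - 1
    for i in range(new_len):
        pos = i * scale                  # position in input coordinates
        if not linear:
            out.append(samples[min(int(pos + 0.5), last)])
            continue
        left = int(pos)
        right = min(left + 1, last)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out


ramp = [0, 10, 20, 30]
print(resample(ramp, 7))               # zero order
print(resample(ramp, 7, linear=True))  # linear
```

With zero order, some input samples get repeated and others don't, so a smooth ramp turns into uneven steps. In an image that shows up as blocky or striped artifacts whenever the scale factor doesn't divide evenly.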

-

QUOTE(Stevio @ Dec 10 2007, 09:19 AM)

When i visualized the derivate of this signal i see a lot of noise in it. I guess this is because i have an array and not a real signal.

Numerical derivatives are often very noisy. Your best bet might be to use some sort of peak detection. The locations of the peaks would give you your frequency. Rise time might be a bit more tricky, since looking at your data, I'm not even sure how to define the start of each pulse.
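As a rough idea of what I mean by peak detection, here is a Python sketch run on a synthetic 5 Hz sine sampled at 1 kHz, not on your data. The threshold, the sample rate, and both function names are assumptions for the example; real, noisy data would probably need smoothing first, or a proper peak detector.

```python
import math


def find_peaks(samples, threshold):
    """Return indices of local maxima that rise above threshold."""
    return [
        i for i in range(1, len(samples) - 1)
        if samples[i] > threshold
        and samples[i] > samples[i - 1]
        and samples[i] >= samples[i + 1]
    ]


def frequency_hz(peaks, sample_rate_hz):
    """Estimate frequency from the average spacing between peaks."""
    if len(peaks) < 2:
        raise ValueError("need at least two peaks")
    mean_spacing = (peaks[-1] - peaks[0]) / (len(peaks) - 1)
    return sample_rate_hz / mean_spacing


rate = 1000.0  # samples per second (assumed)
signal = [math.sin(2 * math.pi * 5.0 * n / rate) for n in range(1000)]
peaks = find_peaks(signal, threshold=0.5)
print(frequency_hz(peaks, rate))
```

Working from peak positions avoids differentiating the signal at all, which is why it tends to hold up better than a numerical derivative.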

-

PLease help a newbie with a "Basics I" course question

in LabVIEW General

Posted

QUOTE(richlega @ Feb 28 2008, 01:18 PM)

My first reaction was answer "C" and I'm gratified to read that Much Smarter People had the same response. I'm just curious to know what the "correct" answer was, according to the example.