Posts posted by Rolf Kalbermatter

-

-

QUOTE(tnt @ Sep 28 2007, 04:41 AM)

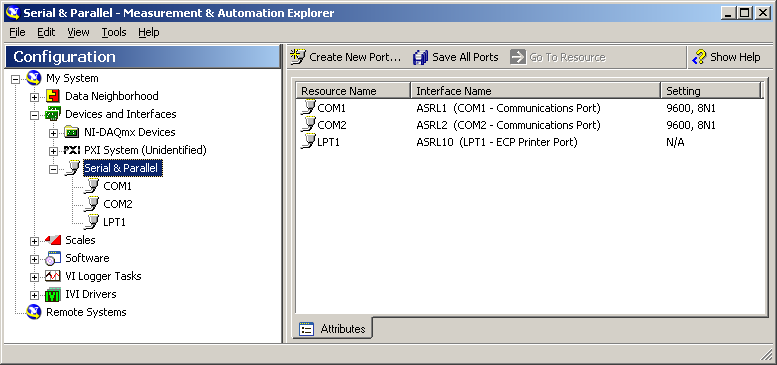

Making sure VISA is installed will of course help tremendously! MAX is only the UI configuration tool for all NI driver software. As such it does NOT access any hardware directly, but looks for installed NI software drivers such as NI-VISA, NI-DAQ, NI-DAQmx, etc. and queries them for known resources.

I didn't consider that you could somehow end up with MAX on a computer without having installed VISA.

Rolf Kalbermatter

-

QUOTE(Shoyurx @ Sep 27 2007, 12:37 PM)

I am trying to control a Micropross Class 185 RFID card reader using LabVIEW. I've tried using the Measurement and Automation Explorer, but it doesn't seem to see my serial port. When I try creating a new device, I don't get an option to select serial. Has anyone had problems with this, or perhaps used Micropross hardware with LabVIEW? Any help would be appreciated.

The serial port in your computer is entirely independent of your external device. If you can't see it in MAX, it is either not there, not properly installed in your OS, or disabled. MAX automatically shows the serial devices it can detect from the OS. It does not allow you to create arbitrary devices for that class since that makes no sense. A port is either there and accessible or it isn't.

Have you tried to do a Refresh of the MAX configuration (hitting F5 after starting it up)? That will rescan the system for built in devices and show any it can detect. If that does not give you a serial port then you have to start debugging there and see why MAX can't seem to find it.

Once you have your port you can start to deal with your device, but until then the device is completely irrelevant to this.

Rolf Kalbermatter

-

QUOTE(Ben @ Sep 27 2007, 07:53 AM)

I am facing another Web-based app as well. I was considering using LV as the back end and using standard stuff to handle the web access. But since I am weak in the web-support area, I would also like to hear what people have to say to help aart-jan.

Long ago I looked into this too, in combination with interfacing to external script servers such as Python. One idea I came up with was to use the LabVIEW TCP/IP VI Server interface directly (instead of going through its ActiveX interface, which would limit the solution to Windows LabVIEW hosts only). I could access this interface through direct TCP/IP sockets in LabVIEW 6.1 but eventually ran into some problems accessing the Call By Reference interface. It was nothing that couldn't be overcome, but flattening the type descriptor and the different parameters is a real headache to get right and requires a lot of research into the internals of the LabVIEW datatype storage model (almost fully documented in an older App Note). I eventually stopped development on this for several reasons.

- My library was written in LabVIEW for ease of testing and debugging. That is not very useful really, considering that you can much more easily use VI Server directly, and converting this interface to another language like C, Python, etc. would still be quite an undertaking.

- NI obviously never documented that protocol, for several reasons. One of them is that they change it between LabVIEW versions, albeit not drastically. But the introduction of the 32-bit safe type descriptors in LabVIEW 8 must certainly have had a profound impact on that protocol, although I never looked into that. Support for the entire (private) LabVIEW object hierarchy through this interface in LabVIEW 7 has probably also had some impact here.

In general I concluded that this interface has great potential, but its undocumented nature and version-dependent changes make it basically unsuitable to rely upon for any serious project. And for just some fiddling around it is way too much work to develop and maintain such a solution in any language I could imagine.

But it would be quite feasible to create a LabVIEW DLL/shared lib that wraps the important VI Server methods and functions into exported functions, and to access that from any host that is capable of calling into shared libraries. You would need one DLL/shared lib per platform you want to access LabVIEW from, but since VI Server is TCP/IP network based, that would not have to be the machine that is running the actual LabVIEW target VI.

Rolf Kalbermatter

-

QUOTE(Ben @ Sep 27 2007, 03:13 PM)

Hi Gabi1, I use the

Source Distribution >>> Single Prompt >>> Preserve Hierarchy

as a quick and easy method to collect all of the VIs associated with an application and move them to a new location. This functions as an easy way to get rid of all of the temporary VIs that are not being used by the application but are present in the folders, mixed in with all of the active files. When the source is built, LV takes care of saving all of the VIs such that they can find all of their sub-VIs, and prevents cross-linking issues.

Once the Source Distribution is built to the new location, the old folder can be deleted and replaced with the results of the Source Distribution.

So it is an easy way to clean up the folders while preventing cross-linking.

I used this feature in older LabVIEW versions exactly to detect cross-linking! It allowed me to see whether a project was somehow referencing VIs outside of the project file hierarchy (and vi.lib, user.lib). Any such VIs I usually squashed immediately to avoid later problems with cross-linked VIs referencing VIs from other projects (and even worse: other LabVIEW versions).

Unfortunately LabVIEW 8.0/8.2 lost that capability, and I haven't gotten around to trying 8.5 yet for any real project.

Rolf Kalbermatter

-

QUOTE(yen @ Sep 24 2007, 01:59 PM)

My guess would be that it's in your spam folder (look for "unknown sender", might be different in your mail client).

It's been a long time since I registered, but that's how I get the reply notifications.

Actually it seems not just to be the spam folder. I recently had to reset my password and did it three times before a mail showed up. And yes I did check the spam folder and yes I did wait about 10 minutes and even longer before reattempting.

Rolf Kalbermatter

-

QUOTE(jbrohan @ Sep 23 2007, 09:10 AM)

Hello, I need to interpret some voice comments recorded on a voice mail. The 'Speech' in question is a structured comment on the sounds in the voice recording.

There are several LV examples but I cannot get any to work. I use Win XP and LV 7.0.

In the MS package there are some C++ examples which compile and work right out of the box. I assume that this means I have all the ActiveX components required.

Does anyone have a good example of LV voice recognition, or even grammar building?

The speech engine is totally mind-blowing. You can limit its vocabulary to a small set (solitaire words for example "play the red queen") which improves the recognition. I would not be surprised to see speech recognition as an important input route for LV. There was a chap the other day trying to get a reading from a DMM when the signal was stable, presumably both hands were busy and he needed a way to trigger the acquisition.

The problem with speech recognition is that it is a fairly complicated technique to get to work in any useful way. I only played with it briefly in other applications, without trying to import it into LabVIEW, and it did not feel up to what I would expect from such a tool.

It is simply rather complicated to configure and train appropriately, since human perception of speech is such an involved process and, as probably anyone who knows more than one language can attest, depends very much on environmental influences, of which the language is only one parameter.

People have worked on speech recognition for more than two decades now, with speech recognition technology already available in the Windows 3.1 era, and still it hasn't become a meaningful means of human interaction with the computer, let alone a replacement for human interface devices like a mouse or keyboard. This has partly been because of processing power and memory usage, but that can't be the only problem when you consider that computers now have 1000 times as much memory as was common 15 years ago, and that CPUs run at about 50 times the speed of back then and are even more powerful, not to mention the availability of multicore and multi-CPU systems.

Not having looked at the MS Speech recognition API in a long time, I can't really say much about it, but it was already complicated years ago and has probably gained even more possibilities and features since then.

Rolf Kalbermatter

-

QUOTE(siva @ Sep 20 2007, 03:33 AM)

I was using CVS some time back. Tortoise-CVS uses the network connectivity only during "commit" and "update" activity. The rest of the time, the user doesn't need any network connectivity. The user can work on his code. Only when the user wants to commit his work (creating the next version without check-in/check-out activity) does he need the network. I believe CVS-NT/Tortoise-CVS (freeware) is the right choice for dynamic connectivity situations.

SVN really works the same. But the OP's request was explicitly for intermediate commits to the server while away from the network, and here CVS will fail exactly the same as SVN. The only solution I see is to keep the CVS server on the local machine too and put its repository on an external hard drive, or maybe make a regular backup copy of the entire local repository to some other location. I know that this will work with SVN, since a repository there is completely self-contained, without any external information that could get lost when doing a simple file backup.

Rolf Kalbermatter

-

QUOTE(manic @ Sep 19 2007, 03:39 AM)

The worst example of bad LabVIEW code I've ever seen was a monstrous 16 MB VI without any subVI. I guess it had about 300 screen sizes, when viewed in 1280x1024 px. Something like 20 screens wide and 15 screens high.

Unfortunately, I can't provide an image of that thing... (not only because of the size, but due to legal reasons)

Fortunately, i wasn't the guy to redesign that piece of LV-**** :laugh:

If I were tasked with that, my reaction would be simple. Don't even try to redesign it! It will cost at least double the time of doing it from scratch and still not be as clean and performant as it should be.

Rolf Kalbermatter

-

QUOTE(Gabi1 @ Sep 19 2007, 04:04 PM)

well i'm using notifiers quite a bit. i just love notifiers and queues. there is something in the idea that you can put in, and retrieve, data from somewhere in the computer universe, that just makes me feel good.

anyway, i find notifiers extremely useful in producer/consumer loops, or master/slave configurations. however, i saw the other day on NI forum somebody trying to pause a DAQ loop from an event structure. that made me wonder if i could pause the whole loop, optimising CPU for other apps in the program, like analysis. so in the state machine loop, there would be a pause state, driven by one or several notifiers. as i said before, notifiers still have some disadvantages when the sole purpose is to notify. occurrences are just better elements for that, if only one could have a function like "wait on multiple occurrences".

As Aristos already mentioned, the extra memory used for a scalar notifier won't really hurt you at all. Occurrences, on the other hand, are not producer/consumer oriented, and while multiple places can wait on the same occurrence, their mechanism does not let you easily do the opposite, that is, have one place wait on multiple occurrences.

On the other hand polling an occurrence with a small timeout, while not optimal, will not kill your CPU performance either.
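As a rough text-language analogy, polling with a small timeout could be sketched like this in Python. LabVIEW occurrences have no direct Python equivalent, so `threading.Event` is assumed here as a stand-in, and the names `poll_until_set`, `poll_interval_s` and `max_polls` are made up for illustration.

```python
import threading


def poll_until_set(event, poll_interval_s=0.05, max_polls=100):
    """Poll an event with a small timeout instead of blocking forever.

    Returns the number of polls that timed out before the event fired,
    or None if the event never fired within max_polls attempts.
    """
    for timed_out_polls in range(max_polls):
        # wait() sleeps until the timeout expires or the event is set,
        # so the loop uses almost no CPU between polls.
        if event.wait(timeout=poll_interval_s):
            return timed_out_polls
        # A real loop could check other signals here (a stop flag,
        # a second event, ...) before polling again.
    return None


if __name__ == "__main__":
    pause_released = threading.Event()
    # Fire the event from another thread after roughly 0.2 s.
    threading.Timer(0.2, pause_released.set).start()
    print(poll_until_set(pause_released))
```

The gap between polls is also where a loop could look at a second or third event, which is one way to approximate a "wait on multiple" function at the cost of some latency.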

Rolf Kalbermatter

-

-

QUOTE(Louis Manfredi @ Sep 18 2007, 11:09 AM)

Which just goes to show, my original point-- seems too bad that I have to rely on 3rd party sources of info, like LAVA, for this kind of thing. What other neat features are out there that I don't know about?

Well, such is life. Most things I found by accident, by actively searching for something, or through user groups/word of mouth/LAVA/Info-LabVIEW etc., and when I then looked for them they turned out to be somewhere in the printed or online docs. But it is simply too much material to read through completely.

Long ago I resorted to working with what I know, trying to learn new things as opportunities arise, and simply not letting myself be bothered that there are still many golden eggs out there that I do not know about. If I had a photographic memory I would simply scan all the documents by flipping through them and rely on that, but alas, I'll have to make do with what I have.

Rolf Kalbermatter

-

QUOTE(Cherian @ Sep 12 2007, 01:58 PM)

[I had posted the following problem on the NI forum (http://forums.ni.com/ni/board/message?board.id=280&message.id=2885). I didn't get a solution to the problem, but an NI application engineer suggested that I post it on LAVA.]

We use NI PCI-7831R FPGA boards for real-time data acquisition in computers running Linux. (We wrote the driver ourselves.) However, when we want to download a new version of the FPGA bitfile (.lvbit) to the board, we need to move the board temporarily over to a Windows computer with the LabVIEW FPGA module installed. This, we would like to avoid.

Does anyone know of a way to download the bitfile while the FPGA board is still in the Linux computer? (It is not a dual boot computer.) Is there a program (or information to write a program) somewhere that will just download a bitfile that has already been created on a Windows computer?

Thanks for any help or hints.

Wow, you mean you wrote a driver for the FPGA board to communicate with it from within LabVIEW? In that case I would assume you know more about how this could be done, than anyone else here on LAVA possibly could know. And probably also almost anyone else except those that work for NI and developed the FPGA product and maybe a system integrator or two under NDA.

Rolf Kalbermatter

-

QUOTE(tharrenos @ Sep 16 2007, 11:18 AM)

Hi, I am kinda new to the whole scene. I want to design a wireless remote control of a central heating over Wi-Fi. Any suggestions would help.

Thanks in advance.

As far as LabVIEW is concerned there won't be any problem. WiFi integrates cleanly into the OS networking stack and as such looks just like any other Ethernet hardware interface to LabVIEW, meaning LabVIEW has absolutely no idea whether the packets go over a twisted pair, fiber optic, dial-up modem, or WiFi network.

The central heating part might be a bit more troublesome. You will need some hardware that can control the heating and also has a WiFi interface. Doing that as an embedded device, which would be interesting only for high production numbers, would require some engineering. If you just put a normal (small) PC beside the heating and run a LabVIEW executable on it, the necessary engineering would be quite limited.

Rolf Kalbermatter

-

QUOTE(Louis Manfredi @ Sep 13 2007, 01:21 PM)

--Well, if they've been around for a long while, certainly upgrade notes is the wrong place to point these features out. But, I've been around for a long while too, (dozen years or so with LabVIEW, CLD) and both features are new (and useful) to me. I guess that's why I always follow the LAVA traffic, but it would be nice if the NI documentation did a better job of showing this sort of thing to us. (I'm sure both are in the documentation and I'll find details on the enum one now that I know to look, but why did it take LAVA to let me know to look?)

Best Regards, Louis

Yes, upgrade notes would not have worked. Both have been in LabVIEW for as long as I can remember, which is since about version 2.5; maybe the parse enum wasn't in there yet, but it was at least in 3.0. Possibly 2.2, which was Mac only, had it already too.

Rolf Kalbermatter

-

QUOTE(pjsaczek @ Sep 12 2007, 04:47 AM)

so, if this is a common problem, does that mean there is a solution?

As indicated already, if you talk to a serial device, always explicitly add the end of message character for that device to the command. Or, if you want to depend more on a specific VISA feature, set the "Serial Settings->End Mode for Writes" property for that VISA session to "End Char", and make sure you also set the "Serial Settings->End Mode for Reads" property and the "Message Based Settings->Termination Character" property accordingly, and set "Message Based Settings->Termination Character Enable" to True. As already said, this could cause problems with (really) old VISA versions, and I find it better to explicitly append the correct termination character to each message myself.

Also note that since about VISA 3.0 or so, the default for serial VISA sessions for "Serial Settings->End Mode for Reads" is already "End Char", the "Message Based Settings->Termination Character" property is set to the <CR> ASCII character, and "Message Based Settings->Termination Character Enable" is set to True. However, the "Serial Settings->End Mode for Writes" property is set to "None" for quite good reasons, as it modifies what one sends out, and that can be very bad in certain situations.

LabVIEW, as a general purpose programming environment, shouldn't do that for you automatically, since there are many somewhat more esoteric devices out there that use a termination character or mode other than appending a <CR> character or a <LF><CR> character sequence.
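In a text language, the "append it yourself" approach might look like the following sketch. This is plain Python, not LabVIEW or VISA code: `frame_command` and `read_message` are hypothetical helpers, `RB` is only an example command string, `<CR>` is assumed to be the termination character of the device, and an in-memory byte stream stands in for the serial port.

```python
import io

CR = b"\r"  # termination character assumed for this hypothetical device


def frame_command(command, term=CR):
    """Append the termination character explicitly, exactly once."""
    payload = command.encode("ascii")
    return payload if payload.endswith(term) else payload + term


def read_message(stream, term=CR, max_bytes=1024):
    """Read byte by byte until the (single-byte) termination character."""
    buffer = bytearray()
    while len(buffer) < max_bytes:
        chunk = stream.read(1)
        if not chunk:        # nothing more to read (closed or timed out)
            break
        if chunk == term:    # end of message, terminator is not returned
            break
        buffer += chunk
    return bytes(buffer)


if __name__ == "__main__":
    print(frame_command("RB"))
    fake_port = io.BytesIO(b"12.5,3.1\rleftover")  # stands in for a port
    print(read_message(fake_port))
```

Keeping the terminator in one small helper makes it obvious what actually goes out on the wire, and a device with a different terminator only needs a different `term` argument.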

Rolf Kalbermatter

-

QUOTE(pjsaczek @ Sep 10 2007, 10:52 AM)

thanks a lot, in the instructions it says the command has to be ended with <CR>. so for example, for it to read off the current position of the tester, and the force on the load cell, type RB<CR>. is the basic serial VI putting some more characters on the end? or do I need to add something to the end?

cheers, pete

oh, and the instrument doesn't do handshaking, cheers!

No, LabVIEW is not adding anything to the strings on its own, and I would be really mad if it did. However, if you use VISA in a more recent version (let's say less than 5 years old or so) you can configure it to do that automatically for a serial session. But personally I don't find that such a good idea. I prefer to code each command in such a way that it explicitly appends the correct end of string indication.

Rolf Kalbermatter

-

QUOTE(jed @ Sep 11 2007, 04:26 PM)

I would love to do this, but I need to delay the shutdown:

Any idea how to delay it until I am done with some housekeeping?

Or can I figure out exactly what the shutdown params are (shutdown/restart/etc), then cancel it, do my thing and reinitiate it?

The Windows message queue example is not really meant to hook directly into the queue but only to monitor it. To hook into that event you would have to modify the C source code of that example specifically for that. Not too difficult, but not advisable without some good C knowledge.

Another way might be that newer LabVIEW versions will send a filter event in the event handling structure "Application Instance Close?" which you can use to disallow shutting down the app. Not sure if it will disallow shutting down the session directly though. But it should be enough to detect that there might be a shutdown in progress and allow you to execute the command Adam mentioned to abort that.

Rolf Kalbermatter

-

QUOTE(dsaunders @ Sep 11 2007, 03:09 PM)

I found a few threads (like this: http://forums.lavag.org/Calling-a-VI-using-VI-Server-The-VI-is-not-in-a-state-compatible-with-this-operation-t2772.html&view=findpost&p=9908) that talk about calling plugin VIs. I thought that the connector pane would be an easy way to verify that the plugin is valid.

Having played with the LV 8+ replacement for the Icon Editor a bit, I noticed that they require a certain connector pane in order for your replacement VI to be valid. Here is the interesting thing, though. They allow you to connect terminals that are unconnected in their template connector pane.

But then it would seem that using the Call By Reference Node would be unavailable because it requires a strictly typed VI with an exact match on the connector pane.

Does anyone know of a way to check if a VI matches a template connector pane, that ignores unconnected terminals in the template? The plugin may or may not use these terminals and it should not matter. Can I mimic what the Icon Editor does, or is this just not feasible?

The private property is somewhat of a pain to use, as there are really several pieces of information you would need to check. First, the connector pane layout itself, which is just a magic number for one of the patterns you can choose. Then the array of connections, with an arbitrary number identifying the position in the connector pane each is connected to. And last but not least, the datatype of each connection, which is a binary type descriptor and can't just be compared byte for byte but needs to be verified on a logical level, since different binary representations do not necessarily mean different data types.

Not sure I would want to spend that much time for this!

Another possibility, and LabVIEW uses this a lot for its plugins, is to use the Set Control Value method and then simply run the plugin VI. This makes the calling information completely independent of the connector pane. You just need correctly named controls on the front panel. Using an occurrence (or notifier, etc.) you can wait in the caller for the VI to signal its termination, or simply poll its status until it is no longer running.
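The idea of passing values by control name rather than by connector pane position can be illustrated with a small Python analogy. Nothing here is VI Server code: `PluginVI`, `call_plugin` and the control names are hypothetical, and the plugin runs synchronously, so no waiting or polling is shown.

```python
class PluginVI:
    """Hypothetical stand-in for a plugin VI: named controls plus a body."""

    def __init__(self, controls, body):
        self.controls = dict(controls)  # "front panel": name -> value
        self._body = body               # the plugin's own logic
        self.running = False

    def set_control_value(self, name, value):
        if name not in self.controls:
            raise KeyError(f"no control named {name!r}")
        self.controls[name] = value

    def run(self):
        self.running = True
        try:
            self._body(self.controls)
        finally:
            self.running = False


def call_plugin(plugin, inputs, required=()):
    """Pass inputs by control name; terminal positions play no role."""
    missing = [name for name in required if name not in plugin.controls]
    if missing:
        raise ValueError(f"plugin lacks required controls: {missing}")
    for name, value in inputs.items():
        if name in plugin.controls:     # optional controls may be absent
            plugin.set_control_value(name, value)
    plugin.run()
    return plugin.controls


if __name__ == "__main__":
    def double_it(controls):
        controls["Result"] = controls["Input"] * 2

    plugin = PluginVI({"Input": 0, "Result": 0}, double_it)
    outputs = call_plugin(plugin, {"Input": 21, "Verbose": True},
                          required=["Input"])
    print(outputs["Result"])
```

A plugin that ignores an optional input simply does not have that control, and the caller never needs to know how its terminals are laid out.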

Rolf Kalbermatter

-

QUOTE(chrisdavis @ Sep 11 2007, 08:45 PM)

I was contemplating adding a processor usage display to one of my CPU hungry programs. At NI Week 2007, one of the demos, specifically one on Tuesday demonstrating the new multi-core parts of the timed loop, had such a display. Does anyone know how they did it, or how I might add such a display to my program?

My best bet is .NET, although I think the WinAPI will also give you this information on a somewhat lower level, and it is probably not exactly trivial to access. Possibly LabVIEW has some private hooks itself. It would make sense to have this info in LabVIEW somehow to make it platform independent.
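Whatever API delivers the numbers, the arithmetic behind such a display is usually the same: CPU time consumed between two samples divided by the wall-clock time that passed. The sketch below shows that calculation in Python for the current process only, using the standard library timers. It is not the .NET or WinAPI route mentioned above, and `cpu_percent` and `ProcessCpuMeter` are names invented for this example.

```python
import os
import time


def cpu_percent(cpu_delta_s, wall_delta_s, cores=1):
    """CPU time used, as a percentage of the time available on all cores."""
    if wall_delta_s <= 0 or cores < 1:
        return 0.0
    return min(100.0, 100.0 * cpu_delta_s / (wall_delta_s * cores))


class ProcessCpuMeter:
    """Reports this process's CPU usage since the previous sample."""

    def __init__(self):
        self._cores = os.cpu_count() or 1
        self._cpu = time.process_time()    # CPU seconds used by the process
        self._wall = time.perf_counter()   # wall-clock reference

    def sample(self):
        cpu_now = time.process_time()
        wall_now = time.perf_counter()
        percent = cpu_percent(cpu_now - self._cpu,
                              wall_now - self._wall, self._cores)
        self._cpu, self._wall = cpu_now, wall_now
        return percent


if __name__ == "__main__":
    meter = ProcessCpuMeter()
    sum(i * i for i in range(2_000_000))   # some busy work to measure
    print(f"{meter.sample():.1f} % of all cores")
```

Calling `sample()` once per display update gives the average load over the last update interval; a system-wide display would need per-core counters from the OS instead.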

Rolf Kalbermatter

-

QUOTE(Techie @ Sep 9 2007, 10:04 PM)

Hi Everyone, can I dynamically create (i.e. using code to create) LabVIEW controls when the program runs?

All comments so far have been valid and right. One other thing: creating controls, while possible in principle, requires the use of so-called scripting, a feature that has not been released by NI and has also been made difficult to access. Also, you cannot do it on a VI that is running, so for most user applications it is meaningless, as you usually want to do such stuff from the VI itself.

LabVIEW cannot make any modifications to a VI that is running if these changes would modify the diagram in any way, and dropping a control on a front panel does modify the diagram. That is also a limitation of the compiling nature of LabVIEW.

Rolf Kalbermatter

-

QUOTE(brianafischer @ Sep 8 2007, 01:50 PM)

For some reason, the LabVIEW development environment does not like to use multiple monitors. I use multiple monitors at work and home, but the VIs always open in the last location in which they were saved.

If the VI is saved on the secondary monitor, and then opened on a PC with a single monitor, the VI opens "off in space" on the non-existent monitor. My work PC has the secondary monitor on the "right" and my home PC has the secondary monitor on the "left". I always work on the secondary monitor since both of them are nice large widescreens.

What would be "nice" is if there was a way to force VIs to open to a "default" monitor, always the secondary in my case!

Does anyone have advice on how to improve this situation?

What version of LabVIEW are you using? LabVIEW has been fully multi-monitor aware since at least version 7 and moves windows so that some part of each window is always visible, although it does not center them on the primary screen, which I would find too intrusive anyhow. Making an application always open its windows on the secondary screen, independent of its location, is definitely a very esoteric requirement. Personally I find it rather disturbing to have to look right or left of the main screen depending on the setup. It's already hairy enough to switch between single monitor and multiple monitor use.

But I know many applications that will always open their window in the last saved monitor location, regardless of whether that monitor is present or not, and I really find that an annoying behaviour, especially if they replace the default system menu too, so that you can't even move the window from the task bar with the cursor keys.

And upgrading to LabVIEW 8.5 could help you a lot. There you can configure per front panel on which screen it should be displayed, and LabVIEW will honor that if that monitor is present. But I think this is mostly a runtime feature. I am not sure it will have the desired effect on windows in edit mode, and it definitely won't for the diagram windows.

Rolf Kalbermatter

-

QUOTE(yen @ Sep 8 2007, 02:22 PM)

From the fact that you are asking the question I assume that you no longer have access to the original developer.

Do you at least have a copy of the source code? If you do, you can commission another LabVIEW developer to build a command line interface. If not, you're basically stuck with the other suggested option.

For what it's worth, though, you might be able to use the ActiveX interface available with LabVIEW to connect to the program from another program and input values into it. Do you have any programming experience?

In order to be able to connect to a LabVIEW application over ActiveX, it must also have been built with that option enabled. I personally always make sure that option is disabled for various reasons, including safety.

Rolf Kalbermatter

-

QUOTE(yen @ Sep 5 2007, 01:36 PM)

I actually used Tibbo products more than once (their TCP to serial adapters) and they're pretty good, but I would agree that this is not LV related.

My understanding is that LV embedded requires using C, so I'm guessing no.

Just because there is a Basic development environment for this target does not mean that there couldn't be a (GNU based) C toolchain for it too.

Rolf Kalbermatter

-

QUOTE(tcplomp @ Sep 7 2007, 02:38 PM)

And just to show what the pre LabVIEW 8.5 version of this code would look like:

http://forums.lavag.org/index.php?act=attach&type=post&id=6885

Forget about the unwired loop termination! ;-) That is not the point here.

And in case anyone wonders why one would write a VI in such a way: it is called pipelined execution and has advantages on multi-core or multi-CPU machines, as LabVIEW will simply distribute the different blocks onto different CPUs/cores if that is possible at all. On single-core systems it has no real disadvantage in terms of execution speed, but this construct does of course take a memory hit, because the shift registers store double the data between iterations compared to what a linear execution would need.
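For readers without the picture, the data flow of such a pipelined loop can be sketched in Python. This is only a model of the structure: Python runs it sequentially, so it shows how shift-register-like slots decouple the stages, not the parallel speedup. `run_pipeline` and the stage functions are illustrative names, not anything taken from the attached VI.

```python
def run_pipeline(samples, stages):
    """Run stages as a software pipeline with shift-register-like slots."""
    empty = object()                    # marks a slot that holds no data yet
    registers = [empty] * len(stages)   # registers[i] = last output of stage i
    results = []
    # Extra iterations at the end flush what is still in the registers.
    feed = list(samples) + [empty] * len(stages)
    for item in feed:
        # Each stage only reads a value stored during the PREVIOUS
        # iteration, so within one iteration the stages do not depend
        # on each other and could run on different cores.
        inputs = [item] + registers[:-1]
        if registers[-1] is not empty:
            results.append(registers[-1])
        registers = [
            stage(value) if value is not empty else empty
            for stage, value in zip(stages, inputs)
        ]
    return results


if __name__ == "__main__":
    acquire = lambda x: x + 1        # stand-ins for acquire/process/save
    process = lambda x: x * 10
    save = lambda x: x - 1
    print(run_pipeline([1, 2, 3], [acquire, process, save]))
```

The `registers` list is the memory cost mentioned above: every stage boundary holds a full copy of the data in flight, which a straight-line version would not need.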

Rolf Kalbermatter

Read Value of Static Output Card?

in Hardware

Posted

QUOTE(brianafischer @ Sep 28 2007, 01:01 PM)

You can usually do that for digital outputs but not for analog outputs. In any case it would only read the value written to the digital registers of the DA converter and not the actual value on the analog output. To read the analog value there would need to be an AD converter too, and that would make the DA converters extra expensive. However, most NI-DAQ boards have a lot more analog inputs, so wiring the analog output externally to an analog input will let you do what you want. On some boards you can even use internal signal routing lines, but programming the corresponding switches is a lot more complicated than a simple external wire.

Rolf Kalbermatter