Posts posted by Rolf Kalbermatter

-

Hmm... it's quite hard for me to get a DAQ card because of my current location...

Or is it possible to directly show the voltage differences on the parallel port, without measuring them?

I have a VI that gets the power status of the PC, and I want something like this for the parallel port... I found out that this VI uses the Call Library Function.

No. There is absolutely and definitely no hardware in the parallel port circuitry that could measure that voltage, and therefore no magic could allow Windows to provide a function to read it. The power status comes from specific analog circuitry, present on all modern PC motherboards, that supervises the internal power supply, and Windows has a function to read this status because it wants to know when to suspend operation before the battery is drained. But this circuitry has absolutely no connection to the parallel port.

Rolf Kalbermatter

-

Sorry... is there any function inside LabVIEW that is able to measure the output voltage of the parallel port? I need to measure the output voltage using just an indicator as the voltage display.

Yes, buy a DAQ card and install it in your computer ;-). Otherwise no: the parallel port is a digital interface, where a signal above 2.4 V means logic high and a signal below 0.8 V means logic low. There is no analog measurement built into the parallel port, nor into any other part of a normal PC. (This leaves out the temperature and CPU voltage monitoring nowadays built into the power management of modern PCs, but you couldn't use that for measuring arbitrary port signals anyhow.)
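To make the point concrete, here is a minimal C model of what a digital input effectively does with a voltage, using the 2.4 V and 0.8 V thresholds quoted above. The function and type names are hypothetical and nothing here reads real hardware; it only shows that every voltage above the high threshold collapses to the same logic 1, so the actual value is gone before software ever sees it.

```c
/* Logic states a digital input can report back to software. */
typedef enum { LOGIC_LOW, LOGIC_HIGH, LOGIC_UNDEFINED } logic_state;

/* Thresholds taken from the post above. */
#define V_HIGH_MIN 2.4  /* above this: logic high */
#define V_LOW_MAX  0.8  /* below this: logic low  */

/* Hypothetical model of a digital input: the voltage is reduced to one of
   three states, so 3.3 V and 5.0 V are indistinguishable afterwards. */
logic_state classify_level(double volts)
{
    if (volts > V_HIGH_MIN)
        return LOGIC_HIGH;
    if (volts < V_LOW_MAX)
        return LOGIC_LOW;
    return LOGIC_UNDEFINED; /* between the thresholds: not a valid level */
}
```

Measuring the voltage itself needs an analog-to-digital converter, which is exactly what the DAQ card provides.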

Rolf Kalbermatter

-

Hi All,

I have built an EXE with debugging enabled. I run the EXE and it opens up without running. Then in LV's project explorer I go to Operate>Debug Application...

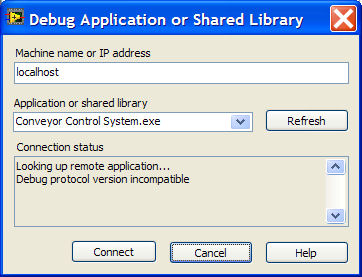

When I attempt to connect to my EXE I get an error message as shown in the included image.

Anyone have any thoughts?

How can there be an incompatibility with the debug protocol? All this is being done on the same PC.

Any help is appreciated

Don't you need to enable TCP/IP access for VI Server in the executable in order for the debug connection to succeed? The error message itself is quite likely not very accurate, but something is going wrong.

Rolf Kalbermatter

-

It works with labels. It works with text strings that just sit on the front panel.

It just does not seem to work with Boolean Text.

"Making value default" does not affect it.

It also doesn't work with text in string controls. I assume that LabVIEW does something with the data part of a control that makes the entire string use the font setting of the first character on reopening the VI.

Rolf Kalbermatter

-

From the Java side the main method looks like:

byte[] b = new byte[80];

80 Bytes, because I would like to send the following information:

Byte 0..3 (Packet Size)

Byte 4..5 (Data Type)

Byte 6..9 (Data -> 32 Bit Integer)

Socket client = server.accept();

OutputStream out = client.getOutputStream();

out.write(b);

LabVIEW:

I am using LabVIEW's TCP Read function with bytes to read set to 80.

My question:

- How can I make sure to receive the data in a way that I can work with it within LabVIEW? I have already tried to endianize the data within Java but it didn't work out.

You must be doing something wrong with endianizing or such. But this shouldn't be that difficult to figure out. Just go debug on the LabVIEW side! Look at the data you read from the TCP/IP socket and display it in a string control. Now play with the display mode of that string control and look at what you can see. In Hex mode you definitely should see a pattern you can then further trace to see what might be wrong.

While endianizing in Java might be the solution, I'm not sure that is actually the right way. Java being a platform independent development platform (at least that is the intention, isn't it?), it may already do some endianizing on its own internally, and in that case it likely chooses big endian or network byte order, which happens to be the same as what LabVIEW uses. Otherwise Java client-server applications between Intel and non-Intel platforms couldn't communicate seamlessly.
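To make the hex-display check concrete, here is a minimal C sketch of how the 10 meaningful bytes from the question decode when read in big-endian (network) order, the order LabVIEW uses for flattened data. The struct and function names are hypothetical. On the Java side, `DataOutputStream.writeInt` and `writeShort` write high byte first, so writing the fields that way, instead of hand-endianizing into the byte array, should produce exactly this layout.

```c
#include <stddef.h>
#include <stdint.h>

/* Layout from the question: bytes 0..3 packet size, bytes 4..5 data type,
   bytes 6..9 a 32-bit integer. */
typedef struct {
    uint32_t packet_size;
    uint16_t data_type;
    int32_t  value;
} packet_header;

/* Read an unsigned big-endian value of n bytes (n <= 4). */
static uint32_t read_be(const uint8_t *p, size_t n)
{
    uint32_t v = 0;
    for (size_t i = 0; i < n; i++)
        v = (v << 8) | p[i]; /* most significant byte comes first */
    return v;
}

/* Returns 0 on success, -1 if the buffer is too short. */
int decode_packet(const uint8_t *buf, size_t len, packet_header *out)
{
    if (len < 10)
        return -1;
    out->packet_size = read_be(buf, 4);
    out->data_type   = (uint16_t)read_be(buf + 4, 2);
    /* Conversion to signed is implementation-defined in C; mainstream
       two's complement compilers map 0xFFFFFFFE to -2. */
    out->value = (int32_t)read_be(buf + 6, 4);
    return 0;
}
```

If the hex display in LabVIEW shows the integer bytes reversed compared to this layout, the sender wrote them little-endian.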

Rolf Kalbermatter

-

Hi Rolf

thanks for your answer!

The background of my question is that we actually have such a COM component integrated in our project, the imc COM Components from www.imc-berlin.de. If I understood your answer, calling this component inside VB is faster than calling the same component from inside LV, right? When it comes to converting the VARIANTs in LV I lose time again... a lot! :thumbdown: Is any COM component slower inside LV than inside VB? Even if the COM component was built with a real compiler such as Visual C? I'm only trying to understand a little bit more about the background of the low speed of this component in LV.

Andi :thumbup:

Well, I do think Visual Basic usually works directly on VARIANTs, but I'm not sure. As such it would not need to convert a VARIANT array to another representation before doing some stuff on it. Whether using such a COM object is much faster in VB than from LabVIEW I don't know; the last time I touched VB was more than 10 years ago. COM itself does not mandate VARIANTs per se, but if it is going to be an ActiveX interface or a COM object which can be marshalled over the network, you need to limit yourself to the supported datatypes (and I'm not sure if ActiveX can marshal VARIANTs; I know that it can't easily do that for structures).

Basically COM was not designed with data acquisition applications in mind, and trying to use it in such environments creates all sorts of problems and limitations. Using Visual C to build the COM component will likely speed up its execution time, and you don't necessarily need to restrict yourself to VB-supported datatypes (although if you want such a component to be usable from VB, you probably want to provide at least alternate VB-compatible access methods). VARIANTs definitely wouldn't be my choice of data transport if I ever wrote a COM component.

While a DLL interface may seem cumbersome at first it definitely is still the most flexible one and allows all sorts of fast and reliable data transfers. Also it is the most versatile in terms of interfacing as there are almost no software environments that can't link to a DLL (and if they can't link to a DLL they very likely can't link to a COM object either). The only advantage of COM or actually rather DCOM here is that it supports seamless networking (at least if you limit yourself to data types that it can marshall).
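As a rough illustration of the kind of plain DLL interface meant here, a hypothetical exported C function that works in place on a caller-owned array of doubles. In LabVIEW's Call Library Node, `data` would be configured as an array of 8-byte doubles passed as an Array Data Pointer, so LabVIEW hands over its own buffer with no per-element conversion. The function name is made up, and the `__declspec(dllexport)` decoration a Windows DLL build would need is omitted so the sketch compiles anywhere.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical export: multiply count samples by gain, in place.
   Returns 0 on success, -1 on invalid arguments. */
int32_t scale_samples(double *data, int32_t count, double gain)
{
    if (data == NULL || count < 0)
        return -1;
    for (int32_t i = 0; i < count; i++)
        data[i] *= gain; /* operates directly on the caller's buffer */
    return 0;
}
```

The caller owns and sizes the buffer; the DLL never allocates, which keeps memory management on the LabVIEW side.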

Rolf Kalbermatter

-

Has anyone ever shared 1 GPIB device with 2 PCs? I have an expensive piece of equipment that I would like to share across several test platforms via GPIB, and I wanted to find out any caveats/tips/tricks before I go down this path...

Your help would be greatly appreciated!

Thanks.

You can do that, but you will have to get acquainted with some low-level GPIB details and API functions. Basically a GPIB bus always has exactly one system controller (called SC in GPIB speak). This is normally the PC, but since you have two of them you will have to configure one of them to be the system controller (that is the default for a PC GPIB interface) and the other not to be.

At any time only one interface can be the controller in charge (called CIC). The system controller can request control over the GPIB bus at any time and thereby become CIC, aborting any ongoing communication by another controller that has been CIC. For a non-SC to become CIC, it has to wait for the current CIC to pass control to it.

This all can be done for sure using the old traditional GPIB functions and I'm sure there are still some documents on the NI site explaining that. I have no idea if VISA supports multi controller functionality in any way. Last time I checked it didn't seem to.
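The SC/CIC rules above can be summarized as a small state model. This is a hypothetical C sketch for illustration only: it does not talk to any hardware and the struct and function names are made up. With the traditional NI-488.2 API, the real operations are done with calls such as `ibsic` (the SC asserting IFC) and `ibpct` (passing control to another controller).

```c
#include <stdbool.h>

/* Toy model of the controller roles on one GPIB bus. */
typedef struct {
    int system_controller;    /* id of the single SC on the bus */
    int controller_in_charge; /* id of the current CIC */
} gpib_bus;

/* Only the SC may seize control at any time (in real GPIB by asserting
   IFC), aborting whatever the current CIC was doing. */
bool take_control(gpib_bus *bus, int requester)
{
    if (requester != bus->system_controller)
        return false; /* a non-SC cannot just grab the bus */
    bus->controller_in_charge = requester;
    return true;
}

/* Only the current CIC can hand control to another controller. */
bool pass_control(gpib_bus *bus, int from, int to)
{
    if (from != bus->controller_in_charge)
        return false;
    bus->controller_in_charge = to;
    return true;
}
```

In this model the second PC (id 1) can only talk on the bus after the SC (id 0) has passed control to it, and the SC can take it back at any moment.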

Rolf Kalbermatter

-

Hi

I would like to pass double arrays of data from VB to LV and from LV to VB. I use an ActiveX DLL created in VB. When I integrate this component into LV with Automation Open, the double array appears as a Variant datatype. Converting this array into a double array in LV is very slow. Using the same ActiveX component inside VB is very fast in comparison. Does anybody know why LV is so much slower handling ActiveX calls? Is there a faster way to pass arrays of doubles between LV and VB?

Thank you for helping!

Probably not. Visual Basic does everything that does not fit into a string or a single variable with VARIANTs. VARIANTs are not so fun to deal with directly and you need a whole bunch of Windows OLE functions that are not available on non Windows 32 bit systems. Therefore LabVIEW chooses to convert incoming variants into its own binary format (well not really its own but the format used by virtually any real compiler, which of course leaves out Visual Basic) and reconvert them back when handing them back.

The only real solution to speed these things up is to not use Visual Basic and/or Active X for such cases (or do everything involving such arrays in Visual Basic). My choice would be the first as I try to avoid ActiveX at almost all costs for its famous stability, version interchange compatibility, and lean and mean runtime system, not to mention its unparalleled multi-platform nature :-).

Rolf Kalbermatter

-

We have a customer that uses WonderWare and Net DDE. We need to read a few WonderWare tags from a LabVIEW application running on a separate computer. I am sure this has been done before but I cannot find a good example that demonstrates the format to use for computer name, service, topic, item name when talking to WonderWare. I could only find an Access and Excel example.

All we need to do is connect to and monitor a few existing tags in WonderWare from another computer on the LAN running a LabVIEW application.

I would prefer to use OPC but they have been using Net DDE for years and do not want to change.

We cannot use LabVIEW DSC.

Well, without LabVIEW DSC you will have to write your own DDE server. Let me tell you that this is no fun at all, as DDE has a few drawbacks nowadays. First, it is an old technology and in some areas rather strange to use by today's standards. Second, it has a few quirks, including in the LabVIEW interface, and there is NO support for it anymore. Nobody at NI is going to look into a problem if you can't get something to work, and there very likely won't be a bug fix of any sort if it turns out not to work due to some obscure DDE incompatibility with your Windows system.

That said, an Excel example is more or less all that is needed. It should show you the service name as well as the topics. What you may be running into is the NetDDE setup that is needed for networked DDE communication. There have been tutorials on NetDDE configuration for LabVIEW DDE on NI's site, but they were written for Windows 3.1 and Windows 95. You are likely running into access rights problems on more modern Windows NT based systems and need to figure out how to set up your system correctly to get around them before you can access a remote DDE resource.

Do the Excel examples work over the network? That would indicate that the NetDDE configuration is more or less correct.

Rolf Kalbermatter

-

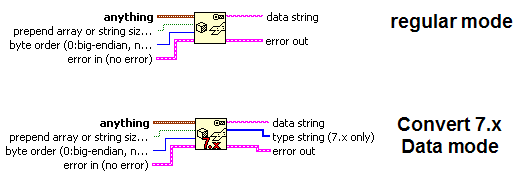

In LabVIEW 8.0, the Flatten To String function has an option called Convert 7.x Data that causes the familiar type descriptor output to appear.

According to the documentation...

My question is this: If LabVIEW 8.0 and later stores type descriptors in a 32-bit flat representation, how do we access LabVIEW 8.0+ type descriptors? Has anyone found a way to access and decode these? It seems like the documentation is recommending that we "rework" for this new format, but I can't seem to find anything that leads down this trail.

Could this be something delegated to the scripting feature now? Would seem to me that you could enumerate the diagram or whatever and as one of the properties of a control or wire get its new 32 bit type descriptor. As such it is of course basically not accessible anymore. Talk about removing features due to an update!

NI seems to find with every new version new ways to make a power user wonder if an upgrade is really such a good idea.

Rolf Kalbermatter

-

If you'll go to:

http://discussionboard.chopra.com/default.asp ->Ask Dr. Deepak Chopra->Dark Cloud with 1257 replies, there you'll find the answer.

Oh well, a closed discussion board. I don't feel like signing up to view what you refer to, but I guess I have some ideas.

Happy holidays

Rolf Kalbermatter

-

Thanks for your reply!

Yes, the disk space is not an issue at all nowadays. But I just want to know if I can strip off the part that is not needed, since the front panel does nothing other than passing variable in and out.

Thanks for your info. Indeed, I didn't have any particular reason for using LLBs. I used them just because I saw that they are used extensively in the LabVIEW library functions.

You can strip the front panel, but not for the VIs you want to call dynamically. For dynamic parameter passing to work, the VI really should have its front panel; otherwise you will get all sorts of errors when trying to load such a VI dynamically and/or call it.

Rolf Kalbermatter

-

A couple of days back I upgraded to LV8. The development environment looks really nice, but once I started using it I felt that it's not as cool as it looks, particularly the project explorer window. Probably I will be able to appreciate the features once I start using it regularly, but I would like to know what other people think of the project explorer. Does it really make life easier (or more complicated)?

One thing I would like to know is the reason for not creating a folder in the drive when you create a new folder in the project explorer window.

Also how helpful is the project explorer if one is using SVN which, I think, is not supported by LV8 SCC.

You bring up points that have already been discussed here on LAVA as well as on Info-LabVIEW.

I don't think you really want to tie the project hierarchy to a disk hierarchy. This puts up a strong limitation to what the project hierarchy can represent which is IMO not necessary. Other development environments like MS VC don't choose to synchronize the project hierarchy with any disk hierarchy either and I feel this to be a good choice.

SVN and all the other SCC systems are not directly supported in LabVIEW 8, but LabVIEW makes use of the MSSCC API. This is a closely guarded trade secret of MS, and you can only get the specs by signing an NDA. As such it is not very likely that there will be open source implementations of MSSCC interfaces soon. However, you can get PushOk's SVN plugin (not open source or free, though), discussed by Jim Kring in another thread on this board, which interfaces SVN to MSSCC-compatible development environments; in this way LabVIEW is just as compatible with SVN as it is with other SCC systems such as MS VSS or Perforce.

The project explorer view is quite something to get used to, and it does have certain quirks at the moment, which are sometimes just not intuitive and sometimes simply bugs. I expect most of these things to be ironed out in LabVIEW 8.1 ;-)

Rolf Kalbermatter

-

Rolf: How do you do this? I could not find a VI property with a name such as "debugging".

Thanks,

Oops, is that one of the super secret VI properties? Doesn't seem so! It is in Execution->AllowDebugging.

Rolf Kalbermatter

-

What is up with this rash of newspeak of late? "Creative Action Request". Just call a bug a bug.

Here's some more:

Consent to an unconstitutional reduction in civil liberties == "Patriotic"

Opposed to government policy == "Unamerican"

Kidnap and torture == "Rendition"

Back in my days at NI, CAR actually stood for "Corrective Action Request" and probably still does. The guy who wrote that answer was probably just musing about how creative he could be writing a LabVIEW program instead of answering technical support questions. Working in technical support can have this effect on you! :laugh:

Rolf Kalbermatter

-

I am doing an operation on a VI using VI server. I would like to change the VI's modification bitset to show as changed, so that that user is prompted to save the VI when it is unloaded from memory (and also the VI Name in the window title should have an "*" to indicate that it has been modified). I have experimented with "Begin Undo Transaction" and "End Undo Transaction" and calling these is not enough to cause a "modification".

Anyone know a good way to do this?

Thanks,

I usually do this by toggling the debugging flag twice. Snooped it from some LabVIEW internal functions some versions ago.

Rolf Kalbermatter

-

But there is NO excuse that every command the UI has is not listed in the program menu. NONE.

Please don't do that! The menu would get so cluttered it would be completely useless! LabVIEW has virtually thousands of options if you add everything together.

Rolf Kalbermatter

-

I'm trying to write a function that returns the files in a folder that have the Archive bit set. :headbang: Is there a way in LabVIEW to read this information? The Get File Info VI seems to be very limited (probably due to portability). I'm trying to avoid having to compare file modification dates to a history file. Seems like a lot of work when the OS already does this bookkeeping for me!

Thanks

You will have to call a Windows API function. Use the Call Library Node and configure it with library name kernel32.dll, function name GetFileAttributesA, calling convention stdcall, a return value of type numeric, unsigned 32-bit integer, and one function parameter, filename, of type string, passed as a C string pointer.

Now convert the file path to a string with the Path To String primitive and wire it to the parameter input. The return value of the function contains a whole bunch of information encoded in its individual bits. If the return value is 0xFFFFFFFF, there was an error, such as the file not being present. Otherwise you can AND the return value with the following values, and if the result is not 0 the bit is set:

#define FILE_ATTRIBUTE_READONLY 0x00000001

#define FILE_ATTRIBUTE_HIDDEN 0x00000002

#define FILE_ATTRIBUTE_SYSTEM 0x00000004

#define FILE_ATTRIBUTE_DIRECTORY 0x00000010

#define FILE_ATTRIBUTE_ARCHIVE 0x00000020

#define FILE_ATTRIBUTE_NORMAL 0x00000080

#define FILE_ATTRIBUTE_TEMPORARY 0x00000100

#define FILE_ATTRIBUTE_SPARSE_FILE 0x00000200

#define FILE_ATTRIBUTE_REPARSE_POINT 0x00000400

#define FILE_ATTRIBUTE_COMPRESSED 0x00000800

#define FILE_ATTRIBUTE_OFFLINE 0x00001000

#define FILE_ATTRIBUTE_NOT_CONTENT_INDEXED 0x00002000

#define FILE_ATTRIBUTE_ENCRYPTED 0x00004000

Some of them are redundant since LabVIEW returns them also in the File Info primitive.
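Once the U32 comes back from GetFileAttributesA, the test is just a bitwise AND. A minimal C sketch of that logic, assuming the attribute word has already been obtained (on Windows from GetFileAttributesA, in LabVIEW from the Call Library Node output). The helper names are hypothetical; the constants are the ones listed above, and 0xFFFFFFFF is what the Windows SDK calls INVALID_FILE_ATTRIBUTES.

```c
#include <stdbool.h>
#include <stdint.h>

/* Constants from the list above (Windows SDK winnt.h values). */
#define FILE_ATTRIBUTE_READONLY  0x00000001u
#define FILE_ATTRIBUTE_DIRECTORY 0x00000010u
#define FILE_ATTRIBUTE_ARCHIVE   0x00000020u

/* GetFileAttributes returns this on error (INVALID_FILE_ATTRIBUTES). */
#define ATTRIBUTES_ERROR 0xFFFFFFFFu

/* Hypothetical helper: true if attrs is valid and flag is set. */
bool attr_is_set(uint32_t attrs, uint32_t flag)
{
    if (attrs == ATTRIBUTES_ERROR)
        return false;           /* e.g. the file does not exist */
    return (attrs & flag) != 0; /* AND with the mask; non-zero means set */
}

/* Hypothetical helper for the original question: a regular file whose
   Archive bit is set, i.e. modified since the bit was last cleared. */
bool needs_archiving(uint32_t attrs)
{
    return attr_is_set(attrs, FILE_ATTRIBUTE_ARCHIVE) &&
           !attr_is_set(attrs, FILE_ATTRIBUTE_DIRECTORY);
}
```

In LabVIEW the same check is an And primitive on the U32 result followed by a Not Equal To 0? comparison.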

Enjoy

Rolf Kalbermatter

-

I've been playing with .NET in my spare time. The list of new features in .NET Framework v2.0 includes a SerialPort class.

I've read about some people being disappointed with having to use VISA to access serial devices; could this new .NET class be used to create a replacement for the old Serial Read/Write functions?

Talk about installation size! VISA is small compared to .NET 2.0! The only reason not to use VISA was really the very restrictive licensing and runtime costs. NI changed this licensing recently, and now I do not see a pressing need to replace VISA with yet another, even bigger monster. Besides, you are not allowed to install .NET on anything but a genuine Windows system anyhow, and for .NET 2.0 I would guess it had better be XP or newer or it won't install at all.

Rolf Kalbermatter

-

Hi Oskar, that was quite a help for me too. I also want to know where TCP Open comes into the picture. What is the difference between TCP Listen and TCP Open as far as the application is concerned?

TCP Listen creates a socket and waits for an incoming connection. In TCP speak this is the server application. TCP Open connects to a server and is therefore used in a TCP client application. To create a server that can handle several client connections simultaneously, you use TCP Create Listener and TCP Wait On Listener; the TCP Listen VI mentioned above combines these two but can then only support one connection.

To get some examples search in the LabVIEW example finder for TCP/IP.
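For comparison with plain BSD sockets, a minimal POSIX C sketch (assuming a Unix-like system; Winsock differs in details) of how the roles map: socket/bind/listen is roughly what TCP Create Listener does, accept is roughly TCP Wait On Listener, and connect is roughly TCP Open Connection. The function names are hypothetical. Because the listening socket stays open, calling `wait_on_listener` again accepts the next client, which is what the one-connection TCP Listen cannot do.

```c
#define _POSIX_C_SOURCE 200112L
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Server side, roughly "TCP Create Listener". Port 0 lets the OS pick a
   free port; the chosen port is returned through *port_out. */
int create_listener(unsigned short *port_out)
{
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(0);
    socklen_t len = sizeof addr;
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(fd, 8) < 0 ||
        getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }
    *port_out = ntohs(addr.sin_port);
    return fd;
}

/* Roughly "TCP Wait On Listener": block until one client connects.
   The listener stays open, so this can be called again for more clients. */
int wait_on_listener(int listen_fd)
{
    return accept(listen_fd, NULL, NULL);
}

/* Client side, roughly "TCP Open Connection" to a local server. */
int open_connection(unsigned short port)
{
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0) return -1;
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);
    if (connect(fd, (struct sockaddr *)&addr, sizeof addr) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Single-process loopback demo: one listener serves two clients in turn.
   connect() completes via the listen backlog before accept() runs.
   Returns 1 if both exchanges succeed. */
int loopback_demo(void)
{
    unsigned short port;
    int lfd = create_listener(&port);
    if (lfd < 0) return 0;
    int ok = 1;
    for (int i = 0; i < 2 && ok; i++) {
        int c = open_connection(port);
        int s = (c >= 0) ? wait_on_listener(lfd) : -1;
        char buf[2] = {0};
        ok = c >= 0 && s >= 0 && write(c, "hi", 2) == 2 &&
             read(s, buf, 2) == 2 && memcmp(buf, "hi", 2) == 0;
        if (c >= 0) close(c);
        if (s >= 0) close(s);
    }
    close(lfd);
    return ok;
}
```

A real multi-client server would typically hand each accepted socket to its own loop or thread while the listener keeps waiting for the next one.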

Rolf Kalbermatter

-

In my program I do use some property nodes, but none of them in the part of the program that uses "Draw Text in Rect.vi".

And I didn't change this part of the program, so why can't I build this time?

My purpose in using "Draw Text in Rect.vi" is to show some words in my picture.

I think in your case the second part of the message is the important part. For some reason your VI has lost its diagram. Maybe you set something wrong during the last build and the original VI was overwritten by the one built by the Application Builder, which usually has no diagram.

I would reinstall LabVIEW to get the vi.lib VIs back into their original state.

Rolf Kalbermatter

-

I had a quick look and didn't see anything in the license manager for this. Where did you find it?

The license manager is very stupid. It only enumerates the license files present on your system and shows their status. It can also invoke the activation wizard for a specific license so that you can get a valid license. All the license files present on your system (such as the whole bunch of possible LabVIEW development licenses or the NIIMAQ1394 one) get installed with the respective NI software, with either demo or inactive status. License activation then gets an authorization code from NI (by secure web, fax, or email) and adds that authorization to the existing license file, signing the whole file with a code to prove that it is a valid license. Then the license manager will recognize it, and so will LabVIEW. But LabVIEW also checks for other licenses for which no standard license file gets installed with LabVIEW. Those queries fail just as if the license file had not been activated or properly signed, and the feature is not available.

Things I found that it checks for licenses are LabVIEW Scripting, XNode Development, Pocket PC, and Palm OS.

So now we just need to get those valid licenses to use these features ;-) Knowing that the whole licensing is based on FlexLM, I have a feeling that you could force it without too much of a hassle, but that would be illegal!

Rolf Kalbermatter

Has anyone attempted a hack of the license to find out if that may release the scripting?

If I had, I wouldn't tell! I like to sleep without being lifted out of my bed in the middle of the night by a special police force ;-)

Rolf Kalbermatter

-

Has anyone experience connecting Yokogawa MX100 and LabVIEW ?

I have this problem...

I got the LabVIEW driver from the Yokogawa website and then started to build a LabVIEW program to take the data from the MX100.

The MX100 can measure at a 10 ms interval. I made a loop with a 10 ms period and then changed the voltage rapidly, but it seems the LabVIEW program can only detect a voltage change every 100 ms. With the Yokogawa program it worked.

But with LabVIEW I have more possibilities to modify the program as I want.

So, if anyone has such experience, please, please tell me...

I have been stuck on this problem for the last few days.

Thanks in advance.

The way the Yokogawa MX100 driver is constructed, it may be almost impossible to get 10 ms update rates. It reads huge amounts of configuration and scaling data multiple times, at both the system and the module level, and writes all that data back to the device too. I only managed to get everything working reasonably fast by diving deep into the driver and taking it apart to optimize the number of buffer reads and writes. However, I'm only doing analog input here, so I couldn't specifically help you with your output problem, and the code I have is not particularly neat or clean, just functional and very specific to the datalogging problem I had at hand. It took me a day or so to understand the structure of the driver a bit and another day to get it working.

Rolf Kalbermatter

-

I'm trying to enter a tree control in an array, and I want the contents of each "tree" in the array to be different. However, whenever I add a string to the tree in one array cell, it shows up in the trees in all other array cells. Is there any way to "decouple" these trees?

I think an array of trees is a bad UI solution. Aside from that, it won't work in LabVIEW as you would like. A LabVIEW array can only have different data for its individual elements; all elements share the same attributes. The data in a tree control is the tag of the currently selected node, but the tree representation itself is constructed entirely from attributes of the tree control and will therefore be the same for all array elements. As said above, I think you are trying to create a UI that will be anything but easy to understand for a potential user anyhow, so you really should reconsider your approach.

Rolf Kalbermatter

Unicode Text

in Application Design & Architecture

Posted

The biggest problem is that Unicode is not Unicode. While Windows uses 16-bit Unicode characters, most other systems use 32-bit Unicode characters. So having a platform-independent Unicode library is almost an impossibility. If you don't believe me, check out the Unicode support that had to be implemented in Wine in order to support 16-bit Unicode on Unix platforms that use 32-bit Unicode.

This whole issue is even more complex when you consider wanting to read Unicode coded data files in a platform independent manner. Basically I would consider this almost impossible because you would have to support both 16 bit and 32 bit Unicode on all LabVIEW platforms.
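To illustrate why 16-bit and 32-bit Unicode data are not interchangeable, a minimal C sketch that encodes one code point both ways. The function names are hypothetical. A character outside the Basic Multilingual Plane, such as U+1F600, takes two 16-bit units (a surrogate pair) in UTF-16 but a single 32-bit unit in UTF-32, so a file written in one representation cannot simply be read back as the other.

```c
#include <stddef.h>
#include <stdint.h>

/* Encode one code point as UTF-16, the 16-bit form Windows uses.
   Returns the number of 16-bit units written (1 or 2), or 0 if invalid. */
size_t encode_utf16(uint32_t cp, uint16_t out[2])
{
    if (cp > 0x10FFFF || (cp >= 0xD800 && cp <= 0xDFFF))
        return 0;                   /* not a valid Unicode scalar value */
    if (cp < 0x10000) {
        out[0] = (uint16_t)cp;      /* Basic Multilingual Plane: one unit */
        return 1;
    }
    cp -= 0x10000;                  /* 20 remaining bits, split 10/10 */
    out[0] = (uint16_t)(0xD800 + (cp >> 10));   /* high surrogate */
    out[1] = (uint16_t)(0xDC00 + (cp & 0x3FF)); /* low surrogate */
    return 2;
}

/* Encode one code point as UTF-32: always exactly one 32-bit unit. */
size_t encode_utf32(uint32_t cp, uint32_t out[1])
{
    if (cp > 0x10FFFF || (cp >= 0xD800 && cp <= 0xDFFF))
        return 0;
    out[0] = cp;
    return 1;
}
```

Byte order is a second, independent difference: each of these units may additionally be stored little- or big-endian in a file.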

What I suspect the NI solution will be is a Windows-only Unicode function library for creating, saving, and reading Unicode strings. With a little work this can already be done with a Call Library Node calling Win32 APIs, as they do all the nasty work for you. A while back I posted a library to the NI forums to convert to and from Windows Unicode strings by calling those Win32 APIs. Last time I checked, the link to it was:

http://forums.ni.com/ni/board/message?boar...uireLogin=False

Rolf Kalbermatter
