Posts posted by Bryan

-

Would you be willing to post what the solution was?

-

Welcome @LVmigrant!

If your company isn't willing to pay for LabVIEW training, but uses LabVIEW - it sounds like a great recipe for major headaches in the future.

Speaking as someone who has inherited some really bad LV code over the years, a company's up front investment in training for their employees will save them a lot in the future as far as maintenance, refactoring, and many other things. The bad code I inherited was from programmers who neither took any training nor took the time to learn from other more seasoned programmers.

This is a great community with some of the most knowledgeable LabVIEW programmers I've ever met. Even though I've been using LabVIEW on and off since 1999 - I don't hold a candle to the knowledge contained within some of the members of this forum.

That being said - many of the users in here have contributed to the LabVIEW Wiki, which has a LOT of useful information.

-

I was never involved with it. Where I used to work, I supported a software group that wrote code for an automated military application. I can't speak for other DoD contracting companies, but I know that they went through iteration after iteration of software testing, regression testing and debugging over the course of several months to a couple of years, with testing "events" that would go around the clock for a couple of days at a time.

My role was not with programming for that application, but I was responsible for maintenance and troubleshooting of the simulation testbed that they used to develop their software. If they found undesirable behavior from their code, I worked with them to determine whether it was actually a bug or something wrong with the testbed.

-

15 hours ago, Cat said:

I'm wondering if the group mind here thinks CLD would be good enough, or should it be CLA? Especially considering the person will have to be the "A"rchitect as well as the "D"eveloper.

Well - Finding a CLA may be more difficult than finding a CLD. Some people may (like myself) have gone the path up to feeling qualified to take the CLA Exam, but never pulled the trigger for one reason or another (mostly $$ reasons). I never did it because - working for a company - a CLx never benefited me in any way nor did it benefit my company. They were willing to foot the bill for the exams, some of the time, but I never went through the process and let my certification expire long ago after only one renewal.

You could specify CLA-Level of experience without necessarily making them have an active or expired credential. However, you'd still have to determine somehow by evaluation or by faith that they have the level of experience they claim to have.

10 hours ago, ShaunR said:

You very quickly find out who is an engineer and who is a code monkey.

Hey! I (likely) resemble that remark!

-

I would start with having a CLD (recent or in the past) as being helpful, but not necessarily a requirement.

Ask them if they've taken any LabVIEW courses and what they were. This should give a baseline of their expected knowledge/experience. Quiz them on what they SHOULD know having taken them.

Quiz them on the commonly used LabVIEW frameworks (or commonly used in YOUR job) to get a feel for their grasp on the concepts (e.g. QDMH, DVRs, OOP, etc. LIB MR DUCKS).

Courses, a degree, or a CLD don't necessarily equal competence (but I agree, they give more of a 'warm-n-fuzzy' feeling) - I've seen it several times in my career. Some of the most competent people I've worked with have no official degree, but lots of experience. Some people can have loads of LabVIEW experience, but never went for a CLD/CLA because the certification wasn't required for their job or field.

If they can (it's not always possible because of intellectual property constraints), ask them to provide sample code for you to review.

I'm sure others will have more (and much better) insights than I do, since I haven't taken part in many candidate interviews for LabVIEW positions. (Although I will be here in the near future).

-

Is the TVI - Tester Automation Framework an open source alternative?

I see you also posted the exact same text here. Is it also a LabVIEW software validation tool?

-

What are the functions of pins 7, 8, and 9 on the DB-15 controller? Is it possible that you have RX connected to RX and TX connected to TX on both ends? If so, you'll likely need to wire it like a crossover:

DB-9 Pin 2 (Rx) to DB-15 Pin ? (Tx)

DB-9 Pin 3 (Tx) to DB-15 Pin ? (Rx)

DB-9 Pin 5 (GND) to DB-15 Pin ? (GND)

-

I'm sure others have better suggestions (e.g. a LabVIEW toolkit or similar), but in the past I worked with a system that used the PDF995 virtual printer: LabVIEW set it as the default printer and then printed to it. However, it prompts you for a filename/save location every time you print to it, and at the time I wasn't able to figure out how to change the default filename or have it print without prompting.

-

20 hours ago, hooovahh said:

I sure hope LabVIEW has a future because I've hitched my career to it. But even if NI closed its doors tomorrow I feel like I'd still be using it for another 10 years or so, or until it didn't run on any supported version of Windows. But I feel your concern, and if I were a junior engineer starting out, I would feel safer in another language.

I'm in the exact same boat. While I'm not on the cutting edge and doing much of the cool stuff many of you in this forum do, I do entertain the idea in the back of my mind that LabVIEW may one day go away while I'm still in the working world.

I'd like to think (realistic or not) that if NI ever decided to completely get rid of LabVIEW, they would show appreciation to all of us who have made it successful in the past by making it open source.

-

Thanks for the info! I don't currently work at that company or with iVVivi anymore, so my question was more of a "what became of it" post than anything. While I was there, we had selected it over TestStand because of the built in HAL and simplicity in test development.

-

At my previous employer, we had updated a couple of our test systems to Calbay Systems' "iVVivi" software. I had spent a lot of time with it and recently got to wondering whatever happened to it.

When Calbay Systems was acquired by Averna, iVVivi seemed to disappear. It makes me feel bad for those at my previous employer who are now stuck with using a test executive software for which they may no longer have support.

I know that we have some Averna employees in the forum, and I'm hoping someone may be able to shed some light on what became of it - including someone who helped us with our implementation of iVVivi (though I haven't seen him on the forums in many moons).

-

I'm very interested in the responses to this. We're currently looking into migration of existing TestStand systems either back to pure LabVIEW or to a home-grown or other architecture. TestStand has become such a pain in the butt for us that we're planning on purging it from our test systems.

I miss the days of Test Executive (TestStand's predecessor). It was simple and easily customizable for LabVIEW programmers. Even "iVVivi" (created by CalBay - Now Averna??) was better than TestStand for our needs (though lacking in some areas), but iVVivi wasn't open source and it seems to have disappeared from existence.

It would have been nice if NI had made the old Test Executive code open source, but I'm sure it would have hurt the sales of TestStand (although I'm sure TestStand is doing a pretty good job of that by itself).

Sorry, I didn't mean to hijack - I'll stop right now as I could easily go on a tirade about my experiences with TestStand.

-

On 2/8/2021 at 10:49 AM, crossrulz said:

(NI has strictly stated we should not call it "CE")

Did they give a reason? I'm betting it has something to do with "Microsoft CE" being a thing, and they don't want to even remotely draw any negative attention from Microshaft.

-

I had heard that they were entertaining the idea of supporting Discover... but even fewer people use it.

-

AutoIt is the first tool that comes to mind. I'm not familiar with it personally, but I know some people who have used it in the past.

I don't know if there are any issues using it with LabVIEW, but I know it has been used to automate control of other types of Windows applications.

-

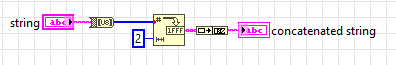

Here's an example of converting a text string to bytes to an "ASCII Hex" string.
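For anyone who wants the same conversion outside of LabVIEW, here is a rough text-language sketch in Python. It is not a transcription of the image above; the function name, the space separator, and the uppercase formatting are my own assumptions.

```python
def text_to_ascii_hex(text: str, separator: str = " ") -> str:
    """Convert text to bytes, then render each byte as two uppercase hex digits.

    The name, separator, and uppercase output are illustrative assumptions.
    """
    raw = text.encode("ascii")  # raises UnicodeEncodeError on non-ASCII input
    return separator.join(f"{byte:02X}" for byte in raw)


print(text_to_ascii_hex("LabVIEW"))  # prints: 4C 61 62 56 49 45 57
```

Passing an empty separator gives one continuous hex string instead of space-delimited bytes.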

-

Another SVN user here (With TortoiseSVN as standalone and also with an SVN server). Was introduced to it years ago and have had no reason yet to move to any others.

I like it for its (relative) simplicity when compared with others.

For LabVIEW, you can configure it to use the LVCompare and merge tools, (there are some blurbs online you can search for that tell you how to configure it).

The drawbacks I've found (in my current employment) come from a lack of organization in the repository (poor pre-planning). They use one repository for EVERYTHING, it's gotten huge, and it wasn't planned to make the best use of the branching/tagging functionality.

Another one is searching this HUGE repository I have to work with. I've found no easy way to search for files/folders/etc. My workaround for this is to use the TortoiseSVN command line and dump an SVN list to a file - then search the file to get a relative location for what I'm looking for.
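That workaround could be scripted roughly like this. It's only a sketch, assuming the `svn` command line client is on the PATH (it's an optional component of the TortoiseSVN install); the function names and the sample paths are made up for illustration.

```python
import subprocess
from pathlib import Path


def dump_svn_listing(repo_url: str, listing_file: Path) -> None:
    """Write a recursive `svn list` of repo_url to listing_file.

    Assumes the `svn` command line client is installed and on the PATH.
    """
    result = subprocess.run(
        ["svn", "list", "--recursive", repo_url],
        capture_output=True,
        text=True,
        check=True,
    )
    listing_file.write_text(result.stdout, encoding="utf-8")


def search_listing(lines, term):
    """Return the repository-relative paths that contain term (case-insensitive)."""
    needle = term.lower()
    return [line.strip() for line in lines if needle in line.lower()]


# Hypothetical listing lines, standing in for the dumped file's contents.
sample = ["trunk/", "trunk/drivers/dmm.vi", "tags/v1.0/Drivers/DMM.vi"]
print(search_listing(sample, "dmm"))
```

With a real repository you would call `dump_svn_listing` once (it can take a while on a huge repo), then run `search_listing` against the lines of the saved file as often as needed without hitting the server again.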

-

You could also create your own VI that establishes a connection to the database where the credentials are stored as constants and remove the block diagram. To do this, you have to create a project, then a source distribution with that VI (always included). Then, in the settings for that VI in the distribution, you select the option to remove the block diagram. This is currently the most secure method of hiding LabVIEW source that I'm aware of.

The only problem is that if the credentials ever need to be changed, you have to change it in the source VI, re-build the source distribution and then send the new VI to your students.

-

On 1/6/2021 at 12:47 PM, ShaunR said:

That's not very American. Where's the guns?

Sorry, I missed that detail. The LabVIEW program automates the targeting and actuation of firearms.

(To the FBI/NSA agent monitoring this post - this is what is known as a joke).

-

My LabVIEW FPs are color schemed ONLY in red, white, and blue, with animated U.S. Flag GIFs or Bald Eagles used in each custom control.

But my BDs are bloated to 3X my monitor size...

-

Shaddap-a you face!

-

On 12/27/2020 at 7:58 AM, Mefistotelis said:

I actually don't care enough for LV to get triggered by the first sentence. But the last one... 😡

I agree, I have garnered great disdain for Winblows over the years as far as the negative impacts to our testers from updates mandated by IT departments, obsolescence, the pain to install unsigned drivers, just to name a few. I would hate to see NI stop support for Linux as it has been growing in popularity and getting more user friendly.

Linux is a great and stable platform, though not for the faint of heart. It takes more effort and time to build the same thing you could do in shorter time with Windows. However, if LabVIEW were open source and free, you could theoretically build systems for just the cost of time and hardware. I've been wishing over the years that they would support LabVIEW on Debian-based systems as well.

I've created two Linux/LabVIEW based setups in the past and never had the issues I've run into with Windows. Yes, it took more time and effort, but as far as I know - the ones I created (circa 2004-5) have been working reliably without issue and haven't required any troubleshooting or support since their release. One is running Mandrake and the other an early version of Fedora.

-

New LAVAG Rank System?

in Site Feedback & Support

Posted · Edited by Bryan

That's a lot of participation awards...

I'm surprised an Admin hasn't chimed in yet to let us in on the new hotness.