-

Posts

633 -

Joined

-

Last visited

-

Days Won

4

Posts posted by Gary Rubin

-

We're running standard LV8.6 EXEs on Windows Embedded Standard on compact flash drives. I didn't do the install, but I think that we followed some of NI's directions with little trouble.

-

You used to be able to get this close to runways here in the states if you were fortunate enough to live near a major airport. I spent a few years in Denver and (the now defunct) Stapleton IA had a hotspot to watch planes. It was a place to kill a Friday or Saturday night and drink. On Friday nights a 747 would be doing touch-and-goes for hours. Sadly the modern age of terrorism won't allow much of that any more.

You can still do that at Reagan National Airport. There's a park just north of the airport that the planes overfly as they take off and land. You can hear the vortices in the air after the incoming planes fly over.

-

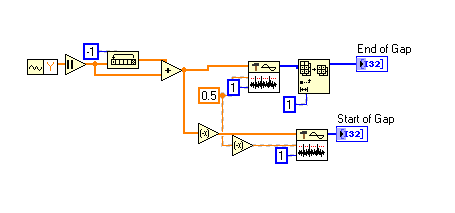

Your comments got me to look closer at my simulated data. I knew my real data would be noisier, but it will also look more like a sine wave than the triangle wave I had. I bumped up the sample frequency and now it looks better. Sorry to say though that the contributed ideas won't work on the new data. I'm back to my original plan of finding peaks and differentiating. By finding the time between peaks in the differentiation I can get the gaps. OK anybody up for round 2?

George

The effectiveness of each of these depends on your SNR.

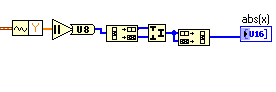

How about a simple moving average (SMA) on the absolute value of the data and look for the result to be below some threshold? The size of the SMA window would depend on the noisiness of the data. That uses a for loop, unfortunately.

A slight variation would be to traverse the data array and check for the last N elements all below a threshold. Again, this uses a for loop.

Or a combination of my original idea and ShaunR's... Do the diff (or sum) with the array shift, compare to a threshold, then convert from boolean to 0/1.
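For anyone who thinks better in text code, here is a rough Python sketch of the first two ideas (the SMA on the absolute value, and the "last N elements all below a threshold" check). The function names, the trailing-window convention, and the handling of the first few samples are my own assumptions for illustration, not anything from the actual VIs, and the window size and threshold would have to be tuned to the noise in the real data.

```python
from itertools import accumulate


def gap_mask_sma(samples, window, threshold):
    """Return 1 where the trailing moving average of |x| is below threshold.

    Assumption: near the start, where fewer than `window` samples exist,
    the average is taken over the samples available so far.
    """
    rectified = [abs(x) for x in samples]
    # Prefix sums give each window sum with one subtraction per sample.
    prefix = [0.0] + list(accumulate(rectified))
    mask = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        mean = (prefix[i + 1] - prefix[start]) / (i + 1 - start)
        mask.append(1 if mean < threshold else 0)
    return mask


def gap_mask_last_n(samples, n, threshold):
    """Return 1 at index i when the last n samples ending at i all have |x| < threshold."""
    mask = []
    run = 0  # length of the current run of below-threshold samples
    for x in samples:
        run = run + 1 if abs(x) < threshold else 0
        mask.append(1 if run >= n else 0)
    return mask


if __name__ == "__main__":
    data = [1.0, -1.0, 1.0, 0.0, 0.05, -0.02, 0.0, 1.0, -1.0]
    print(gap_mask_sma(data, window=3, threshold=0.1))
    print(gap_mask_last_n(data, n=3, threshold=0.1))
```

Both still loop over the data, as noted above. The second version is stricter: a single noisy sample above the threshold resets the run, while the SMA version can absorb it if the window is long enough.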

-

Nothing personal

That's alright. I reported Yair's original post as a test.

Admin edit: Yep, that was reported successfully by Gary Rubin with the following text: "testing..."

-

I was able to report a post.

I'm also the only non-premium member who's commented on this thread. Coincidence?

Admin edit: this post was reported successfully by Phillip Brooks with the following text: "Test of reporting as a non-premium member."

-

No, I just enjoy typing for the finger exercise it gives me

Thanks Chris. I almost ended up with tea all over my monitor.

-

I bet it isn't

Well, you'd have to compare apples to apples. The input into the threshold peak detect looks the same as your output.

-

My impression is that you have to write your number-crunching code in C using CUDA, and this just gives you a way to call that code from LabVIEW. Not being a C programmer, I was hoping for something that would let me target the GPU with my native LabVIEW code (or at least something that looks like native LabVIEW code).

-

Pun

in LAVA Lounge

-

We once had a data recorder fall out of a rack because the slides weren't installed right. After that, I felt like I could tout that the system had been drop-tested from a height of 3 feet.

-

When I've got a big bug late in the afternoon, I don't worry too much about it because I just know I'll dream of the solution... Most times, I solve these bugs the next day before my colleagues even arrive at work... I don't remember feeling tired, but I do feel I work too much!

My solutions usually come to me in the shower.

-

I'm sure I'm not alone in being grateful that ShaunR changed his profile picture from the dancing baby to whatever it is now.

-

GPIB can be pretty slow. Is it fast enough for your control loop?

-

I'm for sure in one of those chaotic/'agile' environments where nobody pays you for doing any internal documentation of your code AND the requirements are changed any and every time you talk with customers.

I'm in that boat too.

I get the impression that those of us who use LabVIEW for data acquisition and processing operate in a very different world than those doing ATE. In ATE, I would imagine that the system requirements are pretty clear from the outset. You know your process, and you know the manner in which you want it automated.

For data processing applications, the development process may be much more iterative, as you may not know what has to happen in step 3 of the processing until you can see the results of step 2. Likewise, the user may not know what his UI requirements are until he sees some initial results. This is especially true when using LabVIEW to develop new processing algorithms.

-

Can't wait to try it! Thanks!

-

Scott is putting Info-LabVIEW back together and you should have now received an e-mail rescinding your unsubscription.

Yep, I got that one. Thanks.

-

Thanks Paul,

I was wondering why I was suddenly unsubscribed.

Gary

-

Wow, I really killed this thread. That's a bit scary. Wonder if I could use this power for good?

It wasn't you. Activity on this thread ebbs and flows.

-

What is your subscription mode set to for that topic? Immediate or Delayed?

Immediate.

-

My email notifications include the following:

There may be more replies to this topic, but only 1 email is sent per board visit for each subscribed topic. This is to limit the amount of mail that is sent to your inbox.

This is not accurate; I just got 5 updates for the same topic.

-

That definitely should be part of RCF's competitor, the Wrong-Klik Framework, with such plugins as "Finish this project", "Get the idea", "Solve this problem", "Earn money for me" and the less spectacular but still effective "Wire diagonally", "Zoom out", "Measure wires cross-talking" or "Ask on LAVA why this is not working". Some of us will also welcome "Generate post for alpha thread".

Don't forget "make coffee" and "get donuts"

Is this the most useful Express VI ever?

in LabVIEW General

I find that they often do too much. The few times I've used them, I've immediately converted them into a subVI, then stripped out all the cases and conditions that I didn't want or need. I guess I use them as templates/examples.