Posts

216

Days Won

3

torekp last won the day on August 29 2021

torekp had the most liked content!

Profile Information

Gender

Male

Location

SE Michigan


LabVIEW Information

Version

LabVIEW 2012

Since

2001



Thanks. I'm using 64-bit LabVIEW and a 64-bit Visual Studio executable. LabVIEW runs first and creates the memory map, but in C++ I don't know how to "Open" a map other than with "Open or Create". Since the map name is given, I figured "open or create" sounded appropriate.
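On the "open" versus "open or create" question: shared-memory APIs generally offer a separate open-only call that fails if the named mapping does not exist, which is usually what the second process wants. In Win32 that call is `OpenFileMapping` (as opposed to `CreateFileMapping`), and Boost.Interprocess has an `open_only` tag alongside `open_or_create`. The sketch below shows the same distinction with Python's standard `multiprocessing.shared_memory` module, purely to illustrate the semantics. The map name and size are made up, and this is not the code from the thread.

```python
import os
from multiprocessing import shared_memory

# Hypothetical map name; in the thread the name is chosen by the LabVIEW side.
MAP_NAME = f"demo_map_{os.getpid()}"

def create_map(name: str, size: int) -> shared_memory.SharedMemory:
    """Creator side: raises FileExistsError if the name is already taken."""
    return shared_memory.SharedMemory(name=name, create=True, size=size)

def open_existing(name: str) -> shared_memory.SharedMemory:
    """Consumer side: open only; raises FileNotFoundError if nobody created it."""
    return shared_memory.SharedMemory(name=name, create=False)

def demo() -> bytes:
    """Create a map, write to it, then read it back through an open-only handle."""
    owner = create_map(MAP_NAME, 64)
    try:
        owner.buf[:5] = b"hello"
        reader = open_existing(MAP_NAME)
        try:
            return bytes(reader.buf[:5])
        finally:
            reader.close()
    finally:
        owner.close()
        owner.unlink()  # removes the segment on POSIX systems
```

An open-only call has the side benefit of failing loudly when the two sides disagree on the name, instead of silently creating a second, empty map.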

-

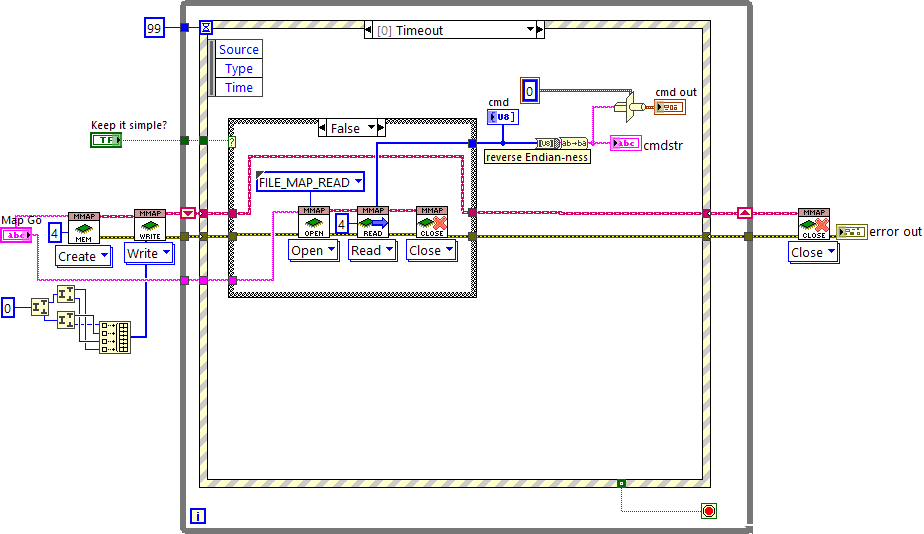

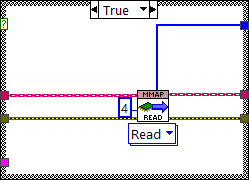

Update: I compiled an executable with ShaunR's help. My LabVIEW VI (LV 2017) communicates fine with a simple C++ program, but my executable doesn't. I found a workaround (turn the "keep it simple" boolean off to enable it). Still, I'd like to understand why. The C++ project in sharmemtest.zip uses Microsoft Visual Studio 2015 and Boost (version 1.76.0, if it matters).

Attachments: sharmemtest.zip, shmem_testr.vi

-

Web service for noobs like me

torekp replied to torekp's topic in Remote Control, Monitoring and the Internet

Computers at the devices won't have any WWW connection, only local. And the GUI computer will normally not connect more than locally either. So I'm not too worried about security, although now you can explain why I'm an idiot as well as a noob 😬

Web service for noobs like me

torekp replied to torekp's topic in Remote Control, Monitoring and the Internet

Thanks! If not LabVIEW, Python is probably my least-incompetence path forward. Any suggestions on a good starting point? Maybe a canned websocket example that I could adapt?

I was working through a Python HTTP communication tutorial (https://aiohttp-demos.readthedocs.io/en/latest/tutorial.html#views) when I had to disable the NI Application Web Server to proceed. And then I thought, what the **** am I doing? Maybe I should take the free (well, prepaid) gift of a working web server.

Here's my task. A central HQ computer will have a GUI that monitors five machine stations, each of which has its own computer. Roughly every 10 ms (negotiable), each station sends a report consisting of two arrays, the larger being 2048 data points, the other much smaller. Whenever HQ feels like it, HQ can tell a station to start or stop (its computer stays on). A local IP connection is used, with a router at each end. There is also a Raspberry Pi with its own IP address at each station's router, which can send camera frames to HQ. The station computers use Python and C++ to do their work, not counting whatever needs to be added to communicate with HQ.

Your advice, please? Should I use LabVIEW? On both ends or just at HQ? And which, if any, of the helpful add-ons suggested by Hooovahh should I use?
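Whatever transport ends up carrying the reports, each one has to be serialized somehow. A minimal sketch of one way to frame a station report as bytes in Python follows, assuming float32 samples and a made-up header layout (sequence number plus the two array lengths). None of these choices come from the thread.

```python
import struct

# Assumed layout (not from the thread): little-endian header holding a
# sequence number and the two array lengths, followed by float32 samples.
HEADER = struct.Struct("<IHH")

def pack_report(seq, big, small):
    """Serialize one station report into a single bytes frame."""
    header = HEADER.pack(seq, len(big), len(small))
    body = struct.pack(f"<{len(big) + len(small)}f", *big, *small)
    return header + body

def unpack_report(frame):
    """Inverse of pack_report: returns (seq, big, small)."""
    seq, n_big, n_small = HEADER.unpack_from(frame, 0)
    values = struct.unpack_from(f"<{n_big + n_small}f", frame, HEADER.size)
    return seq, list(values[:n_big]), list(values[n_big:])

def link_load_bits_per_s(frame_bytes, reports_per_s, stations):
    """Payload bit rate arriving at HQ, ignoring protocol overhead."""
    return frame_bytes * 8 * reports_per_s * stations
```

With 2048 float32 points plus a hypothetical 16-value second array, each frame is 8264 bytes. At 100 reports per second from five stations that comes to roughly 33 Mbit/s of payload at HQ, before TCP/IP overhead.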

-

I'm not sure, but I think you can use the liblinear functions in https://github.com/oysstu/LabVIEW-libsvm (oysstu's package), and set the solver type to L2R_LR (not "...Dual"). The solver type is a parameter in the cluster "parameters" for liblinear_train.vi. At first glance, this seems to be doing one-class regression (which is what you want), rather than two-class classification.

-

As my avatar would say: Missed it by THAT much!

-

I searched for "DLL" in titles of posts to the forum on Application Builder etc, and got 0 results. Which is wrong -- I know because I have posted a topic with "DLL" in the title! What gives?

-

I left off an important fact about what I was doing, and apparently, doing wrong. After DAQmxWriteDigitalU32, I was doing DAQmxGetWriteTotalSampPerChanGenerated, DAQmxGetWriteCurrWritePos, and DAQmxGetWriteSpaceAvail. Those diagnostics told me how the problem proceeded, but they also helped cause it. Now that I commented them out, it has run for 17 hours, whereas mean time to failure was <1 hour when the diagnostic commands were used. Ever see the movie Mystery Men? One of the heroes, Invisible Boy, has his super-power only when no one is looking. My cDAQ task can do its job, as long as I don't look at it.

-

Thanks guys. I already went to a direct connection from computer to cDAQ, and that seemed to lengthen the time between occurrences of this error, although it's so intermittent that it's hard to tell. I've never heard of NAGLE before, and on reading about it, it sounds like a likely suspect. I'll see if I can finagle something. (Hmm, I probably shouldn't pun-ish people for answering my questions!)

Edit: Fin Nagle didn't do me any favors. Network traffic is 416 Kbps sent, which makes sense for my data plus a relatively small header per packet. When I watch Task Manager as it crashes, the fall-off of traffic is a cliff, not a blip, when error -200621 happens. I have yet to observe another -200292, but that could show me more of a blip; it will keep writing data for about 10 more cycles before the crash. That is, if I can figure out how to make the graph show multiple points per second (and yet not scroll away too fast).
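For readers who haven't met Nagle's algorithm: on a TCP socket that your own code owns, it is switched off with the `TCP_NODELAY` option. A minimal Python sketch is below. Whether the NI-DAQmx driver exposes anything equivalent for its Ethernet link to the cDAQ is not stated in this thread, so treat this only as an illustration of the option itself.

```python
import socket

def make_low_latency_socket() -> socket.socket:
    """Create a TCP socket with Nagle's algorithm disabled."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY=1 sends small writes immediately instead of coalescing them.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock

def nagle_disabled(sock: socket.socket) -> bool:
    """Report whether TCP_NODELAY is currently set on the socket."""
    return sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0
```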

-

I've got a cDAQ-9185 chassis with four 9477 digital output modules, connected to my computer by Ethernet, writing samples at under 3 kHz (almost 150 samples, every 50 ms). The task keeps giving error -200292 (could not write samples, not enough space), but the real problem is that samples stop being generated. That happens when my DAQmxWriteDigitalU32 call (in LabWindows/CVI) takes a long time (~100 ms) to return. It seems that the attempted write doesn't go through until it's too late. I have "Do Not Allow Regeneration" set, for what I think are good reasons, so when all samples in the buffer have been generated, the task quits.

So, I suspect that Windows and/or the Ethernet port driver are taking their sweet time. Questions: is there a way to have Ethernet-based control without massive timing jitter? Or is it "Abandon all hope, ye who enter"? Would a USB-connected cDAQ chassis perform more deterministically?
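The numbers in the post give a rough stall budget. With regeneration disallowed, the output keeps draining the buffer while a write is blocked, so the buffer must already hold at least as many unplayed samples as the stall will consume. A back-of-the-envelope sketch in Python, using the figures quoted above and ignoring the chassis FIFO and any driver-side buffering (a simplifying assumption):

```python
def samples_drained(samples_per_write: int, write_period_ms: int, stall_ms: int) -> float:
    """Samples the output consumes while a write call is stalled."""
    return samples_per_write * stall_ms / write_period_ms

def survives_stall(buffered_ahead: int, samples_per_write: int,
                   write_period_ms: int, stall_ms: int) -> bool:
    """True if the samples already buffered outlast the stall."""
    return buffered_ahead >= samples_drained(samples_per_write, write_period_ms, stall_ms)
```

At 150 samples per 50 ms, a 100 ms stall drains 300 samples, so a task that stays only one write (150 samples) ahead would run dry, while one that stays three writes ahead would not.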

-

Wow, conditional auto-indexing in loops has been around since LabVIEW 2012? How the heck did I not notice this?

-

DLL functions or shared variables? Or something else?

I have a LabVIEW 2014 64-bit executable (or I can build a DLL) that runs one piece of equipment, the X-ray. The other engineer has a large LabWindows/CVI 2015 + MS Visual Studio 2012 (C++) executable that runs everything else. I want the LabVIEW code to be a slave of the CVI code, accepting commands to turn the X-ray on or off, reporting failures, and the like. Translating the X-ray code into C++ would be possible in principle, but not fun.

Shared variables look easy, but I'm kinda scared of them. I would define all the shared variables in my LV code, since I'm more familiar with LV, then use them in both. There's a thread in here called "Shared Variable Woes", so maybe I should be scared.

As an alternative, I tried building a proof-of-concept DLL in LabVIEW and calling its functions from CVI/C++. It works, but it's kinda clunky. (I'm attaching it below in case you want to play, or advise.) Your advice would be appreciated.

Attachment: XrayDLL.zip

-

For what it's worth, for some classifiers (PLSDA for example), I've gotten better results by using a sequence of binary classifiers, rather than just doing all-vs-all. Dunno if this applies to SVM, but I suspect it could. Might be worth the effort.