Search the Community

Showing results for tags 'labview'.

-

https://www.winemantech.com/blog/testscript-python-labview-connector From release blog... Summary: Test engineers typically add manual-control screens to LabVIEW applications. While it would be helpful to repetitively execute varying parts of those manual-control screens, LabVIEW is not optimal for dynamic scripting, or on-the-fly sequencing with flow control. (Imagine editing the source code of Excel each time you wanted to create a macro.) And while Python is built for scripting, it requires advanced custom coding to interface with LabVIEW. Announcing TestScript: a free Python/LabVIEW connector from Wineman Technology that is simple to add to your existing LabVIEW application and abstracts complex Python coding, allowing you to easily use Python to control LabVIEW or vice versa.

-

Can anyone please tell me what a DVR (Data Value Reference) is? I would like to know in which situations it is used and what advantages it offers. I am really confused about this topic. If anyone has code that uses DVRs, kindly share it with me. Thank you.

- 14 replies

Tagged with: dvr, ni software (and 2 more)

-

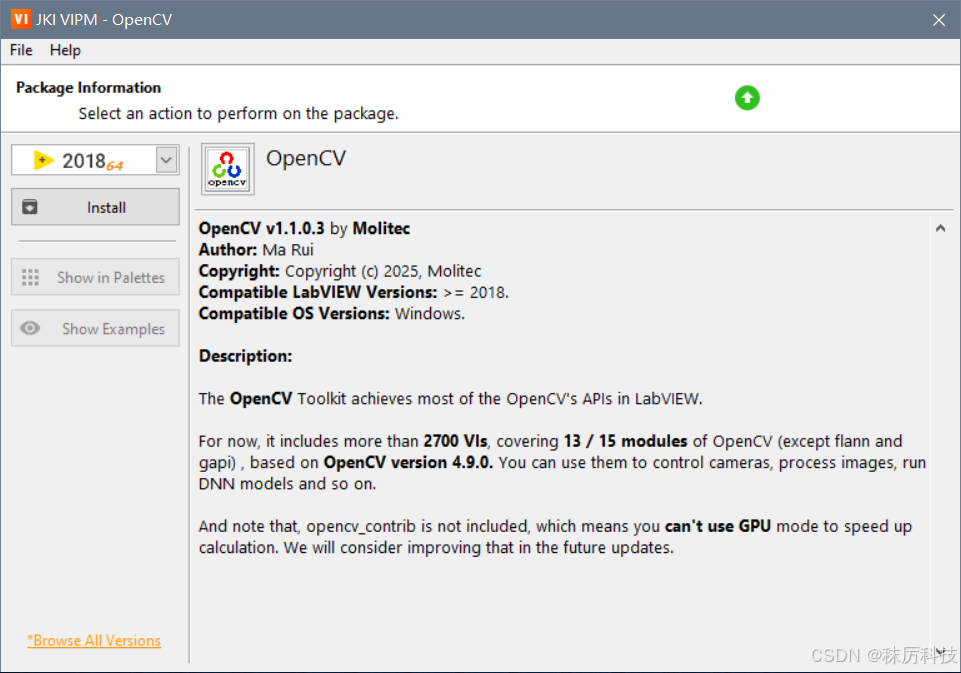

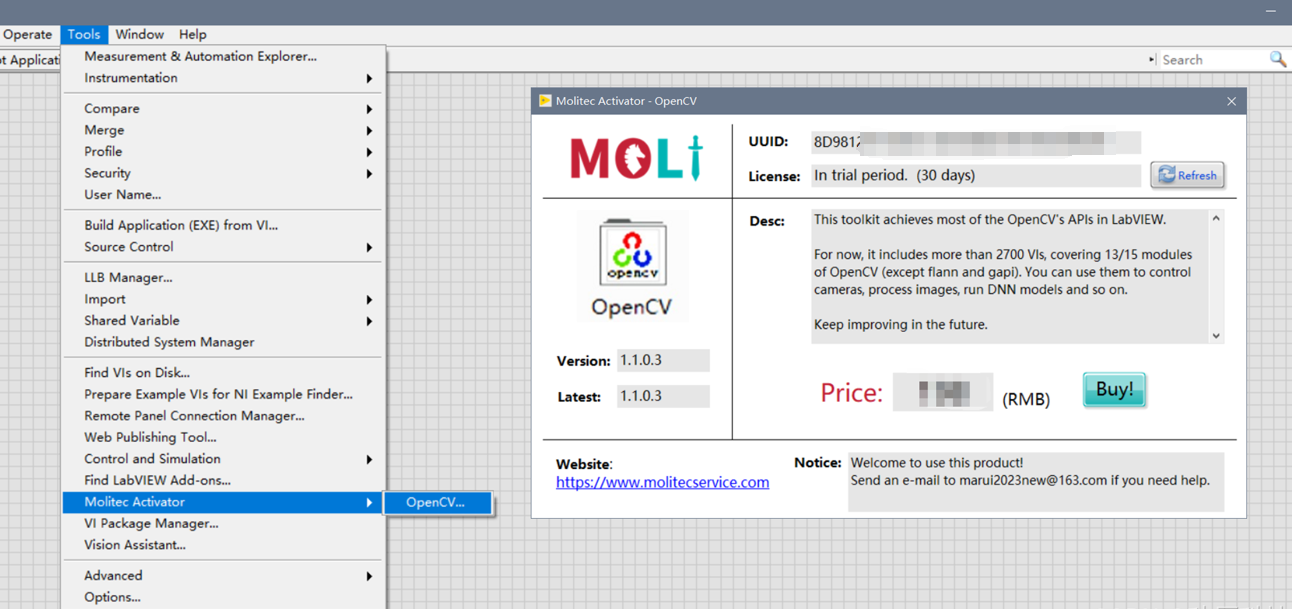

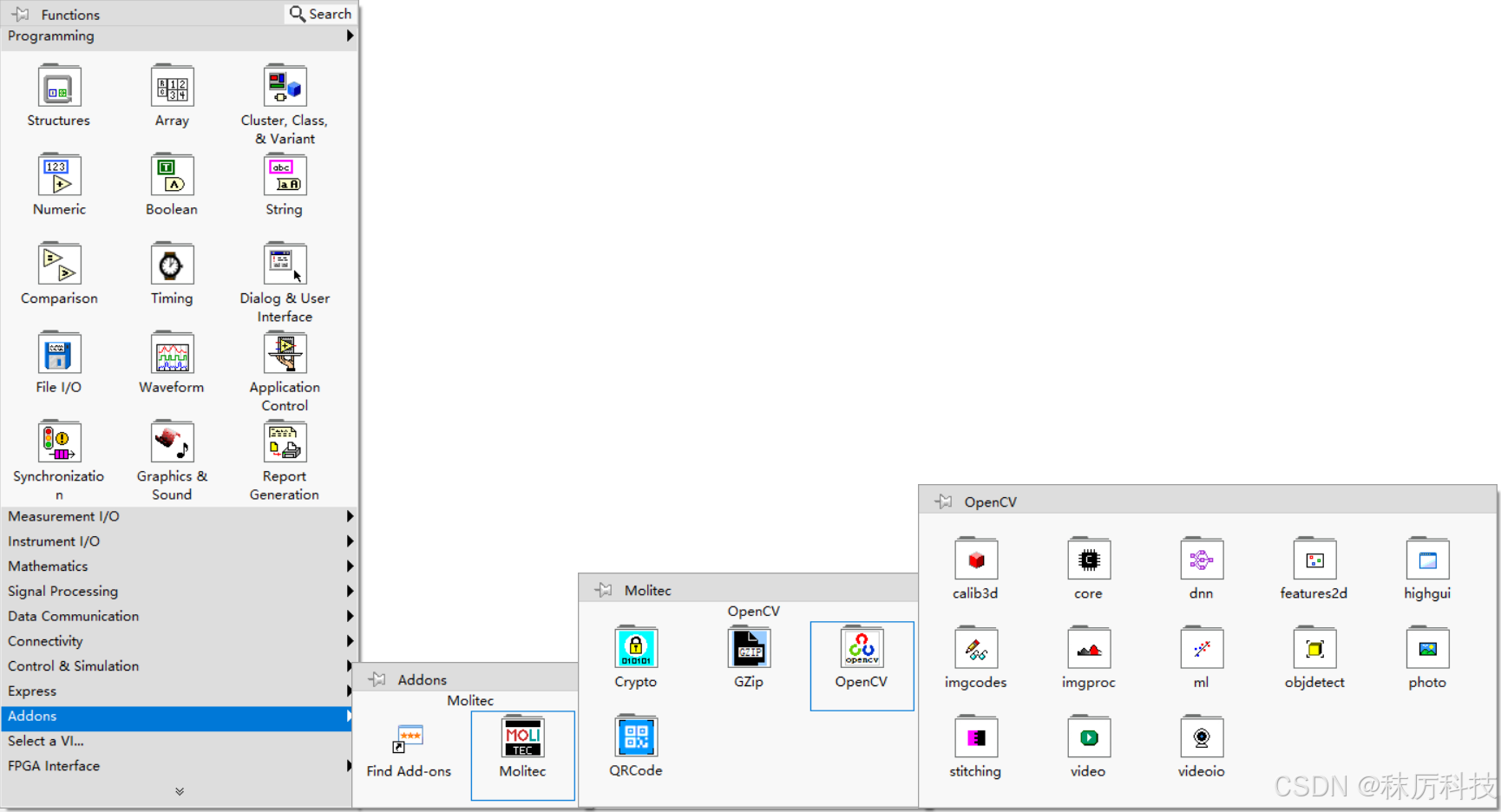

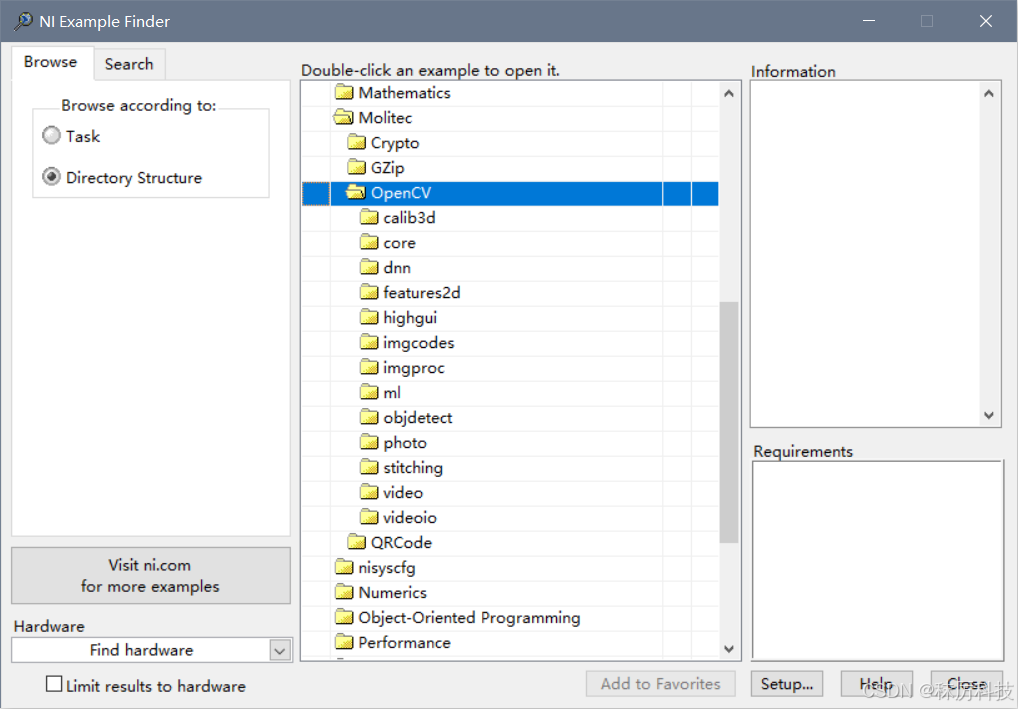

Hello everyone, I developed an add-on toolkit for LabVIEW that wraps most of OpenCV's APIs. It includes more than 2700 VIs, covering 13 of OpenCV's 15 modules (all except flann and gapi). You can use it to control cameras, process images, run DNN models and so on. Welcome to my CSDN blog to download it and give it a try! (Paid, with a 30-day trial.) Requirements: Windows 10 or 11, LabVIEW >= 2018, 32- or 64-bit.

-

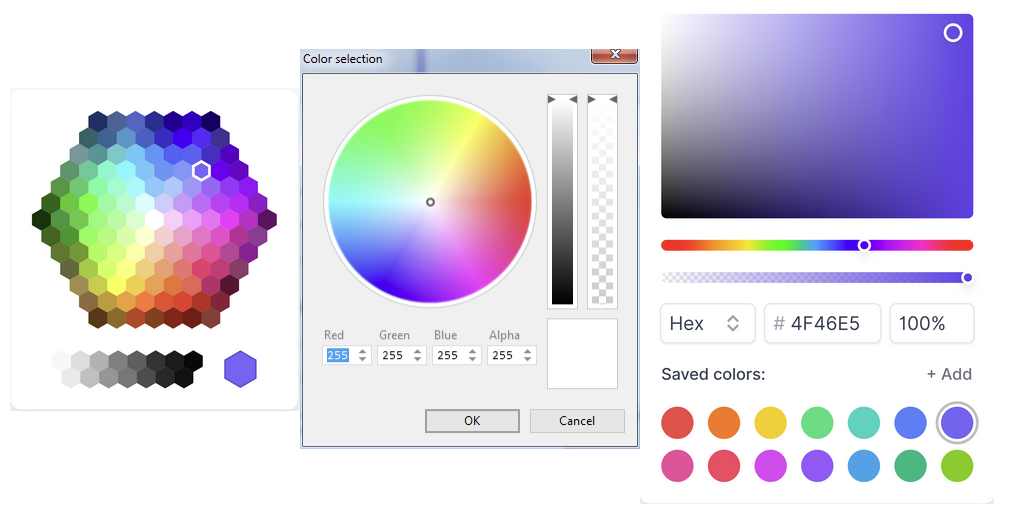

I am using LabVIEW 2020. LabVIEW supports the color box control only as a square. I want a hexagon, color palette, color wheel, etc. Has anyone built GUI controls like these? Samples S

-

Hello, I need to create a JSON document with the following structure: { "Value1": { "Build": { "1": { "t_inst": { "Type": "Gold", "Key": "15", "size": "big" }, "1_avg": { "Type": "Green", "Key": "11", "size": "big" }, "2_avg": { "Type": "Blue", "Key": "21", "size": "big" } }, "2": { "t_inst": { "Type": "Gold", "Key": "15", "size": "big" }, "1_avg": { "Type": "Green", "Key": "11", "size": "big" }, "2_avg": { "Type": "Blue", "Key": "21", "size": "big" } } } }, "Value2": { "Build": { "1": { "t_inst": { "Type": "Grey", "Key": "22", "size": "small" }, "1_avg": { "Type": "Yellow", "Key": "8", "size": "big" }, "2_avg": { "Type": "Blue", "Key": "21", "size": "big" } } } } } I've searched several forums, but none of the examples I've seen have as many layers as I need. Could someone help me?
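One way to read the structure above is as nested key/value containers: every level is a set of named entries whose values are themselves containers, until the innermost level holds three strings. The sketch below uses Python rather than LabVIEW, purely to illustrate that nesting. The helper names `entry`, `standard_build` and `build_document` are made up for this example and do not come from any LabVIEW JSON toolkit.

```python
import json


def entry(type_, key, size="big"):
    """Innermost level: one record with the three string fields."""
    return {"Type": type_, "Key": key, "size": size}


def standard_build():
    """The Gold/Green/Blue block that appears twice under Value1."""
    return {
        "t_inst": entry("Gold", "15"),
        "1_avg": entry("Green", "11"),
        "2_avg": entry("Blue", "21"),
    }


def build_document():
    """Assemble the full document level by level, outermost last."""
    value1_builds = {"1": standard_build(), "2": standard_build()}
    value2_builds = {
        "1": {
            "t_inst": entry("Grey", "22", size="small"),
            "1_avg": entry("Yellow", "8"),
            "2_avg": entry("Blue", "21"),
        }
    }
    return {
        "Value1": {"Build": value1_builds},
        "Value2": {"Build": value2_builds},
    }


if __name__ == "__main__":
    # Serialise with indentation so the layers are easy to compare by eye.
    print(json.dumps(build_document(), indent=2))
```

The point of the sketch is that the keys at the "Build" level ("1", "2") and below are data, not fixed field names, so a container keyed by string at each level tends to map onto this shape more naturally than a fixed record type.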

-

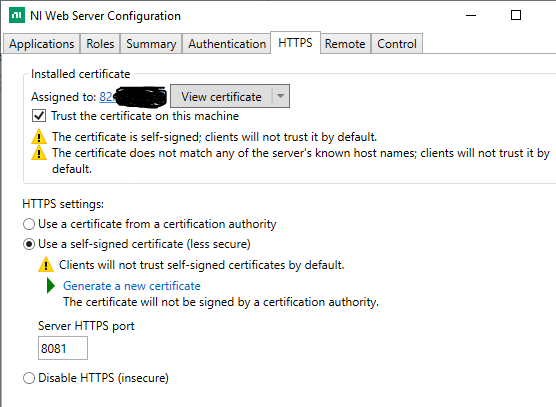

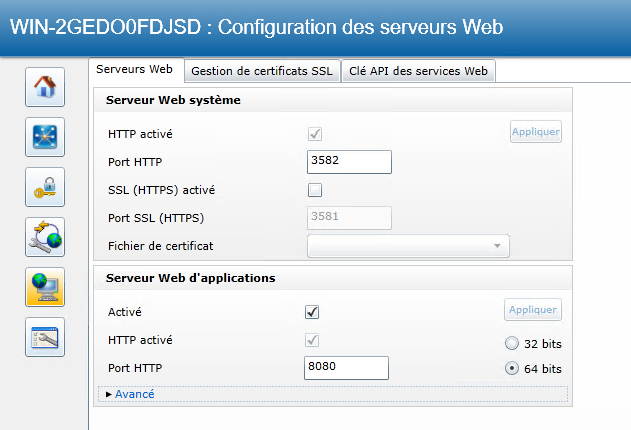

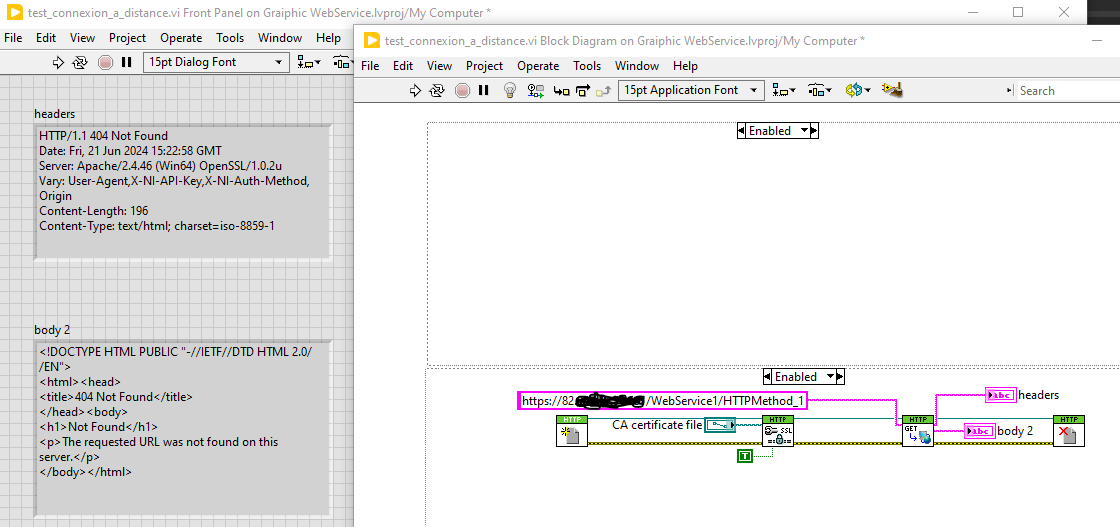

Hello, I'm trying to run a LabVIEW web service over HTTPS. I have published my web service on a Windows server. I tested it from another machine (the client) and everything works correctly. Then I wanted to enable HTTPS on the web service, so I created a self-signed certificate configured with the server's IP address and installed it on both the server and the client machine (my computer). From the client I can reach the server, but an error message tells me that it can't find the URL of my web service method. It's as if my web service didn't exist, yet it works if I use HTTP. Below are the images of my web service configuration: I'm also displaying the error I get when I make the request: Thank you in advance for your reply.

Tagged with: labview, webservice (and 1 more)

-

I want to make the front panel transparent based on the background color, to remove the unwanted parts. When I set the window behavior of the VI to floating, the program works fine. But when I set the window behavior of the VI to modal, a border remains visible and the panel is not fully transparent. Is there a good solution? The test VI is in the test1.rar file. test1.rar

-

View File 1-Wire.zip This toolset gives access to all the 1-Wire TMEX functionality. I was able to access 1-Wire memory with this library. It has all the basic VIs needed to communicate with any 1-Wire device on the market. It needs to be used in a project so that the 64-bit or 32-bit .dll is selected automatically. It works with both the USB and the serial 1-Wire adapters. Submitter Benoit Submitted 06/01/2018 Category Hardware LabVIEW Version

-

Hello, I would like to generate a manifest to modify the configuration of an executable so that it launches as administrator. For that I followed the LabVIEW link : https://www.ni.com/docs/fr-FR/bundle/labview/page/lvhowto/editing_app_manifests.html However the command "mt.exe -inputresource:directorypath:applicationname.exe -out:applicationname.manifest" given by LabVIEW does not work for me. When I run the command: "C:\Program Files (x86)\Windows Kits\10.0.22621.0\x86\mt.exe" -inputresource: "C:\Users\user_name\Desktop\exe\Installer.exe" -out:Installer.manifest Here is what I get: Microsoft (R) Manifest Tool Copyright (c) Microsoft Corporation. All rights reserved. Thank you for your answer Translated with DeepL

-

View File MCP2221A library Here it is: the complete library for the MCP2221A, an I2C adapter and I/O in a single IC. I love that one. Let me know if you find any bugs. I tried to make the library as convenient to use as possible. Two versions are available: 32-bit and 64-bit. A small note: to open by serial number, enumeration needs to be activated on the device first (open by index, then enable the enumeration). This needs to be done only once in the lifetime of the device. PLEASE do not take ownership of this work, since it is not yours. You have it for free, but the credit is still mine... I spent many hours of my life making it work. Submitter Benoit Submitted 05/29/2018 Category *Uncertified* LabVIEW Version 2017 License Type BSD (Most common)

-

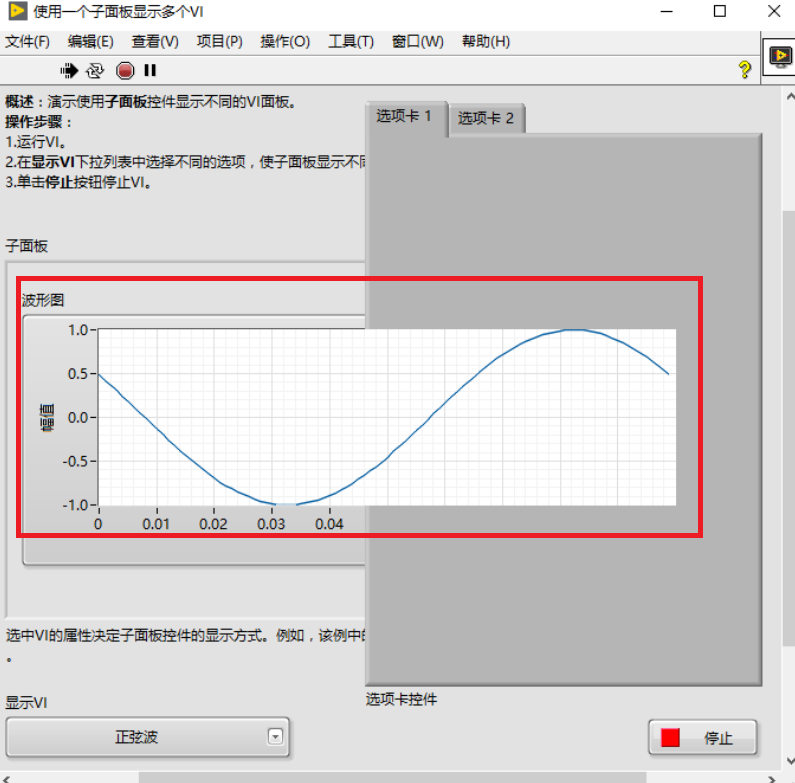

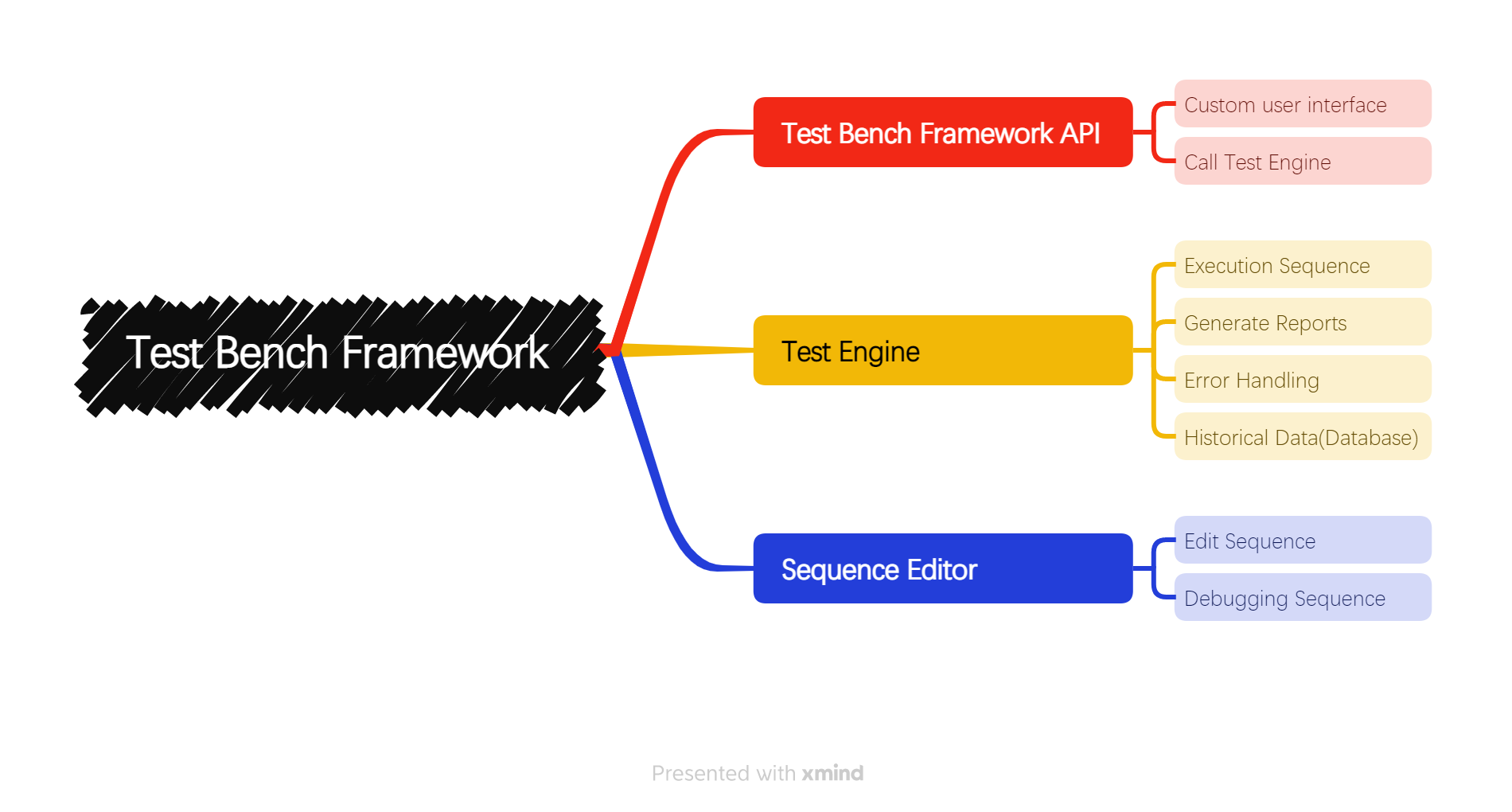

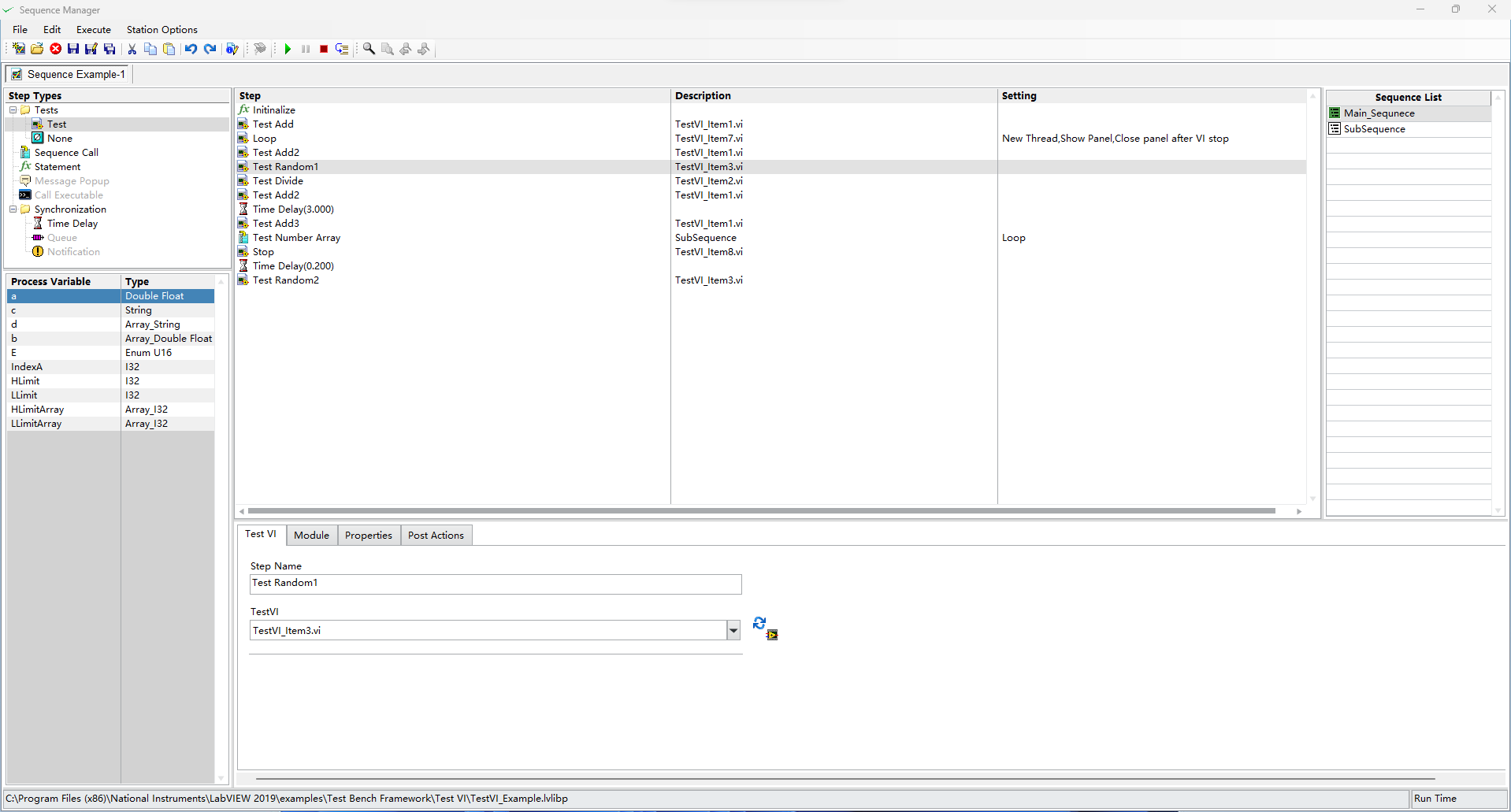

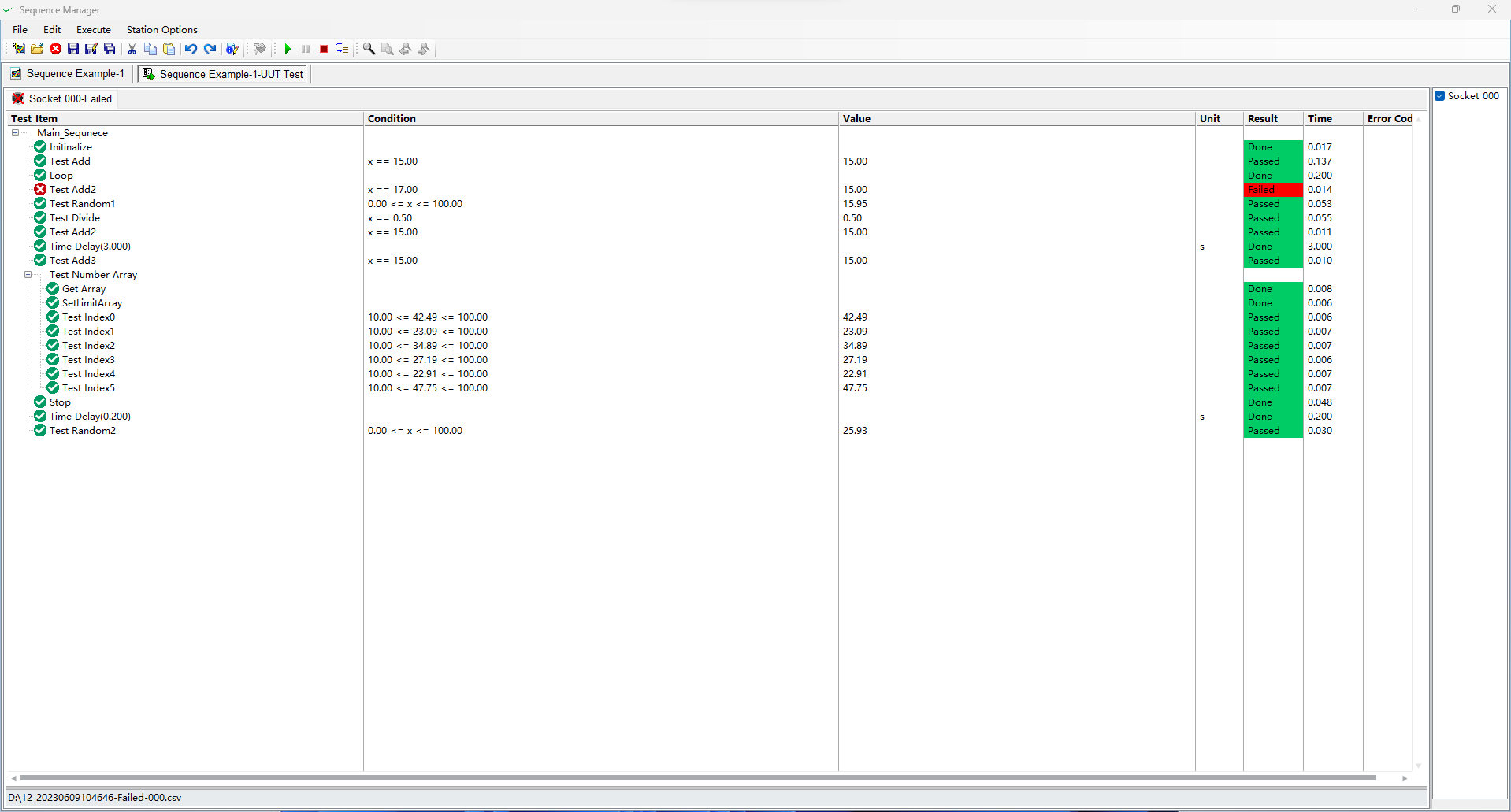

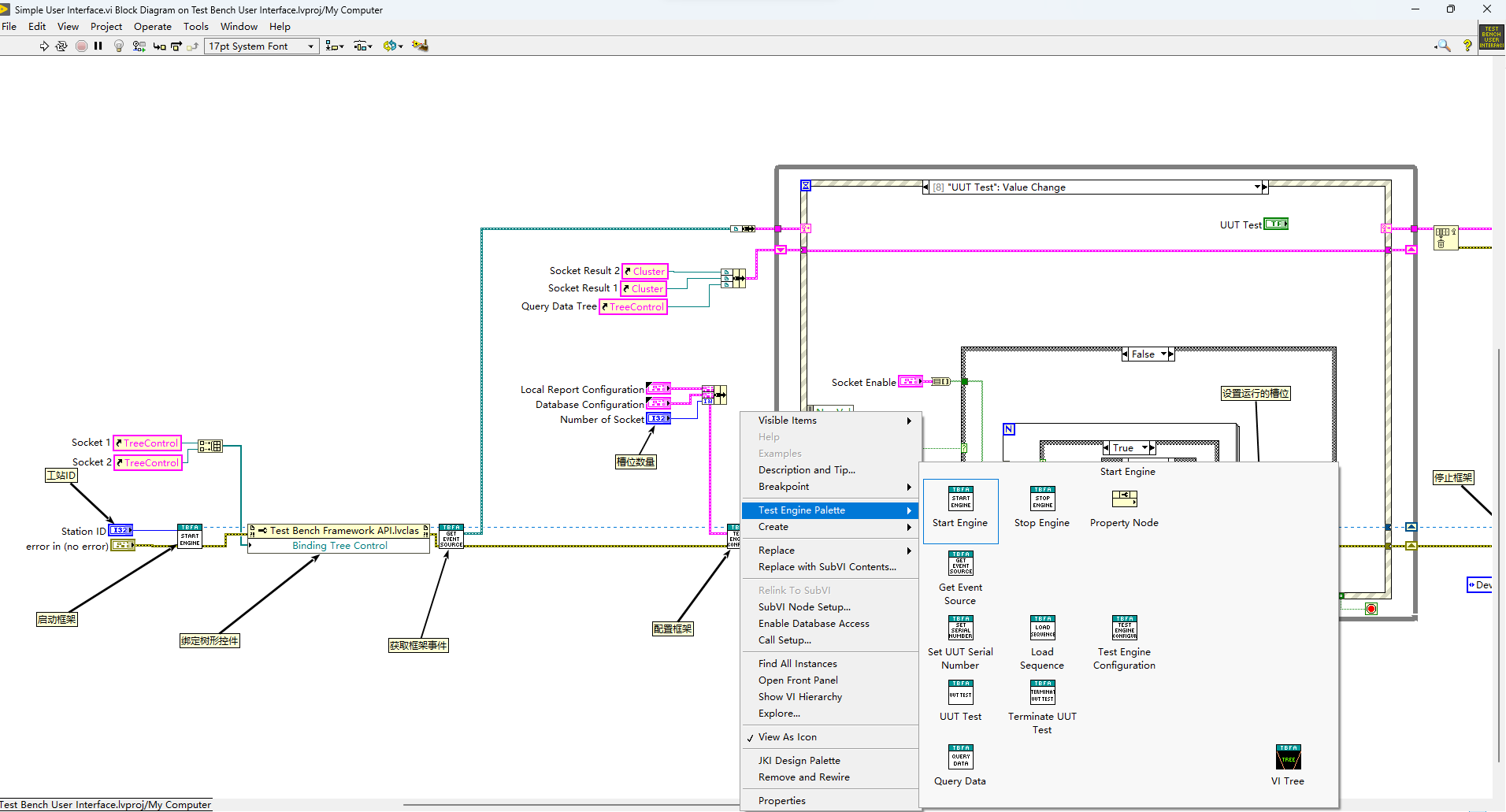

I used LabVIEW to develop a toolkit for ATE software. The toolkit is called "Test bench Framework" and includes a test sequence editor and a test engine. It can execute several different sequences in parallel. If you are interested in this toolkit, please contact me. Thank you! The toolkit is over 10 MB in size and cannot be published on VIPM, so I uploaded it to GitHub: Test-Bench-Framework. I used the TestStand icon inside my own sequence editor and wonder whether that raises any copyright issues. It is not commercially available yet.

-

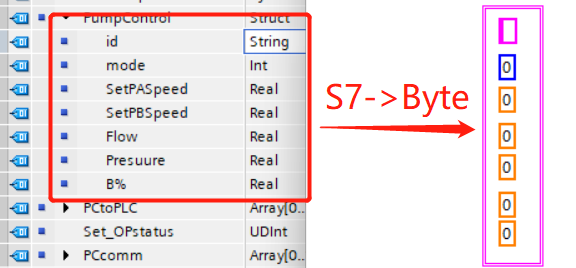

Through Siemens S7 communication I receive some data as raw bytes, but I don't know how to convert it into the corresponding cluster (struct) data in LabVIEW. It is well known that a bool in a TIA struct occupies one bit, whereas a bool in a LabVIEW struct occupies one byte. Has anyone dealt with this?
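The bit-versus-byte mismatch described above usually comes down to unpacking each bit of a packed byte into its own boolean and reading multi-byte values as big-endian, which is the byte order S7 PLCs use. The sketch below is in Python, not LabVIEW, and the struct layout (three bools in byte 0, one padding byte, a 16-bit signed integer in bytes 2 and 3) is a hypothetical example, not the poster's actual data block. The names `unpack_bools` and `parse_example_struct` are likewise invented for illustration.

```python
import struct


def unpack_bools(byte_value, count=8):
    """Expand one packed byte into booleans, bit 0 (least significant) first."""
    return [bool((byte_value >> bit) & 1) for bit in range(count)]


def parse_example_struct(raw):
    """Decode a hypothetical 4-byte layout:
    byte 0, bits 0..2 : start, stop, fault (packed bools)
    byte 1            : padding
    bytes 2..3        : speed, 16-bit signed integer, big-endian
    """
    start, stop, fault = unpack_bools(raw[0], count=3)
    (speed,) = struct.unpack_from(">h", raw, 2)
    return {"start": start, "stop": stop, "fault": fault, "speed": speed}


if __name__ == "__main__":
    payload = bytes([0b00000101, 0x00, 0x01, 0x2C])
    print(parse_example_struct(payload))
```

In LabVIEW terms the same idea would be splitting the byte into a boolean array and byte-swapping the numeric fields before bundling them into a cluster; the exact offsets have to come from the real data block definition.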

-

I'm looking for a way to automatically expand and collapse ring controls. Example: I have already seen a method where a mouse click is simulated using user32.dll when the mouse enters the control. I just want to know whether any better methods are available. I also have access to LabVIEW 2022 Q3, so if any new property or method has been added, kindly let me know! Thanks in advance!

-

Hi I am using the MPSSE I2C drivers over a USB FT232H device, communicating with BMP280. When I read the BMP280 device ID, it is supposed to return 0x58 but the DeviceRead vi returns 0xFF. I have used a logic analyser and can confirm that the device is actually sending 0x58, but the output from the read vi is 0xFF. Has anyone had this same problem, or can anyone suggest what might be the issue? Thanks Arj

-

Hi! I am new to this forum. I have searched extensively for LabVIEW Real-Time version 8.0 without any success. I am writing in case anyone has a link, zip, rar, iso or any other format of this version. It is for an old PXI. Thank you very much in advance!

-

Hello, I would like to know whether it is possible to launch an executable from a VI, and whether it can be launched as an administrator. Alternatively, is it possible for VIPM to launch my VI as an administrator after a package is installed? Thank you for your answer.

-

Hello, I'm trying to open an HTML file in Google Chrome through System Exec.vi under NI Linux; for this I had to install the "xdg-utils" package. From the Linux terminal the command "xdg-open [file path]" works fine. However, when I execute the code from LabVIEW the VI returns the following error (standard error indicator): /usr/bin/xdg-open: line 779: www-browser: command not found /usr/bin/xdg-open: line 779: links2: command not found /usr/bin/xdg-open: line 779: elinks: command not found /usr/bin/xdg-open: line 779: links: command not found /usr/bin/xdg-open: line 779: lynx: command not found /usr/bin/xdg-open: line 779: w3m: command not found xdg-open: no method available for opening '/home/lvuser/Desktop/Reports/20221128/FileHTML.html' After some research I understood that this happens because Google Chrome is not the default application for opening the file. So I executed the following commands in the terminal: - xdg-mime query default x-scheme-handle/http - xdg-mime query default x-scheme-handle/https - xdg-mime query default text/html But nothing changed: it still does not work from LabVIEW, although it works from the Linux terminal. I have attached the code (using LabVIEW 2020). Thanks for your feedback. code.vi

-

I am using MoveBlock via a Call Library Function node to copy a few bytes. The duration of this call, measured with two Tick Count timestamps in a flat sequence around the call, is about 40 ms. Since MoveBlock should be similar to memcpy in C, I thought it would also perform similarly. However, if it's really this slow, I cannot use it in a meaningful way. Has anyone else measured its duration, and can you confirm my findings?
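For comparison, a common way to time a very short operation is to repeat it many times between two high-resolution timestamps and divide, since a single call can be far shorter than a millisecond-resolution tick counter can resolve. The sketch below does this in Python with `ctypes.memmove` standing in for a memcpy-style copy. It is not a measurement of LabVIEW's MoveBlock, and the function name `time_small_copy` and its defaults are assumptions made for this example.

```python
import ctypes
import time


def time_small_copy(n_bytes=16, iterations=100_000):
    """Copy n_bytes repeatedly and return (copied data, seconds per call)."""
    src = ctypes.create_string_buffer(bytes(range(n_bytes)), n_bytes)
    dst = ctypes.create_string_buffer(n_bytes)

    start = time.perf_counter()
    for _ in range(iterations):
        ctypes.memmove(dst, src, n_bytes)  # memcpy-like block copy
    elapsed = time.perf_counter() - start

    # Averaging over many calls hides timer granularity and one-off costs.
    return dst.raw, elapsed / iterations


if __name__ == "__main__":
    copied, per_call = time_small_copy()
    print(f"copied {len(copied)} bytes, about {per_call * 1e9:.0f} ns per call")
```

The per-call figure includes Python's own loop and call overhead, so it is only an upper bound on the copy itself; the same averaging approach can be applied around the Call Library Function node in a loop.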

- 5 replies

Tagged with: labview, memory management (and 1 more)

-

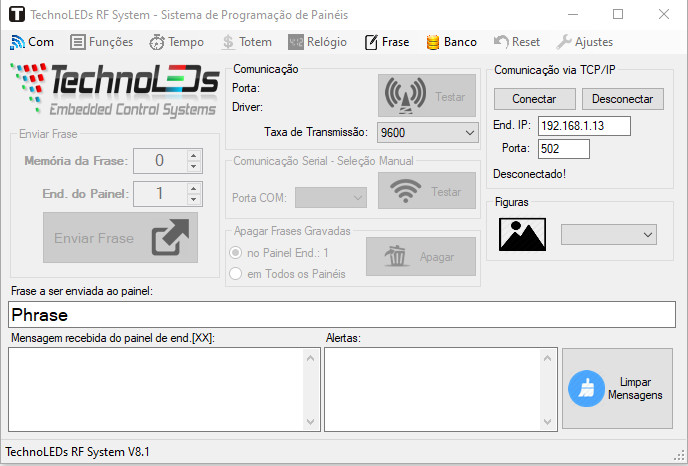

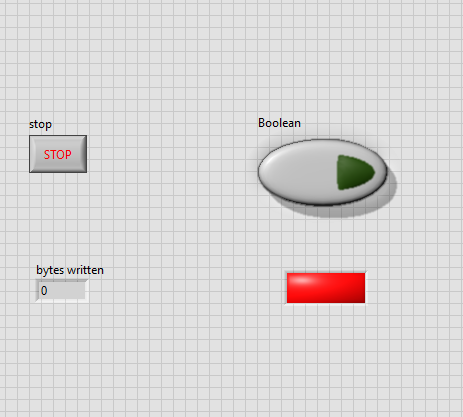

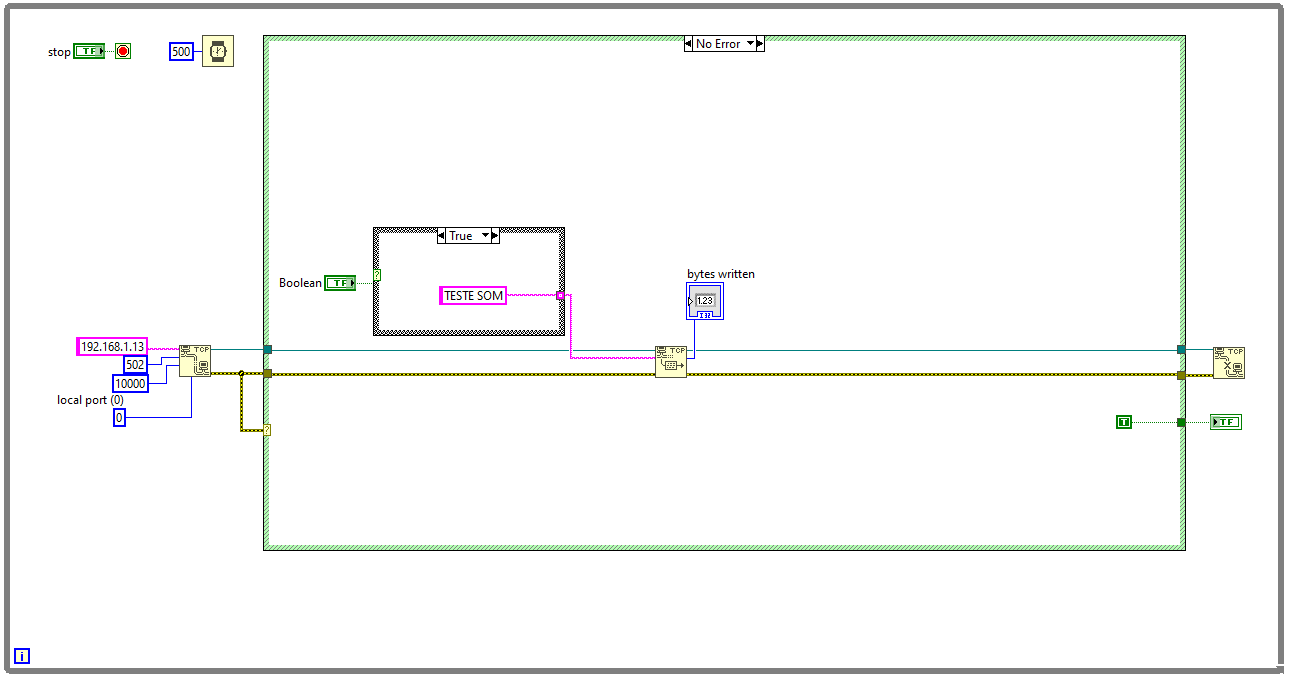

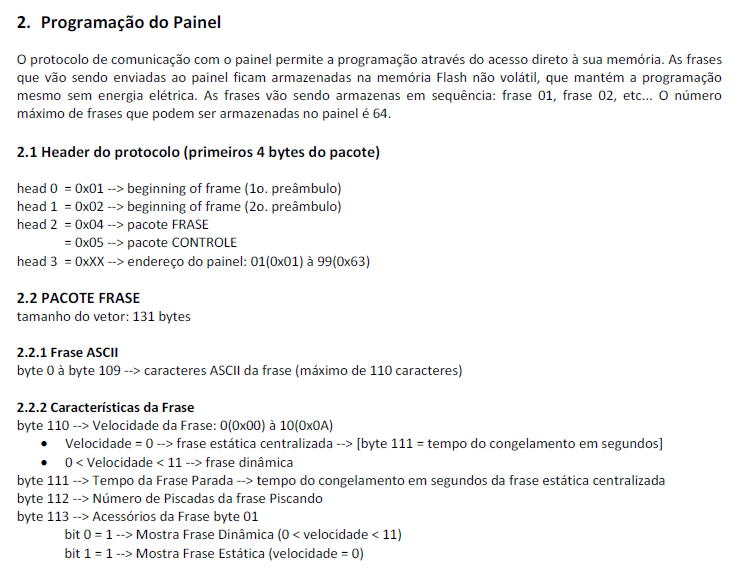

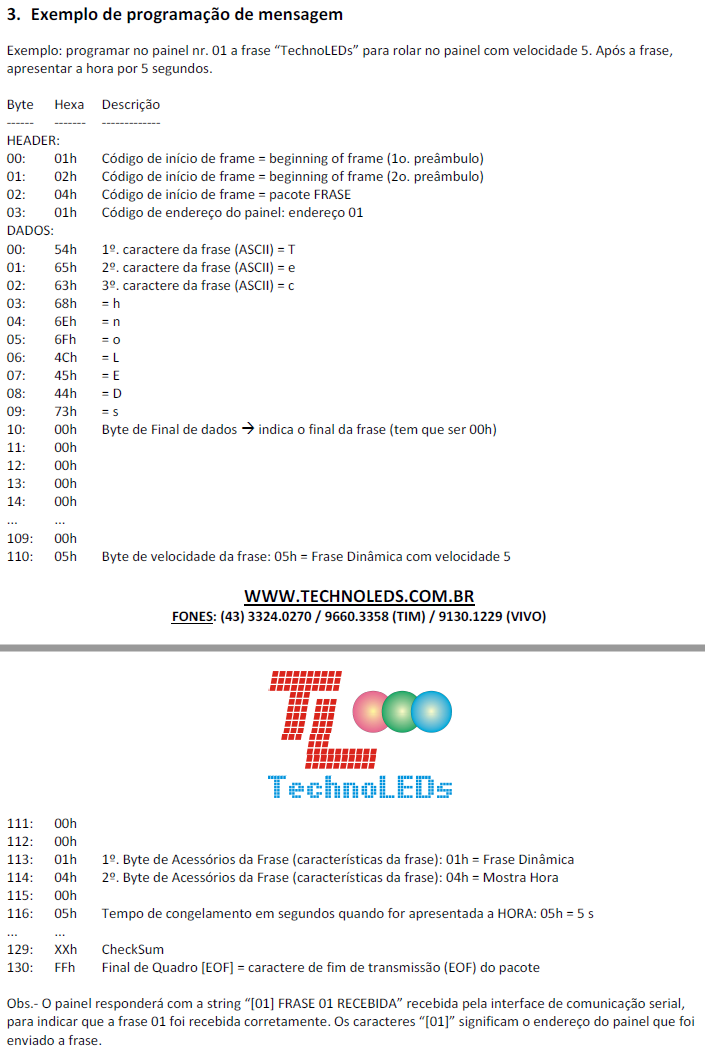

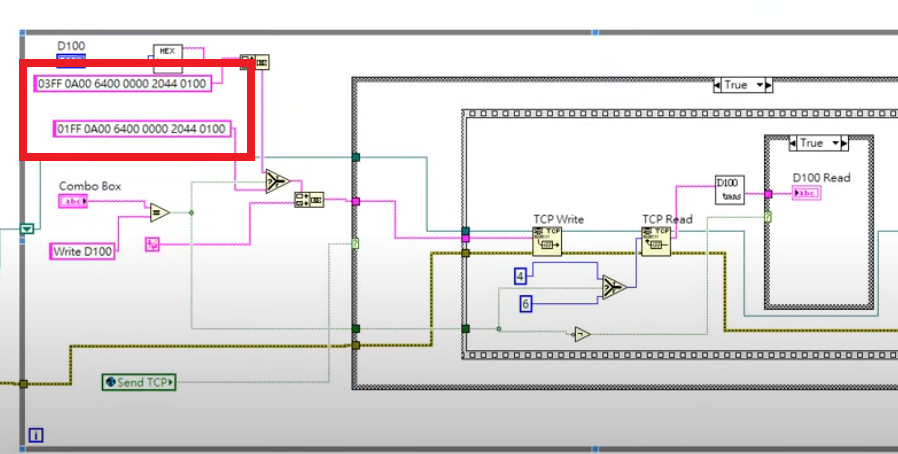

Hi everyone. I started a new VI in LabVIEW 2021 to communicate over TCP/IP with an LED display, similar to a billboard, as shown in the image below. I can communicate over TCP/IP through the manufacturer's software, but I don't know what packet I need to send (the full packet). The display is simple to command with their software (above) or over Bluetooth from a cell phone, but to communicate over TCP/IP they installed a converter, the USR-TCP232-T2 module, as shown in the image below. The image below shows my simple LabVIEW program for commanding the LED display, but the main question is: what kind of packet do I need to send to show a message or change the display's configuration? Attached is the datasheet the manufacturer sent us about the LED display and the packet to send, but I didn't understand how to do it 😕 It's my first time working with TCP/IP in LabVIEW. manual progração Serial TechnoLEDs padrão RS232-RS485-TCPIP v8.pdf Something like this:

-

The TDF team is proud to offer, as a free open-source download, the scikit-learn library adapted for LabVIEW. LabVIEW developers can now use our library for free as a set of simple and efficient tools for predictive data analysis, accessible to everybody and reusable in various contexts. It features various classification, regression and clustering algorithms, including support vector machines, random forests, gradient boosting, k-means and DBSCAN, and is designed to interoperate with the Python numerical and scientific libraries NumPy and SciPy from the famous scikit-learn Python library. Coming soon: our team is working on the « HAIBAL Project », a deep learning library written in native LabVIEW, fully compatible with CUDA and NI FPGA. But why deprive ourselves of the power of ALL FPGA boards? No reason, which is why we are working on our own compiler to make HAIBAL fully compatible with all Xilinx and Intel Altera FPGA boards. HAIBAL will offer more than 100 different layers, 22 initializers, 15 activation types, 7 optimizers and 17 losses. As we like the AI products from Facebook and Google, we will of course make HAIBAL natively fully compatible with PyTorch and Keras. Sources are available now on our GitHub for free: https://www.technologies-france.com/?page_id=487

- 16 replies

Tagged with: labview, machine learning (and 3 more)

-

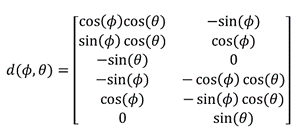

I am using the MUSIC algorithm and a vector antenna to determine the AoA of incoming signals in LabVIEW. My question is: how can I implement the steering vector for the vector antenna in LabVIEW? The mathematical equation of the vector antenna is in the attachment, where 𝜙 is the azimuth angle and 𝜃 is the elevation angle.

-

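Does anyone know how to implement application recovery? When the computer is suddenly shut down and then powered on again, I want the application to return to the state it was in before the power was lost.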

-

Hi, I figured people here may be more interested in this project. I'm building some cool graph extensions in an open source project, which add nice graphical overlays to XY graphs and waveform graphs in LabVIEW. I've got a GitHub page where you can grab the code, support the project or just have a look at some of the same screenshots. Everything updates live in the graph, so you really need to see it to understand how it all works. Here you go: https://github.com/unipsycho/Graph-Extensions-LabVIEW Please star or follow the project if you want to see this developed, and I'd appreciate any feedback or ideas to extend it further. The markers are very much IN development right now, so nowhere near finished, but the tools can still be seen working. THANKS!

-

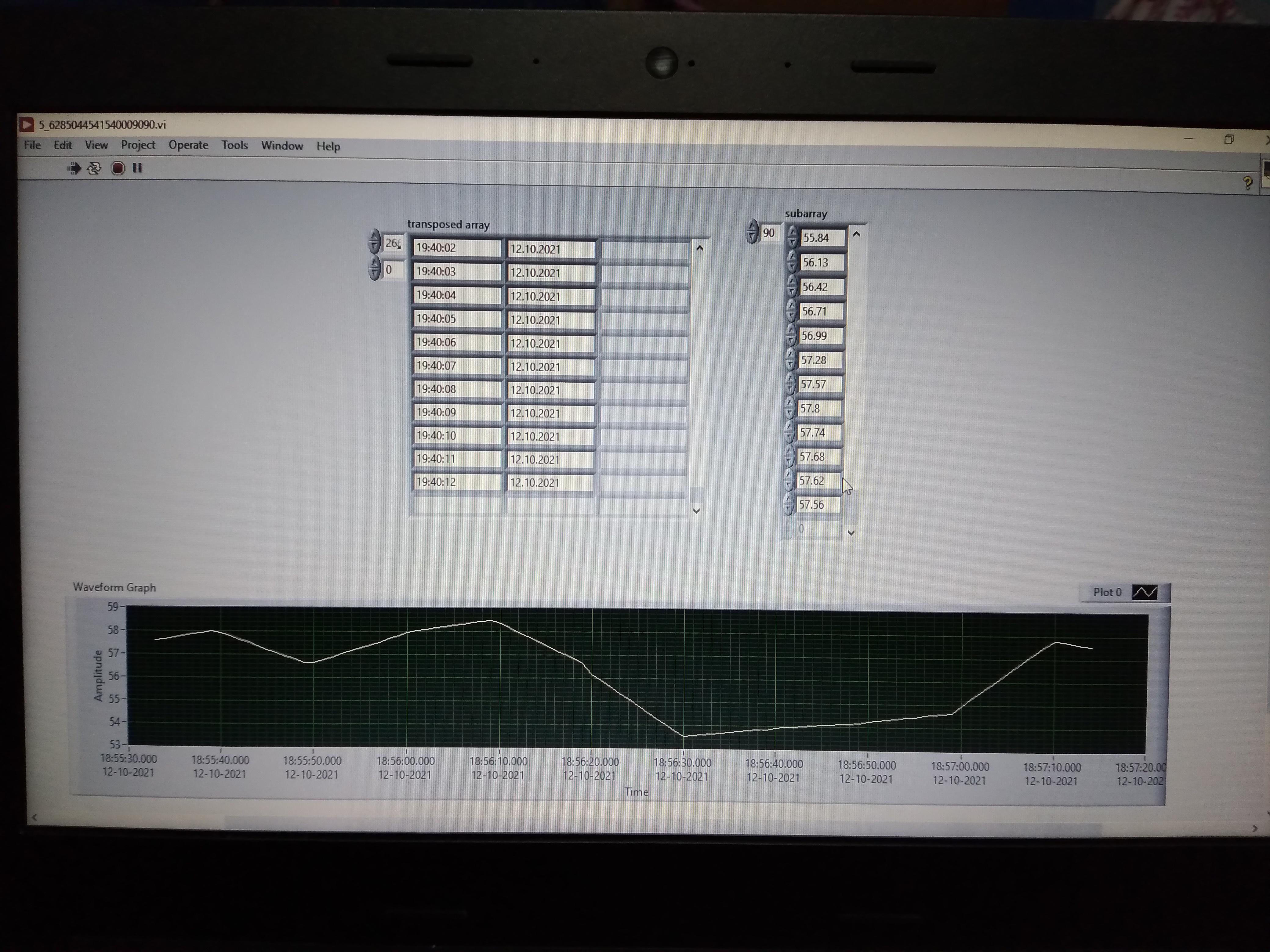

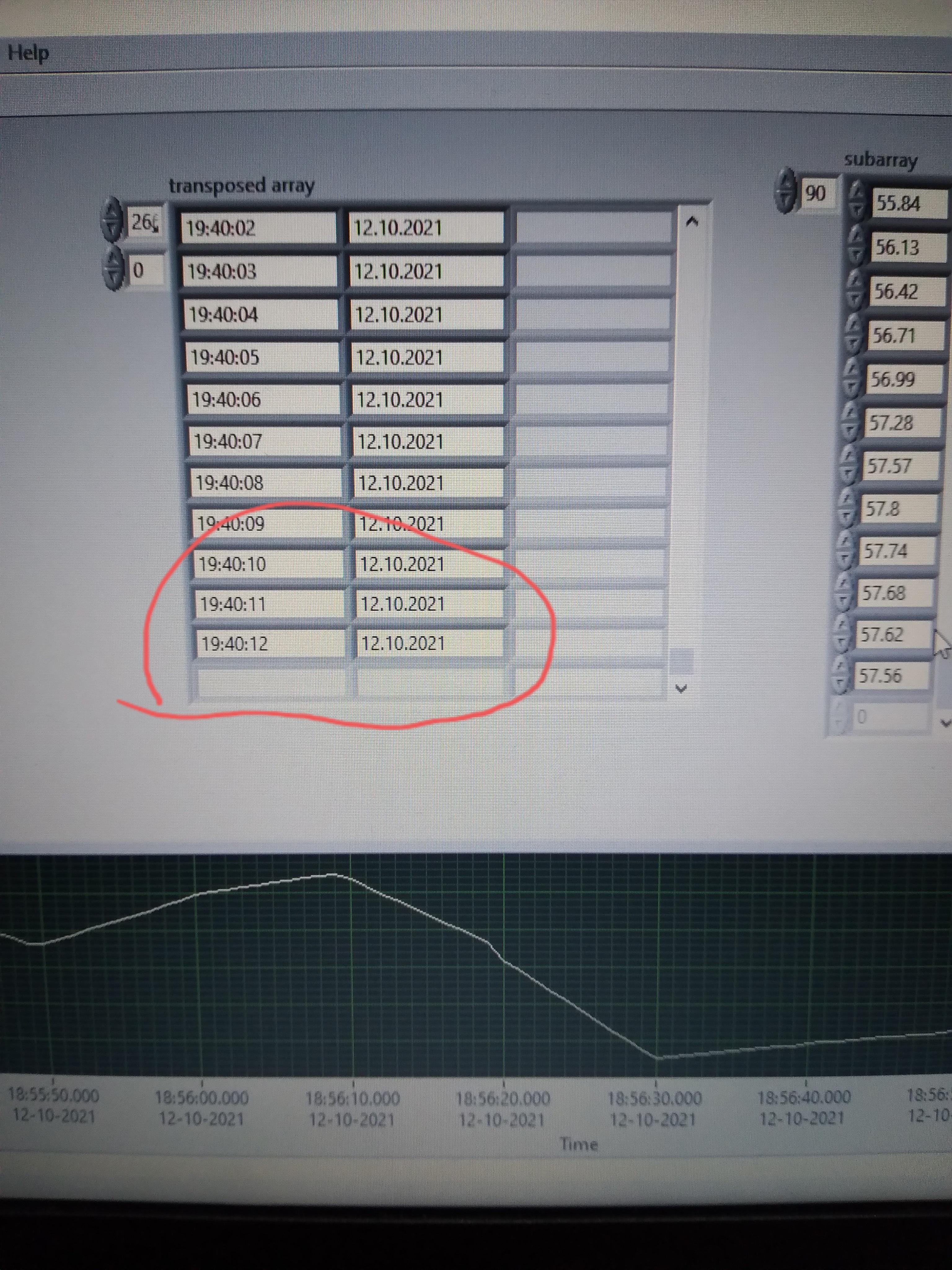

Hello everyone, how can I plot timestamps on the x-axis of a graph and on a numeric slider when the timestamps are given in a 2D array? The array is a 2D array of strings. I tried this, but it does not plot the full timestamp; can you help me sort out this problem? It does plot, but only a few timestamps are shown. How can I plot the full timestamp and date? I have attached my problem as a .jpg file and a .vi file for your reference. If anyone has a solution, please comment below. 5_6285044541540009090.vi Thanks and best regards.
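A typical approach to this kind of problem is to convert each timestamp string into a number of seconds before plotting, so the graph's x-axis receives numeric time values that it can format as date and time. The sketch below shows the conversion in Python rather than LabVIEW. It assumes each row is `[timestamp string, value string]`, that the timestamp format is `YYYY-MM-DD HH:MM:SS`, and that the times are UTC; none of this is stated in the post, and the function name `rows_to_xy` is hypothetical. Seconds are counted from 1904-01-01 UTC, the epoch LabVIEW timestamps use.

```python
from datetime import datetime, timezone

# LabVIEW timestamps count seconds from this moment (UTC).
LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)


def rows_to_xy(rows, fmt="%Y-%m-%d %H:%M:%S"):
    """Turn rows of [timestamp string, value string] into numeric x and y lists."""
    xs, ys = [], []
    for stamp, value in rows:
        # Assumption: the strings carry no time zone, so treat them as UTC.
        moment = datetime.strptime(stamp, fmt).replace(tzinfo=timezone.utc)
        xs.append((moment - LABVIEW_EPOCH).total_seconds())
        ys.append(float(value))
    return xs, ys


if __name__ == "__main__":
    table = [
        ["2023-01-05 10:00:00", "1.5"],
        ["2023-01-05 10:00:10", "2.0"],
    ]
    x, y = rows_to_xy(table)
    print(x, y)
```

With numeric x values like these, an XY graph whose x-axis display format is set to absolute time should be able to show the full date and time for every point rather than only a few labels.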