Posts posted by codcoder

-

2 hours ago, dadreamer said:

I think Andrey won't mind if I repost this here.

Oh but I like that! 💗

Small things like that have always bothered me in LabVIEW, and fixing them is a way for NI to show that they care. Knowing that the big company I've hinged my career on cares matters to me.

-

On 3/22/2023 at 1:58 PM, Antoine Chalons said:

Switching a large project to a more recent version of LabVIEW is quite risky.

(...)

Highly risky.

But this isn't LabVIEW-specific. There are plenty of situations in corporate environments where you just don't upgrade software to the latest version every time. It's simply too risky.

-

So it's settled then? NI will become a subdivision of Emerson?

-

Thanks for sharing!

I guess I'm just trying to stay positive. Every time the future of LabVIEW is brought up here, things get gloomy so fast!

And I'm trying to work out what is actually true and what is nothing but mindset.

It's ironic, though: in recent months there has been so much hype around AI being used for programming, especially Google's AlphaCode ranking in the top 54% in competitive programming (https://www.deepmind.com/blog/competitive-programming-with-alphacode), writing code from natural-language input. So we're heading towards a future where classic "coding" could quickly become obsolete, with the mundane tasks left to the AI. And yet there is already a tool that could help you with that, a tool that has been around for 30 years: a graphical programming tool. So how, how, could LabVIEW not fit into this future?

-

32 minutes ago, ShaunR said:

They lost Test and Measurement to Python a while ago, arguably the mainstay for LabVIEW.

Yes, of course. I can't argue with that (and since English isn't my first language and all my experience comes from my small part of the world, it's hard for me to argue in general 😁).

We don't use Python where I work. We mainly use TestStand to call C-based drivers that communicate with the instruments. That could have been done in LabVIEW or Python as well, but I assume the person assigned to it knew C.

But for other tasks we use LabVIEW FPGA. It's really useful, as it lets us incorporate more custom functionality within a COTS-based architecture. We also use "normal" LabVIEW where we need a GUI (it's still very powerful at that, and GUIs are easy to build). And in a few cases we even use LabVIEW RT where we need that capability. In none of those cases do we plan to throw NI/LabVIEW away. Their approach is the entire reason we use them!

I don't really know where I'm heading with this. Maybe something like: if you're used to LabVIEW as a general-purpose software tool, then yes, maybe Python is the better choice these days. Maybe, maybe, "the war is lost". But that shouldn't mean LabVIEW development is stagnating or dying. It's just that the areas where NI excels aren't as big and thriving as others, i.e. hardware vs. software.

-

Idk, why should the failure of NXG mark the failure of NI?

Aren't there other examples of attempts to reinvent the wheel, discovering that it doesn't work, scrapping it and then continuing to build on what you've got?

I can think of XHTML, which the W3C tried to push as a replacement for HTML, but when that failed, HTML5 became the accepted standard (based on HTML 4.01, while XHTML was more XML-based). Maybe the comparison isn't that apt, but maybe a little.

And also, maybe I work at a backwater, slow-moving company, but for us, for example, using LabVIEW FPGA and the FPGA modules for PXI is something of a "game changer" in our test equipment. And we are still developing new products and new systems based on NI tech.

The major problem for me as an individual, of course, is that the engineering world in general has moved a lot from hardware to software in the last ten years. And working with hardware, whether or not you specialize in the NI ecosystem, gives you fewer opportunities. There are hyped areas like app start-ups, fintech, AI, machine learning (and what not), and if you want to work in those areas, then sure, LabVIEW isn't applicable. But it never has been, and that shouldn't mean it can't thrive in the world it was originally conceived for.

-

Are you asking about taking the exam at a physical location or online?

I've taken both the CLD and the CLD-R online and I can recommend it; it worked fairly well. And the CLAD is still just multiple-choice questions, right?

BUT regarding the online exam, NI is apparently moving to a new provider, so you can't take it right now:

-

On 2/20/2023 at 5:22 PM, Mark Moser said:

Sorry if this is rambly I rarely have the opportunity to talk to other career LabVIEW developers. Thanks for your input!

Out of curiosity, in which country do you work/live?

-

The short answer about arrays in LabVIEW is that they cannot contain mixed data types.

There is a discussion here about using variants as a workaround (https://forums.ni.com/t5/LabVIEW/Creating-an-array-with-different-data-types/td-p/731637), but unless someone beats me to it, I'll need to get back to you on how that translates to your JSON use case.
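The variant workaround means every array element has the same type (variant), while each variant carries its own value and type information. As a rough text-language analogue, here is a minimal Python sketch, assuming the JSON arrives as a string; the `Variant` class and both function names are hypothetical and not part of any LabVIEW or NI API.

```python
import json
from dataclasses import dataclass
from typing import Any, List


@dataclass(frozen=True)
class Variant:
    """Hypothetical analogue of a LabVIEW variant: a value tagged with its type name."""
    type_name: str
    value: Any


def to_variant_array(json_text: str) -> List[Variant]:
    """Wrap each element of a (possibly mixed) JSON array so the array has one element type."""
    items = json.loads(json_text)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array")
    return [Variant(type(item).__name__, item) for item in items]


def variant_to_data(v: Variant, expected: type) -> Any:
    """Rough analogue of Variant To Data. This sketch requires an exact type match;
    the real LabVIEW function can be more lenient."""
    if type(v.value) is not expected:
        raise TypeError(f"variant holds {v.type_name}, not {expected.__name__}")
    return v.value


# Usage: a mixed JSON array becomes a homogeneous array of Variant.
arr = to_variant_array('[1, "two", 3.0, true]')
print([v.type_name for v in arr])  # ['int', 'str', 'float', 'bool']
print(variant_to_data(arr[1], str))  # two
```

The consumer still has to know (or check) which type to expect at each position, just as it would when converting variants back to data in LabVIEW.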

-

Hi,

So I have a question about the inner workings of the host-to-target FIFO in a setup with a Windows PC and a PXIe-7820R (in case the specific hardware matters). It isn't really so much a question as me trying to understand something.

My setup: I transfer data from the host to the target (the 7820 FPGA module). At first I simply configured my FIFO to use U8 as the data type and read one element at a time on the FPGA target. It worked, but when I increased the amount of data I ran into a performance issue.

In order to increase the throughput I both increased the width of the FIFO, packing four bytes into one U32, and also reconfigured the target to read two elements at a time.
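For readers less familiar with the packing step: on the host, bytes are grouped four at a time into one U32 before being written to the FIFO, and the FPGA splits each U32 back into four U8s. A minimal Python sketch of that round trip, assuming the first byte goes into the most significant position (the FPGA-side Split Number logic must use the same order) and that a short final group is zero-padded; both choices are assumptions, not details from the post.

```python
from typing import List


def pack_u8_to_u32(data: bytes) -> List[int]:
    """Group bytes four at a time into U32 words, first byte most significant.
    A short final group is zero-padded, so the receiver must know the real length."""
    padded = data + bytes(-len(data) % 4)
    return [int.from_bytes(padded[i:i + 4], "big") for i in range(0, len(padded), 4)]


def unpack_u32_to_u8(words: List[int]) -> bytes:
    """Inverse of pack_u8_to_u32: split each U32 back into four bytes."""
    return b"".join(w.to_bytes(4, "big") for w in words)


words = pack_u8_to_u32(bytes([0x01, 0x02, 0x03, 0x04, 0xAA]))
print([hex(w) for w in words])  # ['0x1020304', '0xaa000000']
```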

This worked, so there really isn't any issue here that needs to be resolved.

But afterwards a thought occurred to me: would I have achieved the same result if I had kept the width at U8 but read eight elements at a time on the FPGA? Since 4 × 2 and 1 × 8 both give 8 bytes per read, would I have achieved the same throughput? Or is it better to read fewer but wider integers (and then split them into U8s)?
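The arithmetic behind the question, as a small Python sketch: per FPGA-side read, both configurations deliver the same payload. The loop rate is a hypothetical parameter, not a figure for the PXIe-7820R, and the sketch deliberately says nothing about how efficiently the DMA engine and the bus move 8-bit versus 32-bit elements, which is what the question is really about.

```python
def bytes_per_read(element_bits: int, elements_per_read: int) -> int:
    """Payload bytes the FPGA pulls from the FIFO in one read."""
    if element_bits % 8:
        raise ValueError("element width must be a whole number of bytes")
    return element_bits // 8 * elements_per_read


def fpga_side_rate(element_bits: int, elements_per_read: int, reads_per_second: float) -> float:
    """FPGA-side consumption in bytes/s, assuming one read per loop iteration
    and a FIFO that never runs empty."""
    return bytes_per_read(element_bits, elements_per_read) * reads_per_second


# U32 x 2 elements vs. U8 x 8 elements per read
for bits, n in [(32, 2), (8, 8)]:
    print(f"U{bits} x {n}: {bytes_per_read(bits, n)} bytes per read")
```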

I've read NI's white paper but it doesn't cover this specific subject.

Thanks for any thoughts given on the topic! 😊

-

On 12/19/2022 at 9:07 PM, LogMAN said:

Attempting to execute community-scoped VIs results in a runtime error. Here is an example using Open VI Reference.

(...)

The same error should appear in TestStand (otherwise it's a bug).

I can confirm that I was unable to execute the community scoped VI inside the packed library from a TestStand sequence.

I didn't fill my VI with anything special, just a dialog box. TestStand reported an error that it was unable to load the VI. Can't remember the exact phrasing, something general, but it was unrelated to the scope of the VI.

So yes, it's good that the community-scoped VI at least can't be executed. But it's still bad UX that it is accessible.

-

Thanks for your answer!

So the hiding of community-scoped VIs in LabVIEW is cosmetic only?

I guess it makes sense that a VI that must be accessible from something outside the library, whether that is the end user through LabVIEW/TestStand or another library, will be treated differently.

But I still think it's bad UX that TestStand doesn't hide the community-scoped VIs like LabVIEW does.

-

Hi,

This is actually a re-post of a message I posted in the official forum, but since I haven't received any answer there, I'm going to try my luck here as well.

So I am having an issue with the community access scope of a VI inside a packed library (hence the title...) and I can't figure out if I have actually stumbled upon something noteworthy or if I simply fail to understand what the community access scope implies.

Let me exemplify:

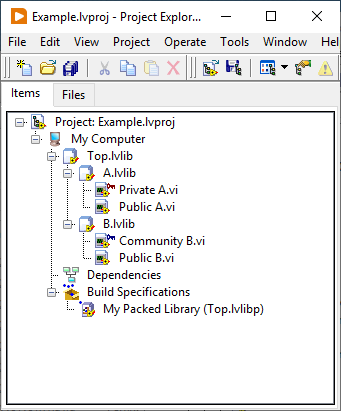

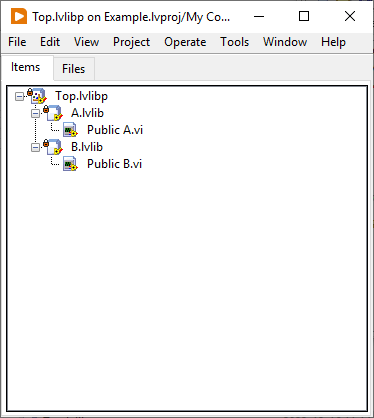

Image 1

I create a library with two sub libraries. I set two of the vi's to public, one to private and one to community access scope. And I create a build specification for a packed library. This is of course an example but it resembles my actual library structure.

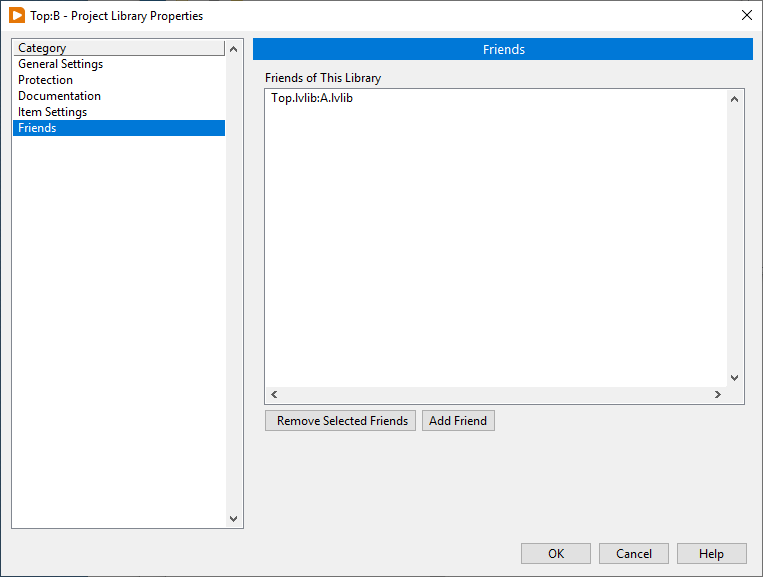

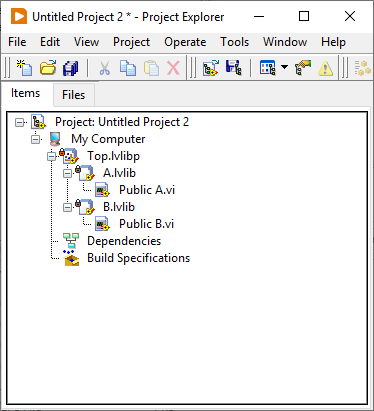

Image 2

I also let library A be a friend of B (although not doing this doesn't seem to affect anything). In practice I want library A to access certain VI's inside library B but they should not be visible to the outside world.

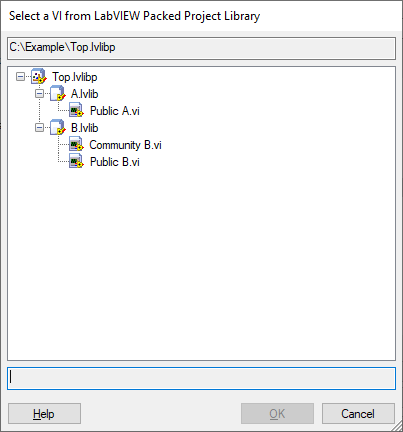

Image 3 and 4

If I now make a build of the packed library and open that build either stand alone or load it inside a new LabVIEW project the packed library behaves as I expect. Only the public vi's are accessible from the outside world but the private and community are not. And, to be clear, this is what I want to achieve.

Image 5

Now this is the strange part: if I load this packed library into TestStand, and open it to select a VI, the public VI's are accessible and the private is not (as expected) but also the community VI is accessible. This is not the expected behaviour, atleast not according to my expectations.

So to all of you who know more than me: Is this expected behaviour? Is there something about the community access scope I'm not understanding? Is there some setting I should or should not activate? Or have I actually stumbled upon a bug?
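To make the expected behaviour explicit, here is a deliberately simplified Python model of the scope rules as I understand them from the LabVIEW help: public items are callable from anywhere, private items only from inside the owning library, and community items from inside the owning library or from a friend. It ignores nested-library ownership and friend VIs (only friend libraries are modelled), and all names are hypothetical. Note that it models who may call a VI; it says nothing about which VIs a tool such as TestStand chooses to list, which is where the surprise is.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Library:
    name: str
    friends: Set[str] = field(default_factory=set)  # names of friend libraries


def can_call(caller_library: Optional[str], owner: Library, scope: str) -> bool:
    """Simplified access check for a VI owned by `owner` with the given scope.

    caller_library is None when the caller is outside any library
    (for example a TestStand step or a top-level VI).
    """
    if scope == "public":
        return True
    if scope == "private":
        return caller_library == owner.name
    if scope == "community":
        return caller_library == owner.name or caller_library in owner.friends
    raise ValueError(f"unknown scope: {scope!r}")


# The setup from the post: library A is a friend of library B.
lib_b = Library("B", friends={"A"})
print(can_call("A", lib_b, "community"))  # True
print(can_call(None, lib_b, "community"))  # False
```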

BR, CC

-

On 11/14/2022 at 9:35 AM, Rolf Kalbermatter said:

I would attack it differently. Send that reboot command to the application itself, let it clean everything up and then have it reboot itself or even the entire machine.

I was afraid the answer would be something along the lines of "just do it properly".

The thing is, the application does have that feature. It's just that the option to command a reboot at system level is so appealing, since it works even when the application is non-responsive.

-

Hi,

We have a PXI-system with a PXIe-8821 controller running LabVIEW RT (PharLap). On this controller we have a deployed LabVIEW developed application. So all standard so far.

From time to time the application ends up in an erroneous state. Since the overall system is relatively complex and used as a development tool for another system, it is tedious to detect and debug each situation that leads to an error. So the current solution is to regularly reboot the controller. Both at a fixed time interval and when the controller is suspected to be in an erroneous state.

To achieve this remotely we send a reboot command by spoofing the HTTP POST command sent when clicking on the "restart button" in NI's web based configuration GUI. I was surprised that this worked, but it does, and it does so pretty well.

But there is an issue: we have an instrument that can get upset if the reference to it isn't closed properly. And since this system-level reboot shuts our application down abruptly, this has increasingly started to become a problem.

So I have two questions:

- Is there a way to detect that a system reboot is imminent and allowing the LabVIEW application to act on it? Closing down references and such.

- Is there another way to remotely reboot a controller? I am aware of the built in system vi's but those are more about letting the controller reboot itself. What I'm looking for is a method to remotely force a reboot.

Any input is most welcome!

-

Done!

-

A heads-up though: not all PXI modules are fully supported. We replaced a PXI controller running Phar Lap with one running Linux, and there were some issues.

The LabVIEW application transferred smoothly, if I remember correctly (this was last year, around August), but we never got one of the modules to work fully. The 6683H timing module, I think. And NI was aware of this.

We ended up keeping the Phar Lap controller in this application.

-

So, on a lighter note: I was browsing the NI website and discovered that at least one PXI module has been updated to match the (heavily discussed) new graphical profile: https://www.ni.com/en-us/support/model.pxie-8301.html

Cool grey instead of boring beige.

Still waiting for a green chassis though.

Will NI be offering compatibility stickers to let older modules and cover plates match the new graphical profile? Will they update older modules? Or will we have to live with mismatched colour combinations for decades to come? 😜

(posting this in the lounge section instead of hardware for obvious reasons)

-

On 3/5/2021 at 7:24 PM, Gribo said:

You can reverse engineer the protocol with NI-trace. It is quite trivial.

Do you have any examples of this you can share? Because I tried using NI-Trace (and perhaps it's my lack of knowledge about the tool), but to my understanding, what was logged was nothing but the "high-level" writes to property nodes and subVIs that I made in my top-level VIs. There was no information about the underlying USB communication.

-

For posterity: I've been in contact with NI Technical Support who confirms that the USB-8451 isn't designed to work with PXI Real Time controllers.

-

Thanks for the quick answers.

I was afraid changing hardware would be the answer. Doing that isn't easy either, as the hardware configuration is pretty much fixed at the moment.

My current "temporary" solution is to use a Windows PC connected directly to the USB device, running a looped LabVIEW VI that writes to the device over I2C every second or so. This works.

I will look into other neater solutions as soon as I get the time. I promise to post an update here if I figure out anything useful.

-

Hi,

I have a problem here. I hope someone can help me find a solution.

The task at-hand is to use an NI USB-8451 to communicate with a device over I2C. This would have been trivial if it wasn’t for the fact that the USB-8451 is connected to a PXI controller running LabVIEW Real-Time OS (Phar Lap ETS) and not a Windows PC.

I didn't choose the hardware combination myself, and the person who did just assumed they would work together. And so did I… I mean, it's NI's ecosystem! Just download the NI-845x Driver Software from NI's webpage, install it on my PC and then install whatever software is needed on the controller through MAX. Easy, right?

But no. It wasn't that easy.

So I wonder:

(1) Has anyone had any success controlling the NI USB-8451 from anything but a Windows PC? And if so… how?? The PXI system finds the USB-8451 and lists it as a USB VISA raw device, but the supplied VIs/drivers cannot be executed on the controller.

(2) Since what I want to do is actually very little (just writing a couple of bytes once to the I2C device), I have started toying with the idea of somehow recording the USB traffic from the Windows laptop via Wireshark and then replaying it on the PXI system… has anyone had any success doing something like that?

(3) And finally: if it is impossible to get the USB-8451 to work with my PXI system, is there some other I2C hardware that is known to work? I'm getting the impression that NI only has this USB device, apart from implementing the I2C protocol on a dedicated FPGA, and that seems like a bit much.

Sorry for the long post. Getting a little desperate here. Deadline approaching.

I'm running LabVIEW 2019 SP1 by the way and the controller is a PXIe-8821 if that makes any difference.

BR, E

-

Couldn't agree more.

I also believe NI really needs to push LabVIEW more aggressively to the maker community. This is a group of people who adore things that are "free", "open" and "lite", and today LabVIEW simply doesn't make the cut. Of course the coolness factor will still be an issue (a night/dark mode could probably help?), but surely there must be a way to position LabVIEW as a great tool that helps creative geniuses focus less on grit and more on, well, actually creating stuff.

I work in the aerospace and defense industry. With hardware. But fewer and fewer of us do. The majority of engineers now are software engineers who either work purely with software or come from that background. Explaining to them why NI's offering makes sense is extremely difficult.

Of course, for now, what we do is relatively complicated and closely tied to the performance of the hardware, so using NI's locked-in ecosystem saves us time and money. But NI's pyramid is crumbling from the base. At other companies I've seen well-made LabVIEW applications ripped out and replaced with "anything" written in Python. Of course it didn't work any better (quite the opposite), but it was Python and not LabVIEW. And that single argument was strong enough.

-

LabVIEW 2023Q1 experience (in LabVIEW General)

One could argue that LabVIEW has reached such a state of complete perfection that all is left to do is to sort out the kinks and that those minuscule fixes are what there is left to discuss! 😄