Posts: 3,183

Days Won: 205
Everything posted by Aristos Queue

-

Put a Decoration on a Controls Palette

Aristos Queue replied to Stobber's topic in Development Environment (IDE)

What you're seeing is just the behavior of control VIs, and, since control VIs are VIs, it doesn't matter if you use a regular VI. Since it isn't a typedef, LabVIEW just says, "Well, that means copy the contents over." -

Bunch of suggestions for LVOOP

Aristos Queue replied to Norbert_B's topic in Object-Oriented Programming

Yes, but you share them in a hospital/clean room setting. Most users don't have that kind of research facility. :-) Yes! And *if* the class-creator programmer designed their class to be a reference class, then he/she will write this VI. The entire class must be designed with that thought in mind. If the class-creator programmer designed their class as a by value class which is then stored in a DVR, then the locking must be specified by the class-user programmer, i.e., by scoping the usage with the IPE. This is exactly the situation as it exists today. This is why it is unlikely to change. -

Bunch of suggestions for LVOOP

Aristos Queue replied to Norbert_B's topic in Object-Oriented Programming

I would *not* expect that behavior to match the majority of use cases, and I've actually been looking at classes that users submit to me for which ones would work like that. Take the very simple case of the data accessor VIs -- when folks lobbied very hard for the Property node to support DVRs of classes, they insisted that the single lock should be held for the duration of all the properties being set within the node. When I look at more complex setter functions -- ones that require parameters and thus cannot be used within the property node -- they also tend to need to hold the lock for a block of operations. Also, most classes written as by-value classes will have get and set functions but no modify function, and once people start having DVRs, they expect to read a value, increment or otherwise modify that value, and then set it back. They do not necessarily think about the need to drop an IPE node to hold the lock across the function set. In point of fact, it appears to me to be far MORE common that if you are calling multiple methods in a row, that the lock needs to be held across those methods. The problem is that there might be computation operations inserted between those nodes, so LV could never be programmed to interpret the VI and figure out where the IPEs need to go, short of having an artificially intelligent compiler. It gets worse if those methods are in subVIs -- I'll leave it to readers to play with all the ways that the locks cannot be intuited. When a class is written as a by value class and is then stored in a DVR, it is necessary for the class to transition across a barrier into the by value world, be modified in whatever operations it has, and then be restored to the DVR. There's no way the compiler can *ever* (short of AI) do this for you. 
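To make the read/modify/set point concrete, here is a rough Python analogy (LabVIEW is graphical, so this is only a sketch; `Counter`, `unsafe_increment`, `safe_increment` and `run` are hypothetical names, and the `with counter.lock:` block stands in for the IPE structure). Each accessor takes the lock on its own, but only the caller knows that the whole block needs to be atomic.

```python
import threading


class Counter:
    """By-value data behind a shared reference (a stand-in for a class in a DVR)."""

    def __init__(self):
        # Re-entrant so a caller holding the lock can still use get/set.
        self.lock = threading.RLock()
        self._value = 0

    def get(self):
        with self.lock:
            return self._value

    def set(self, value):
        with self.lock:
            self._value = value


def unsafe_increment(counter):
    # Each accessor locks by itself, but the lock is dropped between the
    # read and the write, so a parallel update can be lost.
    counter.set(counter.get() + 1)


def safe_increment(counter):
    # The caller scopes the lock across the whole read-modify-write block.
    with counter.lock:
        counter.set(counter.get() + 1)


def run(increment, threads=4, per_thread=10_000):
    counter = Counter()

    def worker():
        for _ in range(per_thread):
            increment(counter)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return counter.get()
```

`run(safe_increment)` always reaches the full count. `run(unsafe_increment)` may come up short, and nothing in `get` or `set` can fix that, because the information about where the block starts and ends lives only in the caller.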
When a class is written as a by reference class, then the methods are added by the original developer to support all the modification operations that are necessary -- with the then limitation that the user of the class cannot compensate for any failures on the part of the author without getting some Semaphore refnums involved. (If you don't understand this, try to write an Enqueue Multiple Items VI for the LabVIEW queues that guarantees that if two Enqueue Multiple calls are made in parallel that all the items from each call are added to the queue in a single block. You'll find you have to package the queue refnum with a semaphore refnum AND you have to prevent the queue refnum from ever being exposed on its own for a plain Enqueue Element call -- you have to wrap every single existing primitive so that you acquire the Semaphore first.)

Unfortunately, I do not believe this is a shortcoming of LabVIEW. I believe this is a fundamental limitation of any parallel by-reference architecture in any language. The hoops that you have to jump through to make references parallel safe in C# or C++ or Java are nothing short of awe inspiring. And most programmers DO NOT JUMP THROUGH THOSE HOOPS. They'll tell me it is so easy (i.e. Java programmers who say "you just put the synchronized keyword on the class!") and then show me a chunk of code that has race condition bugs systemically. The DVRs aren't the problem. By reference programming is the problem. DVRs are the best solution out there for that problem that I know of. The "giant amount of work" that everyone complains about when using them is only giant when compared to the amount of work needed to do by reference programming **badly**. Doing by ref programming right is even more work than DVRs.

Stop using references to data. In a parallel environment it is a bad idea. Sharing data between two processes is like sharing hypodermic needles between two addicts -- dangerous and likely to spread disease.
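The Enqueue Multiple exercise can be sketched in Python under the same caveat as any text-language analogy of G code. `BlockQueue` and `demo` are hypothetical names. The `deque` plays the raw queue refnum, the `Lock` plays the semaphore, and the key point is that the raw queue is never handed out on its own, so even the single-element enqueue has to go through the guard.

```python
import threading
from collections import deque


class BlockQueue:
    """Queue packaged with its guard; the raw deque is never exposed."""

    def __init__(self):
        self._items = deque()
        self._guard = threading.Lock()

    def enqueue(self, item):
        # Even the plain enqueue must take the guard, or it could land in
        # the middle of someone else's block.
        with self._guard:
            self._items.append(item)

    def enqueue_multiple(self, items):
        # The guard is held for the whole block, so the items stay contiguous.
        with self._guard:
            for item in items:
                self._items.append(item)

    def snapshot(self):
        with self._guard:
            return list(self._items)


def demo():
    queue = BlockQueue()
    block_a = [("a", i) for i in range(1000)]
    block_b = [("b", i) for i in range(1000)]
    threads = [
        threading.Thread(target=queue.enqueue_multiple, args=(block,))
        for block in (block_a, block_b)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return queue.snapshot()
```

Whichever call wins the guard goes first, but the two blocks never interleave.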
Yes, I got that you were passing that feedback. I was trying to answer your feedback by saying that, except for #3, nothing you were suggesting was a new idea. All were ideas that have been considered, generally at great length by multiple people and rejected because they are either logically impossible in LabVIEW (e.g. a mandatory constructor/destructor for a by value type) or would actually be detrimental to the status quo (e.g. overloading with same name). #3 is a good idea in one particular form, and I strongly agree with your wish that we had that kind of polymorphism. And, in case you didn't already know it, a mandatory constructor and destructor are *already possible* if you write your class as a by reference class (i.e. every public method is one that takes a DVR of the class as input and no public method takes the by value object directly). Some of your later comments made me think maybe you weren't aware of that fact.

Object Tracking on LABVIEW

Aristos Queue replied to Omer Tariq's topic in Machine Vision and Imaging

National Instruments sells the Vision toolkit that can do object recognition in a scene. http://sine.ni.com/nips/cds/view/p/lang/en/nid/209860 I'm not sure if that's sufficient for your needs or not -- you don't give a lot of detail of the type of tracking you're trying to do.

-

Bunch of suggestions for LVOOP

Aristos Queue replied to Norbert_B's topic in Object-Oriented Programming

1) I'd call it "Initialize.vi" instead of "constructor" for most classes. Save "constructor" for a by-reference class template. You could go ahead and create a scripting tool to create this VI for you. There's nothing special about this VI, and nothing needs to be added to the language to allow you to write one. See white paper for more discussion.

2) No for most classes. Yes for a by-reference class template.

3) There are about six features I can think of off the top of my head that have something to do with what you're asking for. The only one that I think is a good idea -- and is a very good idea -- is output covariance. http://en.wikipedia.org/wiki/Covariance_and_contravariance_%28computer_science%29

4) Check out this post: http://forums.ni.com/t5/LabVIEW/My-way-of-hiding-implementation-of-an-API/m-p/2416536/highlight/true#M746202 for 18+ different ways of coding reference type architectures, many of which are singletons. See if any of them pique your fancy. And your request makes no sense -- in a Singleton, there is one and only one instance. There's no need for inheritance dynamic dispatch on any public function because there's one and only one object. Put all your dynamic dispatch VIs in protected scope.

5) No. See white paper.
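For anyone unfamiliar with the term in item 3, output covariance means an override may declare a more specific output type than the method it overrides. A minimal Python illustration follows (hypothetical `Shape`/`Circle` classes; Python only checks these annotations through external type checkers, so this shows the idea rather than any LabVIEW behavior):

```python
class Shape:
    def scaled(self, factor: float) -> "Shape":
        raise NotImplementedError


class Circle(Shape):
    def __init__(self, radius: float):
        self.radius = radius

    # The override narrows the declared output from Shape to Circle.
    def scaled(self, factor: float) -> "Circle":
        return Circle(self.radius * factor)


def double_radius(circle: Circle) -> float:
    # Because scaled() is declared to return a Circle, the caller can use
    # .radius directly without downcasting the result from Shape.
    return circle.scaled(2.0).radius
```

The payoff is on the caller's side: code that knows it holds a `Circle` gets a `Circle` back and needs no cast.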

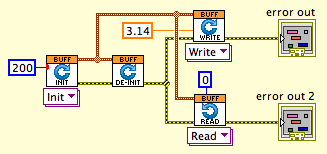

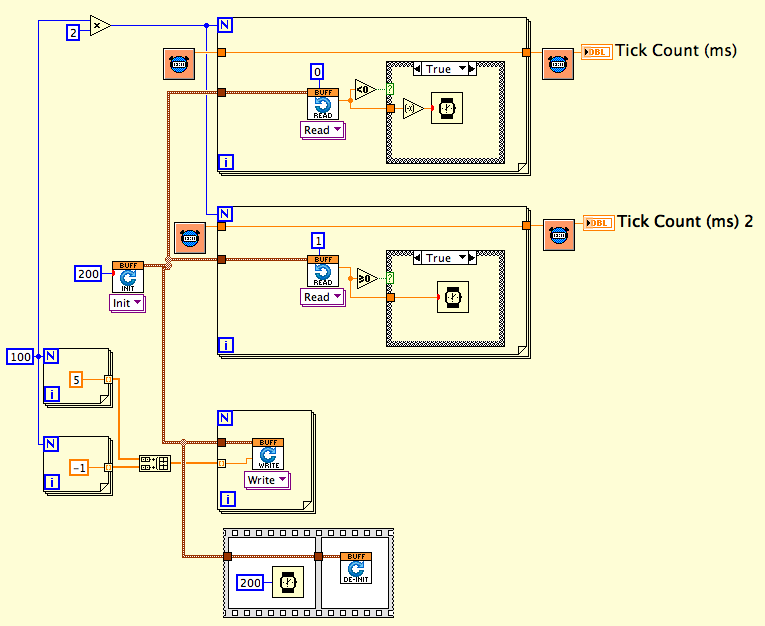

I cleaned up a lot of wiring and layout in Version 4 to make the code more accessible to others -- making wires easier to follow, that sort of thing. In this version, I put the class layer in to replace the raw pointer block. Circular_BufferV5_AQ.zip I'm done making modifications until we can figure out the CAS issue which I consider to be a showstopper to this framework making any further progress. Without a solution to that, there's the potential that this whole mechanism will have to be scrapped in favor of something very different (perhaps a library that implements exactly one disruptor and there can't be dynamic instantiation or some equally radical change). I'll talk to co-workers on Monday, though it might be later in the week before I get back to this.

-

Yes I have used it. No, there's no need for shift registers in the user's code. It is used in the Write VI. Without it, your code hangs when Write is called with a second pointer block. The Writers 2nd Call pointer block is needed *per pointer block*. It is not a First Call for the function itself. It records whether or not the newly initialized block has ever been written to. There are only two known solutions that I can find in any literature ... the refnum solution and the compare-and-set-atomic-instruction solution, both of which I described earlier. The refnum solution cannot be used in this case because of the locks it introduces. That leaves us with CAS and a pointer block that cannot ever be fully deallocated while the application is running. [EDIT] I should point out that the refnum solution is essentially equivalent to the DSPtrCheck coupled with a guarantee of no reallocation of an already-used refnum.

-

[Later] Version 3 is still using the First Call and the feedback nodes. This is causing your program to hang when you call Init a second time. Just throw a For Loop around your test program and wire a 2 to the N terminal. Run the VI. It won't finish. The First Call value and the Feedback Node value have to be part of the pointer block. End of story. Also, you crash if a pointer block that has never been initialized is passed in... need to check for NULL on every call. Test this by just hitting Run on your Read or your Write VI. LV goes away. You've got guards on DeInit ... need same guards on read/write. I fixed both the hang and the crash on never initialized. Found another crash scenario -- DeInit called twice on the same pointer block. I can't do anything about that or the crash caused by DeInit being called while Read or Write are still running unless/until we figure out how to generate a CAS instruction or some other equivalent atomic instruction. Circular_BufferV4_AQ.zip

-

Um... yes, they do. DeInit deallocates all of the pointer blocks allocated by Init. In the Version 2 implementation, Read uses Cursor and Array. I haven't looked at Version 3, but I would expect the number of allocations to increase (per our other discussions here). The Version 2 implementation will crash. It isn't crashing right now in the code I posted above because LV happily goes ahead and uses deallocated memory in those DLL calls and no other part of LV does an allocate during that window. As soon as you have a program where the program proceeds to allocate data for something else, the whole system falls down and goes boom. Yes, we need a CAS to allow DeInit to work.

-

Let's see... We have Read 0, Read 1, Writer, and Deinit all executing at the same time. Here's one possible execution order... Deinit sets the "NowShuttingDown" bit to high. Then Deinit does an atomic compare-and-swap for the WriteIsActive bit (in other words, "if WriteIsActive bit is false, set it to true, return whether or not the set succeeded"). It succeeds. Writer now tries to do an atomic compare-and-swap for the WriteIsActive, discovers the bit is already high and so returns an error -- it decides between "WriterAlreadyInUse" and "ReferenceIsStale" by checking the "NowShuttingDown" bit. Then Read1 does an atomic compare-and-swap on Read1IsActive. DeInit then tries to do the same and discovers the bit is already high, so it goes into a polling loop, waiting for the bit to go low. Read1 finishes its work and lowers the bit. Possibly this repeats several times because LV might be having a bad day and we might get around to several calls to Read1 before the timeslice comes down right to let the DeInit proceed (possible starvation case; unlikely since Writer has already been stopped, but it highlights that had this been the Writer that got ahead of DeInit, we might keep writing indefinitely waiting for the slices to work out). But let's say eventually DeInit proceeds and sets Read1IsActive high. The next Read1 that comes through errors out. Having now blocked all the readers and the writer, DeInit deallocates the buffer blocks and index blocks, but not the block of Boolean values. Any other attempts to read/write will check the Booleans, find that the ops are already in use and then check the NowShuttingDown bit to return the right error. (Note that they can't check NowShuttingDown at the outset because they do not set that bit, which means there'd be an open race condition and a reader might crash because DeInit would throw away its buffer while it is reading.)
The situation above is pretty standard ops control provided you know that the Boolean set will remain valid. If you're ok with leaving that allocation in play for as long as the application runs (without reusing it the next time Init gets called -- once it goes stale it has to stay stale or you risk contaminating pointers that should have errored out with the next iteration's buffers) then I think this will work.
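A rough Python sketch of that ordering, for anyone who wants to trace it. Python has no compare-and-swap instruction, so `AtomicFlag` fakes one with a lock purely as a stand-in; that emulation is exactly the piece we do not know how to get in LabVIEW. `SharedBuffer`, `ReaderAlreadyInUse` and the method names are hypothetical, and using a CAS on the shutdown bit so that a second `deinit` returns an error instead of spinning is my own addition to the description above.

```python
import threading
import time


class AtomicFlag:
    """Emulated atomic Boolean; the lock only stands in for a real CAS."""

    def __init__(self):
        self._value = False
        self._lock = threading.Lock()

    def compare_and_swap(self, expected, new):
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False

    def store(self, value):
        with self._lock:
            self._value = value

    def load(self):
        with self._lock:
            return self._value


class SharedBuffer:
    def __init__(self, size, n_readers):
        self.data = [None] * size
        # The flag block is never deallocated, even after deinit.
        self.now_shutting_down = AtomicFlag()
        self.write_is_active = AtomicFlag()
        self.read_is_active = [AtomicFlag() for _ in range(n_readers)]

    def _busy_error(self, in_use_name):
        # Checked only after the CAS failed, never at the outset.
        return "ReferenceIsStale" if self.now_shutting_down.load() else in_use_name

    def write(self, index, value):
        if not self.write_is_active.compare_and_swap(False, True):
            return self._busy_error("WriterAlreadyInUse")
        try:
            self.data[index % len(self.data)] = value
            return "ok"
        finally:
            self.write_is_active.store(False)

    def read(self, reader_id, index):
        flag = self.read_is_active[reader_id]
        if not flag.compare_and_swap(False, True):
            return self._busy_error("ReaderAlreadyInUse"), None
        try:
            return "ok", self.data[index % len(self.data)]
        finally:
            flag.store(False)

    def deinit(self):
        if not self.now_shutting_down.compare_and_swap(False, True):
            return "ReferenceIsStale"
        for flag in [self.write_is_active, *self.read_is_active]:
            # Poll until the op finishes, then claim its flag for good.
            while not flag.compare_and_swap(False, True):
                time.sleep(0)
        self.data = None  # free the buffers; the flags stay allocated
        return "ok"
```

After `deinit`, every flag is permanently high, so later reads and writes fail their CAS and report the stale reference without ever touching the freed buffer.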

-

You Init. Then you Uninit. Then you take that same already-uninitialized pointer block and wire it into a Read or a Write. How does the Read or Write know that the pointer block has been deallocated and it should return an error? Nope... there are no registered Booleans to check if the pointers have been deallocated. So to implement this solution, we would have to say that there's an Init but once allocated, the pointer at least to the Booleans needs to stay allocated until the program finishes running. Otherwise the first operation that tries to check the Booleans will crash. Are you ok with a "once allocated always allocated" approach? There's still the problem of setting those Booleans. We'll need a test-and-set atomic instruction for "reader is active" -- I don't know of any way to implement that with the current APIs that LabVIEW exposes.

-

A more complete rendition of what's in my head... You currently have a cluster of pointers. We can move those into a class. We can then make the class look like a reference type (because that's exactly what it is). That's good. And that's all we need to do IF we can solve the "references are not valid any more" problem. The ONLY way I know to do that is with some sort of scheme to check a list to see if the pointers are still valid coupled with a way to prevent a recently deallocated number from coming back into use. That's what LabVIEW's refnum scheme provides. Without such a scheme, a deallocated memory pointer comes right back into play -- often immediately because the allocation blocks are the same size, so those are given preference by memory allocation systems. Thus any scheme like this in my view has to add a refnum layer between it and the actual pointer blocks. The list of currently valid pointers is guarded with a mutex -- every read and write operation at its start locks the list, increments the op count if it is still on the list, releases the mutex, does its work, then acquires the mutex again to lower the op count. The delete acquires the mutex, sets a flag that says "no one can raise the op count again", then releases the mutex and waits for the opcount to hit zero then throws away the pointer block. That's my argument why we need a refnum layer. There might be a way to implement this without that layer, but I do not know what that would be.

PS: Even if we don't need a refnum layer and we find a way to do this with just the pointers stored in a class' private data, when the wire is cyan and single pixel, many people will still refer to it as a refnum (often myself included) because references are refnums in LabVIEW. The substitution is easy to make. Just sayin'. :-)

We offer you the option of crashing because if your code is well written, it is faster to execute than for us to guard against the crash. You're free to choose the high performance route. ;-)
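The mutex-guarded list described above could look roughly like this in Python. This is a sketch of the general idea only, not how LabVIEW's refnum tables are actually implemented; `RefnumTable` and its method names are hypothetical.

```python
import itertools
import threading


class RefnumTable:
    """Lookup layer between callers and the real pointer blocks."""

    def __init__(self):
        self._cond = threading.Condition()   # the mutex guarding the list
        self._next = itertools.count(1)      # a number is never handed out twice
        self._entries = {}

    def create(self, payload):
        with self._cond:
            refnum = next(self._next)
            self._entries[refnum] = {"payload": payload, "ops": 0, "closing": False}
            return refnum

    def begin_op(self, refnum):
        # Lock the list, raise the op count if the refnum is still valid.
        with self._cond:
            entry = self._entries.get(refnum)
            if entry is None or entry["closing"]:
                return None  # stale refnum: caller returns an error
            entry["ops"] += 1
            return entry["payload"]

    def end_op(self, refnum):
        # Reacquire the mutex to lower the op count.
        with self._cond:
            self._entries[refnum]["ops"] -= 1
            self._cond.notify_all()

    def destroy(self, refnum):
        with self._cond:
            entry = self._entries.get(refnum)
            if entry is None or entry["closing"]:
                return False
            entry["closing"] = True  # no one can raise the op count again
            # wait_for releases the mutex while waiting for in-flight ops.
            self._cond.wait_for(lambda: entry["ops"] == 0)
            del self._entries[refnum]  # now safe to throw the block away
            return True
```

A read or write wraps its work between `begin_op` and `end_op`. Because numbers are never reused, a stale refnum can never accidentally resolve to a newer allocation, which is the property a raw pointer cannot give you.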

-

I mean two calls to Read that both use the same number for the ID input. I assume that this block of pointers that we're creating would be encapsulated as a single reference datatype that, from the point of view of a user of the API, would look like a single refnum. Put a class wrapper around that cluster of pointers, make the wire 1 pixel cyan. Refnum is simply a shorthand for "cluster of pointer values". But then there's the question of adding an actual refnum lookup layer, because of this... How do you keep this VI from crashing? How do you know that a Read is not happening at the same time that Deinit is called? Deinit can't unregister the readers if a read operation is in progress because if it destroys any of the pointer blocks, you'll crash the Read. How does a Read know to return an error if it starts working on a refnum that has been unregistered? If all you do is pass in a block of pointers, those pointers could all be deallocated and, again, you'll crash when you try to use them. This is why LV structures like this go through a refnum layer where we guarantee that a refnum that has been destroyed is not going to come back into use so that a read knows to return an error on a deallocated refnum. I don't see any way to have a separate Deinit function without an actual refnum layer guarding your allocated pointer blocks. Nothing that I have read about the Disruptor guards against such abusive usages. They assume that you just wouldn't code a call to Deinit while a Read is still running. That's not something that a general API can rationally assume. Now, in some languages, crashing might be a perfectly acceptable answer, with documentation that if you get this crash, you coded something wrong, but we try to avoid that in LabVIEW.

-

But you would need to acquire the mutex in every read and every write just in case a resize tried to happen. The only time when you know only one operation is proceeding is when the buffer is empty (all current readers have read all enqueued items) and the write operation is called (assuming we figure out a way to prevent multiple parallel writers on the same refnum, which is solvable if we can find some way to expose the atomic compare-and-swap instruction, which might require changes for LV 2014 runtime engine). That's the only bottleneck operation in this system. In that moment, the write operation could resize the read buffer and then top-swap a new handle for the read indexes into a single atomic instruction into the block of pointers. So if you have the Write VI able to have a mode for Resize (and likely for Uninit, as I mentioned in my previous post) then you could resize. I don't see any other way to handle the resize of the number of readers. Anyone else attempting to swap out the pointer blocks faces the race condition of someone trying to increment a block and then having their increment overwritten by a parallel operation.

-

Hm... the Uninit is going to cause a race condition problem. If we just deallocate the pointers, everything crashes if there's a read still in progress. Either we add a mutex guard for delete -- which blows the whole point of this exercise -- or delete is something that can only execute when Write has written some "I'm done" sentinel value and the Reads have all acknowledged it. And we have a second problem of guarding against two writes on the same refnum. They can't be proceeding in parallel but we have no guards against that. Any proposals for how to prevent two writes from happening at the same time on the same refnum? (Or two reads on the same refnum?) Is there a maximum amount that the indexes can be negative and he can use even more negative numbers? Or some mathematical game like that?

-

Still need to propose a solution for handling abort. The DLL nodes have cleanup call backs that you can register, but that's not going to help us here. If you're going to do it in pure G, I think you have to launch some sort of async VI that monitors for the first VI finishing its execution and then cleans up the references if and only if that async VI was not already terminated by the uninit VI. Either that or the whole system has to move to a C DLL, which means recompiling the code for each target. Undesirable. Anyone have a better proposal for not leaking memory when the user hits the abort button? I do. When you do Init, wire in an integer of the number of readers. Have the output be a single writer refnum and N reader refnums. If you make the modification to get rid of the First Call node and the feedback nodes and carry that state in the reader refnums, then you can't have an increase in the number of readers.
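To show the shape of that Init proposal, here is a single-threaded Python sketch. All names (`RingState`, `Writer`, `Reader`, `init`, `write`, `read`) are hypothetical, and there is no overrun protection or synchronization; the only point is where the state lives. The "first call" flag and each reader's last-read index sit in the allocated block, not in the VI, so a second Init starts clean and the reader count is fixed at Init time.

```python
from dataclasses import dataclass, field


@dataclass
class RingState:
    slots: list
    write_index: int = 0
    first_call: bool = True                        # replaces the First Call? node
    last_read: list = field(default_factory=list)  # replaces the feedback nodes


@dataclass
class Writer:
    state: RingState


@dataclass
class Reader:
    state: RingState
    reader_id: int


def init(size, n_readers):
    """Return one writer handle and exactly n_readers reader handles."""
    state = RingState(slots=[None] * size, last_read=[0] * n_readers)
    return Writer(state), [Reader(state, i) for i in range(n_readers)]


def write(writer, value):
    s = writer.state
    s.slots[s.write_index % len(s.slots)] = value
    s.write_index += 1
    s.first_call = False


def read(reader):
    s = reader.state
    pos = s.last_read[reader.reader_id]
    if pos == s.write_index:
        return None  # nothing new for this reader
    s.last_read[reader.reader_id] = pos + 1
    return s.slots[pos % len(s.slots)]
```

Since the per-reader list is sized once in `init`, there is simply no operation that could add a reader later.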

-

Moral assertions for code

Aristos Queue replied to Aristos Queue's topic in LabVIEW Feature Suggestions

OH! I get it... you want to *join* the Inquisition! Well, that's a different question entirely. I believe they're taking applications. :-) -

Moral assertions for code

Aristos Queue replied to Aristos Queue's topic in LabVIEW Feature Suggestions

You dare oppose the cleansing fire? You are one of those who lack the stiff backbone to do what needs to be done to purify the code base! I will pass your name along to the Inquisition. Perhaps their tender ministries will help you see the error of your ways. Remember: He who does not cast the first stone commits the next sin! To everyone else: Remember, anyone who opposes this plan is a sinner who needs correction. Let the fate of crossrulz be a lesson for ye. Repent of your sinful code... rejoice when it burns in the flames of "p4 obliterate". Support the moral reeducation of virtual instrumentation! -

A programming language that looks a lot like other programming languages but requires a very strict *moral* code. Please let me know if you think this is a feature that should be added to LabVIEW. It would not be hard. https://github.com/munificent/vigil "Infinitely more important than mere syntax and semantics are its addition of supreme moral vigilance. This is similar to contracts, but less legal and more medieval."

-

mje: Yes, those functions are reentrant. ShaunR: I'd like you to try a test to see how it impacts performance. I did some digging and interviews of compiler team. In the Read and the Write VIs, there are While Loops. Please add a Wait Ms primitive in each of those While Loops and wire it with a zero constant. Add it in a Flat Sequence Structure AFTER all the contents of the loop have executed, and do it unconditionally. See if that has any impact on throughput. Details: With While Loops, LabVIEW uses some heuristics to decide how much time to give to one loop before yielding to allow other parallel code to run. The most common heuristic is a thread yield every 55 microseconds, give or take, when nothing else within the While Loop offers a better recommendation. You are writing a polling loop that I don't think LV will recognize as a polling loop because the sharing of data is happening "behind the scenes" where LV can't see it. A Wait Ms wired with a zero forces a clump yield in LabVIEW -- in other words, it forces the LV compiler to give other clumps a chance to run. If there are no other clumps pending, it just keeps going with the current VI. Because this is a polling loop, if the loop fails to terminate on one iteration, it does no good to iterate again unless/until the other VI has at least had a chance to update. The yield may help improve the throughput a bit. If these were not reentrant, the impact would be huge. The size of the data doesn't matter at all -- you would see a substantial performance hit if those functions were no-ops and non-reentrant. Just the overhead of the mutex lock and thread swapping would be substantial.
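For readers who think in text languages, the polling-loop change is analogous to the following Python sketch (hypothetical `Slot`, `write` and `read`; `time.sleep(0)` plays the role of the Wait Ms wired with zero, and how much it helps depends on the scheduler, just as in LabVIEW):

```python
import threading
import time


class Slot:
    def __init__(self):
        self.sequence = 0
        self.value = None


def write(slot, value):
    slot.value = value
    slot.sequence += 1  # publish only after the value is in place


def read(slot, last_seen):
    # Polling loop: if nothing changed, iterating again is pointless until
    # the writer has had a chance to run, so yield unconditionally at the
    # end of every failed iteration.
    while slot.sequence == last_seen:
        time.sleep(0)
    return slot.value, slot.sequence
```

The yield costs almost nothing when no other thread is waiting, and when one is, it hands over the time that would otherwise be spent re-checking a value that cannot have changed.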

-

Ok... the hang when restarting your code is coming from the First Call? and the Feedback Nodes. I'm adding allocation to your cluster to store those values. It needs a "First Call?" boolean and N "last read index" where N is the number of reader processes.

-

Ok. That makes sense. I think I see where this fits in now.

-

I don't know much about byte packing. I'll take your word for it. There's no pragma around the code. It is a class, though I don't think that means anything compile-wise vs. a struct.

-

In LV, a child class cannot have a static-dispatch method with the same name as its parent. This is called "shadowing" in other languages. If I had any doubts about that decision, today's text code debacle has cured them.