-

Posts

4,937 -

Joined

-

Days Won

304

ShaunR last won the day on June 18

ShaunR had the most liked content!

Profile Information

-

Gender

Male

LabVIEW Information

-

Version

LabVIEW 2009

-

Since

1994

Recent Profile Visitors

43,534 profile views

ShaunR's Achievements

-

I've just spent an hour arguing with an LLM (Deepcoder). TL;DR A.I. is useless at programming. I had a bug. I'd spent about an hour trying to figure it out and not succeeding but it shouldn't be that hard-I'm just missing something obvious. So. Ideal scenario for a clever AI to show dominance and help out a poor old flesh-bag programmer, right? Just point out the mistake or mistakes and laugh at my stupidity like a real coder. The bug was that the address from recvfrom would be 0.0.0.0 instead of 127.0.0.1. The problem was either bind wasn't binding to a specific address (which it was supposed to) or the address translation was not working quite right. I gave it 3 functions where I thought the bug was and explained that IPv6 seemed to be reporting correctly but IPv4 was in error: one function (Listen) had the bind function one function had IPv6 and IPv4 address translation from a SOCKADDR_STORAGE structure to strings and the other function was the read function with the recvfrom. This is an abbreviation of the conversion: - Round one. The following code has a bug that returns 0.0.0.0 as the IPv4 address from "recvfrom" when it should be returning "127.0.0.1". Find the reason that the code is returning 0.0.0.0 instead of 127.0.0.1 and rewrite the code to fix the issue. It told me how to bind to a specific address and how to use the recvfrom function. It then basically wrote the example on the MS page for recvfrom but within the Listen function. - Round 2. I'm not using the "sockaddr_in" structures; I'm using SOCKADDR_STORAGE structures. Please modify your example to use SOCKADDR_STORAGE structures. It apologised made an excuse about misunderstanding and then proceeded to create an example, still using sockaddr_in structures, but this time hardcoding "127.0.0.1" in the bind address. - Round 3. No, no, no. It needs to support user entered IPv6 and IPv4 but there is a bug with the IPv4 address. It also needs to use SOCKADDR_STORAGE as in the code I supplied to you. Reevaluate the code and write a version of the code that fixes the bug. It apologised, said that it understands why it got it wrong, and then proceeded to create an example, still using sockaddr_in structures, but now hardcoding "::1" in the bind address. -Round 4. Look. It needs to support both IPv6 and IPv4 and that's the reason I'm using SOCKADDR_STORAGE. If you don't have enough information then ask for clarification but you have the code that the bug is in so find the damned bug FFS! Another apology, said it can understand my frustration and then proceeded to spit out the example from MS again. This went on for an hour. No code I could actually use in my functions, never pointed out the bug in my code and the prompts just got longer and longer as I tried to head-off it's stupidity. This was one of the functions. int Addr2Address(SOCKADDR_STORAGE addr, PCHAR Address, int *Port, int *IPvType) { int err = 0; *IPvType = 0; switch (addr.ss_family) { case AF_INET6: { if (Address == NULL) {return 46;} *IPvType = 2; char strAddress[46]; inet_ntop(addr.ss_family, (void*)&((sockaddr_in6 *)&addr)->sin6_addr, Address, sizeof(strAddress)); break; } case AF_INET: { if (Address == NULL) {return 16;} *IPvType = 1; char strAddress[16]; inet_ntop(addr.ss_family, (void*)&((sockaddr_in6 *)&addr)->sin6_addr, Address, sizeof(strAddress)); break; } default: {err = WSAEPROTONOSUPPORT; break;} } *Port = ntohs(((sockaddr_in6 *)&addr)->sin6_port); return err; } The bug is in the AF_INET case. inet_ntop(addr.ss_family, (void*)&((sockaddr_in6 *)&addr)->sin6_addr, Address, sizeof(strAddress)); It should not be an IPv6 address conversion, it should be an IPv4 conversion. That code results in a null for the address to inetop which is converted to 0.0.0.0. I found it after a good nights sleep and a fresh start.

- 8 replies

-

- 1

-

-

- dvr

- ni software

-

(and 2 more)

Tagged with:

-

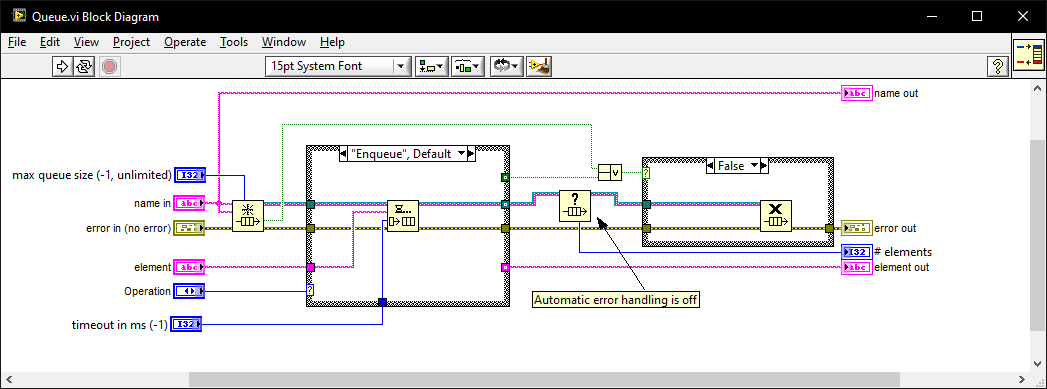

I once went for an interview where they gave me a coding test and asked me to modify it. It was a very long time ago so I don't remember the exact modification they wanted (nothing to do with memory leaks) but I do remember the obtain queue and read queue inside a while loop with the release queue outside. I asked if they wanted me to also fix the memory leak as well as the modifications and they were a little puzzled until I explained what you have just said. I must have seen (and fixed) this while-loop bug-pattern a thousand times since then in various code bases. I also created this VI which I generally use instead of the primitives as it intialises on first call, can be called from anywhere, and prevents most foot-shooting by rolling them all into a single VI and ensuring all references but 1 are closed after use. Queue.vi

-

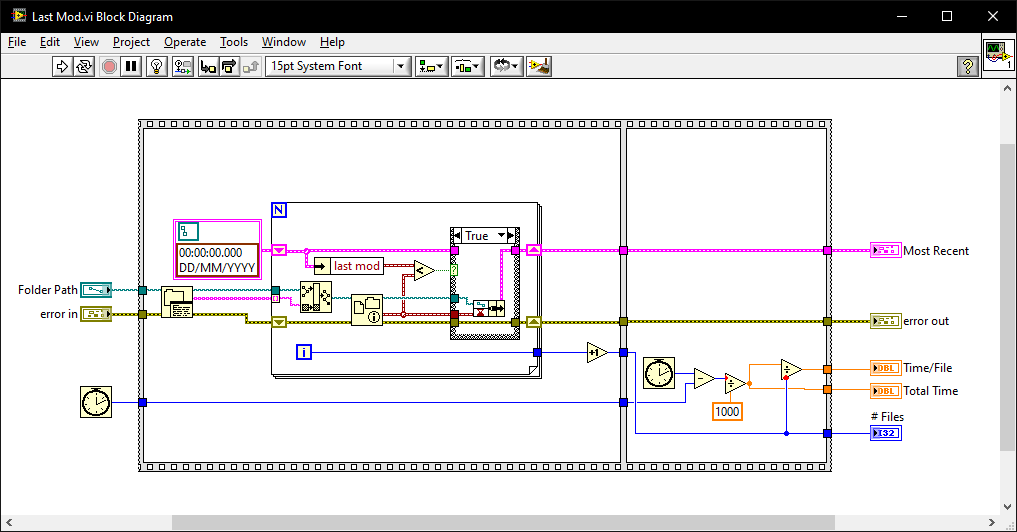

Something like this? file increment.vi

-

Serial Communication Question, Please

ShaunR replied to jmltinc's topic in Remote Control, Monitoring and the Internet

If it is indeed a fixed 5 byte message and not terminated with a char sequence then the message may be terminated by a break. See the Set Break example of how to set it. IIRC, you also need to set the End Mode For Writes property to Break and set Send End Enable to False then add the primitive after your Write. Another thing. The Write and Read have a little watch icon in the corner. This means they are asynchronous. While you are troubleshooting then right click on the icon and select "Synchronous" -

Serial Communication Question, Please

ShaunR replied to jmltinc's topic in Remote Control, Monitoring and the Internet

"Port Connected" is only applicable to NI products and doesn't work with OS Com Ports. -

Those aren't typo's and errors. They are tests to see if we are paying attention.

-

Serial Communication Question, Please

ShaunR replied to jmltinc's topic in Remote Control, Monitoring and the Internet

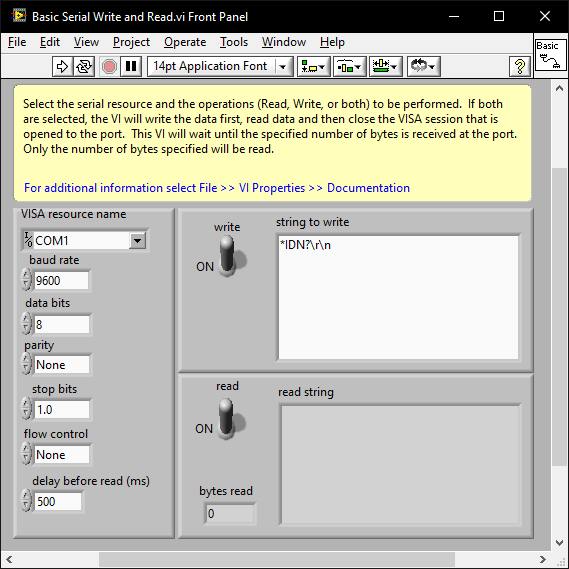

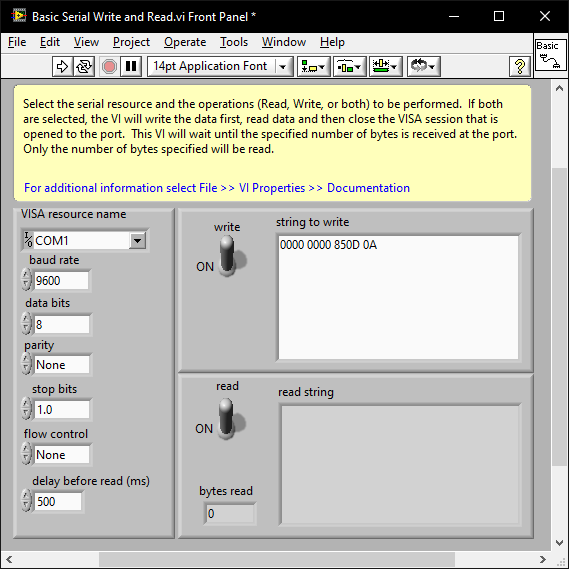

When you know the baud and parity etc; issues that result in the instrument not responding at all are almost always the termination character. Initalise the com port and try a few term chars (CR,LF, CR+LF). ensegre's example turns off the term char. You may just need to add 0D0A to the array. There are examples shipped with LabVIEW and you can also play around with VISA using the NI MAX software. Note that if you right click on the string control and select "Hex Display" you can enter the hex values: -

Seeking Architectural Guidance: Implementing a Plugin-Based System

ShaunR replied to LEAF-1LEAF's topic in LabVIEW General

VI server. Simple and easy to implement with no framework dependencies. Define a distinction between Services and Plugins (plugins don't contain state, services do). Use a standardised uniform front panel interface between plugins. I use a single string control (see this post) and events for returns. An alternative is a 2d array of strings which is more flexible. Each plugin is contained in it's own LLB which contains all of it's specific VI's. Just list the llb's in the directory for plugins to load. Replace the LLB to upgrade; delete to remove. Names starting with an underscore (either in the LLB name, directory name or file name) are ignored and never loaded. They are effectively "private". A scheme to prevent unknown plugins being loaded. -

Dadreamer is talking about minutes per change though. I still think the symptom is probably exacerbated by XNodes but probably not the fundamental problem.

-

Do you see the unresponsiveness in dadreamer's example?

-

Editing a constant in your test VI only results in a pause for about 1.5 secs on my machine. It's the same in 2025 and 2009 (back-saved to 2009 is only 1.3MB, FWIW). I think you may be chasing something else. There was a time when on some machines the editing operations would result in long busy cursors of the order of 10-20 secs - especially after LabVIEW 2011. Not necessarily XNodes either (although XNodes were the suspect). I don't think anyone ever got to the bottom of it and I don't think NI could replicate it.

-

1.8 MB?

-

You're about to solve JSON decoding.

-

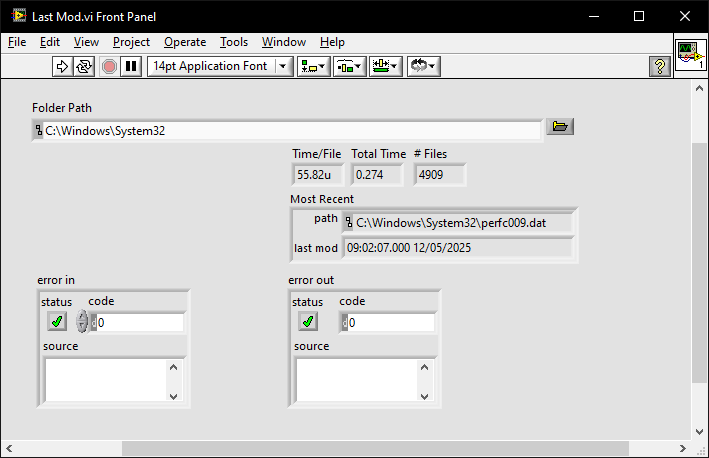

Find most recently created or modified image(file)

ShaunR replied to mooner's topic in LabVIEW General

-

We are on a hiding to nothing as we can't create objects. I abandoned those thoughts over a decade ago (maybe even 2 decades ago ). It is feasible with scripting but so slow and won't work in built applications. The half-way house is to use scripting to make the labview prototypes for typdefs and handlers then load dynamically as plugins but it's a lot of infrastructure just to propagate what are hopefully rare changes. I did play with that (hence my question to hooovahh) but in the end went for a string based solution to avoid it altogether. What happened to AQ's behemoth of a serializer? Did he ever get that working?