Posts posted by dadreamer

-

1 hour ago, alvise said:

What I can briefly explain is that if I set it to the UI thread, I can run DLLs that are not thread-safe. But if I run it as "Any thread", it will run in an unsuitable thread. This can cause problems.

I doubt there would be problems. Rolf has already set the CLFNs in his HikVision examples to any thread, so I concluded that those functions are reentrant (thread-safe). Of course, this should be confirmed in the developer's documentation, but I took it on faith. 🙂

Moreover, you should move your GetJPEG call to a parallel loop, because it blocks your callback event frame and prevents it from processing other events. That's why you see the GUI freezing. Alternatively, limit the event queue in the Event Structure settings, or use Flush Event Queue as suggested.

1 hour ago, alvise said:

or is it just one image? I guess not.

It is. Why do you think otherwise?

11 minutes ago, alvise said:

I'm struggling with how to save it as a jpeg.

What's your issue? Just write the array into a binary file with a ".jpg" extension exactly once and you're done.
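For reference, the same "write the raw bytes once" step in C is sketched below; `save_bytes` and the file name in the test are illustrative, not part of any SDK. The point is that nothing but the JPEG bytes goes into the file. In LabVIEW, if you use Write to Binary File, set its "prepend array or string size?" input to FALSE, otherwise a length header ends up in front of the JPEG data and viewers will reject the file.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper: write `len` raw bytes from `data` to `path`.
   Returns 0 on success, -1 on any I/O error. */
int save_bytes(const char *path, const unsigned char *data, size_t len) {
    FILE *f = fopen(path, "wb");          /* "b": no newline translation on Windows */
    if (f == NULL)
        return -1;
    size_t written = fwrite(data, 1, len, f);
    int close_rc = fclose(f);             /* flushes; may also report an error */
    return (written == len && close_rc == 0) ? 0 : -1;
}
```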

-

But if the GetJPEG call is blocking, you have no option other than implementing the PlayM4 callback in C code.

-

4 minutes ago, alvise said:

Should I select all function nodes as Any thread?

46 minutes ago, dadreamer said:

What if you switch all the HikVision and PlayM4 CLFNs from UI thread to any thread (yellow coloring)?

-

5 minutes ago, alvise said:

Everything to UI thread

To any thread. They should turn yellow. But yes, you have way too many events queued!

-

Re-read my previous message. And maybe you have a large number of events in the queue? Check with the Event Inspector.

-

1 hour ago, alvise said:

Do you have any suggestions for finding the array size?

Only those stated in the documentation.

> pJpeg [in] Address assigned by users for storing JPEG data, no less than the JPEG file size: suggested w * h * 3/2, in which w and h stand for the width and height of the image.

This is the suggested minimum size. But it may turn out that this size is not enough, so it needs some testing on your side. If you used BMP, there would be a more or less precise formula, but BMPs are considerably larger, as you know.
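As a quick check of the documented formula, here is a minimal C sketch (the function name is hypothetical). Note that w * h * 3/2 bytes is exactly the size of one uncompressed YV12 frame (12 bits per pixel), so it is a generous starting point for a compressed JPEG rather than a hard guarantee. For a 640 x 480 frame it gives 460800 bytes.

```c
#include <stdint.h>

/* Suggested pJpeg buffer size per the PlayM4 docs: w * h * 3/2.
   64-bit arithmetic avoids overflow for large frames. */
uint64_t jpeg_suggested_size(uint32_t w, uint32_t h) {
    return (uint64_t)w * h * 3 / 2;
}
```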

Also, as Rolf did a few posts ago, I suggest using the PlayM4_GetPictureSize function to get the actual image size. Insert it between the InputData and GetJPEG nodes.

2 hours ago, alvise said:

I can't close the VI unless the array is already finished with the specified size. LabVIEW freezes.

Are you saying that those GetJPEG/GetBMP functions are synchronous and don't return until the buffer is filled? What if you switch all the HikVision and PlayM4 CLFNs from UI thread to any thread (yellow coloring)? Then it would run in another thread and not freeze the GUI.

-

Well, I see from your data that you're finally getting a valid JPEG stream. Can you save that pJpeg array into a binary file and open it in a viewer, just to make sure it's the camera image? Next, you need to figure out the proper array size so that the GetJPEG function stops returning errors. And in your "Stop" frame you should perform the cleanup, such as stopping the playback, freeing the port, etc. Just find the counterpart functions for the calls in your UserEvent frame.
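A cheap way to check for a "valid JPEG stream" before even opening a viewer: a complete JPEG starts with the SOI marker FF D8 and ends with the EOI marker FF D9. The minimal C sketch below (hypothetical helper name) only checks those markers, so passing it does not prove the image decodes.

```c
#include <stdbool.h>
#include <stddef.h>

/* True if buf[0..len) starts with SOI (FF D8) and ends with EOI (FF D9). */
bool looks_like_jpeg(const unsigned char *buf, size_t len) {
    if (buf == NULL || len < 4)
        return false;
    return buf[0] == 0xFF && buf[1] == 0xD8 &&
           buf[len - 2] == 0xFF && buf[len - 1] == 0xD9;
}
```

Pass the size reported in pJpegSize, not the allocated buffer length: unused bytes at the end of an oversized buffer make the EOI check fail.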

-

1 hour ago, alvise said:

if I add the number "20130528" here

It's the date when the coder pzj added that define to the header.

1 hour ago, alvise said:

I get an output like below but it's just outputting an image

What do you mean?

-

@alvise Based on what Rolf said, try running this VI and report the PlayM4_GetJPEG error number.

-

3 minutes ago, Rolf Kalbermatter said:

I think you have to use the PlayM4_GetPictureSize() to calculate a reasonably large buffer and allocate that and pass it directly to the PlayM4_GetJPEG() function to MAYBE make it work.

He has already tried allocating a huge buffer of 3000000 bytes. That is definitely large enough to hold not only a single JPEG image but even several BMP ones. But it doesn't work either.

-

10 hours ago, Rolf Kalbermatter said:

But there is no guarantee and I can not find any documentation for those functions, not even in bad English.

Isn't this what you wanted? (See the PDF attachment.)

-

You could try switching to PlayM4_GetBMP, but I assume it won't give anything new. It's odd that even the last error number is zero.

-

I slightly modified your VI; try it.

1 hour ago, alvise said:

Where did "BITMAPFILEHEADER" and "BITMAPINFOHEADER" come from here?

These are Windows API bitmap definitions. They are 14 bytes and 40 bytes long respectively (the same in 32-bit and 64-bit builds), so the final formula should be 54 + w * h * 4, assuming 4 bytes per pixel. But it is valid only if you use PlayM4_GetBMP! For the JPEG format, a different header length has to be taken into account.
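The header arithmetic can be checked with a short C sketch. The structs copy the field layout of the Windows BITMAPFILEHEADER and BITMAPINFOHEADER under hypothetical names (BmpFileHeader, BmpInfoHeader) so it builds without windows.h; the file header is 2-byte packed in the Windows headers, hence the pragma. Like the formula above, it assumes 4 bytes per pixel.

```c
#include <stdint.h>

#pragma pack(push, 2)              /* file header is 2-byte packed on Windows */
typedef struct {
    uint16_t bfType;               /* "BM" */
    uint32_t bfSize;               /* whole file size in bytes */
    uint16_t bfReserved1;
    uint16_t bfReserved2;
    uint32_t bfOffBits;            /* offset of pixel data */
} BmpFileHeader;
#pragma pack(pop)

typedef struct {
    uint32_t biSize;               /* 40 */
    int32_t  biWidth;
    int32_t  biHeight;
    uint16_t biPlanes;
    uint16_t biBitCount;           /* 32 for the 4-bytes-per-pixel case */
    uint32_t biCompression;
    uint32_t biSizeImage;
    int32_t  biXPelsPerMeter;
    int32_t  biYPelsPerMeter;
    uint32_t biClrUsed;
    uint32_t biClrImportant;
} BmpInfoHeader;

/* 14 + 40 + w * h * 4 bytes for a 32-bit-per-pixel BMP. */
uint64_t bmp_buffer_size(uint32_t w, uint32_t h) {
    return sizeof(BmpFileHeader) + sizeof(BmpInfoHeader) + (uint64_t)w * h * 4;
}
```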

-

That's how someone did it in Delphi.

```delphi
function GetBMP(playHandle: Longint): TBitmap;
var
  ps: PChar;        // buffer that PlayM4_GetBMP fills
  bSize: DWORD;     // allocated buffer size
  bmpSize: DWORD;   // number of bytes actually written
  ms: TMemoryStream;
begin
  Result := TBitmap.Create;
  if playHandle < 0 then
    Exit;
  bSize := 3000000;               // fixed, "big enough" buffer
  GetMem(ps, bSize);
  ms := TMemoryStream.Create;
  try
    bmpSize := 0;
    if PlayM4_GetBMP(playHandle, ps, bSize, @bmpSize) then
      if bmpSize > 100000 then    // crude sanity check on the returned size
      begin
        ms.WriteBuffer(ps[0], bmpSize);
        ms.Position := 0;
        Result.LoadFromStream(ms);
      end;
  finally
    ms.Free;
    FreeMem(ps);
  end;
end;
```

But that "bSize := 3000000;" isn't very elegant, so I'd suggest using PlayM4_GetPictureSize and calculating the final buffer size as

sizeof(BITMAPFILEHEADER) + sizeof(BITMAPINFOHEADER) + w * h * 4

But you can test with that for now to make sure everything works. Another option would be to use the display callback, which is set by PlayM4_SetDisplayCallBack. There the frames should already be decoded and in YV12 format, so you'd have to convert them to standard RGBA or any other format you like.
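YV12 stores a full-resolution Y plane followed by quarter-resolution V and U planes (V first, unlike I420). The C sketch below converts such a frame to RGBA. The BT.601 limited-range integer coefficients, tightly packed planes (no row padding) and even width/height are assumptions; the thread doesn't say which color matrix or stride the display callback delivers.

```c
#include <stddef.h>
#include <stdint.h>

/* Convert a fixed-point (x256) value to 0..255 without shifting negatives. */
static uint8_t to_u8(int v) {
    if (v < 0)
        return 0;
    v >>= 8;
    return (uint8_t)(v > 255 ? 255 : v);
}

/* src: Y plane (w*h), then V plane (w/2*h/2), then U plane (w/2*h/2).
   rgba: output buffer of w*h*4 bytes. */
void yv12_to_rgba(const uint8_t *src, int w, int h, uint8_t *rgba) {
    const uint8_t *y_plane = src;
    const uint8_t *v_plane = src + (size_t)w * h;
    const uint8_t *u_plane = v_plane + (size_t)(w / 2) * (h / 2);
    for (int row = 0; row < h; ++row) {
        for (int col = 0; col < w; ++col) {
            size_t ci = (size_t)(row / 2) * (w / 2) + (size_t)(col / 2);
            int c = y_plane[(size_t)row * w + col] - 16;   /* limited-range luma */
            int d = u_plane[ci] - 128;                      /* Cb */
            int e = v_plane[ci] - 128;                      /* Cr */
            uint8_t *px = rgba + ((size_t)row * w + col) * 4;
            px[0] = to_u8(298 * c + 409 * e + 128);           /* R */
            px[1] = to_u8(298 * c - 100 * d - 208 * e + 128); /* G */
            px[2] = to_u8(298 * c + 516 * d + 128);           /* B */
            px[3] = 255;                                      /* opaque alpha */
        }
    }
}
```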

-

Did you implement PlayM4_Play as suggested?

-

Both of your PlayM4_GetJPEG calls return FALSE (failure). You need to figure out why.

-

OK, almost correct. You could replace that array constant with a U64 "0" constant, configure that CLFN parameter as Unsigned Pointer-sized Integer, and pass the "0" constant to the CLFN. But if it works as it is now, leave it.

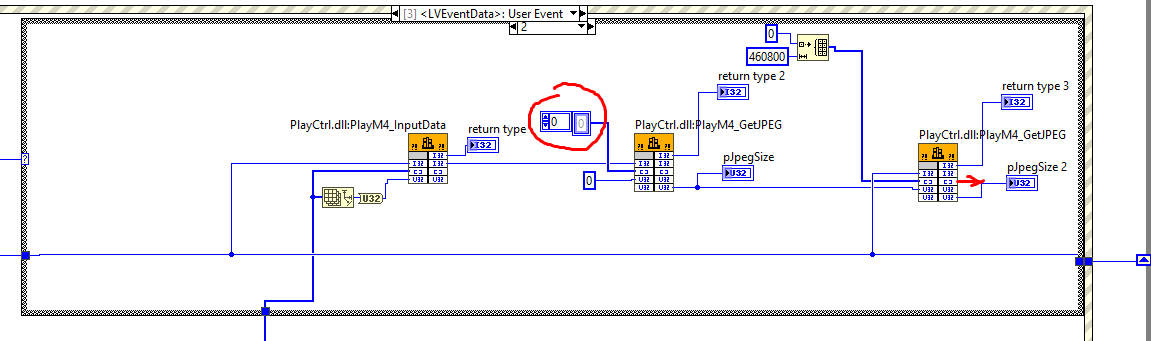

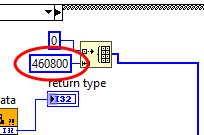

Yes, now delete that "460800" constant and wire the pJpegSize from the first PlayM4_GetJPEG CLFN. That's the point of using two PlayM4_GetJPEG calls: the first one gets the actual size, the second one works with an array properly allocated to that size.
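The two-call pattern is sketched in C below. Since the real SDK can't be linked here, `mock_get_jpeg` is a hypothetical stand-in with the same parameter shape as PlayM4_GetJPEG (port, pJpeg, nBufSize, pJpegSize) that always reports the size it needs. Whether the real PlayM4_GetJPEG fills pJpegSize when called with a NULL buffer and 0 size is not confirmed in this thread; it's Rolf's suggestion and has to be tested.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* HYPOTHETICAL stand-in: "encodes" whatever g_frame holds. */
static const unsigned char *g_frame = NULL;
static uint32_t g_frame_len = 0;

static int mock_get_jpeg(long port, unsigned char *buf, uint32_t buf_size,
                         uint32_t *out_size) {
    (void)port;
    *out_size = g_frame_len;               /* always report the required size */
    if (buf == NULL || buf_size < g_frame_len)
        return 0;                          /* FALSE: no buffer or too small */
    memcpy(buf, g_frame, g_frame_len);
    return 1;                              /* TRUE */
}

/* 1st call: NULL/0 to query the size; allocate; 2nd call: real copy.
   `len` must not be NULL. Caller frees the result; NULL on failure. */
unsigned char *grab_jpeg(long port, uint32_t *len) {
    uint32_t needed = 0;
    mock_get_jpeg(port, NULL, 0, &needed);
    if (needed == 0)
        return NULL;                       /* no size reported: give up */
    unsigned char *buf = malloc(needed);
    if (buf == NULL)
        return NULL;
    if (!mock_get_jpeg(port, buf, needed, len)) {
        free(buf);
        return NULL;
    }
    return buf;
}
```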

Now create an output array for the terminal marked with the red arrow. If it's a valid JPEG stream, you could try saving it to a binary file with a .jpeg extension and opening it in an image viewer. To convert the stream to a common LV array, you may use an extra helper VI like this one.

-

BTW, did you test this?

3 hours ago, Rolf Kalbermatter said:

What I did mean to do is to call GetJPEG() first with a null pointer for the data and 0 for nBufSize and then use the return value of pJPEGSize to allocate a big enough buffer to call the function again

-

7 minutes ago, alvise said:

This is how I wired dwBufSize->nBufSize.

No! Another guess?

-

Yes. Or there's an alternative: read Rolf's last paragraph. A small note: you don't need to convert the array to U8 explicitly; just right-click the "0" constant and select its representation.

-

41 minutes ago, alvise said:

Since I cannot directly create a constant U8 array

Why can't you?

https://www.ni.com/docs/en-US/bundle/labview/page/glang/initialize_array.html

-

15 minutes ago, alvise said:

Now I want to adapt "PlayM4_InputData" in LabVIEW, but your previous idea was to replace it with "PlayM4_GetJPEG".

I now think PlayM4_InputData is necessary. PlayM4_GetJPEG has no input for the "raw" buffer to decode, so PlayM4_InputData takes care of that. It's called on each callback, so it looks like it copies the buffer into its internal storage for processing.

15 minutes ago, alvise said:

So I'm looking into this function, but there is "pJpeg" in the parameters, as in the picture below. What values should be sent to it? I guess its output should be an array of images, right?

Just allocate a U8 array of "w * h * 3/2" bytes and pass it to the function. You should receive a JPEG memory stream in the pJpeg array, which can be converted into a common LV array later.

-

1 hour ago, alvise said:

PlayM4_Play

Well, it's a bit unclear whether you really need it. I found some conversations where people passed NULL as hWnd to it when they didn't want it to paint. Logically, it should start the buffer copying and decoding along with rendering the contents to some window. But maybe it just prepares its internal structures and the window, since the next call is PlayM4_InputData, which actually grabs the buffer.

So I suggest implementing it with the CLFN but setting hWnd to NULL (0) for now. You can remove it later if it's not really needed.

Using the DLL files of an application compiled with C# with labview

in LabVIEW General

Just save it and look at it. It's only a one-time test anyway, so it doesn't matter which moment your camera captures. You don't need that inner "2" Case Structure; remove it.