-

Posts

683 -

Joined

-

Last visited

-

Days Won

2

i2dx last won the day on February 21 2011

i2dx had the most liked content!

Profile Information

-

Gender

Male

-

Location

Duesseldorf/Germany

LabVIEW Information

-

Version

LabVIEW 2013

-

Since

2001

i2dx's Achievements

-

Yes, it's sad. I don't believe LV has a future either. The best we can hope for is that it is somehow maintained at the status quo. I guess the low acceptance of NXG broke their neck. But I told them that NXG is "Klick-Bunti-Kinder" software (hard to translate from German: it means something with too many useless features and way too much focus on "look & feel" instead of quality and functionality, overall something you'd give your kids to play with, but not for serious work). They didn't listen, as so often.

OK, it's not that big a problem for me, because I decided to walk a new path 3 years ago and switched to STM32 and developing with open source tools. What I did before with cRIOs, LVRT + FPGA, I now do with my own PCBs and C. I gave up my SSP 2 years ago, when they told me that there is no SSP any more and I would have to pay for a SAS-plan instead at almost double the price.

But I feel for all the developers who have put their efforts into this tool, have maybe 20 years or more of work experience, and now have to learn that NI is letting them down and that they have no other choice than to reinvent themselves.

Besides that: I'm not a native speaker, and I don't understand what you mean by the last sentence.

-

my gut feeling says: you're right

starting at minute 54:17:

>> we need patience, give NI a chance to prove itself

and

>> I am not convinced NI is gonna deliver on that, but I'm hopeful that NI's gonna deliver on that

... hope dies last

I guess LabVIEW is a dead horse.

-

I stumbled upon this issue, too, some time ago, and in the end I kept the code that generated the menus dynamically. If you don't use H U G E right-click menus, there is no noticeable performance loss. I've tested that with 40-50 menu items (which were themselves created dynamically, depending on the item you clicked on) and did not notice any delay.

gl&hf
cb

-

are we fighting? oops ... sorry guys, my English is not good enough to recognize the fine nuances. I only understand the technical terms, and "hidden intentions" only if they are obvious ... but if you want to blame me for that, just do it, I'm used to it.

ok, back to topic: I don't think that using references to the controls of a user interface adds "more" decoupling than using e.g. an event structure, because in the end you have to write code that handles all those user interactions, and if you don't use an event structure, you'll have to write all that code on your own. OK, you gain more decoupling in a technical sense, but in the end you have to reconnect the UI and the parts of your code that do the "work".

so if it's not offensive, I'd like to ask the question: what should "absolute" decoupling be good for?

cheers,
cb

-

yepp. the structure you describe is called "distributed software". I'm writing a lot of this type of code: the "work" is done on an RT system, and the user interface (I call it the "client") is running on a Windows system. In that case I use the TCP messages I send to the "server" like events. In fact such a message is an event, e.g. a pressed button. The "messages" that go directly into the state machine in the example above are wrapped into a TCP packet, sent to the server, unwrapped and put into the state machine on the RT server ... so the only difference is the method of transportation: writing directly into a queue vs. wrapping the message into a TCP packet, sending it across the network, unwrapping it and enqueueing it ...

gl&hf
CB
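To make the "only the transportation differs" point concrete, here is a minimal sketch in Python (not LabVIEW, and not the Simple TCP Messaging implementation). The 4-byte length header, the JSON payload and all function names are assumptions made up for this illustration. The same message ends up in a state-machine queue either directly or after a trip through a socket.

```python
import json
import queue
import socket
import struct

# Assumed framing for this sketch: 4-byte big-endian length, then a JSON payload.
HEADER = struct.Struct(">I")


def wrap(state, data=None):
    """Wrap a state-machine message into a length-prefixed packet."""
    payload = json.dumps({"state": state, "data": data}).encode("utf-8")
    return HEADER.pack(len(payload)) + payload


def recv_exact(sock, nbytes):
    """Read exactly nbytes, because TCP may deliver a message in pieces."""
    buf = bytearray()
    while len(buf) < nbytes:
        chunk = sock.recv(nbytes - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def unwrap_and_enqueue(sock, state_queue):
    """Server side: read one packet, unwrap it and enqueue it."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    message = json.loads(recv_exact(sock, length).decode("utf-8"))
    state_queue.put((message["state"], message["data"]))


def demo():
    local_queue = queue.Queue()   # state machine in the same application
    remote_queue = queue.Queue()  # state machine on the "server"

    # Transport 1: write the message directly into the queue.
    local_queue.put(("start_pressed", {"speed": 3}))

    # Transport 2: wrap, send over a socket, unwrap, enqueue.
    client, server = socket.socketpair()  # stands in for a real network link
    try:
        client.sendall(wrap("start_pressed", {"speed": 3}))
        unwrap_and_enqueue(server, remote_queue)
    finally:
        client.close()
        server.close()

    return local_queue.get(), remote_queue.get()
```

Both queues receive the same `(state, data)` tuple, so the state machine that consumes them does not need to know which transport was used.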

-

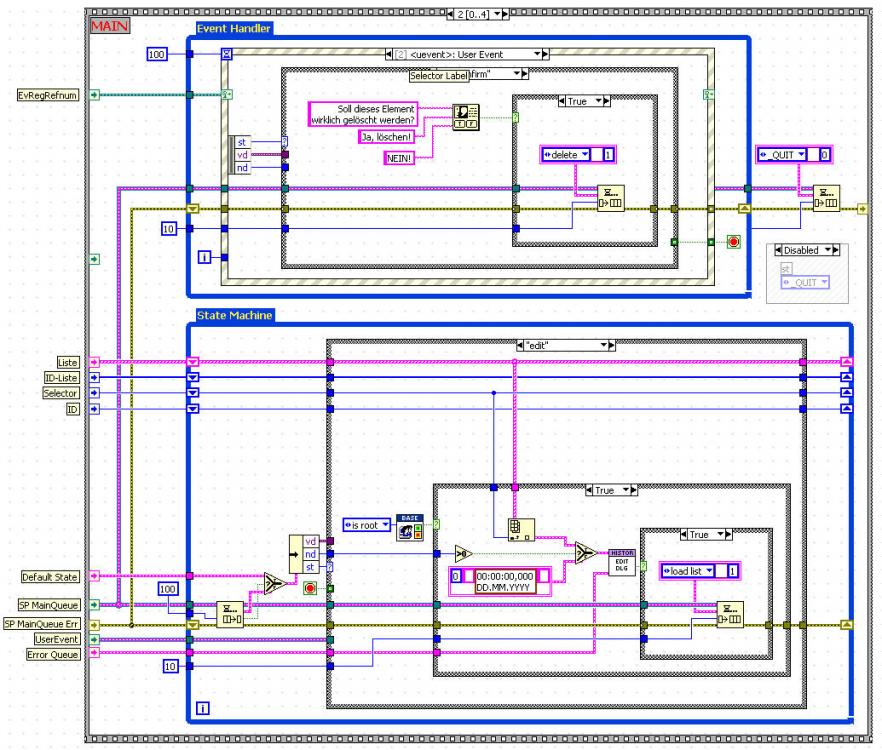

maybe I totally misunderstood the question / discussion, but why are you all talking about using control references when decoupling the UI from the code? I simply use this pattern:

The upper state machine handles all the FP events (button pressed, value changed, etc ...) and the state machine below does all the work. The user-event case also handles all the dialogs, etc. (all the stuff that blocks FP actions), and you can use the user-event case, too, to send messages back from the lower state machine to the event handler (e.g. to disable buttons, set values on controls, etc ...)

If you have more "tasks" that need to run in parallel, you can simply expand this pattern with a 2nd or 3rd "lower state machine" ...

gl&hf
CB
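For readers without the screenshot, the pattern can be sketched roughly like this in Python. It is only a text-based analogy to the block diagram: the queue names, the state names and the `sum()` stand-in for the real work are all invented for this example, and the FP events are processed as a fixed list instead of a real event structure.

```python
import queue
import threading


def worker(commands, user_events):
    """Lower state machine: does the work and reports back via user events."""
    while True:
        state, data = commands.get()
        if state == "exit":
            user_events.put(("worker_stopped", None))
            break
        if state == "measure":
            user_events.put(("disable_button", "start"))
            result = sum(data)  # stand-in for the real work
            user_events.put(("set_value", result))
            user_events.put(("enable_button", "start"))


def event_handler(fp_events, commands, user_events):
    """Upper state machine: turns FP events into commands, handles user events."""
    ui_log = []
    # Simplification: FP events come from a list, not from a real front panel.
    for event, data in fp_events:
        if event == "start_pressed":
            commands.put(("measure", data))
        elif event == "stop_pressed":
            commands.put(("exit", None))
    # "User-event case": messages sent back from the lower state machine.
    while True:
        name, value = user_events.get()
        ui_log.append((name, value))
        if name == "worker_stopped":
            return ui_log


def run_demo():
    commands, user_events = queue.Queue(), queue.Queue()
    thread = threading.Thread(target=worker, args=(commands, user_events))
    thread.start()
    fp_events = [("start_pressed", [1, 2, 3]), ("stop_pressed", None)]
    log = event_handler(fp_events, commands, user_events)
    thread.join()
    return log
```

Adding a second "lower state machine" in this sketch would mean one more command queue and one more worker thread, with both workers reporting into the same `user_events` queue.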

-

Set "treat read-only VIs as locked" to true as default

i2dx replied to crelf's topic in Source Code Control

great idea, u got my vote

-

yea, 7.1.1 was great. OK, there was no project explorer (and I could not imagine working on big distributed systems without it!), working with an FPGA was somewhat "tricky", and if you wanted to work on an RT target and a Windows target at the same time, you had to use some really dirty tricks, so many of the features in the current versions really improve my day-to-day work!

But on the other hand: almost all features of LV 7.1.1 I used in my daily work were working properly, and that's what I'd like to see again. And if that would mean that there are fewer features or no new features at all in the next versions, that's absolutely ok for me. I'd keep on paying my SSP anyway if I got a better, faster and improved development environment, with fewer bugs and more productivity.

There are some examples of what I don't like to see:

Since LV 5 we all knew that a copy and paste operation would place the pasted code in the BD centered at the last position of the mouse pointer. In LV 8.6.x somebody in R&D thought it would be funny to insert that code floating in the middle of the screen. This has already been changed back to the old behaviour, but why the heck did anyone even think about changing a workflow that had been established for years, without any reason? Did anyone complain about this established behaviour, and if yes, why did NI not laugh at him and call him names?

Second example: in LV 8.6.1 there "suddenly" was a bug in the property dialog for numeric controls. There was a hotfix a few days later, but why the heck did someone mess up this dialog, which had been unchanged since LV 8.2? Got some Perforce issues, eh?

3rd example: in LV 8.6.1 the dialog for editing enums starts jumping to the first line if there are more elements in the enum than the MCL box can handle without scrolling. I'd really like to meet the pupil who messed up that tool and do a little "debug session" with him.

Maybe some of you now think: why does this little stupid not install a newer version of LV? That's quite simple: I use newer versions of LV in other projects, but I also have one project where I have to stick to LV 8.6.1 (for several reasons). That means: at least 3 months a year I have to work with LV 8.6.1, and every day I see those totally unnecessary bugs and get annoyed by them. And the worst thing is: I know exactly that there is absolutely nothing I can do about it, because "my" bugs are fixed in a version I cannot use for that specific project, and that's a really frustrating perspective.

That's the point for me, too. My customers are satisfied if the software they get from me is on time, within budget, stable, and fits their demands. The decision how to create that software is up to me. If there is an advantage for me in using e.g. OOP or X-Controls, because that makes me faster, more flexible or lets me produce fewer bugs, then that feature is - of course!! - a good choice for me. If there is no advantage for me, I leave it. Nobody pays me for creating code with cool new features, I get paid for reliability and speed.

cheers,
CB

-

this is the right thread for me to drop a few lines

most of the stuff posted on that "I hate LabVIEW page" is pure bullsh**! Those guys simply should do a Basics I + II course or read a book like "LabVIEW for Newbies" or something like that, and most of their "problems" would be gone. If they don't understand how to use LabVIEW and how it works, it's not the failure of the tool or NI, it's their fault.

If I read things like: I'd like to answer:

why don't you stupid use the VI Documentation? and why don't you use the text tool in the BD?

--> have you ever heard about the text tool? You can even change the colour of your text with the color tool, really!

--> ROFL! you need a Basics I course man, really, or just use Java, C# or whatever. You are too stupid to understand what dataflow means and what it is good for ...

no one who is serious about LV would really care about the complaints of a no0b who is not willing to learn at least some of the basics.

LV is a great tool and a great programming language and I love it (and I earn most of my bucks with it ...) but there are also some points I really hate:

These yearly major version updates really suck! If someone asked me, I'd say: a major release once in 5 years would be enough, like Microsoft does with Visual Studio. And of course: all VIs written with this major release should be compatible with all the other minor releases of that major release.

The quality of the new releases sucks! I'd really like to see a stable version like 7.1.1 again, and I hate being an unpaid beta tester of a so-called "release". What I need is a stable version I can work with and rely on when things get tough. I don't need all those new features, most of which are mainly good for marketing but almost useless in production. I need reliability, stability and features that improve my daily work and help me get faster, more precise, etc.

For me, the behavior of NI over the last 5 years is like (in the Dilbert cartoon posted above): who the fu** needs customers? All we want is the money! And I really don't like being treated like that!

cheers,
CB

-

if I am allowed to say this: giving official statements to the users once per year reminds me much more of German bureaucracy than of a modern customer feedback system

-

with my kudo, the counter has now reached 250 ... still 50 to go

that's exactly how I use the idea exchange: I visit about once per month and kudo all the new ideas I like ...

I would not use that, because that would be information overflow - for my taste ...

-

if you lose the connection (for whatever reason) you have to close the connection on both sides, using the TCP Close primitive. Then you have to open a new listener on the server side and connect again on the client side, and maybe you want to give both sides a little wait time to allow the TCP stack to call its clean-up routines (50 ms should do ...). The client receives an error 66 when the server closes the connection (e.g. due to an error); you have to handle that one, and just ignore error 56 if the server has not yet sent data ...

I've been using the Simple TCP Messaging protocol for years now on cRIOs, PXIs and RT Desktops and never had any problems, except these 2:

- you should not "bomb" a cRIO target with 2 connection requests simultaneously if you use a multi-connection protocol --> use the error cluster to open the connections (ports) one after another
- you should (of course) NOT try to send more data than the physical layer can handle - even with crappy hardware you are on the safe side with data rates of 5 MByte per second on a 100 MBit link and 50 MByte per second on a Gigabit link

cheers,
CB
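The close / wait / reconnect sequence could look roughly like this in Python sockets. This is a sketch under assumptions, not LabVIEW code: a socket timeout plays the role of error 56 (nothing sent yet, ignore), an empty read plays the role of error 66 (peer closed the connection), and the helper names are made up. For brevity the demo keeps one listener open instead of opening a new one on the server side.

```python
import socket
import time


def read_or_none(sock, nbytes, timeout_s=0.1):
    """Return received data, or None if the peer has simply not sent anything yet."""
    sock.settimeout(timeout_s)
    try:
        data = sock.recv(nbytes)
    except socket.timeout:
        return None  # comparable to ignoring error 56 (timeout)
    if data == b"":
        # comparable to error 66: the peer closed the connection
        raise ConnectionResetError("peer closed the connection")
    return data


def reconnect(sock, address, settle_s=0.05):
    """Close our side, give the TCP stack ~50 ms to clean up, then connect again."""
    sock.close()
    time.sleep(settle_s)
    return socket.create_connection(address)


def demo():
    listener = socket.create_server(("127.0.0.1", 0))  # port 0: any free port
    address = listener.getsockname()

    client = socket.create_connection(address)
    conn, _ = listener.accept()

    # Server has not sent anything yet: treated as "no data", not as a failure.
    first = read_or_none(client, 1024, timeout_s=0.05)

    # Server closes the connection, e.g. because of an error on its side.
    conn.close()
    try:
        read_or_none(client, 1024, timeout_s=1.0)
        closed_seen = False
    except ConnectionResetError:
        closed_seen = True

    # Client closes its side too, waits briefly and connects again.
    client = reconnect(client, address)
    conn, _ = listener.accept()
    conn.sendall(b"hello")
    data = read_or_none(client, 1024, timeout_s=1.0)

    conn.close()
    client.close()
    listener.close()
    return first, closed_seen, data
```

The point of separating the two cases is that only the "peer closed" case triggers the reconnect; a plain timeout just means polling again later.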

-

yea! I like the function of my micro-wave, too! (really!)

-

I don't think the logo should look too much like a BD. LAVA is the front panel of the LV community (because we are talking in the bright light of the general public here), not the hidden block diagram.

i like it!