Search the Community

Showing results for tags 'vi server'.

-

Hello all, has any of you ever been able to put more than 30 VIs in the exported VIs list of VI Server? I think I found a bug that has been there since at least LabVIEW 2011. WAIT... don't give the easy answer, "export all VIs". If you were about to answer that, it means you are not developing a safe application. Thanks for any help. Benoit

-

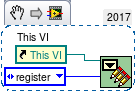

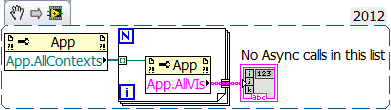

I thought there was an easy, built-in VI Server way of doing the following, but I haven't found one. Am I missing something trivial? I have one application instance spawning clones of a certain VI, and I would like to get an array of the VI refs of all of these clones. I thought I could do this via some property like Application:All VIs in memory, but I haven't found a suitable one; All VIs in memory returns only the base VI names. Lacking that, I resort to registering all my clones in an FGV as they start up, and I consult the FGV at will. Is there a more direct way? RegisterMovieWriters.vi I also note that I have to associate each VI ref with its clone name in the FGV, otherwise plain refs to different clones match as equal in lookups.
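The register-on-startup workaround described above can be sketched outside LabVIEW as a small registry keyed by clone name. This is only an illustration in Python, not LabVIEW code: `CloneRegistry`, its methods, and the clone names used with it are hypothetical stand-ins for the FGV and the VI refs.

```python
import threading


class CloneRegistry:
    """Thread-safe registry keyed by clone name (hypothetical stand-in for the FGV)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._clones = {}  # clone name -> reference

    def register(self, clone_name, ref):
        # Called by each clone as it starts up.
        with self._lock:
            self._clones[clone_name] = ref

    def unregister(self, clone_name):
        # Called by each clone as it shuts down; unknown names are ignored.
        with self._lock:
            self._clones.pop(clone_name, None)

    def names(self):
        # Sorted list of the clone names currently registered.
        with self._lock:
            return sorted(self._clones)

    def all_refs(self):
        # Snapshot of every registered reference.
        with self._lock:
            return list(self._clones.values())
```

Keying on the clone name rather than on the reference mirrors the observation in the post that plain refs to different clones compare as equal.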

-

I am running into an issue where my VI Server connection goes stale after a few hours, and I am looking for a fast way to detect this and recover. Here is what I am currently doing:

On the first call, open the application reference and then the VI reference, and cache both. Use the VI reference to call the remote VI. On subsequent calls, test the cached references to verify they are still valid, then call the remote VI.

What appears to be happening is that the references still look valid in the caller, but the connection is broken, so the remote call fails. I then detect this, reopen the app and VI refs, and can call the remote VI again. The issues are:

The failing remote call takes a long time to time out, and I do not see how to control this timeout. The test of the refs does not actually check whether the network connection is still good.

The result is that it takes a long time to recover, which upsets the user because the system appears to be locked up. What I need is a way to control the timeout of the 'call by reference' node, or a way to quickly test the references to verify the network connection is still good before I attempt the remote call. Any ideas? Thanks, -John
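One way to picture the "quick test before the remote call" is a short TCP probe with a bounded timeout. The Python sketch below is an illustration only: `can_connect` is a hypothetical helper, and it checks only that a new TCP connection to the given host and port can be opened. It says nothing about whether an existing VI Server session is still alive.

```python
import socket


def can_connect(host, port, timeout_s=1.0):
    """Return True if a new TCP connection to (host, port) opens within timeout_s."""
    try:
        # create_connection raises OSError (including timeouts) on failure.
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False
```

A caller could run such a probe against the remote VI Server port and, when it fails, skip straight to reopening the references instead of waiting for the long call timeout.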

-

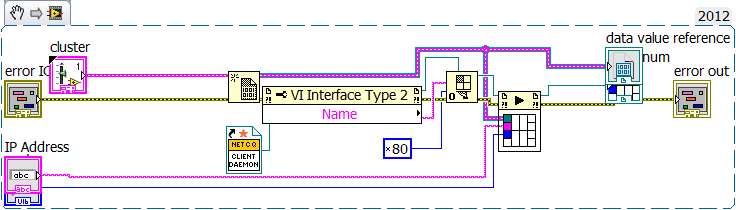

Hi all, I almost never ask for help in LabVIEW... but for this one, I have no idea where to find a solution to my problem. I'm trying to exchange data between two executables. The way I did this in the past was VI Server, but it seems that something in LabVIEW 2011 does not work the same way it did in LabVIEW 8.6. I created 3 VIs in my project: one publisher, one subscriber, and one Get-Set variable that will be used to exchange data between my 2 .exe files. Whatever I try, I cannot figure out how to read the data from the Get-Set. Even getting the list of VIs available in memory is not working. How can I access this VI through VI Server with an address at the input of the application reference? I'm pretty sure it has something to do with security... but what... Thanks for your help.

-

I have run into a bit of a problem with how I am trying to do networked messaging between a client and a server application. I am considering trying Network Streams as a solution but wanted to see if anyone has had success (or failure) with this solution or has a better idea.

First, a little background on the application: In my system, there are N servers running on the network. Each of these is running on its own VM with a static IP and unique machine name. There are also N clients. These are running on physical machines, VMs and a Windows RDS (Remote Desktop Server). The messages are all abstract class objects. Both client and server have a copy of the abstract classes so they can send/receive any of the messages.

My current solution is to use VI Server to push messages from one application to the other. There is no polling. Clients and servers can go offline at any time without warning, and the application at the other end of the connection must deal with the disconnect gracefully. In normal operation, the client will establish a connection to a group of servers (controlled by the user). The client provides its machine name and VI Server port. Each server then connects back to the client. All messages then pass via these connections. Therefore, a client is uniquely identified to the server by its network machine name.

Now the problem. A Windows RDS session does not have a unique machine name. Instead it uses the machine name of the RDS. So, if two users run the client application in two separate RDS sessions at the same time, when they connect to the servers, they will look like the same machine. There is no way to uniquely identify them and route messages to the correct one.

So, I have started looking at network streams as a possible solution. They looked interesting because you could add a unique identifier to the URL so you could have more than one endpoint on a single machine. This looked like it would solve my problem because I could combine the machine name and user name to make my client endpoint unique. But here is the process I would need to follow:

Server creates an endpoint to listen for client connections. Client gets the list of server names (from a central DB) and then tries to connect to each server's endpoint. If successful, the client creates a unique reader endpoint for that server and then passes the server the information about how to connect to this endpoint. When the server connects to this endpoint, it also creates a unique reader for the client and sends that info. The client connects to the server using this unique endpoint and we now have two unique network streams set up between client and server, one in each direction. The client then disconnects from the first server endpoint since it now has its own unique connection.

Both ends will need to have some sort of loop that waits for messages (flattened class objects) and then puts them into the proper local queue for execution within their application. Both will also need to be able to send a shutdown message to each of these loops when exiting to close the connection.

My concerns are how to have the server listen for connections from multiple clients at once with its generic endpoint. There is no guarantee that only one client will try to connect at a time. And once the client is fully connected and releases the generic connection, will the server be able to listen for more connections or will that endpoint be broken? How do I reinitialize it?

This is all so much easier with VI Server that I might have to just give up on the RDS solution altogether. But I want to give this my best shot before I do that. Thanks for any tips or ideas or pointing out any pitfalls I missed. -John
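The "generic endpoint that keeps listening while several clients connect" pattern can be illustrated with plain TCP sockets. The Python sketch below is not Network Streams and does not show how Network Streams endpoints behave. It is a substitute technique under stated assumptions, and `Handshake`, `start_listener`, `make_client_id` and `handshake` are hypothetical names. It also shows a client identifier built from machine name plus user name, as proposed in the post.

```python
import socket
import socketserver
import threading


class Handshake(socketserver.StreamRequestHandler):
    def handle(self):
        # Each connecting client sends one line containing its unique identifier.
        client_id = self.rfile.readline().decode().strip()
        with self.server.lock:
            self.server.seen.append(client_id)
        self.wfile.write(f"welcome {client_id}\n".encode())


def start_listener(host="127.0.0.1", port=0):
    # One listening socket; every accepted client is handled in its own thread,
    # so the listener stays available for further connections.
    server = socketserver.ThreadingTCPServer((host, port), Handshake)
    server.daemon_threads = True
    server.seen = []
    server.lock = threading.Lock()
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def make_client_id(machine, user):
    # Machine name alone is ambiguous on an RDS host, so the user name is added.
    return f"{machine}/{user}"


def handshake(address, client_id):
    with socket.create_connection(address, timeout=2.0) as s:
        s.sendall((client_id + "\n").encode())
        return s.makefile().readline().strip()
```

In this sketch two clients from the same machine name remain distinguishable because the identifier includes the user name, and the listener is never "used up" by a finished handshake.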

-

Un-abortable from VI Server: Start Asynchronous Call Prepare to call and forget 0x80 I'm trying to abort call-and-forget VIs that don't get shut down properly. I'm launching them using 0x80 (call and forget). The problem is, I can't seem to find them in memory using VI Server. If I "trick" LabVIEW by opening a VI by name and putting in a correctly guessed clone name, e.g. dameon.vi:3, and it just so happens to be the right name, I can find it. Why isn't it appearing in All VIs in memory? Is there any way to find clones of a VI through VI Server? Stranger still: the name of the VI when I hover over the abort button is something like dameon.vi:Hostdaemon:ProxyCaller.234908238:3 wtf? How can I abort call-and-forget VIs programmatically? Lastly, I'm aware of Abort.vi, though I forget who programmed it. I got it off lavag at some point, see attached. ~Jon Oh yeah, even if I get the right clone name from VI Server through my educated guess, I still can't abort it programmatically with the abort method. Showing the front panel with an invoke node does work, however (wtf? why?). I can then hit the abort button on the front panel of the daemon, and it does stop. ~Jon Abort_LV82_v100.zip

- 10 replies

Tagged with: asynchronous, call and forget (and 3 more)

-

I need to find a transport for message objects that allows two-way communication without polling but is limited to server-side connections only. So, the client can connect to the server but the server cannot connect to the client. First some context: My application communicates over the network using VI Server. My client app (the UI) opens a ref to a VI in my server app (the engine) and sends a message object containing the client app’s machine name and VI Server port. The server app then opens a ref to a VI in my client and sends a message object with the reply data. I now have a two-way communication channel via VI Server and can pass any message object back and forth without polling. I learned today that our IT department plans to block all incoming connections to all non-server machines in the future. So, my client would still be able to connect to the server app within the network, but the server would not be able to connect to the client app because of this rule. This will completely break my networked messaging system. I do not know of a way to set up VI Server in LabVIEW so that only one end can connect to the other while still allowing two-way communication without polling. Does anyone use a message system that would work in my situation? I would prefer to continue to use VI Server, but I am willing to look at other solutions, as long as they are very robust and have low latency. Thanks in advance for your help. -John
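The constraint described above (only the client may open connections, yet the server must be able to push messages without polling) is the shape of a single client-initiated TCP connection used in both directions. The Python sketch below illustrates that general idea only; it is not VI Server, and `send_msg`, `recv_msg`, `serve_once` and `run_demo` are hypothetical names. Messages are framed with a 4-byte length prefix, and the client simply blocks on a read until the server sends something.

```python
import socket
import struct
import threading


def send_msg(sock, payload):
    # Frame each message as a 4-byte big-endian length followed by the bytes.
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def recv_exact(sock, n):
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def recv_msg(sock):
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, length)


def serve_once(listener):
    # The server never dials out; it replies and pushes on the accepted socket.
    conn, _ = listener.accept()
    with conn:
        request = recv_msg(conn)
        send_msg(conn, b"reply to " + request)
        send_msg(conn, b"unsolicited update")


def run_demo():
    listener = socket.socket()
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    worker = threading.Thread(target=serve_once, args=(listener,), daemon=True)
    worker.start()
    received = []
    # Only the client opens a connection; it then blocks on reads (no polling).
    with socket.create_connection(listener.getsockname(), timeout=2.0) as client:
        send_msg(client, b"hello")
        received.append(recv_msg(client))
        received.append(recv_msg(client))
    worker.join(2.0)
    listener.close()
    return received
```

Whether a given firewall policy permits long-lived outbound connections like this is an assumption that would need checking with the IT department.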

-

I have been working on an architecture that uses VI Server to send messages between application instances, both local and across the network. One of the problems I have run into is the fact that VI Server calls are blocked by activity in the root loop (sometimes referred to as the UI Thread). There are several things that can cause this: other VI Server calls, system dialogs (calls to the one and two button dialog functions), the user dropping down a menu without making a selection... (I'm sure there are more...) Since this is a pretty normal way of communicating between applications, I was wondering if anyone had any ideas for a workaround. Here is a basic description of my architecture: A message is created and sent to a local VI that sends it to the outside application instance. The local sending VI opens a VI Server connection to the remote instance. It then calls a VI in the remote instance that takes the message as input. This remote VI then places the message in the appropriate queue on the remote instance so it gets handled. If the remote instance's root loop is blocked, the sending VI on the local machine is also blocked. I could try to eliminate all system dialogs from the remote application, but that only partially addresses the issue. I really wish a future version of LabVIEW would eliminate this problem with the root loop and VI Server altogether. BTW: using LV2012 but this issue exists in all versions. -John