Posts posted by Götz Becker

-

QUOTE (Doon @ Apr 16 2008, 05:58 PM)

Even More reason for me to push (read: beg) for an upgrade. Thanks.

After I nearly released a build today with some parts configured as simulated via conditional symbols, I too am begging for this to be included soon.

Reducing the overhead of things to remember when the customer is breathing down your neck asking if the app is ready yet (answer: no, not yet, but soon...) would be great!

-

QUOTE (cmay @ May 6 2008, 12:39 AM)

Continuing on this topic... is there a property that tells which type of numeric the control is (i.e. whether it's an I32, DBL, etc.)?

Hi,

I would try and play a little with GetClusterInfo.vi, GetArrayInfo.vi and GetTypeInfo.vi found in <vilib>/Utility/VariantDataType/VariantType.lvlib

The VIs in there aren't documented very well, but you should be able to get the needed information out of them. (Beware... trying to traverse a complex structure programmatically can be tricky.)

-

QUOTE (Michael_Aivaliotis @ May 1 2008, 08:36 PM)

With enough time and energy one could port some OSS C/C++ code to LV.

-

QUOTE (Aristos Queue @ Apr 21 2008, 06:05 PM)

Too easy for a real Godwin's Law case. I doubt that this thread will end here! (Quirk's Exception)

-

QUOTE (tcplomp @ Apr 18 2008, 07:36 PM)

Although I can't use your test VIs (DaDa.vi is missing), the behavior is normal. If an indicator is wired to the connector pane and only written conditionally, LV will erase its contents whenever it is not explicitly written.

Download File:post-1037-1208546656.vi

Download File:post-1037-1208546643.vi

Edit: I hope I understood your question right.

-

Hi,

has anyone seen such a document for 8.5.1?

-

QUOTE (JFM @ Apr 1 2008, 03:36 PM)

I added a prealloc before and restarted my test (and hope for the best).

http://lavag.org/old_files/monthly_04_2008/post-1037-1207125388.png

Greetings

Götz

-

QUOTE (TiT @ Apr 1 2008, 12:34 PM)

I saw that your methods all have control terminals inside of the error case structure. This might not change a lot, but if I understand the quote above correctly, then it can only be better to have the control terminals outside of the case structure.

Hope this can help

Hi,

thanks for the link. I didn't think about the controls inside the case structures. Usually I only look for nested indicators and the dataflow of "passed-through" data like references.

I'll try the hint and hope for the best.

-

Hi and thanks for your replies,

I still don't know why my memory consumption grows.

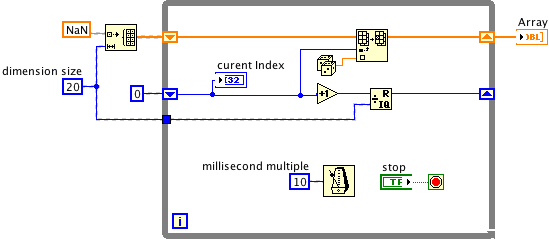

The code I used is in the attachment: Download File:post-1037-1207038045.zip

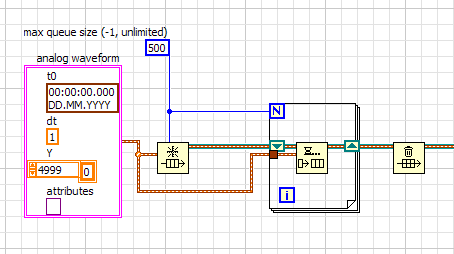

The queue with the waveforms is limited to 500 elements and the producer VI, which writes random data into the Q, has a max Wfm length of 5000 (DBL Values). So the Q size in memory should max out at about 20MB.
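The 20MB figure can be checked with a quick back-of-the-envelope calculation. The sketch below is only an illustration in Python (the names are made up for this example). It assumes 8 bytes per DBL sample and ignores any per-waveform overhead such as timestamp, dt, and attributes, so it is a lower bound on what the queue payload can reach when full.

```python
# Rough upper bound for the raw sample data held in a full queue.
# Assumption: only the DBL samples are counted; waveform metadata
# (t0, dt, attributes) and allocator overhead are ignored.
MAX_QUEUE_ELEMENTS = 500   # queue size limit stated in the post
MAX_WFM_SAMPLES = 5000     # maximum waveform length stated in the post
BYTES_PER_DBL = 8          # a DBL is a 64-bit floating-point value


def max_queue_payload_bytes(elements: int = MAX_QUEUE_ELEMENTS,
                            samples: int = MAX_WFM_SAMPLES,
                            bytes_per_sample: int = BYTES_PER_DBL) -> int:
    """Return the sample data size in bytes when every queue slot is full."""
    return elements * samples * bytes_per_sample


payload = max_queue_payload_bytes()
print(f"{payload} bytes = {payload / 1e6:.1f} MB")
```

With these numbers the product is 500 x 5000 x 8 = 20,000,000 bytes, which matches the estimate of about 20MB.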

Perhaps someone has an idea where all my memory is used.

Edit:

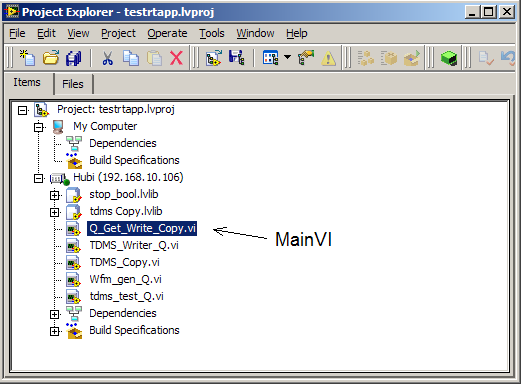

Sorry I didn't make it clear which is the Main VI (Q_Get_Write_Copy.vi)

-

Hi,

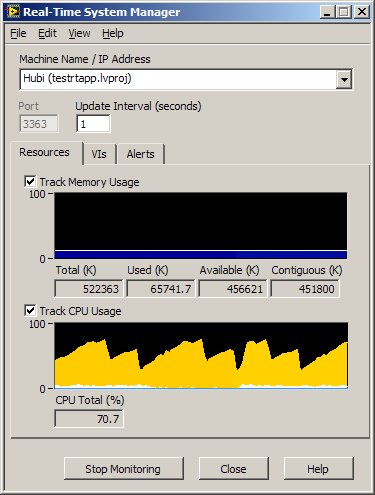

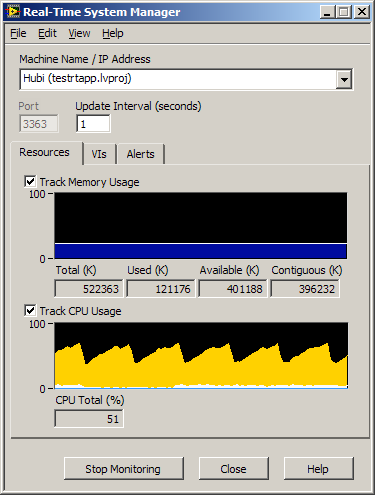

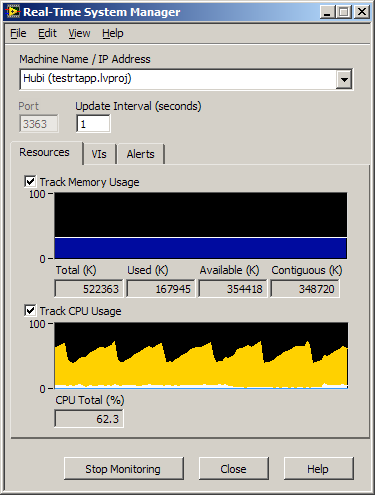

I am running small test apps to check whether design ideas for a bigger project run without memory leaks.

My current app uses queues to send waveforms of different lengths to a save routine, which writes them into a TDMS file.

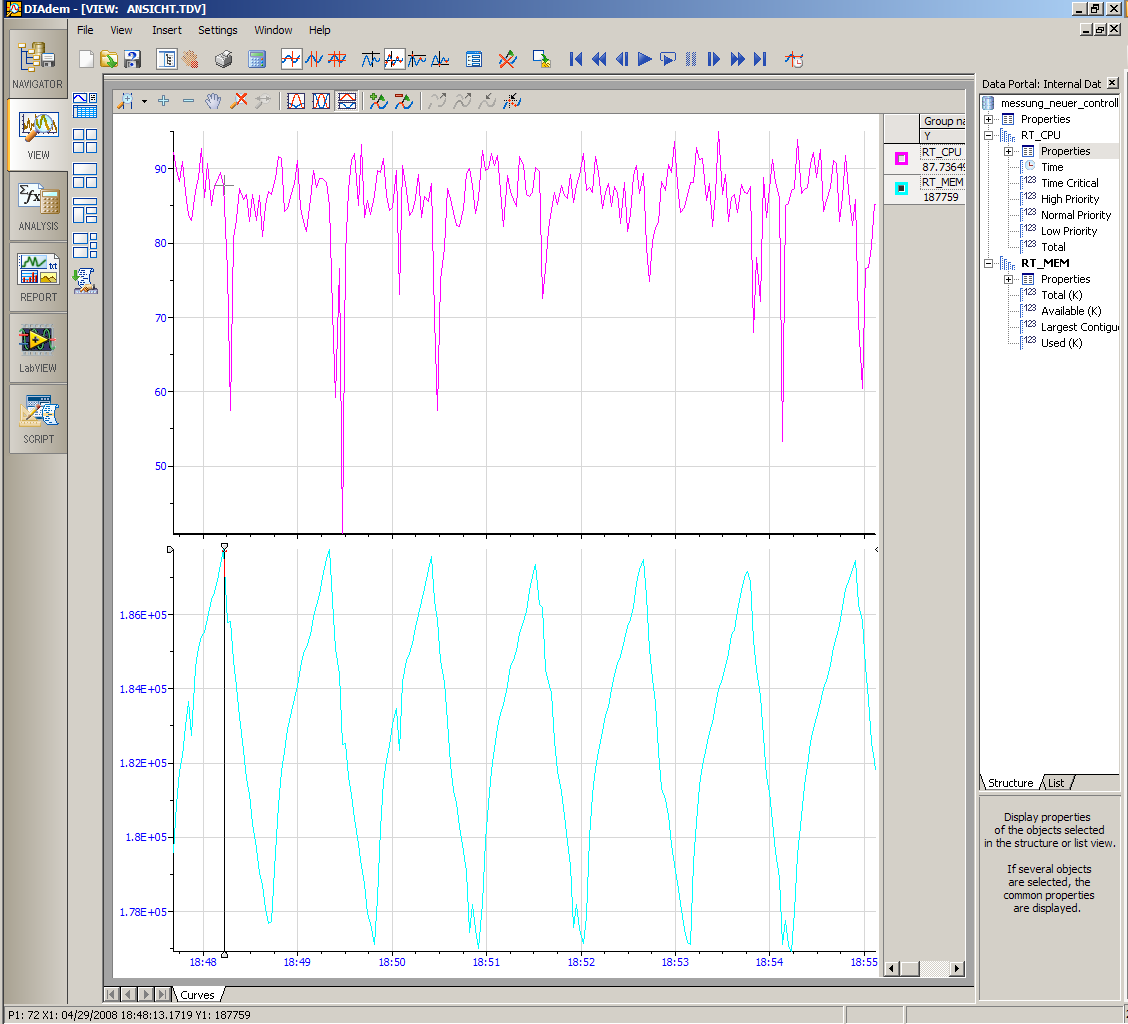

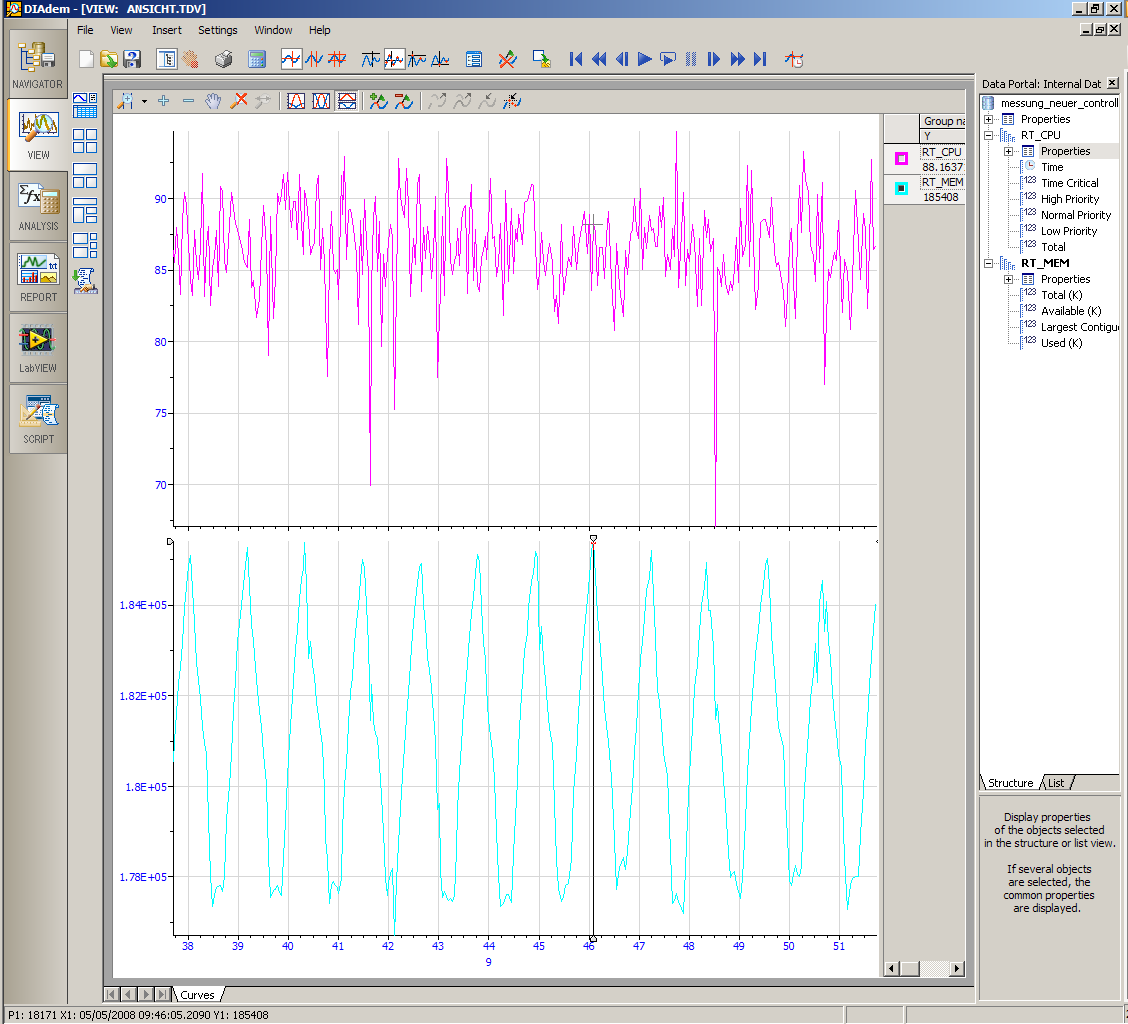

I understand that the way I do this could lead to memory fragmentation. The RT System Manager showed this:

After startup

after 6 days running

after 11 days running

The main question for me is: when will the runtime free some memory again?

Does it only kick in once the available memory falls below a threshold? That would require a different test application, one that produces cyclic memory shortages or so.

Greetings from a cloudy Munich

-

QUOTE (neB @ Mar 12 2008, 11:48 AM)

Benchmark regularly as you go.

I ran a small test app for several hours yesterday that had some loops and various instances of GetTypeInfo. It was not very complex, but it showed good results with steady memory statistics. If I find something in the real app I'll report it.

-

Hi and thank you all for your replies.

The target platform will be PXI RT-Controllers and the usage for the variants will be for the RT-Host communication and in the internal sequencing engine. We recently had a discussion with some vague arguments implying possible creeping memory leaks with variants. For the "real" RT-tasks we generally try to stay away from all memory allocations of course.

Your responses and this discussion helped to clear the accusations against our planned architecture :thumbup:

-

Hi,

I am currently using variants in a RT app without problems (basically always a typedef cluster with a Cmd-Enum and a Variant for the data).
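For readers who do not have LabVIEW at hand, the "Cmd-Enum plus Variant" cluster can be pictured with a rough text-language analogue. The Python sketch below is not LabVIEW code and not the actual application; the command names and the handler are hypothetical and only show the shape of the pattern: a fixed command enum plus a loosely typed payload that the receiver checks before use.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Any


class Cmd(Enum):
    """Hypothetical command set; stands in for the Cmd-Enum in the cluster."""
    START = auto()
    STOP = auto()
    SET_RATE = auto()


@dataclass
class Message:
    """Analogue of the typedef cluster: a command plus an untyped payload."""
    cmd: Cmd
    data: Any = None  # plays the role of the Variant


def handle(msg: Message) -> str:
    """Dispatch on the command and validate the payload type before using it."""
    if msg.cmd is Cmd.START:
        return "started"
    if msg.cmd is Cmd.STOP:
        return "stopped"
    if msg.cmd is Cmd.SET_RATE:
        if not isinstance(msg.data, (int, float)):
            raise TypeError("SET_RATE expects a numeric payload")
        return f"rate set to {float(msg.data)} Hz"
    raise ValueError(f"unknown command: {msg.cmd}")


print(handle(Message(Cmd.START)))
print(handle(Message(Cmd.SET_RATE, 10)))
```

The payload check in the SET_RATE branch corresponds to the point where a receiver would convert the Variant back to a concrete type.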

For a new 24/7 RT app I am wondering if I could run into problems using variants. Do you generally stay away from Variants in mid-size to large 24/7 RT apps?

Has anyone had bad experiences with <vilib>/Utility/VariantDataType/GetTypeInfo.vi in RT?

-

Hi,

I see similar speedups after removing dynamic dispatch.

Another strange thing is that as soon as I open the project in LV 8.5 on Mac OS 10.5.2 one CPU core is 100% busy. No VI open just the project window. No change after recompiling, doing another "Save All", LV restart. Just opening the project automatically marks it as edited (Reason: "An attribute of the project was changed.")

Enough playing with LV and hanging out at LAVA for me tonight :ninja:

I'll try it on WinXP at the office later this week.

-

Hi,

I tested IVision from Hytekautomation, which uses OpenCV, some time ago. It looked solid, but the planned Vision project never materialized, so I haven't used it in a real, release-quality product yet.

-

QUOTE(TEERANit @ Dec 9 2007, 09:46 AM)

NI Asia/Pacific: http://digital.ni.com/worldwide/singapore.nsf/main?readform

Greetings from Germany

Götz

-

QUOTE(Tomi Maila @ Nov 18 2007, 09:17 PM)

Yes I meant the ever ongoing anime loop.

Thanks

-

QUOTE(neB @ Nov 17 2007, 04:34 PM)

I usually try this classic (http://www.sinn-frei.com/zensiert/loituma.swf) to replace these kinds of songs/sounds.

Btw, is there an English expression for the German word "Ohrwurm"?

Good luck with it

-

QUOTE(paololt @ Nov 17 2007, 06:09 PM)

ok..i have realized the communication between two pc; i send a string from one of them and i receive the same string.

Hi,

out of curiosity, how do you communicate between your PCs?

ICMP, TCP or UDP, and what kind of link layer is involved in your setup? I am just wondering whether you can find any bit errors using standard hardware. If I recall correctly, several integrity checks are present when using standard hardware and network stacks (a checksum in TCP for sure and, I guess, a CRC on Ethernet frames too).
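To illustrate why such checks make bit errors hard to observe at the application level, here is a small Python sketch. It is only an illustration, not a model of a real network stack: it uses `zlib.crc32`, which is based on the same CRC-32 generator polynomial as the Ethernet frame check sequence, and the payload and helper names are made up for the example. A frame whose check value no longer matches would normally be dropped before the string ever reaches the receiving program.

```python
import zlib


def flip_bit(payload: bytes, bit_index: int) -> bytes:
    """Return a copy of payload with exactly one bit inverted."""
    corrupted = bytearray(payload)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)


def is_intact(payload: bytes, expected_crc: int) -> bool:
    """Recompute the CRC-32 and compare it with the value sent along."""
    return zlib.crc32(payload) == expected_crc


# Hypothetical payload; the sender computes the check value once.
original = b"test string sent between two PCs"
crc = zlib.crc32(original)

# Simulate a single bit error on the wire.
damaged = flip_bit(original, 13)

print(is_intact(original, crc))  # True: unmodified data passes the check
print(is_intact(damaged, crc))   # False: CRC-32 detects any single-bit error
```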

-

QUOTE(DaveKielpinski @ Nov 14 2007, 10:35 AM)

As far as I know (last time I touched LV-FPGA was in 7.1.1), compiling subVIs of an FPGA main VI separately doesn't help, since a separately compiled subVI would always produce a bitstream for the whole FPGA. The same intermediate HDL code from the subVI will normally end up different when it is implicitly compiled into a bitstream as part of the main VI, because timing and size constraints require other or more optimizations at a low level. I don't think there is a way to incrementally compile HDL code below RTL (http://en.wikipedia.org/wiki/Register_transfer_level).

Feel free to correct me

LabVIEW 8.5.1 on XP SP3

in LabVIEW General

Posted

QUOTE (ygauthier @ May 7 2008, 03:44 PM)

Hi,

I installed SP3 this morning. Everything went ok, LV 8.5.1 is running, but I haven't tested any DAQmx stuff. The update took some time (30min?), which gave me some nice time for a little code review (nitpicking) with a coworker :laugh:

just my 5ct