Everything posted by Steen Schmidt

Posts: 156, Days Won: 8

-

In the presentation I saw, Jack didn't explain what happened with the forked event reg refnum, merely that it displayed odd behavior and thus was discouraged ;-) Not to take anything away from Jack at all - he's a top guy, and I'd attend any presentation he hosts even when I know the subject inside out - I just want to point out that there is a difference between what he disclosed and what we're discussing now. Now I hope he hasn't updated his presentation to include this info, in which case I'm just embarrassing myself :-) /Steen

-

I see, really nasty behavior (I don't think I investigated this before LV 2010-ish). And that potentially changing behavior is the danger - we might as well treat it as definitely going to change. So there's no need to speculate about (mis)using a forked event reg refnum unless it becomes a supported (or at least stable and documented) feature. /Steen

-

I agree with you Yair, that forking an event reg refnum is worse than multiple ES'es with UI events in a VI. After all I do the latter occasionally, but never the former. Forking the event reg refnum shows very consistent behaviour in my eyes though. What happens is this: whenever an event structure is ready to process one more event from the forked refnum, it gets the next event waiting in the queue - but it doesn't dequeue it, it only peeks it. Others are at liberty to peek this event as well. Whenever anyone *finishes* processing an event from that queue, that event is finally dropped from the queue. This means that a slowly iterating event handler will see fewer than all events from the forked refnum, while a very fast one might see all of them. Many events will typically be handled by more than one of the forks.

A type of system that could benefit from this behaviour is one with multiple event handlers of highly fluctuating performance - for instance a number of event handlers that each run on their own CPU, but also share that CPU with other fluctuating processes. Then you get a natural load-balancing behaviour. The only caveat is that your system must tolerate the same event occasionally being handled multiple times (systems that tolerate this will have a fast way to identify and discard already processed events). That is the observed behaviour of forking the event reg refnum, but as it is not officially supported, I don't use it either. I must do it the hard way and make a scheduler myself. /Steen
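The peek-until-someone-finishes behaviour described above can be mimicked with a small discrete-time simulation. This is a sketch of the observed semantics only, not of how LabVIEW implements event queues; `Handler`, `simulate` and the per-event `duration` in ticks are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Handler:
    """Hypothetical model of one event structure reading a forked event reg refnum."""
    name: str
    duration: int                  # ticks this handler needs per event
    current: Optional[int] = None  # event being processed (peeked, not dequeued)
    busy_until: int = 0
    seen: List[int] = field(default_factory=list)


def simulate(events: List[int], handlers: List[Handler], max_ticks: int = 10_000) -> List[Handler]:
    queue = list(events)  # the single shared queue behind the forked refnum
    for t in range(max_ticks):
        # A handler that finishes drops its event from the shared queue,
        # unless another handler already finished (and dropped) it.
        for h in handlers:
            if h.current is not None and t >= h.busy_until:
                if h.current in queue:
                    queue.remove(h.current)
                h.current = None
        # An idle handler peeks the head of the queue without removing it.
        for h in handlers:
            if h.current is None and queue:
                h.current = queue[0]
                h.busy_until = t + h.duration
                h.seen.append(h.current)
        if not queue and all(h.current is None for h in handlers):
            break
    return handlers


if __name__ == "__main__":
    fast, slow = Handler("fast", duration=1), Handler("slow", duration=3)
    simulate(list(range(6)), [fast, slow])
    print(fast.seen)  # [0, 1, 2, 3, 4, 5]
    print(slow.seen)  # [0, 3]
```

With one fast and one slow handler, the fast one sees every event, the slow one sees only some, and events 0 and 3 are processed by both, which is exactly why such a system has to tolerate duplicate handling.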

-

I didn't mean that the VI registering for UI events at load time was the same as forking an event reg refnum. I meant it was a similarly bad idea. Actually, forking the event reg refnum could probably be used for multiplexing event handling in some systems. It's just that only very particular systems would benefit from it. And that is usually not the use case the programmer is trying to implement when forking an event reg refnum ;-) /Steen

-

Sorry about reviving such an old thread, but I noticed David Boyd never got an answer to his question about where the recommendation to have only a single Event Structure on a block diagram came from. It came from the fact that LabVIEW registers the static UI events in a VI's ES'es at VI load time. This means that if you have the same UI event configured in two event structures on a BD, those two ES'es will basically register the same event refnum, even if these two event structures do not run at the same time. Same as forking the event registration refnum - a very bad idea. For user events there's no problem with multiple ES'es per VI (bad design perhaps). For UI events, be very careful not to trigger an event in an inactive ES (i.e. don't trigger an event in an ES that sits in a currently inactive part of your code)! I guess this is the bug-hole AQ and NI want to get rid of?

Anyway, while I'm here: I sometimes use multiple ES'es on a single BD. Rarely for user events (those typically get put into subVIs, since they can be), but actually for UI events (and I am really careful doing this, and it's not very often). It happens on those occasions where I have a really simple state machine that steps through a tab strip, for instance (more or less one state per tab). In that case it's convenient to register each tab's controls in their own ES, and activate each of those ES'es in their own state of the state machine (usually the ES'es sit in cases of a case structure with a loop around it, you know?). What must not happen in this configuration is statically registering for a UI event that can be triggered from several tabs. You can't (manually) trigger a Value Change event on a control located on another tab than the one you're looking at right now, but you would be able to trigger a Key Down event, for instance... Just saying, be careful. Cheers, Steen
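A toy model may make the tab-strip hazard easier to see. It assumes, as the post describes, that every event structure registers its static UI events when the VI loads, and additionally assumes that each ES keeps its own queue that fills up whether or not its case is currently executing. `VIModel`, `fire`, `run_state` and the event names are hypothetical; this is not LabVIEW's internal mechanism.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Set, Tuple

UIEvent = Tuple[str, str]  # (source, event type), e.g. ("VI", "Key Down")


class VIModel:
    """Toy model of a VI whose event structures register static UI events at load time."""

    def __init__(self, structures: Dict[str, Set[UIEvent]]) -> None:
        self.queues: Dict[str, Deque[UIEvent]] = {name: deque() for name in structures}
        self.subscribers: Dict[UIEvent, List[str]] = defaultdict(list)
        for name, events in structures.items():
            for ev in events:
                self.subscribers[ev].append(name)  # registered at load, not when the case runs

    def fire(self, source: str, event_type: str) -> None:
        # Assumption: the event is queued for every ES registered for it,
        # including an ES sitting in a currently inactive case.
        for name in self.subscribers[(source, event_type)]:
            self.queues[name].append((source, event_type))

    def run_state(self, active: str) -> List[UIEvent]:
        # Only the ES of the active state consumes its queue; the others keep their backlog.
        handled = list(self.queues[active])
        self.queues[active].clear()
        return handled


if __name__ == "__main__":
    vi = VIModel({
        "tab1_es": {("Next", "Value Change"), ("VI", "Key Down")},
        "tab2_es": {("Back", "Value Change"), ("VI", "Key Down")},
    })
    vi.fire("VI", "Key Down")         # key pressed while tab 1 is shown
    print(vi.run_state("tab1_es"))    # [('VI', 'Key Down')]
    print(len(vi.queues["tab2_es"]))  # 1: a stale Key Down waits for tab 2's ES
```

The per-tab Value Change registrations stay harmless because those controls can only be operated on their own tab; the shared Key Down registration is what leaves a stale event behind for the inactive structure.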

-

LAVA BBQ 2014 Registration and Call for Door Prizes

Steen Schmidt replied to JackDunaway's topic in NIWeek

Hello guys, I'm excited to attend the Lava BBQ this year :-) See you in Austin! Cheers, Steen

-

LabVIEW 2013 Favorite features and improvements

Steen Schmidt replied to John Lokanis's topic in LabVIEW General

Both LabVIEW 2012 and 2013 have crashed numerous times on me, 2012 in fact much more than 2010... In 2012 we tracked it down to compiler optimizations set above 7, combined with using inlining and recursion together (I have a demo project illustrating this). A CAR has been filed. A warning dialog still pops up almost every time I quit LV 2012. At first I thought the above wasn't present in 2013, as I can easily leave compiler optimizations at 10 (as I prefer it). But then I started experiencing crashes with 2013 as well, although not as severe as with 2012. I think it still has something to do with inlining, but it's much less of a problem than in 2012. I haven't filed a CAR on 2013 yet. /Steen

-

Yes, we realized the pain of proxy methods about 2 minutes after we sat down and did a couple of different implementations of composition. That pain stems mainly from the fact that we are forced to create explicit accessor methods for classes; there's no way in LVOOP to specify public or friend scope for class data. I'm contemplating some ideas for class data access à la Unbundle and Bundle directly on the object wire instead of the current explicit accessors. This is just off the top of my head and hasn't been thought through at all yet, so it may not be desirable at all when I give it some more thought. I'll get back to you on the traits and mixins topic later; right now I'll go cook some dinner. Cheers, Steen
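To show where the proxy-method pain comes from, here is a Python sketch of composition in the spirit of the LVOOP situation: a VI *has* a file component and a front-panel component instead of inheriting from both, and every member that should be visible on the VI itself needs its own hand-written forwarder. The class and method names are hypothetical. Python could generate such forwarders dynamically (for example with `__getattr__`), but they are written out explicitly here because LVOOP offers no equivalent shortcut.

```python
class FileInfo:
    """Generic file traits (hypothetical component)."""

    def __init__(self, path: str) -> None:
        self._path = path
        self._write_protected = False

    def path(self) -> str:
        return self._path

    def is_write_protected(self) -> bool:
        return self._write_protected

    def set_write_protected(self, value: bool) -> None:
        self._write_protected = value


class FrontPanel:
    """Front-panel traits (hypothetical component)."""

    def __init__(self) -> None:
        self._is_fp_style_ok = False

    def is_fp_style_ok(self) -> bool:
        return self._is_fp_style_ok


class VI:
    """A VI composed of a FileInfo and a FrontPanel instead of inheriting from both."""

    def __init__(self, path: str) -> None:
        self._file = FileInfo(path)
        self._fp = FrontPanel()

    # Proxy methods: one forwarder per component member exposed on VI.
    # This boilerplate grows with every member and every component.
    def path(self) -> str:
        return self._file.path()

    def is_write_protected(self) -> bool:
        return self._file.is_write_protected()

    def set_write_protected(self, value: bool) -> None:
        self._file.set_write_protected(value)

    def is_fp_style_ok(self) -> bool:
        return self._fp.is_fp_style_ok()
```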

-

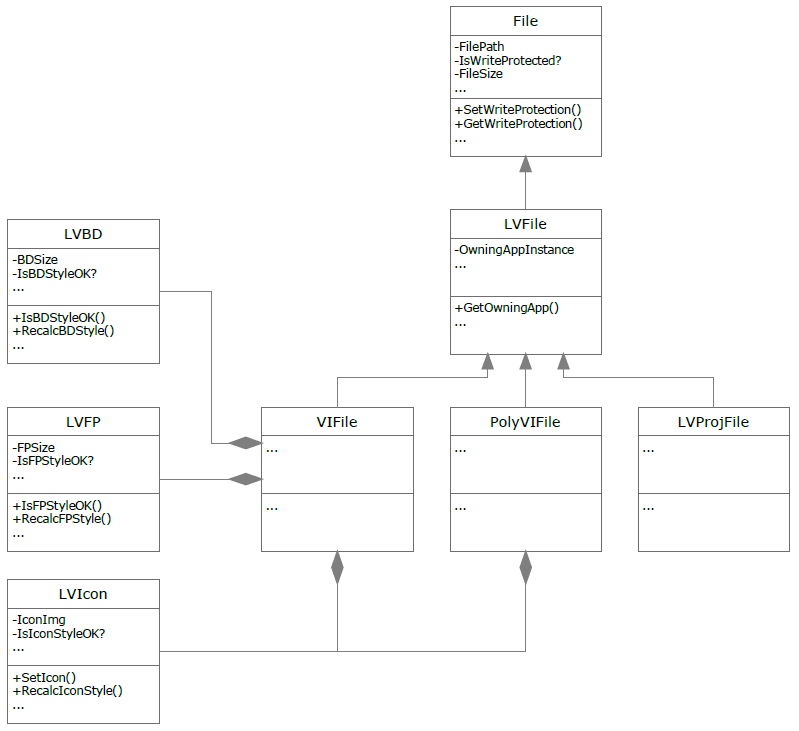

Composition would be a way for us to maintain proper abstraction while living without multiple inheritance - thanks for reminding me of the composition vs. inheritance debate ;-)

PaulL, if I understand you correctly you're asking me what I would use this particular File class for? Let me explain in short - here's a fraction of the class diagram that we've been discussing here (illustrating the composition solution):

Our use case is an application ("Batch Editor") that will allow us to do a number of things on a collection of files:
- You can add any number of LabVIEW files to your current workset in the Batch Editor.
- You can then select one or more files within this workset to perform operations on.
- Examples of operations:
  - Changing the write protection of all the files in your current selection within the workset.
  - Setting or clearing "Allow Debugging" in bulk.
  - Sorting your view to see which files in your workset have reentrancy enabled.
  - Checking whether all your files follow a predefined style (BD, FP, Icon, VI Description etc.), and to some extent correcting bad style in bulk (FP for instance). The style would (probably) be a loadable XML file defining such things as label position, caption contents, control alignments etc. Define your own style for your company, for your current project etc.

OO will be a good strategy for modularizing many parts of this Batch Editor app. Makes sense? /Steen
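The workset operations listed above (add files, select a subset, apply one operation in bulk, sort the view) can be sketched as follows. This is only an illustration of the described workflow under assumed names; `LVFileInfo`, `Workset` and their fields are hypothetical and not part of the Batch Editor or any LabVIEW API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LVFileInfo:
    """Hypothetical record for one LabVIEW file in a Batch Editor workset."""
    path: str
    write_protected: bool = False
    allow_debugging: bool = True
    reentrant: bool = False


@dataclass
class Workset:
    files: List[LVFileInfo] = field(default_factory=list)
    selection: List[LVFileInfo] = field(default_factory=list)

    def add(self, *files: LVFileInfo) -> None:
        self.files.extend(files)

    def select(self, predicate: Callable[[LVFileInfo], bool]) -> None:
        # Choose the subset of the workset to operate on.
        self.selection = [f for f in self.files if predicate(f)]

    def apply(self, operation: Callable[[LVFileInfo], None]) -> None:
        # Run one bulk operation on every file in the current selection.
        for f in self.selection:
            operation(f)

    def by_reentrancy(self) -> List[LVFileInfo]:
        # Reentrant files first; sorted() is stable, so ties keep workset order.
        return sorted(self.files, key=lambda f: not f.reentrant)
```

For example, selecting with `lambda f: f.path.endswith(".vi")` and applying `lambda f: setattr(f, "allow_debugging", False)` clears "Allow Debugging" on the VIs only, leaving an `.lvproj` in the workset untouched.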

-

Currently only a single level of abstraction, where File.lvclass is understood to be "LabVIEW files", not xls, txt, ini etc. Had LabVIEW supported multiple inheritance, I could have made several superclasses, each defining its own interface, for instance:
- File.lvclass (responsible for all generic file traits like disk write protection, file path etc.)
- LVFile.lvclass (inherits from File, and adds generic file attributes that are common to LV files only, like an owning LV instance if the file is in memory, that sort of thing)
- LVBD.lvclass (hosts everything to do with the block diagram)
- LVFP.lvclass (hosts everything to do with the front panel)
- LVIcon.lvclass
- LVProtection.lvclass
- LVExecution.lvclass
- ...

Then VI.lvclass would inherit from all of LVFile, LVBD, LVFP, LVIcon, LVProtection, and LVExecution. PolyVI.lvclass would inherit from the same, except for LVExecution, while LVProj.lvclass would only inherit from LVFile. But we only have single inheritance, so the above (most correct) encapsulation is not possible in LabVIEW. Within this single chain of inheritance I must choose where to put the IsFPStyleOK? data field, for instance. If I put it in LVFile, then LVProj is also forced to lug around IsFPStyleOK? even though it has no idea of a front panel. If on the other hand I move IsFPStyleOK? down into VI.lvclass and VIT.lvclass (and the others with a front panel), then I can't use dynamic dispatch for it (since VI and VIT have no common parent below LVFile). I could inject an abstraction layer between LVFile and VI/VIT/FacadeVI..., for instance LVFP.lvclass, which would own an IsFPStyleOK? data field. Then the inheritance tree for VI would look like this: File <- LVFile <- LVFP <- VI. But again, without multiple inheritance I can never flesh out single abstraction paths to each LabVIEW file type without overlap.
Thus my question: should I start pushing class data members and dynamic dispatch methods upwards to the first common parent (and not mind breaking abstraction), or should I stick with strict abstraction and then live without much dynamic dispatch, having to duplicate data fields across several same-level classes? I love clean abstraction; I think it's one of the really great things about OOP. Murky abstraction - mixing in stuff that doesn't really belong in that class - is a huge code smell, a stinker really. I wish for multiple inheritance in LabVIEW. I'm glad we're allowed to use components from more than a single LVLIB inside a VI. /Steen
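Since LabVIEW has no multiple inheritance, the hierarchy wished for above can only be sketched in a language that has it. The Python version below mirrors the class list from the post, with the LVBD/LVFP/LVIcon/LVProtection/LVExecution interfaces written as mixins. It is an illustration of the intended design, not something that can be built in LVOOP; `fp_style_ok` and the attribute names are hypothetical.

```python
class File:
    """Generic file traits (disk write protection, file path, ...)."""

    def __init__(self, path: str = "") -> None:
        self.path = path
        self.write_protected = False


class LVFile(File):
    """Traits common to LabVIEW files only (e.g. an owning LV instance)."""


class LVBD:
    """Mixin: everything to do with the block diagram."""


class LVFP:
    """Mixin: everything to do with the front panel, incl. IsFPStyleOK?."""
    is_fp_style_ok: bool = False


class LVIcon:
    """Mixin: icon traits."""


class LVProtection:
    """Mixin: protection traits."""


class LVExecution:
    """Mixin: execution traits."""


class VI(LVFile, LVBD, LVFP, LVIcon, LVProtection, LVExecution):
    pass


class PolyVI(LVFile, LVBD, LVFP, LVIcon, LVProtection):  # as VI, minus LVExecution
    pass


class LVProj(LVFile):  # no front panel, so no IsFPStyleOK? to lug around
    pass


def fp_style_ok(f: LVFP) -> bool:
    # Works for anything with a front panel; a type checker would reject an LVProj here.
    return f.is_fp_style_ok
```

With this layout IsFPStyleOK? lives in exactly one place, VI and PolyVI share it without a common single-inheritance parent, and LVProj never sees it, which is the clean abstraction the post asks for.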

-

Hi,

Here comes a rather basic question, but one I'm always struggling with since you can often argue both ways. Hopefully some of you have a few nuggets to share here...

1) A parent class should have no knowledge of any child class' concrete type, i.e. only data and methods that are inherently common to all descendants of that parent should be defined by it.
2) For dynamic dispatching to work, the parent class must define virtual methods for the dynamic dispatch overrides to work from.

Those two rules often contradict each other. Methods vetted against 1) will often eliminate the possibility of 2).

A specific example: currently at GPower we're working on a set of classes that enables us to do a lot of stuff on all the different LabVIEW files. So we have a parent file.lvclass and then a collection of specific children that inherit from "file", for instance vi.lvclass, lvproj.lvclass, ctl.lvclass, facadevi.lvclass etc.

A) Generic stuff that has to do with any file obviously goes into file.lvclass data (together with data member access methods for each). For instance IsWriteprotected?, FileSize, FilePath and such. A FileRefnum should probably go into each child class to enable us to have it most specific.

B) Then there are data elements that definitely have to do with each concrete file type and thus should be in each child class' private data, such as AllowsDebugging?, IsFPStyleOK?, Icon etc. - as those are only valid for a subset of file types.

C) Now it gets a bit more murky: if we define data elements in file that each child can use to flag whether it has this or that feature (like HasIcon? or SupportsProtection?), then you can ask each and every file type whether it supports that before calling a method to work on it. For the subset of file types that support a given feature (those that have an icon, for instance) it'd be great to have dynamic dispatching, though.
But for that to work, file.lvclass would have to implement virtual methods like GetIcon() and SetIcon() (where the latter maybe recalculates the IsIconStyleOK? data field, for instance). But implementing these (numerous, it turns out) virtual methods in the parent class suddenly riddles the parent class with knowledge and nomenclature that is very specific to different subsets of descendant file types.

I always vet the parent against each piece of information I'd like to put in there by asking myself something like this: Does my generic file know anything about disk write protection? Yes, all files deal with that, so it can safely go into file.lvclass. Does my generic file know anything about block diagrams? No, only a handful of LabVIEW file types know about block diagrams, so I can't put it into file.lvclass. But the latter forces me to case out the different file types in my code, so I can call the different specific methods to work on block diagrams for those file type objects that support this. Hence, if I want to be able to just wire any file type object into a "block diagram worker" method, I need dynamic dispatching, and that only works if my parent has a virtual method for me to override with meaningful code in a few child classes...

How do you go about striking this balance: on one hand abstracting the parent from the concrete types, and on the other hand implementing the necessary dynamic dispatch virtual methods in the parent? Regards, Steen

I must add that I'm currently leaning towards the purer approach of not mixing abstract and concrete. Thus I tend to omit dynamic dispatching except for the very generic cases. I only allow the "do I support this feature" flags into the parent data, and then I implement static dispatch methods for each concrete type - even though these static dispatch methods will then often be quite similar, but that is solved with traditional LabVIEW modularization and code reuse (like subVIs, typedefs and such).
It works well, but I feel I don't get the full benefit of dynamic dispatching. But that is what I'd do in C++... /Steen
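The approach the post leans towards (only "do I support this feature" flags in the parent, static per-type methods, and a caller that cases out the concrete types) might look like this in Python. All names are hypothetical; `set_icon` plays the role of the case structure that dynamic dispatch would otherwise replace.

```python
from dataclasses import dataclass


@dataclass
class File:
    """Parent: generic data plus 'do I support this feature?' flags only."""
    path: str
    write_protected: bool = False
    has_icon: bool = False
    supports_protection: bool = False


@dataclass
class VIFile(File):
    has_icon: bool = True
    supports_protection: bool = True
    icon: bytes = b""


@dataclass
class CtlFile(File):
    has_icon: bool = True
    icon: bytes = b""


@dataclass
class LVProjFile(File):
    pass  # no icon, no protection


# Static dispatch: one method per concrete type. Similar bodies would be
# factored into shared helpers (the equivalent of subVIs).
def set_icon_vi(f: VIFile, icon: bytes) -> None:
    f.icon = icon


def set_icon_ctl(f: CtlFile, icon: bytes) -> None:
    f.icon = icon


def set_icon(f: File, icon: bytes) -> bool:
    """Caller-side 'case structure': check the flag, then pick the concrete method."""
    if not f.has_icon:
        return False
    if isinstance(f, VIFile):
        set_icon_vi(f, icon)
    elif isinstance(f, CtlFile):
        set_icon_ctl(f, icon)
    else:
        raise TypeError(f"{type(f).__name__} has an icon but no set_icon method")
    return True
```

The parent never learns what an icon is, at the price of the explicit type switch in `set_icon`; with a virtual `SetIcon` in the parent, that switch would disappear but the parent would carry icon nomenclature.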

-

And you are charging money for your application that cracks LabVIEW passwords? Funny... /Steen

-

Any experience on running LV on 2012 or 2008R2 Server

Steen Schmidt replied to Anders Björk's topic in LabVIEW General

Ditto to what Jack says. There are almost no compatibility issues between Windows Server and LabVIEW anymore, it seems, except for one: a few times on 32-bit 2008R2 we've had to disable PAE on the server to get the LabVIEW application to execute without errors. TestStand can be trickier, if you ever want to use that on Windows Server. But development is not possible over a remote connection. I think that's universal unless you're a very peculiar developer ;-) /Steen

-

Ok, so there are many corner cases, which make it quite a task to build such a compatibility matrix, not to mention maintaining it. What I'd most like to do is make a project (scripting, very easy), configure it with the target in question, add the subject VI to that target and examine the syntax checker's output. The first hurdle is that it takes a long while to execute all this for an exhaustive number of target/configuration combinations; at least the scripting part takes one second per project + config, often 2-3 seconds. Considering this conformity verification/rectifier tool will typically run on 50 to several hundred files at a time, it all adds up. I can live with this, and there are ways to optimize the execution time and so on. But the second obstacle is impossible to get past: it all gets blocked by the fact that I can't get to the output of said syntax checker. I can invoke the 'Error list' manually by pressing the VI's broken Run arrow, and it'll tell me stuff like "Diagram Constant: Type not supported in current target". NI has been asked on several occasions to publish an entry point that lets us access this info programmatically, but it's not possible yet (obviously for very good reasons, I don't doubt or contest that). My hope when submitting this post to LAVA was that someone knew a backdoor that'd let me run this syntax checker on my VI and evaluate its output. So far it seems the options are between an indefensible amount of work and an impossible task. In that case I must opt for lesser functionality in my verification tool, which isn't completely shocking to me. But thanks for your time anyway. Cheers, Steen
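A quick estimate shows why the scripted-project approach "adds up". It assumes one scripted project per file per target configuration, uses the post's 1 s and 2-3 s per project + config, reads "several hundred" as 300 files, and assumes 4 target configurations; those last two figures are illustrative, not from the post.

```python
def scripted_check_seconds(n_files: int, n_configs: int, seconds_per_project: float) -> float:
    """Total time if each file gets its own scripted project per target configuration."""
    return n_files * n_configs * seconds_per_project


if __name__ == "__main__":
    low = scripted_check_seconds(50, 4, 1.0)     # 200.0 s
    high = scripted_check_seconds(300, 4, 2.5)   # 3000.0 s, i.e. 50 minutes
    print(low, high / 60)
```

Even before the syntax checker's output becomes accessible, a few hundred files can mean close to an hour of scripting per verification run.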

-

You should always be suspicious when you think "This is easy" about one of my questions. In this case you've misunderstood my objective. You're right that it's easy to determine at runtime which target type you execute under, but my objective is to determine whether a given VI is compatible with each target type. So I want to make some G code that takes as input a path to VI A, and then outputs a Boolean that is True if VI A is compatible with FPGA, for instance. VI A never gets run, and VI A may not even be runnable at the moment it's checked. The latter case, where VI A is still under construction, makes it insufficient just to add VI A to an FPGA target in a scripted lvproj and check for runnability, for instance - VI A may be broken due to incompatibility with the given target, or it may be broken because it's still under development. Therefore I seek a different method for determining target compatibility; one way would be to evaluate each and every BD object against a positive (or negative, whichever is fastest) list for each target. In that light a wire stump will not disqualify VI A from FPGA compatibility, while a DBL constant will. /Steen
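The negative-list idea can be sketched as a recursive walk over a simplified diagram tree. `BDObject`, `DENY` and the object kind strings are hypothetical stand-ins, not LabVIEW scripting classes, and the FPGA list is seeded only with the DBL constant example from the post; a real tool would need a maintained list per target (or a positive list instead).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class BDObject:
    """Hypothetical, simplified block-diagram object (not a LabVIEW API)."""
    kind: str
    children: List["BDObject"] = field(default_factory=list)  # e.g. contents of a structure


# Hypothetical per-target negative lists, seeded only with the example from the post.
DENY: Dict[str, Set[str]] = {
    "Desktop": set(),
    "Real-Time": set(),
    "FPGA": {"DBL Constant"},
}


def incompatible_objects(obj: BDObject, target: str) -> List[str]:
    """Recursively collect the kinds of objects that are on the target's negative list."""
    found = [obj.kind] if obj.kind in DENY[target] else []
    for child in obj.children:
        found.extend(incompatible_objects(child, target))
    return found


def is_incompatible(diagram: BDObject, target: str) -> bool:
    # Objects that merely break an unfinished VI (like a wire stump) are not on
    # the list, so work in progress is not flagged as target-incompatible.
    return bool(incompatible_objects(diagram, target))
```

Unlike the scripted-project test, this answers "is it incompatible?" rather than "is it runnable?", so a broken VI under construction still gets a meaningful result.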

-

There are a number of other things too, for instance everything in the Real-Time palette, stuff in conditional disable structures that needs consideration on one target but not the other etc. But anyway, I don't actually think it's that big a deal - I'll see if I can come up with some recursive way to check compatibility. Whether a VI is optimized for Real-Time is another matter - that will be quite hard to determine. Almost every VI will fail such a test if it is in any way rigorous. Just completely avoiding dynamic malloc is almost impossible, for instance. I think it's more realistic to classify code as Real-Time compatible by aiming for the correct tradeoff that'll let the specific target hardware perform deterministically. Along the lines of 'on powerful hardware you can allow some dynamic malloc, on light hardware you must eliminate dynamic malloc completely' etc. This will be next to impossible to weigh with the current tools, especially with the many ways in LabVIEW to prioritize code execution. And then emerging technologies (like deterministic dynamic malloc) skew the rules of thumb all the time. I can't use VI Analyzer, as that is not a free toolset, and I'm limited to plain vanilla LabVIEW. I was hoping for an answer along the lines of "the compiler internally calls this method to determine Real-Time compatibility". No dice. Thanks for your feedback anyway. /Steen

-

I don't mind you saying so. If the questions are these, the project approach makes perfect sense:
a) Is this VI runnable on Desktop?
b) Is this VI runnable on Real-Time?
c) Is this VI runnable on FPGA?
In my use case, however, the questions are these:
a) Is this VI incompatible with Desktop?
b) Is this VI incompatible with Real-Time?
c) Is this VI incompatible with FPGA?
In the latter case the project target test may be inconclusive for a VI under construction (and thus possibly broken). There's unfortunately no way to get that information, and it has been requested of NI on several occasions. Cheers, Steen

-

Yes, that's perfectly possible, but it doesn't solve anything - I ruled this option out from the very beginning. By looking for a broken VI on a specific target type you can't determine incompatibility with that target. The VI can be broken for any number of reasons beyond incompatibility with Real-Time or FPGA. There isn't any way but brute-force object traversal looking for target-incompatible objects... /Steen

-

Thanks Michael, I hadn't noticed that method. I work mainly in LV2010 to maintain broader version support for my toolsets, and that method appeared in LV2011. I was going to wait for LV 2012 SP1 before moving on from 2010, but maybe I should jump to 2011 before that, even though 2012 SP1 is just around the corner... pondering... Anyway, that's an interesting method, and it could maybe help in some way. I'm not yet sure how much functionality (VI hierarchy, ownership and family ties etc.) is retained when loading a VI this way, but it looks promising. I'll delve into it. Thanks! /Steen

-

I'm looking for a function like this as well - any news? I want to open references to a large number of VIs, whether they are broken or not, but without waiting for LabVIEW to search for missing dependencies. I don't need the VIs to run, even if they're not broken, only to be able to read and write some properties... /Steen

-

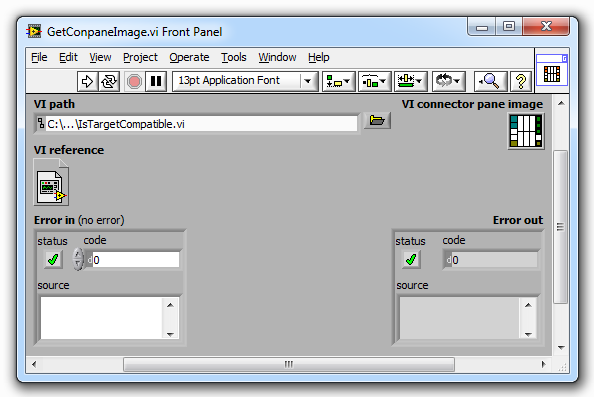

Get connector pane 32x32 image - looking for better idea

Steen Schmidt replied to Steen Schmidt's topic in LabVIEW General

I'll be... I just tried it again and now it works? Well, must've been gremlins then. Or perhaps a Schrödinger's cat problem... /Steen

-

Get connector pane 32x32 image - looking for better idea

Steen Schmidt replied to Steen Schmidt's topic in LabVIEW General

I tried that; it didn't change anything. /Steen

-

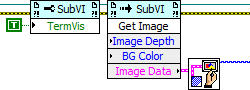

Get connector pane 32x32 image - looking for better idea

Steen Schmidt replied to Steen Schmidt's topic in LabVIEW General

Thanks to everyone in this thread, especially you Darren, who had a VI on your shelf showing that I should just have done what Yair suggested. I've basically reworked Darren's VI into one that creates a 100% correct conpane image. Here's the FP illustrating an example (the VI itself is attached to this post if you can use it): So that's probably the fastest way in G to get the conpane image. I discovered a funny thing while coding this: the Get Image method of the SubVI class returns slightly wrong terminal colors for the conpane image! For instance "Boolean green" is RGB 0,127,0 on the BD, but the image extracted with the Get Image method contains a slightly darker green, namely 0,102,0 instead. So an unexpected side effect of this small exercise was even more correct conpane images. Cheers, Steen

GetConpaneImage.vi
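If you only have an image from Get Image, one possible correction is a plain colour remap over the pixel grid. This is a hedged sketch and not necessarily what GetConpaneImage.vi does. The only mapping the post reports is Boolean green (0,102,0 should be 0,127,0); any other terminal colours would have to be measured the same way. `COLOR_FIXES` and `fix_conpane_colors` are hypothetical names, and the remap would also recolour any pixel that legitimately uses 0,102,0.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Only the Boolean terminal colour is reported in the post.
COLOR_FIXES: Dict[RGB, RGB] = {
    (0, 102, 0): (0, 127, 0),  # Get Image green -> block-diagram Boolean green
}


def fix_conpane_colors(pixels: List[List[RGB]], fixes: Dict[RGB, RGB] = COLOR_FIXES) -> List[List[RGB]]:
    """Return a copy of a pixel grid (e.g. 32x32) with known wrong colours replaced."""
    return [[fixes.get(p, p) for p in row] for row in pixels]
```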