infinitenothing

Members

Posts: 372

Days Won: 16

Everything posted by infinitenothing

-

Porting LabVIEW code to another language

infinitenothing replied to infinitenothing's topic in LabVIEW General

FYI, in my particular case there's no UI. It's all headless RT code targeting a ZYNQ [sb,c]RIO. There are a few TCP loops, some logic for homing motors, some RS232 commands out, a bit of data processing/analysis, etc. I can't go out to a DLL because my main roadblock is the run time engine. If I can get the run time engine working then I'll just keep everything as LabVIEW. Speaking of data flow, do you think some languages are better targets than others? The options I'm considering are Java and Python, mostly because I want memory management to stay out of my way as much as possible. Re: black pill. There are many other things I'd prefer to spend my time on.

-

Why do you ask? You don't have to worry about buffer overruns if that's the sort of thing you're concerned with.

-

Has anyone gone through the experience of rewriting their LabVIEW code in a different programming language? I'm wondering if it was a total rewrite or if you went line by line translating it to the new language. After the effort was over, was the end result still buggy? Did it take a while to get it back to its former reliability? For people who haven't gone through that: what's your game plan if the time comes when you have to move your code over? Related thread explaining some of the context of this question:

-

Unfortunately, we tied our cart to the sbRIO. It's the controller for our flagship product and there would be a serious hit to revenue without it.

-

Regarding the supply chain, we found and ordered ~50 boards that are very similar to a 9651 and https://krtkl.com/snickerdoodle/

The FPGA interface doesn't look overly complicated. We can get most of that from Xilinx/Vivado since both the ARM and FPGA are on the ZYNQ. Maybe I'll start a new thread to see if anyone has more experience in Vivado. They were a little spammy but maybe the mangotree folk could point me in the right direction. It seems like a bit more of a legitimate path since NI has the VHDL export tool.

@CJC IN six person @MT_Andy

Regarding "If we would go that route I do not expect to reuse much of the existing LabVIEW code in any way": that's rough to hear. If we could keep the LabVIEW code, that would:

1) Make it so that there's a chance we move back to the sbRIO after the supply chain straightens out
2) Help sell NI as a good product for R&D and prototyping, as the code can move over more easily to the "final" product

-

As I'm sure many of you know, there's an issue sourcing any NI products with FPGAs on them. Lead times are... out there. Anyone who can't tolerate those long lead times is probably thinking about a plan B. I'm wondering if anyone has gone through the process of designing a replacement for an NI product. Our application is written in LabVIEW and one of our biggest risks is that the run time engine isn't open source. There are so many test hours behind our LabVIEW app, but if we run into a "bug" and NI won't support it because it's on 3rd party hardware, we could really find ourselves in a bind. How much of the LabVIEW code did you reuse, or did you just start from scratch? What's the process like? Expensive? Buggy?

-

How do these FPGA XNodes work without all the abilities?

infinitenothing replied to Sparkette's topic in VI Scripting

@Darren As far as I could tell, none of those nodes deal with the hardware I/O nodes. I did find a couple of nodes in the LabVIEW 2020\resource\Framework\Providers\lveio\ and vi.lib\eio\ folders that sort of seemed to work. I still couldn't get grow to work. Here's the closest I could get: fpga script.zip

-

Read FW Version from PCB board.

infinitenothing replied to Terius96's topic in LabVIEW Community Edition

Can you give us more details on what hardware you're using? Attach a VI instead of a screenshot.

-

FPGA Vision Toolkits

infinitenothing replied to infinitenothing's topic in Machine Vision and Imaging

Yes, mostly just post processing. Comparators and other analog solutions are clever, but I'm expecting a use case that needs more than one comparator, each with a different user-defined threshold. I also have a use case of calculating things like the sum of the pixels over the whole image, which I guess could be analog, but an FPGA gives us maximum flexibility to change that up as the project evolves. I also want to take the binary images, perform combinational logic between pixels in the same location across different images, and then use particle analysis to pull out features (I mentioned Feret diameter above).
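To make that post-processing concrete, here is a rough plain-Python model of the logic (not FPGA code): several user-defined thresholds applied to one frame, a whole-image pixel sum, and a pixel-by-pixel AND across two binary images. The function names and the tiny 3x3 frames are made up for illustration.

```python
from typing import List

Frame = List[List[int]]


def threshold(frame: Frame, level: int) -> Frame:
    """Software stand-in for one comparator: 1 where pixel > level, else 0."""
    return [[1 if px > level else 0 for px in row] for row in frame]


def pixel_sum(frame: Frame) -> int:
    """Sum of every pixel in the frame."""
    return sum(sum(row) for row in frame)


def combine_and(a: Frame, b: Frame) -> Frame:
    """Combinational logic between pixels at the same location in two binary images."""
    return [[pa & pb for pa, pb in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


# Hypothetical sample data, two 3x3 frames from two different sensors.
frame_a = [[10, 200, 30], [40, 250, 60], [70, 80, 90]]
frame_b = [[0, 220, 0], [0, 10, 0], [0, 100, 0]]

# More than one "comparator", each with its own user-defined threshold.
masks = {level: threshold(frame_a, level) for level in (50, 150)}

# Pixels that exceed the threshold in both images.
both = combine_and(threshold(frame_a, 50), threshold(frame_b, 50))
```

On an FPGA each of these would presumably be a small per-pixel operation in the pixel stream; the lists here only show the data flow.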

Alternatives to NI-9208 or DAQ competitors?

infinitenothing replied to HorseBattery_StapleGuy's topic in Hardware

We have an external calibration process so calibrating counts to volts isn't important. Yes, layout, emissions, immunity, and the front end have all been fun. Communication wasn't super hard but there were a few surprises. Testing and cert are on a system basis as this is a relatively small part of a bigger system (similar to calibration). I have another system where we built a custom temperature input, an RS232 port, and an industrial output. We just didn't need that much accuracy so it was NBD. Try to spec out a similar NI system. I expect we saved a few thousand per unit and you can get a fair amount of engineering time for that. I was thinking, if you wanted to avoid laying out your own board, you could always buy an eval kit.

-

FPGA Vision Toolkits

infinitenothing replied to infinitenothing's topic in Machine Vision and Imaging

NI has a great start to a solution for me with the FPGA toolkit I mentioned. I was hoping there were some other options. Maybe I can drop a request in the third party toolkit developer exchange. You can think of my ADC solution as a bit like a frame grabber. Basically, I'm going to grab the voltages off the pixel hardware one at a time. So imagine something like 25 MSPS, which I then reshape into 60x60 arrays so I can get about 7k frames per second. Ideally, most of my processing can be done in parallel (pipelined) with the acquisition (imagine applying a threshold to each pixel as it comes off the ADC). Actually, I have the acquisition going fine right now. I can grab images. I was just complaining because the MIPI interface would be something I'd have to implement at this point. It's not a deal breaker (unless there's an inherently high worst-case latency on MIPI, and then it might be) but the interface isn't doing me any favors. I am more focused on the processing on the FPGA. I don't want to reinvent it but will if I have to.
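For anyone checking the numbers, here is a back-of-the-envelope sketch of the frame rate, plus a software model of thresholding each sample as it arrives and then grouping the results into frames. It assumes one ADC sample per pixel with no blanking or overhead between frames, and the function names are made up for illustration.

```python
from typing import Iterable, Iterator, List

SAMPLE_RATE_SPS = 25_000_000   # 25 MSPS, from the post
WIDTH, HEIGHT = 60, 60         # frame size, from the post

# Assumption: one sample per pixel, no dead time between frames.
pixels_per_frame = WIDTH * HEIGHT                              # 3600
frames_per_second = SAMPLE_RATE_SPS / pixels_per_frame         # roughly 6944
frame_period_us = pixels_per_frame / SAMPLE_RATE_SPS * 1e6     # roughly 144 us


def threshold_stream(samples: Iterable[int], level: int) -> Iterator[int]:
    """Threshold each sample as it arrives, before a full frame exists."""
    for sample in samples:
        yield 1 if sample > level else 0


def frames(bits: Iterable[int], width: int, height: int) -> Iterator[List[List[int]]]:
    """Group a flat stream of pixels into width x height frames."""
    row: List[int] = []
    frame: List[List[int]] = []
    for bit in bits:
        row.append(bit)
        if len(row) == width:
            frame.append(row)
            row = []
            if len(frame) == height:
                yield frame
                frame = []


if __name__ == "__main__":
    print(f"{frames_per_second:.0f} frames/s, {frame_period_us:.0f} us per frame")
```

Because the threshold is applied per sample, the only latency it adds in this model is the per-pixel operation itself; the frame period of about 144 us is what leaves headroom under a sub-millisecond budget.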

Alternatives to NI-9208 or DAQ competitors?

infinitenothing replied to HorseBattery_StapleGuy's topic in Hardware

You could consider building it yourself. Here's a 24-bit, 8-channel, 250 kSamp/sec ADC we use: https://www.analog.com/media/en/technical-documentation/data-sheets/AD7768-7768-4.pdf

-

FPGA Vision Toolkits

infinitenothing replied to infinitenothing's topic in Machine Vision and Imaging

I have a feeling that's not going to be fast enough. My images are relatively small, maybe 60x60 x maybe 10 cameras, but I need really low latency (sub-millisecond). I'm planning on bringing the sensors/pixels into an ADC directly so I don't have to worry about any interfaces slowing me down. I'm hoping the processing is relatively simple (e.g., take a threshold, measure perimeter). My plan A is a FlexRIO (e.g., 5751B) or a scope like the 5170. Have you used the Kria or Jetson platforms? Is my application in range?

-

I'm looking to process images with very low latency. I don't know if I have time to ship it off to the CPU and back to make a decision and react. I see that NI has a toolkit: https://www.ni.com/en-us/support/documentation/supplemental/18/ni-vision-fpga-function-support-and-compatibility.html It seems a bit limited though. For example, I'd like a bit more from the particle analysis toolkit, things like perimeter and the Max Feret Diameter. Does anyone have any suggestions? I'd rather buy than build.
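For reference, this is roughly what those two measurements mean, as a brute-force software sketch rather than anything FPGA-ready. It makes simplifying assumptions that may not match NI's definitions: the whole binary mask is treated as a single particle, perimeter is counted as exposed pixel edges, and the Feret diameter is measured between pixel centers. The function names are made up for illustration.

```python
from itertools import combinations
from math import hypot
from typing import List, Tuple

Mask = List[List[int]]


def foreground(mask: Mask) -> List[Tuple[int, int]]:
    """(row, col) of every nonzero pixel in the mask."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]


def edge_perimeter(mask: Mask) -> int:
    """Count pixel edges that border background (4-connectivity)."""
    pts = set(foreground(mask))
    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    return sum((r + dr, c + dc) not in pts for r, c in pts for dr, dc in neighbours)


def max_feret_diameter(mask: Mask) -> float:
    """Largest center-to-center distance between any two foreground pixels."""
    pts = foreground(mask)
    return max(
        (hypot(r1 - r2, c1 - c2) for (r1, c1), (r2, c2) in combinations(pts, 2)),
        default=0.0,
    )


# Hypothetical sample: a 2x2 particle in a 4x4 image.
square = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
```

The pairwise search is O(n²) in the number of particle pixels, which is tolerable for a 60x60 image in software but would need a different formulation (for example, tracking extremes along a fixed set of directions) to stream through an FPGA.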

-

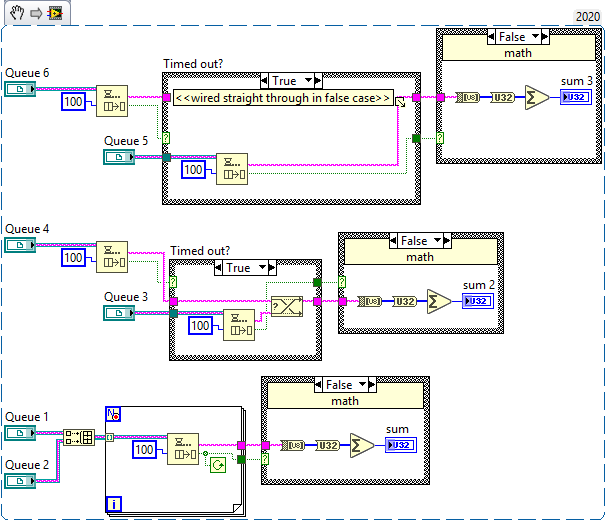

Network streams give you a few things:

1) TCP's guaranteed delivery can be foiled by the OS. I know in some cases you can pull the cable and one side will not get an error because it put the message in the OS buffer, but the other side will obviously fail to get the message. It's a little tricky at that point to figure out exactly which messages need to be retransmitted. I believe network streams can tolerate that disconnect.
2) Explicit buffer sizing at the application layer. TCP uses buffering at the OS layer, which is much harder to poke into. Of course, with network streams, memory use is really bad with variable-sized messages, so YMMV.
3) A flush method. This comes in handy if you want the host to do something smart when a message takes too long to get to the other side. This is also useful in cases where the host and client are developed by two different parties and the sender wants to prove their transmission. You can of course roll your own with TCP but that's one more thing TBD.
4) "Connected" property node. I'm guessing there's some sort of heartbeat underneath. You're totally allowed to ignore this.
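If you do roll your own on top of TCP, one possible shape is application-level acknowledgements: number each message, have the receiver ack it, and let a flush call wait until nothing is outstanding. The sketch below is only an illustration of that idea in Python. It is not how network streams work internally, and the wire format and the names (`AckedSender`, `serve_one`, `demo`) are made up for the example.

```python
import socket
import struct
import threading
import time

HEADER = struct.Struct("!II")  # hypothetical wire format: sequence number, payload length
ACK = struct.Struct("!I")      # the receiver echoes the sequence number back


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or raise if the peer goes away."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


class AckedSender:
    def __init__(self, sock: socket.socket) -> None:
        self.sock = sock
        self.next_seq = 0
        self.unacked = {}  # seq -> payload, i.e. what would need resending

    def send(self, payload: bytes) -> int:
        seq = self.next_seq
        self.next_seq += 1
        self.unacked[seq] = payload
        self.sock.sendall(HEADER.pack(seq, len(payload)) + payload)
        return seq

    def flush(self, timeout: float) -> bool:
        """Wait until every message is acknowledged; False on timeout or error."""
        deadline = time.monotonic() + timeout
        try:
            while self.unacked:
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    return False
                self.sock.settimeout(remaining)
                (seq,) = ACK.unpack(recv_exact(self.sock, ACK.size))
                self.unacked.pop(seq, None)
        except OSError:  # covers timeouts and connection errors
            return False
        return True


def serve_one(listener: socket.socket, received: list) -> None:
    """Receiver side: store each message, then acknowledge it."""
    conn, _ = listener.accept()
    with conn:
        try:
            while True:
                seq, length = HEADER.unpack(recv_exact(conn, HEADER.size))
                received.append(recv_exact(conn, length))
                conn.sendall(ACK.pack(seq))
        except ConnectionError:
            pass  # sender closed the connection


def demo():
    received = []
    with socket.create_server(("127.0.0.1", 0)) as listener:
        port = listener.getsockname()[1]
        worker = threading.Thread(target=serve_one, args=(listener, received), daemon=True)
        worker.start()
        with socket.create_connection(("127.0.0.1", port)) as sock:
            sender = AckedSender(sock)
            sender.send(b"home axis 1")
            sender.send(b"status?")
            ok = sender.flush(timeout=2.0)
        worker.join(timeout=2.0)
    return ok, sender.unacked, received


if __name__ == "__main__":
    print(demo())
```

If `flush` returns False, whatever is left in `unacked` is the set of messages the application knows were never confirmed, which is exactly the information plain TCP does not hand back after a pulled cable. A real implementation would still need reconnect and resend logic, plus duplicate handling on the receiver.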

-

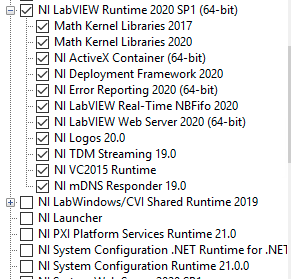

I keep up with the latest so that if NI breaks some functionality I can be loud and obnoxious and hopefully get things fixed in a future version. My thanks go to all the other early adopters. Oh, but I use the single seats and just pay for the SSP each year. It's just a cost of doing business as I see it.

-

I DMed you, but I was looking more for a process than a solution to my particular problem. By process I mean, for example: who's the backup for the account manager? Who can tell me if the TSE is out of the office? Those are my usual "go to"s.

-

Is there a certain protocol for escalating support issues? I'm calling in on my service request daily now—it goes straight to voicemail. I added a note and emailed almost a week ago. My account manager was CCed. How do I get someone to talk to me?

-

I'm imagining a collaboration where one developer develops the code and a different developer develops and tests the installer. The only problem I see with this is that, by default, there's just one lvproj file with both the code and the installer build spec in it, so both developers would be working on the same "file". It might be possible to make two lvproj files, with the build spec in one and the installer in the other. Or maybe the code developer delivers a packed project library to the installer developer? Has anyone gone down one of these paths?

-

Need help hiring a competent LabVIEW programmer!

infinitenothing replied to Cat's topic in LAVA Lounge

I was actually just pointing out that the employees of the integrators have had a reasonable amount of training and experience and are possibly an undervalued source of talent worth reaching out to. I'm not sure why you guys are all up in arms about ethics. There's nothing wrong with offering someone a job at better pay. That's just natural market efficiency. It didn't occur to me that you might have the opportunity to hire someone from an integrator you are already working with (I was assuming you didn't have an integrator already), but if that was the case, any ethical issues should be codified in the contract you signed with the integrator.

-

While I'm extracting "secrets": Unicode in 2022? (Nods hopefully, looking to others to assure me everything is going to be OK)

-

Need help hiring a competent LabVIEW programmer!

infinitenothing replied to Cat's topic in LAVA Lounge

Find your local integrator and poach anyone who's been there for a few years. Basically, the integrators like to "train on the job" so the customers are effectively paying for the training. You can usually outpay the integrator for talent because they have to maintain margins on their contract hourly rates.

-

When is LV2021 being released? Seems a bit odd to get everyone excited and then not have the product immediately available.