LAVA 1.0 Content

Posts: 2,739

Days Won: 1


Posts posted by LAVA 1.0 Content

-

Hi all,

I'm trying to repair an old LabVIEW program written long ago by someone long gone. The program was moved from an old computer running W2K to a new computer running XP, and now it doesn't work. I've traced the problem to the attached VI. The Call Library Function Node in the first while loop outputs a value that causes the AND gate feeding the Select to output False, so the Select outputs a value defined in the case structure.

Bottom line: the default data string input to the VI is a path to a configuration file, and that path is held in a global variable feeding the VI. I set it to C:\filepath (because that is where the configuration file is), but when the VI runs it changes the drive to E:\filepath and then writes that back into the global. There is no E: drive, so the file can't be opened.

I modified the program by wiring a constant True into the Select, and the rest of the program operates fine, but I'm hesitant to leave it that way without understanding what this VI is doing.

Any ideas out there? I would really appreciate any help, with the added benefit of learning something.

Thanks in advance.
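Rather than hardwiring True, one option is to accept the rewritten path only when it actually exists. The following is a minimal Python sketch of that guard, not a reconstruction of what the original VI or its Call Library Function Node does; the function name and the `config.ini` file name are hypothetical.

```python
import os


def choose_config_path(configured: str, rewritten: str) -> str:
    """Pick which configuration-file path to open.

    Hypothetical guard (not the original VI's logic): accept the path the VI
    rewrote (e.g. the E: drive version) only if that file actually exists;
    otherwise keep the path configured in the global (e.g. the C: version).
    """
    if os.path.exists(rewritten):
        return rewritten
    return configured


if __name__ == "__main__":
    # On a machine without an E: drive this keeps the configured C: path.
    print(choose_config_path(r"C:\filepath\config.ini", r"E:\filepath\config.ini"))
```

In LabVIEW terms this would mean replacing the constant True with a check on the candidate path (for example a file-existence test) before the Select.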

-

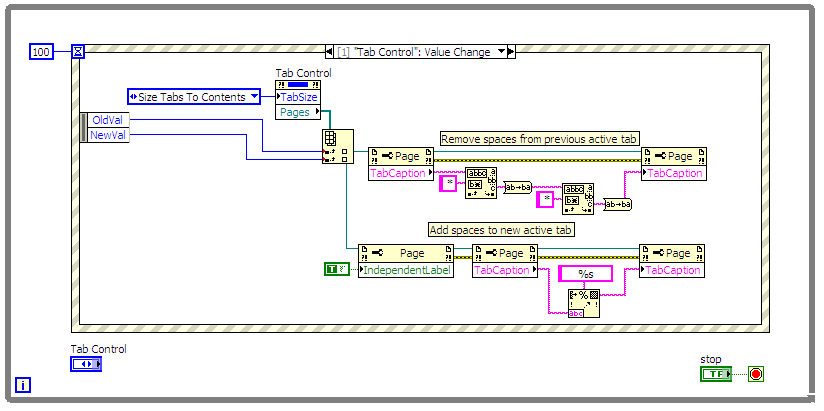

Using a property node for the Tab control, you can programmatically change the page captions, adding spaces to the active page's caption and removing them from the others. If Tab Size is set to Fit to Contents, the active tab grows to fit the extra spaces.

(I didn't use the OpenG Trim Whitespace VI, but I'm sure someone else will point you to them.)
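The caption logic can be sketched outside LabVIEW. This minimal Python sketch (a stand-in for the property-node loop, with a hypothetical function name and a default padding of two spaces) trims every caption and then pads only the active one.

```python
from typing import List


def pad_tab_captions(captions: List[str], active: int, pad: int = 2) -> List[str]:
    """Return new page captions with only the active page padded.

    Every caption is trimmed first, which strips the spaces added for the
    previously active page (the job a Trim Whitespace VI would do); the active
    caption then gets `pad` spaces on each side so a Fit to Contents tab widens.
    """
    trimmed = [caption.strip() for caption in captions]
    spaces = " " * pad
    return [
        f"{spaces}{caption}{spaces}" if index == active else caption
        for index, caption in enumerate(trimmed)
    ]


if __name__ == "__main__":
    # Page 1 becomes active; the padding left on page 0 is removed.
    print(pad_tab_captions(["  Setup  ", "Run", "Log"], active=1))
```

Trimming everything on each page change avoids having to remember which page was previously active. Note that it also strips any leading or trailing spaces a caption was meant to have.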

-

QUOTE (crelf @ Jul 1 2008, 08:19 AM)

Can't speak to the ITO, but I believe the Post-it notes were a by-product of masking tape adhesive research (http://www.snopes.com/business/origins/post-it.asp). Rather than making it stronger, they tried to make it weaker.

Ben

-

QUOTE (JustinReina @ Jun 30 2008, 03:31 PM)

How about using a cursor positioned at the origin?

http://lavag.org/old_files/monthly_07_2008/post-29-1214915999.png

Ben

-

QUOTE (KatieT @ Jul 1 2008, 06:24 AM)

Hi everyone, I'm an EE major working on my internship for the summer, and thus started learning LabVIEW from the very beginning. Well, I must say this is so much different than text programming, considering that's all we do at school... quite an interesting way to program, though.

Anyway, nice to meet you all!

Catherine

Wait, your EE school doesn't do LabVIEW?

If you contact NI and mention the school name, NI might start throwing student editions at that school.

What's the internship about?

I know it's normal in the US to do internships in the summer, but how do companies handle that? During summer the number of employees is lower than normal, so isn't there less support for students? Could anyone elaborate?

I worked during the summer in nurseries cutting lilies to pay for my school (which is really cheap in the Netherlands).

Ton

-

QUOTE (Jim Kring @ Jun 30 2008, 12:41 PM)

That just goes to prove you can get to heaven using a ladder -- especially a very tall one.

The problem I have with very tall ladders is that you have time to think about what is going to happen before it occurs.

I think that is part* of the reason children are born short, so they figure out the walking thing before they realize it's dangerous.

Ben

* Another factor that can't be ignored: what woman in her right mind would participate in any activity that resulted in having to birth a full-grown human?

-

QUOTE (mzu @ Jun 30 2008, 12:06 PM)

I am a graduate student doing my PhD in experimental physics. I am finishing my PhD in ~4 months, but I have become disappointed with an academic career. My PhD is an experimental one, so:

- several scientific apparatuses were built, and Labview was used in many.

- most of them distributed (many instruments (20+), several computers (2-3))

- many required precise synchronization between different instruments

- including real-time feedback based on image processing

- software development culture was maintained:

all code kept in source control (Subversion was used),

requirements specifications were composed for each project,

technical documentation was properly maintained for each project.

- Projects consisted of 500+ VIs, including multiple state machines communicating by sophisticated protocols.

- enjoyed every step, from architecture development to implementation,

- many pieces of software became standards de-facto for the lab

- supervised development of a couple of less complex projects in an academic environment.

What else?

Around 6 years ago I had a job where I developed a number of kernel drivers for Linux and Windows.

Had some taste of embedded development (C, C++, asm for MIPS R4000) for a VxWorks-like operating system. Developed a network card driver and implemented a special-purpose low-level protocol. Was responsible for quality assurance on one of the projects, and for communicating with hardware engineers on another one. Debugged multiple hardware-software issues.

During the last ~8 years, knowledge of C, C++, and general Windows/Linux architecture allowed me to connect multiple (20+) devices that had no native driver to LabVIEW (different flavors of cameras, nano-positioners, special controllers, a raster output device, etc.).

Just for the fun of it I took the CLAD certification and will take the CLD next week. From what I have read about the CLA, I think I can master it as well; I'm just not sure this is the right move for me now.

I even have an article on LabVIEW accepted for the last (unpublished) volume of LabVIEW Technical Resource (I have a PDF draft with the editors' corrections).

I think all of the above makes a strong basis for continuing in a LabVIEW career.

The question is: what kind of job matches my qualifications? Does it qualify me for Senior Software Developer?

Does taking the CLA make me stand out any better? Or should something else be done before getting back on the wagon? Any advice, please?

Check out

DSAutomation.com

The CLA will help with us. We have seven CLAs at the moment, and picking up an eighth will be a bonus for us (we'll have the most in the world, unless Bloomy does another one).

Are you a US citizen?

If you apply, tell them "Ben" sent you.

Ben


-

QUOTE (Aristos Queue @ Jun 27 2008, 08:30 PM)

Most benchmarks have found that the variant attribute (like the one you implemented here) is a fairly efficient lookup table mechanism in LV 8.2 and later (it was dog slow before that). I'd be surprised if the array version was able to keep up. I can say that the variant attribute model will get a further speed improvement in the next version of LV -- not much, but a bit. You might rerun the benchmarks when that becomes available.

Download File: post-3370-1214837047.llb
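For readers comparing the two approaches, here is a minimal Python sketch (not LabVIEW) contrasting a keyed lookup, with a dict standing in for the variant-attribute table, against parallel name/value arrays searched linearly the way Search 1D Array would. It makes no claim about how LabVIEW implements variant attributes internally, and since timings depend on the machine, none are quoted.

```python
from typing import Dict, List, Optional


class ArrayLookup:
    """Parallel name/value arrays searched linearly (like Search 1D Array)."""

    def __init__(self) -> None:
        self.names: List[str] = []
        self.values: List[float] = []

    def set(self, name: str, value: float) -> None:
        try:
            i = self.names.index(name)  # linear scan, O(n)
            self.values[i] = value
        except ValueError:
            self.names.append(name)
            self.values.append(value)

    def get(self, name: str) -> Optional[float]:
        try:
            return self.values[self.names.index(name)]
        except ValueError:
            return None


class KeyedLookup:
    """Keyed table; a dict stands in for variant attributes here."""

    def __init__(self) -> None:
        self.table: Dict[str, float] = {}

    def set(self, name: str, value: float) -> None:
        self.table[name] = value

    def get(self, name: str) -> Optional[float]:
        return self.table.get(name)


if __name__ == "__main__":
    import timeit

    n = 2000
    arr, keyed = ArrayLookup(), KeyedLookup()
    for i in range(n):
        arr.set(f"tag{i}", float(i))
        keyed.set(f"tag{i}", float(i))
    last = f"tag{n - 1}"  # worst case for the linear scan
    print("array:", timeit.timeit(lambda: arr.get(last), number=1000))
    print("keyed:", timeit.timeit(lambda: keyed.get(last), number=1000))
```

The array version's cost grows with the number of entries, while the keyed version does not, which is why the array approach keeping pace would be surprising for large tables.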

QUOTE

I worked with one of those downloaded from the NI site (somewhere) that did not play well with parallel processes on a cFP unit. I ended up having to sprinkle waits all over the place to get it to share the CPU.

QUOTE ( @ Jun 30 2008, 09:01 AM)

STM is fine if the host application is LabVIEW. Modbus TCP is a widely known protocol in the automation industry. If I have a cRIO that needs to talk to a PLC or HMI, then STM is not going to work. Modbus TCP is also an easy way to get a cRIO to communicate with an OPC server, like Kepware for example. In all of my LV-RT applications, there is a Modbus TCP server running, and any Modbus TCP client can communicate with this device. I have a crude system, much like the TCE, that is employed to expose the memory map (i.e. the CVT) of the Modbus TCP server.

David,

Thank you for the feedback. In the case of a cRIO talking to a PLC, I assume you will use the Modbus client VIs on the cRIO. I will take a look at the current VI library to address the issues mentioned by Ben.

On the Modbus server side, I will look at developing a Modbus implementation inside the remote CVT interface (CVT Client Communication) as an alternative to STM, though this will be more of a long-term project. In the short term it may be easier to update the server-side VIs of the Modbus library and develop a separate reference design that shows how to use the CVT with the server-side Modbus implementation to expose the controller data to the network.

Christian
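For readers unfamiliar with the Modbus TCP framing discussed above, here is a minimal Python sketch of a client "Read Holding Registers" request (function code 0x03). It follows the public Modbus TCP framing (7-byte MBAP header followed by the PDU). It is not part of the NI Modbus Library, and the function name is hypothetical.

```python
import struct


def read_holding_registers_request(transaction_id: int, unit_id: int,
                                   start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' (function 0x03) request.

    MBAP header: transaction id (2 bytes), protocol id = 0 (2 bytes),
    length = number of bytes that follow, i.e. unit id + PDU (2 bytes),
    unit id (1 byte). PDU: function code (1 byte), start address (2 bytes),
    quantity (2 bytes). All multi-byte fields are big-endian.
    """
    if not 1 <= quantity <= 125:
        raise ValueError("a single read may request 1..125 registers")
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


if __name__ == "__main__":
    # Request 10 registers starting at address 0 from unit 1.
    print(read_holding_registers_request(1, 1, 0, 10).hex())
```

A server exposing a CVT would answer such a request by mapping register addresses onto tag values, which is the "memory map" role David describes.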

-

QUOTE (LV_FPGA_SE @ Jun 28 2008, 06:56 PM)

SCRAMNet has performance specs that I am going to try to measure, but it looks like even with the fastest counter/timer rig NI sells, I'll only be able to time bulk transfers.

RE:

The Modbus Library...

I worked with one of those downloaded from the NI site (somewhere) that did not play well with parallel processes on a cFP unit. I ended up having to sprinkle waits all over the place to get it to share the CPU.

Just trying to help,

Ben

-

QUOTE (han_5583 @ Jun 30 2008, 07:48 AM)

=> you are using the analog output...

QUOTE (han_5583 @ Jun 29 2008, 11:09 AM)

USB-6008. Please advise.

=> Analog Output rate 150 Hz...

=> your target time is 1 second, so that's why it runs at 1 Hz...

=> change the target time of the Express VI (add an input to see what happens when you decrease the target time)

=> place a "wait" in your loop...

=> use the stop input of your DAQ Express VI

=> the express functions produce some "overhead"
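The 1 Hz symptom follows from simple arithmetic: each iteration lasts at least as long as the pacing target and at least as long as the DAQ call itself. This minimal Python sketch makes that explicit. The 150 Hz cap is the analog output rate quoted above for the USB-6008, and the 5 ms call time used below is a hypothetical overhead figure.

```python
def effective_loop_rate_hz(target_period_s: float, call_time_s: float,
                           device_max_hz: float = 150.0) -> float:
    """Rough upper bound on how often a software-timed loop updates an output.

    An iteration cannot be shorter than the pacing target nor shorter than
    the DAQ call (driver / Express VI overhead); the device's own update
    limit caps the result as well.
    """
    period = max(target_period_s, call_time_s)
    if period <= 0:
        raise ValueError("period must be positive")
    return min(1.0 / period, device_max_hz)


if __name__ == "__main__":
    print(effective_loop_rate_hz(1.0, 0.005))   # 1 s target: the 1 Hz symptom
    print(effective_loop_rate_hz(0.01, 0.005))  # 10 ms target: about 100 Hz
```

Lowering the target time only helps until the call overhead or the device limit becomes the larger term.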

-

QUOTE (James P. Martin @ Jun 28 2008, 02:19 PM)

Hi All, I have a PCI-6221 DAQ card (68-pin) and am using LV 8.0. I want to control a servo motor with this configuration. I have experience running the servo motor from a PLC by inputting the calculated number of pulses to the servo run command in ladder logic, and it worked fine. Similarly, I want to run the servo motor using LabVIEW so that I can input some predefined pulses, but I don't know how to input the pulses or which pins to connect and use. I have a Mitsubishi servo motor and its drive; could anybody help me out with some sample code and a hardware connection configuration? Thanks & Regards, James

What interface are you using for your drive? Without knowing that, it's not possible to give you good advice...

I'm using analog output or RS232 for my drives, but it depends on the drive...
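If the drive does accept a pulse-train input (an assumption; as noted, it depends on the drive), the same pulse arithmetic used in the ladder logic carries over: the number of pulses sets the distance and the pulse frequency sets the speed. In this minimal Python sketch, `pulses_per_rev` (the drive's command-pulse setting) and `mm_per_rev` (the mechanical lead) are hypothetical values; on the DAQ side the result would typically feed a finite pulse-train counter output, with direction on a separate digital line.

```python
from typing import Tuple


def pulse_command(distance_mm: float, speed_mm_s: float,
                  pulses_per_rev: int, mm_per_rev: float) -> Tuple[int, float]:
    """Return (pulse count, pulse frequency in Hz) for one position-mode move.

    pulses_per_rev: pulses the drive is configured to take per motor
    revolution (hypothetical setting). mm_per_rev: travel per revolution
    (hypothetical lead). The count is a magnitude; the sign of distance_mm
    would be sent separately as the direction signal.
    """
    pulses_per_mm = pulses_per_rev / mm_per_rev
    count = round(abs(distance_mm) * pulses_per_mm)
    frequency_hz = speed_mm_s * pulses_per_mm
    return count, frequency_hz


if __name__ == "__main__":
    # Hypothetical drive: 4000 pulses/rev, 10 mm lead; move 25 mm at 50 mm/s.
    print(pulse_command(25.0, 50.0, 4000, 10.0))
```

Check the drive manual for the maximum command-pulse frequency before choosing the speed.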

-

QUOTE (David Wisti @ Jun 28 2008, 09:37 AM)

For Modbus, please take a look at the Modbus Library for LabVIEW (http://sine.ni.com/devzone/cda/epd/p/id/4756).

-

Hello Michael,

QUOTE (sachsm @ Jun 27 2008, 04:32 PM)

We considered the Shared Variable, but for various reasons we could not use it in this particular implementation and meet the application requirements. Our primary platform for this architecture is LV RT as well as LV Touchpanel so anything we consider has to work in LV Windows/DSC, LV RT and LV Touchpanel.

This is something we are investigating.

Download File: http://lavag.org/old_files/post-3370-1214609959.vi

Our rough benchmark for accessing scalar values using the current CVT implementation is 7-10 microseconds per read on a cRIO-900x controller. This is about the most time we can spend to meet the needs of typical machine control applications, which may contain 100s of tags and have loop rates around 10-100 Hz.

I really appreciate all the feedback and ideas. Please keep them coming.
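The benchmark figures above translate directly into a loop-time budget. For example, 300 tags at the pessimistic 10 µs per read cost 3 ms, which is 30% of the 10 ms period of a 100 Hz loop and 3% of a 10 Hz loop. This minimal Python sketch does that arithmetic; the tag count of 300 is a hypothetical stand-in for "100s of tags".

```python
def cvt_read_fraction(tags: int, read_time_us: float, loop_rate_hz: float) -> float:
    """Fraction of one loop period spent reading `tags` scalar values."""
    period_us = 1e6 / loop_rate_hz
    return tags * read_time_us / period_us


if __name__ == "__main__":
    for rate in (10, 100):
        # Hypothetical 300 tags at the pessimistic 10 us per read.
        print(f"{rate} Hz: {cvt_read_fraction(300, 10.0, rate):.0%} of the loop period")
```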

-

bbean,

I wrote the original code for the TCE and CVT and would be glad to help out with any extra information and explanations as well as the TCE source code.

The machine control architecture was created by the Systems Engineering group at NI in conjunction with a customer to solve a particular application. At the same time, we wanted to create a LV reference design that we could share with a wider audience and that would hopefully help (more than confuse) other LV developers. We know we can't satisfy everyone's needs with our code, which is why (almost) everything is supplied in source to allow you to make changes and additions as needed by your application.

So while we tried to keep things as general as possible, some of the things done and not done are due to the specific application we worked on. Lack of support for arrays was one of these. We knew we wanted support for arrays in the long term, but the particular application did not require it. So we added support for arrays to the data structure but did not get the chance to implement it in the CVT. As you mentioned, supporting arrays of different sizes in one functional global while performing well in RT is far from trivial, and we did not open that can of worms. In the TCE it will be a very simple change to add support for arrays.

My plan for the TCE is to release an updated version in a few months (after NIWeek), including the source code, and to make a lot more of it configurable (list of cRIO modules supported, list of protocols/interfaces supported, etc.). Ideally I'd like to create a plug-in model where users/developers can create plug-ins for new protocols and interfaces they want to add. The TCE may become a much more generic tag and channel configuration tool for use in applications beyond machine control. We have many requests for such tools in applications with channel counts in the 1000s, and we would like to supply a generic tool for this purpose.

Please let me know any specific questions you have and how I can help you out.
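To make the CVT idea concrete for readers, here is a minimal Python sketch of a current value table: named scalar tags resolved to indices once, then read and written by index under a lock, much as a functional global serializes access. This is an illustration of the concept only; the class and method names are hypothetical and are not the NI CVT API. Like the CVT described above, it deliberately does not handle arrays.

```python
import threading
from typing import Dict, List, Union

Value = Union[bool, int, float, str]


class CurrentValueTable:
    """Minimal current-value table of named scalar tags.

    Names are resolved to indices once, so a time-critical loop only does
    list indexing; a lock serializes access the way a functional global
    serializes calls. Arrays are intentionally not supported.
    """

    def __init__(self, initial: Dict[str, Value]) -> None:
        self._names: List[str] = list(initial)
        self._index: Dict[str, int] = {n: i for i, n in enumerate(self._names)}
        self._values: List[Value] = list(initial.values())
        self._lock = threading.Lock()

    def index_of(self, name: str) -> int:
        """Look up a tag's index (do this once, outside the fast loop)."""
        return self._index[name]

    def read(self, index: int) -> Value:
        with self._lock:
            return self._values[index]

    def write(self, index: int, value: Value) -> None:
        with self._lock:
            self._values[index] = value


if __name__ == "__main__":
    cvt = CurrentValueTable({"Pump On": False, "Tank Level": 0.0})
    level = cvt.index_of("Tank Level")
    cvt.write(level, 42.5)
    print(cvt.read(level))
```

Resolving names up front is what keeps per-read cost small and roughly constant, which matters for the microsecond-level budget mentioned earlier in the thread.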

-

QUOTE (Yair @ Jun 27 2008, 05:03 AM)

P.S. Stephen, if you see this, please thank the kind soul at NI who decided that placing points on wire junctions was a good idea. It would really have made reading this piece of code easier.

I was thinking the same thing. No dots? None of these wires are connected!! :headbang:

-

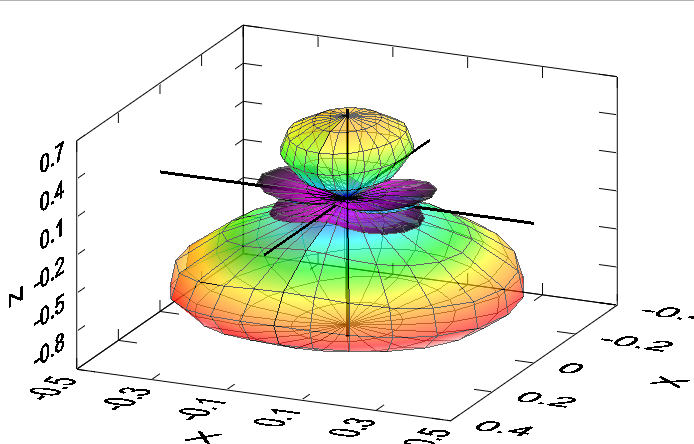

QUOTE (CarlosCalderon @ Jun 26 2008, 12:27 PM)

This is the VI. Thanks, Ben

You are plotting the ellipsoid fine.

The equation you are using assumes the ellipsoid is centered at the origin and that the axis of rotation is along one of the coordinate axes. You need to modify your equation so that it rotates the ellipse about the line connecting your two points.

Ben
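One way to do that is to generate the points of the canonical ellipsoid (centered at the origin, axis of revolution along z), then rotate the z axis onto the direction between the two points with Rodrigues' rotation formula, and finally translate to their midpoint. This minimal pure-Python sketch assumes the two points are the ends of the axis of revolution, so the semi-axis along that line is half their distance. If they are the foci instead, only the value of `a` changes. The function names and grid sizes are hypothetical.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def rotate_z_onto(v: Vec, d: Vec) -> Vec:
    """Rotate v by the rotation that maps the unit z axis onto unit vector d
    (Rodrigues' formula about the axis k = z x d)."""
    x, y, z = v
    dx, dy, dz = d
    s = math.hypot(dx, dy)  # sine of the angle between z and d
    c = dz                  # cosine of that angle
    if s < 1e-12:           # d is (anti)parallel to z
        return (x, y, z) if c > 0 else (x, -y, -z)
    kx, ky = -dy / s, dx / s                       # unit axis, kz = 0
    cross = (ky * z, -kx * z, kx * y - ky * x)     # k x v
    dot = kx * x + ky * y                          # k . v
    return (x * c + cross[0] * s + kx * dot * (1 - c),
            y * c + cross[1] * s + ky * dot * (1 - c),
            z * c + cross[2] * s)


def ellipsoid_between(p1: Vec, p2: Vec, b: float,
                      n_u: int = 24, n_v: int = 12) -> List[Vec]:
    """Points on an ellipsoid of revolution whose axis runs from p1 to p2.

    Assumption: p1 and p2 are the ends of the axis, so a = |p2 - p1| / 2;
    b is the radius perpendicular to the axis.
    """
    axis = [q - p for p, q in zip(p1, p2)]
    length = math.sqrt(sum(t * t for t in axis))
    if length == 0:
        raise ValueError("p1 and p2 must differ")
    d = (axis[0] / length, axis[1] / length, axis[2] / length)
    a = length / 2
    centre = [(p + q) / 2 for p, q in zip(p1, p2)]
    points: List[Vec] = []
    for i in range(n_v + 1):
        v = math.pi * i / n_v              # angle from the axis, 0..pi
        for j in range(n_u):
            u = 2 * math.pi * j / n_u      # angle around the axis
            local = (b * math.sin(v) * math.cos(u),
                     b * math.sin(v) * math.sin(u),
                     a * math.cos(v))      # canonical ellipsoid, axis along z
            rx, ry, rz = rotate_z_onto(local, d)
            points.append((rx + centre[0], ry + centre[1], rz + centre[2]))
    return points
```

The first point generated lands on p2 and the last row collapses onto p1, which is a quick sanity check when plotting the result.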

-

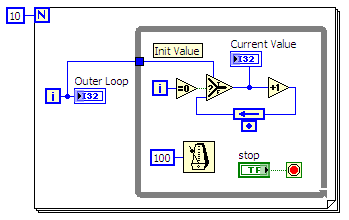

I'm just catching up on some older messages.

Another possible solution to this problem is to use I/O references (new in LV FPGA) in the subVI and specify the I/O channels as constants in the calling VI. The subVI does not need to change, and the user can specify the specific I/O in a clear, readable fashion. You cannot put the I/O references into an array to pass to the subVI, but you can bundle them in a cluster. I have attached a very simple example for 8 DI channels.

-

QUOTE (Eugen Graf @ Jun 24 2008, 06:58 PM)

This thread (http://forums.lavag.org/Special-LabVIEW-version-wish-t3046.html) may be of interest to you. I did at one point create a VI that would produce a 3D hierarchy of an application. SubVIs were distributed along the base of cones where the caller was the apex. Shared VIs would be placed in a central cylinder. Since that code was written using the public beta version of the 3D picture control, it only supported a very small screen, so I was disappointed with the results. I have not returned to that game since.

Ben
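The cone layout described above can be sketched as a small recursive placement. This minimal Python sketch puts each caller at a cone apex and spreads its subVIs evenly around a circle one level below, halving the radius at each level. As a hypothetical simplification, a VI reached a second time keeps its first position instead of moving to a central cylinder for shared VIs, which also keeps recursive call graphs from looping. The names and the `children` mapping are illustrative.

```python
import math
from typing import Dict, List, Tuple

Pos = Tuple[float, float, float]


def cone_layout(children: Dict[str, List[str]], root: str,
                apex: Pos = (0.0, 0.0, 0.0), height: float = 1.0,
                radius: float = 1.0) -> Dict[str, Pos]:
    """Place each caller at a cone apex and its subVIs around the cone base.

    children maps a VI name to the subVIs it calls. Simplification: a VI
    that was already placed keeps its first position (no shared-VI cylinder).
    """
    positions: Dict[str, Pos] = {root: apex}

    def place(vi: str, at: Pos, r: float) -> None:
        subs = [s for s in children.get(vi, []) if s not in positions]
        for k, sub in enumerate(subs):
            angle = 2 * math.pi * k / len(subs)  # evenly around the base
            positions[sub] = (at[0] + r * math.cos(angle),
                              at[1] + r * math.sin(angle),
                              at[2] - height)    # one level below the caller
        for sub in subs:
            place(sub, positions[sub], r / 2)    # narrower cones deeper down

    place(root, apex, radius)
    return positions
```

Placing all siblings before recursing means a VI called at several depths ends up at the shallowest level where it is first met.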

-

QUOTE (Yen @ Jun 24 2008, 01:09 PM)

QUOTE (mballa @ Jun 24 2008, 11:25 PM)

Thank u ... it is helpful....

-

Unfortunately LabVIEW RT currently (version 8.5) does not contain the necessary driver stack to support USB devices other than USB memory devices.

Christian

-

I struggled a bit before noticing that if I have a graph overlaid ever so slightly over an intensity graph, adjusting the scale of the intensity graph using the scale legend buttons does not fire a "scale range changed" event (even though the intensity graph is actually updated). Interestingly, changing the scales manually (by modifying one of the numbers displayed on the scale) still fires an event. It may be more general than that, but this is annoying enough for my purpose. If anybody wants to check this out, I have attached two simple VIs that illustrate this phenomenon.

I have reported the potential bug to NI.

Best,

X

-

QUOTE (Justin Goeres @ Jun 24 2008, 12:18 PM)

In my experience, things like this are really tough to implement because there are lots of weird things that can happen after you display the ring control. The first one off the top of my head is: what happens when the user pulls down the ring control but, instead of clicking inside it to select something, clicks outside it? Normally, that's the behavior when the user wants to cancel what they were doing and exit the item selection without changing the current value of the selector. How do you handle that case?

I'm asking not because I have an answer, and not because I think there's not an answer -- I just mean that the answer isn't obvious to me right now, but your users will expect it to work that way. I've historically found that replicating all the expected behaviors in a LabVIEW control is really hard if not impossible. If you've got a solution, I'd be really interested to hear it.

Ring>>> Mouse Leave Event hides ring?

Ben

Challenge yourself

in LAVA Lounge


Darn you. You wasted my evening away...