
Posts: 798

Days Won: 15


Everything posted by John Lokanis

-

Calling .net and thread starvation

John Lokanis replied to John Lokanis's topic in Calling External Code

Yes, I typically have 40-100 parallel sub-systems, each with several threads (and at least one dedicated to .NET calls to a DB) running at the same time. Normally a call to the DB via .NET executes in milliseconds, so there is no issue. But lately the DB has been responding slowly and deadlocking, which I suspect is causing the .NET calls to hang for a long time and starve my LV code of clock cycles. And yes, all the timer code is LV. In fact, it is a pure LV system apart from the .NET calls for DB access and some occasional XML reading.

So, bumping the thread count seems like a good band-aid for the short term without having to recompile the EXE. Changing the execution system of the .NET code from 'Same as Caller' to something else might also help? I seem to remember hearing that LV will use the other execution systems automatically if one is overloaded, but perhaps I am remembering that incorrectly. Does anyone know the ini strings to adjust the execution system threads? Oh, and does it matter how many cores the machine has, or will the OS manage the threads across the cores on its own?

Thanks,
-John

I am seeing strange behavior in some of my code that looks like thread starvation. I have some code that checks the timer, enters a loop, and then at each iteration of the loop (which should take 1 second or less) checks the timer to see if it has exceeded the allowed time. Occasionally, it will time out with a duration that exceeds the limit by several minutes. It looks as if the VI was frozen and could not iterate the loop for a long time. The same application has a lot of .NET calls to a database going on in parallel operations. I suspect that those calls are taking a long time and stealing all the threads. I don't have any ini settings that change the thread count from the default, and all my VIs use the 'Same as Caller' execution system.

So, my question is: how many simultaneous .NET calls can I execute before all my VIs stop getting any CPU cycles? From the research I have done, it looks like 8, but I am not sure if that is correct for LV2011. Would it help to set the threads per execution system to a larger number in the ini file? Would it help to make my VIs that call .NET execute in a different execution system? This application was built in LV2011, so I need to stick to that environment for now.

Thanks for any ideas.
-John
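The starvation mechanism suspected above can be sketched outside LabVIEW with a fixed-size thread pool. This is a Python analogy, not LabVIEW's actual scheduler: `POOL_SIZE` is a hypothetical stand-in for the threads available to one execution system (the guess of 8 per execution system in LV2011 is unconfirmed), and `slow_db_call` stands in for a hung .NET database call. When every thread in a shared pool is blocked, the timer-check task cannot even start; giving the blocking calls their own pool, roughly what moving them to a different execution system would do, lets it run right away.

```python
import time
from concurrent.futures import ThreadPoolExecutor

POOL_SIZE = 4             # hypothetical stand-in for "threads per execution system"
SLOW_CALL_SECONDS = 0.5   # simulated duration of a slow/hung DB call


def slow_db_call() -> None:
    """Simulates a blocking external call (e.g. a .NET DB query) that is slow to return."""
    time.sleep(SLOW_CALL_SECONDS)


def timer_check(submitted_at: float) -> float:
    """Returns how long this task waited for a free thread before it started running."""
    return time.monotonic() - submitted_at


def measure_start_delay(separate_pools: bool) -> float:
    db_pool = ThreadPoolExecutor(max_workers=POOL_SIZE)
    # When separate_pools is False, the timer task competes with the DB calls
    # for the same fixed set of threads.
    timer_pool = ThreadPoolExecutor(max_workers=POOL_SIZE) if separate_pools else db_pool
    try:
        for _ in range(POOL_SIZE):  # occupy every DB thread with a slow call
            db_pool.submit(slow_db_call)
        future = timer_pool.submit(timer_check, time.monotonic())
        return future.result()
    finally:
        db_pool.shutdown(wait=True)
        if timer_pool is not db_pool:
            timer_pool.shutdown(wait=True)


shared_delay = measure_start_delay(separate_pools=False)
separate_delay = measure_start_delay(separate_pools=True)
print(f"shared pool:    timer task waited {shared_delay:.2f} s")
print(f"separate pools: timer task waited {separate_delay:.2f} s")
```

On a lightly loaded machine the shared-pool wait should be close to `SLOW_CALL_SECONDS`, while the separate-pool wait should be near zero. Adding threads to the shared pool only raises the number of hung calls needed before the same starvation appears.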

-

Thanks for trying. I will just keep advertising this thread to those who find this bug.

-

Looks like this is still private in LV2014.

-

It's not that I didn't like it. I am still trying to decide what path to take overall. I just want maximum simplicity and maximum functionality. Somewhere they intersect, and I hope to figure out where for my needs. I did discuss the different options with Allen Smith at NI Week, and the conclusion was that, for the command pattern across a network, the abstraction (or interface) design was the cleanest, or at least the easiest to understand.

-

AOP architecture issues and options

John Lokanis replied to John Lokanis's topic in Application Design & Architecture

Both, actually. I created a framework that is generic but has features I need for a few specific applications, like network messaging and subscriptions. Then I started implementing a specific application with the framework. That is where I have observed the dependency issue.

I agree, and if I could limit it to just a few classes, that would be fine. But these links build up quickly, to the point where now if I load Actor B to test it, it loads A and C-Z! To paraphrase that old STD PSA from the '80s-'90s: if you are statically linked to one actor, you are linked to every actor it is linked to, and every actor those other actors are linked to. I can actually open a new project, add one of my actor classes and watch as LabVIEW loads 80% of the actors (and many of their messages) into the dependency list. I guess the real question is: should I care? Is this dependency issue just an annoyance, due to IDE slowness and difficulty in testing/developing in isolation, or is it a fundamental flaw in the command-pattern message approach that makes it unworkable for anything other than small, simple systems?

This is basically how I solve the issue when sending messages between two applications. But the level of complexity and the extra classes it introduces would make the project even more difficult to deal with. In reality, I would have to create an interface for every actor and every message to isolate them. It has already become difficult to follow the execution flow, with no way to visualize the overall application other than how I arrange the project. Adding abstract classes on top of everything would make that worse and lead to maintenance issues down the road. I am beginning to think there are many solutions but maybe no good ones.

I am surprised that no AF people have stepped up and defended the use of the command pattern. I would like to hear their thoughts.
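The chain described here ("linked to every actor it is linked to") is a transitive closure over static links. The Python sketch below models it as a graph walk; the class names and links are hypothetical, loosely following the A-launches-B-launches-C example in this thread, and the dictionary stands in for the dependency list LabVIEW builds when a class is loaded.

```python
from collections import deque

# Hypothetical static-link graph: each class lists the classes it links to directly.
STATIC_LINKS: dict[str, list[str]] = {
    "Actor A": ["Launch B Msg"],
    "Launch B Msg": ["Actor A", "Actor B"],  # Do calls A's launch method, which creates B
    "Actor B": ["Launch C Msg", "Status Msg"],
    "Launch C Msg": ["Actor B", "Actor C"],
    "Status Msg": ["Actor A"],               # B must construct a message that executes on A
    "Actor C": [],
    "Test Harness": ["Actor B"],             # we only wanted to test B in isolation
}


def load_closure(start: str) -> set[str]:
    """Return every class pulled into memory when `start` is loaded (breadth-first walk)."""
    loaded = {start}
    pending = deque([start])
    while pending:
        current = pending.popleft()
        for dep in STATIC_LINKS.get(current, []):
            if dep not in loaded:
                loaded.add(dep)
                pending.append(dep)
    return loaded


print(sorted(load_closure("Test Harness")))
```

Loading only Actor B through the harness pulls in every class in the graph, because the single back-link from B's status message to A connects everything; a leaf such as Actor C loads only itself.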

AOP architecture issues and options

John Lokanis replied to John Lokanis's topic in Application Design & Architecture

You are correct, but in most AF systems the message simply calls a method in the actor where the real work is done. So, the code that launches B would be in A. That does not mean you could not put the code only in the message's 'Do' method and isolate B from A. But then you need to ask how the message is being called. In most cases, A is sending the message to itself to create B due to some state change or other action. In that case, some method in A needs to send the message to itself, so A has a static link to the message, which has a static link to B. This is exactly what happened to me, and it took a while to understand what was happening since there is no way to visualize this.

Wouldn't this result in even more classes? I would need the abstract interface class and then a concrete class for every actor? Or am I misunderstanding you?
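To the question above: yes, the interface approach adds classes, one abstract interface per actor plus the concrete actor. A minimal Python sketch of the idea, using `abc.ABC` as a stand-in for a LabVIEW abstract parent class (all class and method names are hypothetical): the message's `do` depends only on the interface, so a test harness can substitute a fake receiver without loading Actor A or Actor B.

```python
from abc import ABC, abstractmethod


class LauncherInterface(ABC):
    """Hypothetical abstract interface: the only type the message knows about."""

    @abstractmethod
    def launch_child(self, child_name: str) -> None: ...


class LaunchChildMsg:
    """Message whose execute step depends on the interface, not on Actor A's concrete class."""

    def __init__(self, child_name: str) -> None:
        self.child_name = child_name

    def do(self, receiver: LauncherInterface) -> None:
        receiver.launch_child(self.child_name)


class ActorA(LauncherInterface):
    """Concrete actor: the only place that would statically link to the child class."""

    def __init__(self) -> None:
        self.children: list[str] = []

    def launch_child(self, child_name: str) -> None:
        self.children.append(child_name)  # stand-in for actually launching Actor B


class FakeLauncher(LauncherInterface):
    """Test double: exercises the message without loading Actor A or Actor B."""

    def __init__(self) -> None:
        self.requests: list[str] = []

    def launch_child(self, child_name: str) -> None:
        self.requests.append(child_name)


fake = FakeLauncher()
LaunchChildMsg("Actor B").do(fake)
print(fake.requests)  # ['Actor B']
```

The cost is visible in the sketch: one extra abstract class per actor, and every method a message needs must be declared on that interface as well as implemented on the actor.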

AOP architecture issues and options

John Lokanis replied to John Lokanis's topic in Application Design & Architecture

Yes, there are several ways of coupling classes together. But the command pattern causes very tight coupling. This is because message classes are statically linked to senders (they need a copy of the class to construct the message, since the message class control defines the data being sent) and statically linked to the receiver (the message contains the execute method, which needs access to the receiver's private data). So, if we could divorce the message data from the message execution and then agree on a common set of objects, typedefs or other data types to define all message data, we could break most of the coupling and only share those data objects (the 'language' of the application). But then we need to ensure the message names (strings) are always correct and that the sender constructs the data in the same arrangement the receiver expects.

The next issue is spawning child actors. Normally this requires an actor to have a static link to the child actor's class to instantiate it. To break the coupling, we could load the child from disk by name, but then we need some means to set any initial values in its private data. This could be accomplished by an init message using the same loose coupling described above. But will the end result be a better architecture? As noted, this design is at risk of runtime errors. But the advantage is you can fully test an actor in isolation. If only there were some way to get both benefits.
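The child-spawning half of this idea (launch by name, then configure with an init message) might look like the Python sketch below. It is an analogy under stated assumptions: `AVAILABLE_ACTORS` is a hypothetical name-to-class table standing in for loading a class from disk by path at runtime, and `InitData` plays the role of the shared 'language' type that both parent and child know. A misspelled name surfaces only as a runtime `LookupError`, which is the risk described above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class InitData:
    """Shared 'language' type: the only thing parent and child must both know."""
    channel: str
    rate_hz: float


class Actor:
    def handle(self, msg_name: str, payload: object) -> None:
        raise NotImplementedError


class LoggerActor(Actor):
    def __init__(self) -> None:
        self.config: InitData | None = None

    def handle(self, msg_name: str, payload: object) -> None:
        if msg_name == "init" and isinstance(payload, InitData):
            self.config = payload
        else:
            raise ValueError(f"LoggerActor cannot handle {msg_name!r}")


# Hypothetical stand-in for "load class from disk by name": filled by the deployment,
# so the parent actor's code never references LoggerActor directly.
AVAILABLE_ACTORS: dict[str, type[Actor]] = {"Logger": LoggerActor}


def spawn(actor_name: str, init: InitData) -> Actor:
    """Launch a child by name, then configure it with an init message instead of direct access."""
    try:
        actor_cls = AVAILABLE_ACTORS[actor_name]
    except KeyError:
        # The cost of loose coupling: a typo is only caught at run time.
        raise LookupError(f"no actor named {actor_name!r}") from None
    child = actor_cls()
    child.handle("init", init)
    return child


child = spawn("Logger", InitData(channel="ai0", rate_hz=10.0))
```

The parent only depends on `InitData` and the name string, so it can be tested with a registry containing a stub actor.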

AOP architecture issues and options

John Lokanis replied to John Lokanis's topic in Application Design & Architecture

My goal is to make a better AOP architecture that decouples actors so they can be built and tested separately from the overall application. IDE performance, execution speed and code complexity are just some of the areas that might benefit or suffer from a move away from command-pattern messages. I am hoping to get either a 'stick with command pattern, it is really the best and worth the issues' or a 'dump it and go with the more traditional string-variant message because it is the best way in LabVIEW'. And I want to be sure I can truly decouple when using string-variant messages, and to know what pitfalls and best practices exist for spawning child actors and for having a mix of common and specific messages. So, I appreciate your thoughts on using a case structure to select which message to execute. I am not looking forward to a major refactor of the code, so I am hoping for answer #1, but the sooner I get on the right track the better, as I am about 50% of the way through this project.

Thanks for all the replies on this. After some discussions with other DEVs at NI Week, I concluded that the way I am decoupling my network messages is the only practical solution for a command-pattern based message system. And now this thread and those discussions are leading me to reconsider the command-pattern design altogether. I still find it difficult to give up on because of its benefits and the fact that NI chose it for their AF architecture. It must be good, right? Well, I have started a different thread on this issue over here if you want to continue the discussion and give feedback. I think we need to find a best practice for AOP designs if not a specific architecture. Personally, I had to go down the command-pattern road myself to truly see where it led.

-

AOP architecture issues and options

John Lokanis posted a topic in Application Design & Architecture

I am posting this in the Application Design and Architecture forum instead of the OOP forum because I think it fits here better, but admins, feel free to move the thread to the appropriate spot. Also, I am posting this to LAVA instead of the AF forum because my questions relate to all Actor-Oriented Programming architectures and not just AF.

Some background: I looked at AF and a few other message-based architectures before building my own. I stole liberally from AF and others to build what I am using now. But I am having a bit of a crisis of confidence (and several users, I am sure, will want to say 'I told you so') in the command-pattern method of messaging. Specifically, in how it binds all the actors in your project together via dependencies.

For example: if Actor A has a message (that in turn calls a method in Actor A) to create an instance of Actor B, then adding Actor A to a project creates a static dependency on Actor B. This makes it impossible to test Actor A in isolation with a test harness. The recent VI Shots episode about AOP discussed the need to isolate actors so they could be built and tested as independent 'actors'. If Actor B also has the ability to launch Actor C, then Actor C also becomes a dependency of Actor A. And if Actor B sends a message to Actor A, then the static link to that message (required to construct it) will create a dependency on Actor A for Actor B. So, the end result is that adding any actor in your project to another project loads the entire hierarchy of actors into memory as dependencies and makes testing anything in isolation impossible. This is the effect I am seeing. If there is a way to mitigate or remove this issue without abandoning command-pattern messaging, I would be very interested. In the meantime, I am considering altering my architecture to remove the command-pattern portion of the design.
One option I am considering is to have the generic message handler in the top-level actor no longer dispatch to a 'do' or 'execute' method in the incoming message class, but instead dispatch to an override method in each actor's class. This 'execute messages' method would then have a deep case structure (like the old QMH design) that would select the case based on message type, and then in each case call the specific method(s) in the actor to execute the message. I would lose the automatic type handling of objects for the message data (and have to revert to passing variant data and casting it before I use it), and I would lose the advantages that dynamic dispatch offers for selecting the right message execution code. I would have to maintain either an enum or a specific set of strings for message names in both the actor and all the others that message it. But I will have decoupled the actor from the others that message it. I think I can remove the launch dependency by loading the child actor from disk by name and then sending it an init message to set it up, instead of configuring it directly in the launching actor.

I guess I am wondering if there are other options to consider. Is it worth the effort to decouple the actors, or should I just live with the co-dependency issues? And how does this affect performance? I suspect that by eliminating all the message classes from my project, the IDE will run a lot smoother, but will I get a runtime performance boost as well? Has anyone built systems with both types of architectures and compared them? I also suspect I will get a benefit in readability, as it will be easier to navigate the case structure than the array of message classes, but is there anything else I will lose other than the type safety and dispatch benefits? And does anyone who uses AF or a similar command-pattern-based message system for AOP want to try to defend it as a viable option?
I am reluctant to give up on it, as it seems to be the most elegant design for AOP, but I am already at 326 classes (185 are message classes) and still growing. The IDE is getting slower and slower, and I am spending more and more time trying to keep the overall application design clear in my head as I navigate the myriad message classes. I appreciate your thoughts on this. I think we need to understand the benefits and pitfalls these architectures present so we can find a better solution for large LabVIEW application designs.

-John
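The 'execute messages' override with a deep case structure, proposed in this post, could be sketched in Python as follows. The names (`HeaterActor`, `SetSetpoint`, `Enable`) are hypothetical; the `if`/`elif` chain stands in for the LabVIEW case structure, and the `isinstance` check stands in for casting variant data. It shows both trade-offs named above: senders only need the message name string, but bad payload types and unknown names are caught only at run time.

```python
from typing import Any


class BaseActor:
    """Generic framework side: every incoming message is (name, untyped payload)."""

    def execute_message(self, name: str, payload: Any) -> None:
        raise NotImplementedError  # each concrete actor overrides this


class HeaterActor(BaseActor):
    def __init__(self) -> None:
        self.setpoint = 0.0
        self.enabled = False

    # Actor-specific methods that do the real work.
    def set_setpoint(self, value: float) -> None:
        self.setpoint = value

    def enable(self) -> None:
        self.enabled = True

    def execute_message(self, name: str, payload: Any) -> None:
        # The "deep case structure": select on the message name string...
        if name == "SetSetpoint":
            # ...then cast the untyped payload before use (the lost type safety).
            if not isinstance(payload, (int, float)):
                raise TypeError(f"SetSetpoint expects a number, got {type(payload).__name__}")
            self.set_setpoint(float(payload))
        elif name == "Enable":
            self.enable()
        else:
            # Default case: a misspelled or unsupported name is a runtime error.
            raise ValueError(f"unknown message {name!r}")


heater = HeaterActor()
heater.execute_message("SetSetpoint", 42)
heater.execute_message("Enable", None)
print(heater.setpoint, heater.enabled)  # 42.0 True
```

No message classes exist here, so senders depend only on the string names; the price is that the name list must be kept in sync by hand on both sides.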

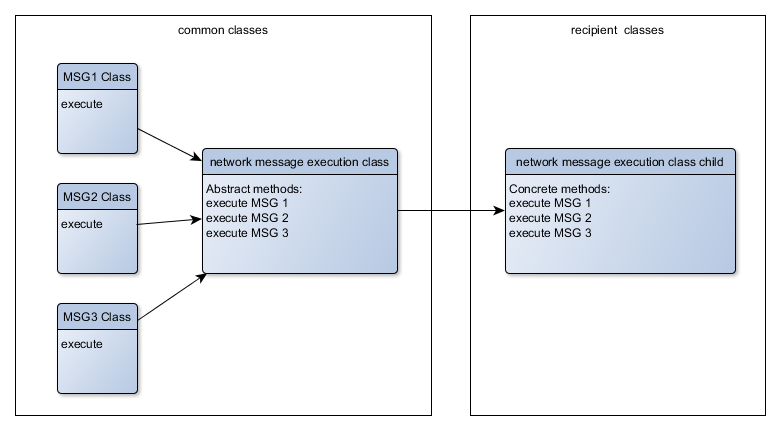

Thanks. Your example is actually similar to what I am doing. I will try to find some time to build an example to post. The real project is already well over 2000 VIs and not something I could share here.

To answer your question, my architecture differs from AF in that I have a singleton object wrapped in a DVR that represents the communication bus, allowing my processes (actors) to communicate. This makes it a flat message system and not a hierarchy like AF. To add network messaging to a project, you can instantiate the system using a child class of the system object that adds the ability to store the local implementation of the 'network message execution class'. Since the architecture has no idea what messages the project will need to execute, the variable in the system class holds the top-level parent 'network message execution class', which has no methods. The project-specific version that contains the abstract methods is a child of this class. So, when we write the execution code in the message, we read the variable (which is of the top-parent type), but cast it to the child with the abstract methods. This then allows us to call the specific abstract method for this message. Now, if this is executed in a specific system where the variable has been set to the grandchild class with the concrete implementation of the execute methods, dynamic dispatch will call the grandchild method and we will get the desired execution behavior. I just read that three times, and I think it is as clear as I can make it. Sorry if it is not.

No worries. I kinda follow your thinking (I think). I will have to ponder this some more.
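The parent/child/grandchild arrangement described above can be sketched in Python to make the cast-then-dispatch step concrete. All names are hypothetical: `System.execution` stands in for the variable on the DVR-wrapped system object, `abc.ABC` for a LabVIEW class with must-override methods, and the `isinstance` check for LabVIEW's To More Specific Class. The message class references only the top parent and the project-specific child, never the server's grandchild.

```python
from abc import ABC, abstractmethod


class NetMsgExecution:
    """Top parent (framework): no methods, just a type for the stored variable."""


class ClientServerExecution(NetMsgExecution, ABC):
    """Project-specific child: one abstract method per message on this link."""

    @abstractmethod
    def handle_request_data(self, query: str) -> None: ...


class ServerExecution(ClientServerExecution):
    """Grandchild that exists only in the server app; holds the real, statically linked code."""

    def __init__(self) -> None:
        self.handled: list[str] = []

    def handle_request_data(self, query: str) -> None:
        self.handled.append(query)


class System:
    """Stand-in for the singleton system object; stores the implementation as the top-parent type."""
    execution: NetMsgExecution = NetMsgExecution()


class RequestDataMsg:
    """Message class shared by both apps; knows only the parent and the child."""

    def __init__(self, query: str) -> None:
        self.query = query

    def execute(self) -> None:
        impl = System.execution
        # Equivalent of casting to the more specific child: fails if the wrong class was stored.
        if not isinstance(impl, ClientServerExecution):
            raise TypeError("no ClientServerExecution implementation registered")
        impl.handle_request_data(self.query)  # dynamic dispatch lands in the grandchild


# Server app init: store its grandchild in the variable.
server_impl = ServerExecution()
System.execution = server_impl
RequestDataMsg("lot 42").execute()
print(server_impl.handled)  # ['lot 42']
```

A client app would ship the same `RequestDataMsg`, `NetMsgExecution` and `ClientServerExecution`, but not `ServerExecution`.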

-

I should have titled the original thread 'Decoupling LVOOP class-based message systems that communicate across a network', or perhaps been even more generic and asked: 'How best to decouple a class from its implementation?' As stated, I am not interested in changing the whole architecture and abandoning message classes. For the most part, they work very well and make for efficient and clean code. But every architecture has its issues. And serialization (as far as I understand it) really does not help, because you still need the class on both ends to construct and execute the message. I did not intend to jump down anyone's throat, but if you re-read your responses, they seem a bit pushy instead of being helpful for solving the problem. I would prefer to focus on the OOP decoupling problem and solve that, rather than 'pull out all the nails and replace them with screws'.

-

Yup. I should have linked to that old thread. I am revisiting my solution in hopes of finding ways to improve it. If I hard-wire it in the parent method of the message, then it is statically linked again. By setting it at runtime in an init routine, I can break the static link. Here is how it works:

I have a parent class of type 'network message execution class'. This is part of my architecture. It has no methods; it just exists to give a type for my implementation variable storage and to inherit from. For each app-to-app communication path I need, I create a child of 'network message execution class'. This child has only abstract methods, one for each message that App A and App B might send to each other. I then create a grandchild in App A that just overrides the methods for each message App A can receive. I do the same in App B. So, I now have a parent, a child and two grandchildren. Only the grandchildren have code in their methods that statically links to other methods within their respective app. Both apps need a copy of the parent and the child, but only App A has a copy of the grandchild for App A. On init, I store an instance of the grandchild in a variable (of my generic parent type 'network message execution class') in each app.

Now say App A wants to send message class X to App B. App A and App B both need a copy of message class X. App A uses the class to construct the message (sets the values of its private data) and then sends it over the network (via some transport). In the execute method of message class X (Do.vi for AF people), I access the local grandchild from the variable, but cast it as the shared child with the abstract methods for each message. I then call the specific (abstract) method in the child class for message X. At runtime this is dynamically dispatched to the override method in the grandchild that is actually on this wire (from the variable I stored), and I get the desired behavior executed in the receiver (in this case, App B).
I hope that was not too cumbersome to read through. If you refer to my original diagram, it might make more sense. As for why all this variable stuff: I am trying to make a generic architecture where you can create different message execution implementations for each project. So the architecture just supports a generic execution class, but the specific one with the abstract methods is customized to the project. I also store this in a variant attribute, so when the message accesses it, it can choose from several by name. That way I can support multiple separate message groups between App A and Apps B, C, D, etc.

That is exactly what I am doing. So maybe my implementation is the best solution, but I was hoping for something simpler. I would like to have fewer pieces if possible. As it is now, for each message I want to add, I need to:

1. Create the message from my network message template.
2. Add the abstract method to the 'network message execution class' child.
3. Edit the message execute code to call the new abstract method.
4. Override the abstract method in the grandchild class of the receiver and implement the specific actions the message recipient is required to do.

I just have this feeling that there is some OOP trick or design pattern that I am missing that could make this cleaner.
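The variant-attribute lookup mentioned above, which lets one app hold several execution implementations keyed by link name, might be sketched like this in Python. The registry dictionary, link names and message names are hypothetical stand-ins; comments mark where each of the four per-message steps shows up. A link with no registered implementation fails at lookup time rather than at load time.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import TypeVar


class NetMsgExecution:
    """Framework top parent: no methods, just a common type for the registry."""


class AtoBExecution(NetMsgExecution, ABC):
    """Child for the A<->B link (step 2 adds one abstract method per message)."""

    @abstractmethod
    def on_start_test(self, test_id: int) -> None: ...


class AtoCExecution(NetMsgExecution, ABC):
    """A second, independent message group for a hypothetical A<->C link."""

    @abstractmethod
    def on_log_line(self, text: str) -> None: ...


# Stand-in for the variant attribute: link name -> stored implementation.
EXECUTION_REGISTRY: dict[str, NetMsgExecution] = {}
T = TypeVar("T", bound=NetMsgExecution)


def register(link: str, impl: NetMsgExecution) -> None:
    EXECUTION_REGISTRY[link] = impl


def lookup(link: str, expected: type[T]) -> T:
    """Fetch the implementation for a link and 'cast' it to the expected child type."""
    impl = EXECUTION_REGISTRY.get(link)
    if not isinstance(impl, expected):
        raise LookupError(f"no {expected.__name__} registered for link {link!r}")
    return impl


class StartTestMsg:
    """Step 1: message class shared by App A and App B."""
    LINK = "A<->B"

    def __init__(self, test_id: int) -> None:
        self.test_id = test_id

    def execute(self) -> None:
        # Step 3: the execute code calls the abstract method for this message.
        lookup(self.LINK, AtoBExecution).on_start_test(self.test_id)


class AppBExecution(AtoBExecution):
    """Step 4: grandchild that exists only in App B and holds the real work."""

    def __init__(self) -> None:
        self.started: list[int] = []

    def on_start_test(self, test_id: int) -> None:
        self.started.append(test_id)


app_b = AppBExecution()
register("A<->B", app_b)  # done once during App B's init
StartTestMsg(7).execute()
print(app_b.started)  # [7]
```

Each link only needs its own child class and registry entry, so an app that never talks to C never needs `AtoCExecution`'s messages beyond the shared class itself.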

-

I knew it was a bad idea to give a specific example in a discussion like this. Inevitably, someone would read way too much into it and make a ton of unfounded assumptions that go off on a tangent away from the original topic of the thread. So, to quickly answer your question: sometimes the DB is on the other side of the planet and is not accessible to be queried by the client. Sometimes there is no DB, but rather a few XML files on a file server or on the machine the LV-based server app is running on, and those are not accessible to the client. And the client has no need to know the source of the data. It just needs a copy of the data so it can fulfill its role as the VIEW in this MVC architecture. Without a full understanding of the requirements of a system, suggesting solutions completely outside the context of the thread's topic is not helpful.

And as for the string-based message solution, as I stated before, that is a different solution with its own set of complications. I have chosen class-based messaging for the benefits it offers, like easy message construction without using variants or flattened strings, and automatic message execution without having to decode and select the implementation. Thanks to inheritance and dynamic dispatch, this makes for a nice clean implementation, with the drawback of strong coupling when crossing the network. If anyone has ideas relevant to decoupling an LVOOP class in a cleaner or simpler way than I proposed, please share your thoughts. But let's keep this thread on topic and not degenerate it into a comparison of architecture styles. We can start a different thread for that elsewhere if others wish to discuss it.

-

This might be a helpful link to check out and to record your experience while in Austin for NI Week: http://www.hotelwifitest.com/hotels/us/austin/

-

Well, maybe I am doing things the *wrong* way, but the data sent between 'actors' in my systems is almost always a class. For example: my server loads a hierarchical set of data from a database and stores it in a class that is a composition of several classes representing the various sub-elements of the data's hierarchy. When a client connects to the server, the server needs to send this data Y to the client so it can be formatted and displayed to the user. So, both will need the ability to understand this Y data class. And the client's BB class must accept this Y class as input (normally by having it be an element of the message class's private data). Now, I suppose I could flatten the class on the server side and send it as a string using the generic CC class; then on the client side I could write the BB class to take a string data input so the CC class could pass the data to the child 'do' method in the BB class. But at that point I would have to unflatten the string to the Y data type so it could be used in the client. How is this any better than the old enum-variant cluster message architecture? You still need to cast your data; you are just casting classes instead of variants. One of the advantages of class-based message architectures was the use of dynamic dispatch to eliminate the case selector for incoming messages, and the use of a common parent message class so all the different class data could ride on the same wire and you would never have to cast it in your 'do' methods.

There is another advantage to keeping the data in its native format. I can send the same message using the same functions to an external application or an internal 'actor', and the sender does not need to know the difference. The architecture will automatically send it to the right destination, using the right method for sending it based on how I have set up my application. This makes it very easy to break an application apart into two separate entities with very few code changes.
But I think we are getting away from the original question. I accept the fact that there are other ways to send messages across a network that do not use classes or that convert the data into a string and use some other method than class based messaging to send the message. But if I REALLY want to stick to class based messaging, is there a better or simpler way to decouple a message from its sender and receiver than the way I outlined in my original post? So far, my method is working for me, but I would love to find a way to re-factor it to something simpler.
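The point about flattening can be illustrated with a small Python sketch, using JSON as a stand-in for LabVIEW's Flatten To String / Unflatten From String and hypothetical `TestPlan`/`Step` dataclasses as the composed 'data Y'. The string travels through a generic message without trouble, but the client still has to know both classes to rebuild typed data: the cast has moved, not disappeared.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass
class Step:
    name: str
    limit: float


@dataclass
class TestPlan:
    """Hypothetical 'data Y': a composition of smaller classes."""
    title: str
    steps: list[Step]


def flatten(plan: TestPlan) -> str:
    """Server side: produce a generic string payload a generic message class can carry."""
    return json.dumps(asdict(plan))


def unflatten(text: str) -> TestPlan:
    """Client side: still has to know TestPlan and Step to rebuild typed data."""
    raw = json.loads(text)
    return TestPlan(title=raw["title"], steps=[Step(**s) for s in raw["steps"]])


plan = TestPlan("Lot 42", [Step("Vcc", 5.1), Step("Icc", 0.25)])
wire = flatten(plan)
print(unflatten(wire) == plan)  # True
```

Both `flatten` and `unflatten` reference the same two classes, so the sender and receiver remain coupled to the data definition even though the message wrapper is generic.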

-

OK, but the data Y could be a data class and could be specific to msg class BB, so even though the server does not need a copy of BB, it still needs a copy of Y. But I see your point. You are decoupling by using the class name in text format to select how to execute received data. You still need to come up with a way to package data Y (and X and Z, etc.) on the server side, since each unique message has the potential to have unique data types. And I don't see how to make CC as generic as you indicate. I understand that CC can load an instance of class BB by name from disk and then call its do method, but how can it translate the data from a generic type to the type Y that BB requires as input without having some way to select the class Y to cast it to?
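One way to make the question concrete: a generic 'CC' receiver that gets a message name plus generic data still needs a table mapping each name to both its handler and its payload type. In this hypothetical Python sketch (`HANDLERS`, `LotSummary` and `show_lot_summary` are illustrative names, and JSON stands in for the generic wire format), that table is exactly where the "select the class Y to cast it to" step ends up. It is one possible answer, not the design suggested in the thread.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class LotSummary:
    """Hypothetical 'data Y' that BB needs as typed input."""
    lot: str
    count: int


shown: list[LotSummary] = []


def show_lot_summary(data: LotSummary) -> None:
    """Stand-in for BB's do method on the client."""
    shown.append(data)


# The generic receiver (CC) still needs this table: name -> (payload type, handler).
HANDLERS: dict[str, tuple[type, Callable[[Any], None]]] = {
    "BB": (LotSummary, show_lot_summary),
}


def cc_receive(envelope: str) -> None:
    """Decode a generic envelope, rebuild the typed payload, then call the handler."""
    msg = json.loads(envelope)
    payload_type, handler = HANDLERS[msg["name"]]  # KeyError for unknown names
    handler(payload_type(**msg["data"]))           # the "cast" from generic data to Y


cc_receive(json.dumps({"name": "BB", "data": {"lot": "42", "count": 3}}))
print(shown)  # [LotSummary(lot='42', count=3)]
```

CC itself stays generic, but whoever fills `HANDLERS` must know every payload type, so the coupling to Y is relocated into that registration step.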

-

Thanks but that defeats the goal of "retaining the functionality that class based message architectures offer". I realize there are many ways to implement a message based architecture and each has its trade offs. Since I am working with class based messages, I want to solve the problems that this architecture poses. I actually like the benefits it has so I plan to stick with it for now.

-

Goal: Find the best methods (or at least some good options) for message decoupling that are simple to implement and efficient to execute.

Background: Messaging architectures in LabVIEW that use a class for each unique message type are strongly coupled to the recipient process (aka ‘Actor’). This is due to the need, within the message execution code (a method of the message class), to access the private data of the recipient process or to call methods in the recipient process in order to do the work that the message intends. (See Actor Framework for an example of this.) The problem arises when you wish to send these messages across a network between two separate applications. In most cases, these applications are not duplicates of each other, but rather serve completely separate purposes. An example would be a client and a server. The client needs to send requests to the server and the server needs to push data to the client. For a process to send a message, it needs to package the inputs in the private data of the message class and then transmit it via the network transport (which can be implemented in multiple different ways and is not material to this discussion). In order to construct the message, the sender will need a copy of the message class included in its application. This means it will also need to load the class of the message recipient, since that class is statically linked to the message class within the method that executes the message. And since that will trigger many other class dependencies to load, the end result is that the majority of the classes in the recipient application will need to be included in the sending application. This is not an optimal solution. So, we need to find a way to decouple messages from their recipients but still be able to execute them.
My solution: For each message that needs to cross the network, I create a message class whose execute method calls an abstract method in a separate class (call this my network message execution class). Both the sender and the recipient have a copy of these message classes and the network message execution class. Inside the message class’s execute method, I access a variable that stores an instance of the network message execution class and then call the specific abstract method in the network message execution class for this particular message. In each recipient application, I create a child of the network message execution class and override the abstract methods for the messages I intend to receive, placing the actual execution code (and static links to the recipient process) within the child class methods. Finally, when each application initializes, I store its child network message execution class in the aforementioned variable so it can be used to dynamically dispatch to the actual method for message execution.

The advantages of this are: messages are decoupled between my applications.

The disadvantages are: for each message I wish to transmit, I must create a new message class, a method in the network message execution class and a method override with the real implementation in the recipient’s network message execution class child, and then edit the message class to call the method in the network message execution class. This also means that each application must have a copy of all the message classes passed between applications and of the network message execution class. The problem arises when you add a third or fourth application, or even a plugin library, to the mix and wish to decouple those from the other applications.
You must either extend the current set of messages and the abstract methods in the network message execution class, having each entity maintain a copy of all of the messages and the network message execution class even though they never send or receive most of those messages, or you must add additional variables to your application to store different implementations of the network message execution class for each link between entities. So, now that you have read my long explanation, does anyone have ideas for a better way to solve this? I would love to simplify my code and make it easier to maintain, while retaining the functionality that class based message architectures offer. But decoupling must be addressed somehow.

-

Given the occurrence of this problem year after year, I think you give them too much credit. I would bet that track and level overlap is not even a criterion for scheduling. Personally, I think they should post the session list much earlier and let everyone choose the sessions that interest them. Then simply apply something like the traveling salesman algorithm to optimize the session times so that the fewest people have the fewest conflicts. That's what computers are for, after all...

-

A few interesting points: The mobile app will not allow you to add personal events (meetings, LAVA BBQ, etc.), but the full web version will. The full web version will not allow you to schedule more than one event at the same time, but the mobile one will. The full web version will not allow you to schedule a personal event that takes up part of another event (like one that runs into lunch). Kinda annoying overall. As for the sessions, I have been able to book up most of Tues and Weds, but on Thurs I have at least 3 sessions I want to attend in each time slot. Why do they do this every year? For each track, they should spread out the sessions in the intermediate and advanced categories so there is as little overlap as possible!

-

Thanks! Would it kill the web guys to put that on the main page somewhere? I just accidentally discovered I can get to it by attempting to re-register. Not very intuitive...

-

Ok, how do you get to the regular site? The email I got only had links to get the app or access the mobile version via web. That version does not have the export feature. And I do not see a link on the NI main site.