-

Posts

798 -

Joined

-

Last visited

-

Days Won

15

Content Type

Profiles

Forums

Downloads

Gallery

Posts posted by John Lokanis

-

-

Thanks for the trick. I will try that next.

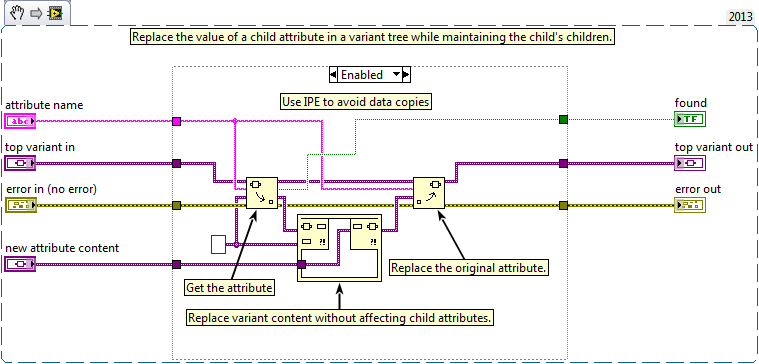

I just tried the re-factor using the IPE instead of the for loop to preserve the child attributes. The result is a 31% DECREASE in performance. I must admit I am very surprised by this.

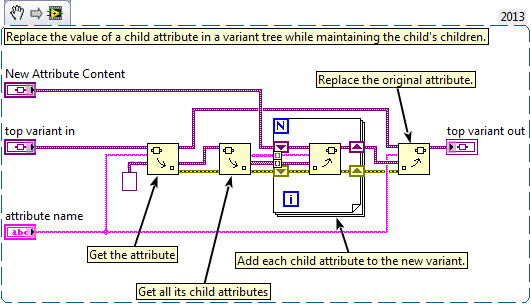

Assuming I did this right, I think your other solution sounds better. I'll post my results once I complete the edits...

-

Thanks for the tips! I will see if I can re-factor my code to avoid the copy. I do have a few questions:

1. Using the IPE, it appears that I still need to do the copy to extract the first attribute. But it does eliminate the need to reattach the children of the attribute.

2. If I move the node data to an attribute, there would be no need to use the IPE as far as I can see.

3. I often extract all child attributes from one of the top attributes and then perform an action on each of those. If the node is now an attribute, I would need to remove that item from the array of attributes before I process them. There are two ways I can think of doing this. The first is to test the attribute name inside the process loop and skip the one named 'NodeContent'. The second is to search the array of attribute names for the element named 'NodeContent' and then delete it from the array before I process the array in a for loop. Any opinion on which solution would be faster? I expect most attribute arrays to have less than 100 elements.

thanks again.

-John

-

Does anyone know a faster way to accomplish this? The profiler marks this as one of my top hits for VI time. I wish I could speed it up but I cannot think of a better way.

Thanks for any ideas. (Even if that idea is to not use variant trees for storing data. But if so, please offer a faster data store method.)

-John

-

Are you sure you meant to say "turn on icon view for terminals" and not to "turn off icon view for sub-vis"?

I honestly cannot see any benefit to making the terminal take up more space. I do agree with your point of spreading things out and leaving room to grow if necessary in the future.

But, I have seen benefit to turning off icon view for a sub-vi on occasion. Especially for situations where you cannot keep the con pane down to 4224. But now with LVOOP, that seems to be less of a problem.

I do agree that accessors have no good reason to have FPs. For that matter, they really don't even need BDs. Maybe I am in the minority but I never edit 99.9% of the ones I auto-create and they mainly mess up my VI analyzer tests because the scripting that creates them does not deal well with long class names. (terminal labels tend to overlap the case structure)

Seriously, icon view terminals? The next thing you will suggest is using Express VIs!

-

1

1

-

-

I am not sure I would want to support the idea of eliminating the FP from sub-vis. While I agree that it's layout does not serve any functional purpose in the code and is just another time consuming step to deal with, i feel it still has value as a unit test interface. That is one of the best things about LabVIEW that text based languages lack. So, Jack, if you want to kill off the FP, you need to tell me how to debug my sub-vis just as easily as I can now.

I would support some way to auto arrange the FP in a sensible way. One thing I would love to see is the controls being limited to the visible space on the FP. For example, when you copy some code containing control terminals from one VI to another, those new controls should be placed within the visible portion of the destination VI's FP. Currently, I often have to hunt down where they landed far off screen and move them back into place.

I think we have a pretty solid basic agreement on sub-vi FP layout. I would be satisfied if we could simply designate a VI as a sub-vi when we create it and then the IDE would auto-place/move controls to meet those guidelines. The rules I follow are:

- All controls must be visible on the FP (within the current boundaries. No scrolling allowed)

- Controls must be laid out to match the connector pane orientation.

- Error clusters are always in the lower left/right corners.

- When possible, references are passed in/out the upper left/right corners.

- All controls snap to the grid (I use the graph paper grid) with at least one grid block separation.

- There is a minimum margin of one grid block around the perimeter of the FP.

- The FP is positioned so the origin is precisely in the upper left corner.

Bonus things I do:- The minimum size of the FP is set so the entire toolbar is visible. Even if this means a lot of white (grey) space on the FP.

- The VI description is copied to the FP in a comment block at the bottom of the FP.

Even if we just had some scripting example to accomplish most of this that would be a step forward. I know many people have made their own version. I would prefer an official solution so we could have some continuity between developers.

-

Thanks for the thread-jack guys.

I seem to have triggered some deeply suppressed feelings about LabVIEW.

Back on topic: I just hope someone at NI can get to the bottom of this and fix it in the next release. (I did report this to tech support)

-

I am not sure I can totally prove this but here is what I have seen:

In a large project built in LV2012 and then loaded into LV2013, I recompiled and saved all files.

Now every time I open the project, a bunch of VIs want to be saved because they claim a type def was updated. I go ahead and save them then close the project and reopen it. Again the same VIs want to be saved. There seems to be no way to get them to save the change once and for all. (Oh, and of course it never says WHICH type def changed!)

After fighting this for weeks I finally opened the project and then opened one of the types defs used in one of the files wanting to be saved. I nudged the control by one pixel and saved the type def. I then saved the calling VI. I closed and reopened the project and now the number of VIs wanting to be saved was smaller (and the one I 'fixed' was not among them).

I then repeated this process with every type def in the project and saved (individually) each calling VI.

Finally, now when I open the project there are no VIs that want to be saved.

So, my theory is when LabVIEW converts from LV2012 to LV2013, it changes the type def files and triggers the need to save the calling VIs but for some reason that change is not saved in the type defs so each time I open them, they again convert from LV2012 to LV2013 and re-trigger the change. By editing them I forced something to permanently alter them into LV2013 type defs and that fixed the issue.

Anyone else see something similar to this?

ps. I have all code set to "separate compiled code from source file".

-John

Oh, and 'mass compile' did not fix this issue.

-

Love this topic. I have been doing this since the first day I started programming in LabVIEW. I am willing to bet 20% of my coding time has gone to perfecting my BD. But I don't see it as OCD. Just like text programmers will create elaborate function comment blocks using characters to make a pretty box around them and aligning the text inside, I see a well laid out diagram as a means of communicating the operation of the code efficiently. I prefer to position things so the flow is as obvious as possible. I never run wires under objects (unlike *SOME* frequent LAVA posters I know

). I stick to 4224 connectors but I will occasionally convert one to 4124 or 4114 to allow a connection to come in from either the top or bottom, if it makes the diagram cleaner. And I do like to align object in multiple cases. Usually you get this for free when you copy one case to make alternate versions but if it gets out of alignment, I will toggle back and forth to find the issue and nudge it back.

). I stick to 4224 connectors but I will occasionally convert one to 4124 or 4114 to allow a connection to come in from either the top or bottom, if it makes the diagram cleaner. And I do like to align object in multiple cases. Usually you get this for free when you copy one case to make alternate versions but if it gets out of alignment, I will toggle back and forth to find the issue and nudge it back.One thing I do that I am not sure others do is the wires entering and leaving the side terminals must approach and leave on the horizontal axis. I never allow a wire to connect to the sub-vi from the top or bottom unless it is going to a central terminal. The same goes for all structures. I never have wires enter or leave from the top of bottom. I will add an extra bend in necessary to achieve this.

Embrace your OCD. It makes you a better wire-worker!

-

This just saved me again with a single column listbox. Thanks again Darren!

(Please lobby to make this public in the next version of LV)

-

Under VI Properties >> Window Appearance, is "Show front panel when loaded" checked?

Ok, this is really weird. Yes it is. And I never set that. But what is really strange is it is grayed out and I cannot figure out why or how to unselect it.

-------------------------------------

Ok, sorted it out. If you accidentally select "Top level application window" and then customize it, you will get the the "Show front panel when loaded" checked and grayed out. If you instead select "Dialog" or "Default" first and then customize, you will get the "Show front panel when loaded" unchecked but still grayed out. Seems like there is no option that lets you control this manually. That could be a bug. I don't recall this being always grayed out in the past.

As for the 66 VI that always want to be saved, that looks like an unrelated problem with this project.

-

2

2

-

-

I am seeing some strange behavior each time I open a certain project in LV2013. For some reason a VI I was working on recently always opens its front panel when the project is opened. And it shows a broken arrow for a few seconds while the cursor is in a busy state. Then, the arrow changes to unbroken. I close the VI, I do a save all (and LV saves 66 VIs for some reason) and then I close the project. When I reopen the project, it does the exact same thing.

Has anyone see this type of behavior before? Is this due to something I did or is it a bug? Any fixes/workarounds?

i have not contacted NI yet since this could be my fault and it only happens with a certain project and only recently (I have been using LV2013 for awhile now without this issue).

thanks for your input.

-John

-

New idea about class renaming and labels:

-

Added a new idea to the exchange relating to the issue I was trying to address. Please kudo it!

-

I'm working on a large project and have run into a quandary about how to best deal with a large shared data set.

I have a set of classes that define 3 data structures. One is for a script read from disk that the application executes. Another is the output data from the program, generated as the script is executed. The last is a summary of the current state of execution of the script. The first two can be significant in size and are unbounded.

In my application, I have one actor (with sub actors) responsible for reading the script from disk, executing it and collecting the data. It also updates the summary status data. Let's call this the control actor. I have another actor that takes the data and displays it to the user, allowing them to navigate through it while the script is being executed. Lets call this the UI actor. The last actor is responsible for communicating the summary to another application over the network. We will call this the comm actor.

So, the control actor is generating the data and the other two are consumers of the data. I had originally thought to have all the data stored as state data in the control actor and then as it is updated/created just message it to the other actors. But then they would essentially have to maintain a copy of the data to do their job. This seems inefficient. Then I thought I could wrap the data classes in DVRs and send the DVRs to the other classes. That way they could share the same data. The problem with that is they would not control when their data gets updated. And I am violating the philosophy of actors by creating what is essentially a back channel for data to be accessed outside of messages. Also, I could block the 'read' actors when I am writing to the DVR wrapped class. I would have to be careful when updating subsections to lock the DVR in an in-place structure to do the modify and write. Then comes the question of how to best alert the readers to changes made to the data by the writer. Simpy send them a message with the same DVRs in it? Or send a data-less message and have them look at the updated values and take appropriate action?

So, any best practices or thoughts on how to resolve this issue? I appreciate any feedback..

-John

-

Thanks! That did it. There are so many helpful things hidden away in vi.lib...

Here is my cleanup VI, if anyone else needs to do the same.

-

1

1

-

-

I am trying to automate some housekeeping tasks and have run into a problem.

I decided to rename some classes in my project. In several VIs, I place those classes as block diagram constants. I usually display their labels when I do this for readability. Unfortunately, when I rename the class, the labels do not update. (Fixing this would be a nice Idea Exchange feature).

So, I decided to write a little scripting VI to go though my BDs and find all objects of type LabVIEWClassConstant and then extract their new class name and use it to change the label. I have done this in the past with front panel controls successfully by using the TypedefPath property to get the class name.

So, I traversed the BD and returned an array of LabVIEWClassConstant refs. These were returned as GObjects so I had to cast them to LabVIEWClassConstant type before I can access the TypedefPath property. But when I do this, the value returned from the TypedefPath is empty. I am guessing this is because the cast loses the object's identity and makes it a generic LabVIEWClassConstant.

So, now am stuck. Unless I find a way to get the actual class name, I cannot fix the label and must go manually fix it on every BD.

I think I am about to add VI Scripting to the realm of regular expressions. In other words, if you have a problem and you decide to solve it using VI Scripting, now you have two problems.

thanks for any ideas or pointing out obvious mistakes/misconceptions I may have made...

-John

-

I have created projects that would crash or warn on exit in multiple versions of LabVIEW. The most recent one turned out to be a corrupt 3rd party class file with bad mutation history. There is now a CAR to address it. So, be careful labeling version A or version B stable or unstable. It might be bad data in your source files.

I have been able to build and release applications with every version going back to when the application builder was first introduced that have been stable. It all depends on what features you choose to use and what extras you bring in. I have always found the core functionality of LabVIEW to be stable.

You may still run into some issues at the fringe, but NI has always been good about tracking and fixing issues in a timely manner, in my opinion. Much better that the majority of other software tool vendors.

-

No, I'm saying the MHL sends a message to the helper loop telling it what step to execute.

MHL: Do step 1. Here are the data you need.

HL: I finished step 1. Here are the results.

MHL: <checks results and figures out the next step> Do step 2. Here are the data you need.

HL: I finished step 2. Here are the results.

MHL: <checks results and figures out the next step> Do step 8. Here are the data you need.

etc.

In the presentation I said any time I drop a MHL I consider it another actor. That's another simplification. I only call a MHL an actor if it has the characteristics I consider essential for agile actors--atomic messages (no sequential dependencies) and always waiting. The helper loop in this case could be a message handling loop, but it's not an agile actor because it directly executes lengthy processes.

Ok, that helps understand the role of the helper loop better. But what about external Actors wanting to have the sequencing actor perform just step 5? You would have to make a separate message just for that case, but if you made each step a message, you could internally execute them in any particular order and you could externally execute them individually.

A concrete example I am thinking of is reading a settings file. You need to read this file as part of the process when you init your application and configure your system. But what if the customer requested the ability to re-read the file while the application is running because they edited it with an outside tool and want the new settings to be applied? If that part of your initialization process was a separate message, you could execute just that part again. If your state data had a flag that indicated if you were doing a full initialization, you could use this to determine if the next step in the sequence should be called or skipped as appropriate. Your actor is still executing atomic steps. You just have the option to have it cascade to additional steps if in the correct mode.

Why is this a bad idea? You mentioned race conditions (I understand how the QMH has those) but I think this implementation would avoid them as you are not pre-filling a queue and you have the opportunity to check for for exit conditions before started the next step.

-

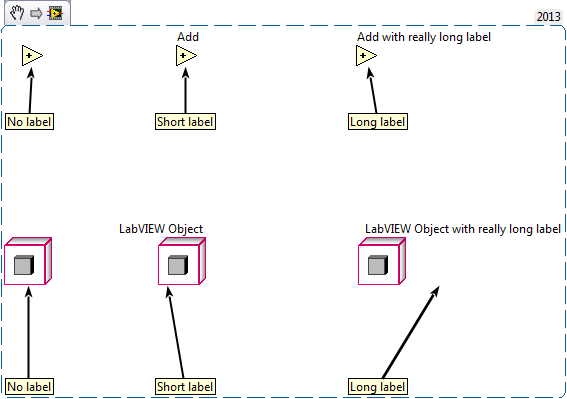

I am really liking the new labels with arrows but I think I may have found a bug. It seems that it is able to point to the diagram element correctly with primitives but if you have an object with a long label showing, it calculates the center of the item using the label and resulting in an arrow pointing in midair.

Not sure if this applies to other diagram items as I have only tried it with a few.

-

Correct.

Good. I'm glad I got one right!

I don't like having *loops* send messages to themselves. An actor may consist of multiple loops, so an actor can send messages to itself (helper loops) internally.Having a loop send messages to itself isn't inherently bad--it is possible to do it safely. I choose not to do it because it is very easy to overlook race conditions and very hard to verify they do not exist.

So, if the MHL does not trigger the next step in the sequence by sending itself a message, are you saying that the 'helper loop' should send the MHL a message to do the next step in the sequence? And if so, wouldn't that mean that the helper loop would be less useful since it would always trigger the next step even if you only wanted to repeat a single step? It seems to me that since the state data of the actor lives in the MHL loop, that it should be making the logic decisions about what step to do next in the sequence or to abort the sequence altogether if some error or exit condition has occurred.

What DB are you using? Databases support multiple connections. I don't understand why you are intent on limiting yourself to only one connection.You are making me sorry I used a database as an example. Yes, databases support multiple connections. And opening a connection and closing it for every transaction is expensive. And I understand your point about pooling connections and opening a new one if two transactions are called at the same time. You don't actually have to do that as you can execute two transactions on the same connection at the same time (the database will sort it out). But what you are missing is the real world fact that databases often stop working. They become too busy to respond because some IT guy's re-index process is running. They because unreachable because some IT guy is messing with the network routing or a switch has gone down, been rebooted or is overloaded. They disappear altogether when some IT guy decides to install a patch and reboot them. If your system is dependent on continuous access to the database, you must do everything in your power to correct or at least survive any of these scenarios as gracefully as possible.

So, let's look at your pooling idea:

Actor A requests data from the DB Actor. The DB actor spawns a helper to execute the transaction using the available connection. The database is unreachable so the helper loop closes the connection, sleeps, reestablishes the connection (the ref has now changed value) and retries the transaction. It repeats this process 10 times over a periods of 15 minutes, hoping the DB comes back. Each attempt is logged to the error log.

At the same time, Actor B requests data from the DB Actor. The DB actor adds a new connection to the pool, giving Actor B's request the new connection. A second helper is spawned to execute this request and runs into the same problem, closing the connection and then reopening and retrying. More errors pile up in the error log.

This is repeated many more times, resulting in a bunch of connections being added to the pool just so they can fail to connect, the error log is so convoluted with messages that it because nearing impossible to untangle the threads of errors, and all for what? So you can call two database transactions at the same time? Not worth it.

But I digress from the topic. The point I was trying to illustrate was an example where something other than an actor was the best solution. If you don't like my example, think of a different one. I just think your presentation would benefit from a discussion of where it is appropriate and not appropriate to use actors.

-

Ok, I am not going to quote a bunch of posts but I am going to try to respond to your points above and try to get this back on the topic of how to improve your presentation.

But first a slight deviation off topic to clarify the point about making a singleton object vs an actor:

I used database access as an example but I think this can apply to other shared resources. Here are the things I am trying to consider:

1. There is overhead to opening and closing a connection to a database. So, caching a connection is preferred.

2. A database connection reference can go stale for many reasons. Also, the database can go down and be restarted. To be immune to these situations, error handling code needs to be able to reestablish connection to the database and reattempt the execution of a database call before issuing a critical error to the rest of the system.

3. Some database operations do not require a response and therefore should not block the caller. Other operations require a response before the caller can continue and are therefore blocking. The ability to have both options is desirable.

4. Having a central actor handle all database operations can work in some messaging architectures but is problematic in hierarchical systems (like AF).

By having a object handle database communication (instead of an actor), you can call methods inline (the callers thread is used to execute the operation) when you require the response to continue the work of the caller OR you can spawn a dynamic daemon and have it call the database when you simply want to write data and do not require a response (unless there is an unrecoverable error).

By making the database object a singleton, you can reuse a connection between calls (saving the open-close overhead). This makes most sense if you anticipate making a lot of calls but not a lot of simultaneous calls. Also, by having a single object, when there is an issue that can be resolved by retries and reestablishing the connection, you block other callers while working the issue. When you unblock them, you are passing them a repaired connection or you have issued a critical stop because the database is down. Either way, you avoid the issue of multiple callers attempting to talk to a dead database and filling up the error log with redundant information. And yes, this could be achieved with an actor but then you lose the ability to inline the calls and add the need to have reply messages from the database actor. Finally, you have to break the hierarchical messaging architecture in AF to do this.

So, my point of using this example was to talk about some cases where an actor might not be the best choice. If you are designing a system and you want to use actors, there are still going to be cases where you want to use other techniques as well. Your presentation should address this in some ways. Maybe give a few examples of places where an actor is not the most efficient solution.

Ok, back to the main discussion. Making actors that do not block. I have given this some more thought and I think I now understand what you are saying but let me state it in my own words and you can confirm.

The message handler of an actor should not be blocked but the overall actor 'system' (the message loop and all helper loops) can be in a busy state.

So, if you have some process that can take an undefined amount of time (let's use the database call again as an example) then you should call that process from the helper loop of an actor and have the message handler respond with a status while the helper loop is busy. If another request comes in, it should queue that job until the helper loop is available again and (if required by your design) reply with a status (ie: I'm busy, you are in the queue). This should leave the actor always able to respond. For example, it could receive a message asking for status and response with how many jobs are in the queue.

So, to summarize If you send a message to the actor telling it to do something that takes time, it should hand that off to a helper loop and go back to listening for more messages. Lengthy processes should never be done by the message handler. One point: you sometimes say that your helper loops are like actors, but I think you need to make the point that they do not need to adhere to the rule that they are always ready to receive a message. Otherwise, they would need to be wrapped by a MHL and you start getting into that turtles reference you made earlier.

As for sequencing, I think the actor should encapsulate the sequence from the caller but I am less clear on why it is bad to call itself. For example, if you need to initialize a system with several steps, I would anticipate a design like this:

1. Actor is asked to initialize system.

2. Actor calls helper loop to perform first step of initialization and sets state data to indicate what phase of the initialization it is in.

3. Helper loop responds that first step is complete. Actor updates state and calls helper loop to perform next phase of initialization.

4. Process repeats until all steps completed. Actor responds to caller with message that initialization is complete.

At any time during the above process the caller can ask the actor what its status is and it can response with what phase of the process it is performing. This could then be used by the caller to update progress in the GUI.

In this example, it seems to me that it would make the most sense to make each phase of the initialization process a separate message that the actor responds to. That would allow the developer to easily rearrange the order in the future and it would allow the caller to request re-initialization of a single phase at a point in the future. So, that is why I thought having an actor message itself was a good idea.

Expanding your presentation to include some common real world scenarios (like executing a sequence) would be helpful. I would include a discussion of the pitfalls in this example and how to avoid them. I still think it would be best to use simple diagrams to illustrate your examples instead of actual G code.

I hope this is helpful. I know this discussion has helped me in better understanding actor programming (or at least shown me what I do not understand about actor programming!

)

) -

I see what you mean. The receiving actor has a single behavioral state, so all messages are always handled exactly the same way. Yes, you can do that for certain actors. I don't know if it is feasible to design all your actors that way. My gut feeling is eventually an actor somewhere in the app will need to handle a message differently depending on its internal state data.

It seems to be that in some cases, when a message is executed, the last step of the execution may be to execute another message (send to self) to continue some sort of sequence. This could be dependent on some state data (check if we performing a multi-step process) so that you could still execute the step independent from a sequence. Also, this gives you the ability to interrupt the process with exit messages.

It's been a long time since I've done any programming with DBs, so my terminology might be wrong and certainly some of the details on how I think they work are wrong. For this response I'll use the term "DB connection" to mean a connection to the database that can only process one operation at a time.The general solution to reuse anything expensive to create is pooling. The initial approach I'd take with a DB is to create an actor that abstracts the database. The DbActor creates a private pool of connections it uses to service the requests it receives. Internally it keeps a look up table of the connections currently executing an operation and an address where the response should be sent. When a connection returns a response DbActor looks up the associated address, sends the response to it, and returns the connection to the pool.

I would not distribute DbActor in a DVR for the reasons you mentioned. I also would not give connection objects to the other actors. One of the responsibilities of DbActor is to manage the connection pool, and if it is giving connections to others it can no longer do that.

I am really leaning towards the DVR idea. Here is my reasons why:

I can allow multiple actors access to the database without them having to message a central DB actor. They can still serialize their execution using an inplace structure. I can perform error handing and retries within the DVR class and all actors can benefit from this (if I have to drop and reconnect the DB handle, when I release the DVR, the next actor gets the new handle because it is in the class data of the DVR wrapped class).

If at some point I do not want to do a DB operation inline, I can simply alter the class to launch a dynamic actor to process the call and then terminate when complete. Since the state data is in the DVR wrapped class, It will work the same.

Regarding shutdown, when I have low level processes that might take some time to shut down, I write my shutdown logic so an actor doesn't shut down until all its sub actors have shut down. That's easy enough to do with hierarchical messaging. Aren't you using a variation of direct messaging? I've never tried doing a controlled shutdown with that, though I suppose you could as long as your actor topology has a clear hierarchy of responsibility.What good is a MHL that is always waiting if it is waiting for a child actor to shut down? It seems that it is still being blocked in that case. I just do not see a way to truly free up all actors at all times when there are processes in an application that take unknown amounts of time to execute.

I keep thinking that actor programming is somehow different from other ways of designing applications and that it is supposed to make things more adaptable and maintainable, like OOP does. But I just can't seem to wrap my head around how to do this for applications with a lot of sequenced steps. The 'actor is always waiting' and 'actor can handle any message at any time' rules just seem impossible to adhere to when there are so many preconditions that need to be met before many operations can be performed and many operations can cause blocking.

-

I'll respond to the rest as I get time, but this part jumped out at me.

NO! This is the wrong approach to take!

You must accept that you cannot--in general--prevent an actor from receiving a message it is not prepared to act on. Even if you only have two actors talking to each other. This is a fundamental truth of concurrent programming. Quit trying to break the laws of time; it's not likely to work out in your favor.

(I've written about this subject before. Search for "sender side filtering" or "receiver side filtering" and you might find something.)

Perhaps I was not being clear. What I meant was to design the system so the Actor that had the ability to tell an actor to do something with data was the one that also sent the data needed. So, in theory, you would not get in a situation where an actor get a message it could not act on because the message was designed to include the data in the first place.

But the more I think about this, the more I am unsure if this is even possible. I need an actor to have state data to act on. I need to have multiple messages that command it to do something with that state data. Somehow I need to have the actor load the state data in the first place. And I need the ability to re-load that state data in the future.

More thought required...

-

Yes, that task should be handed off to either a helper loop or a sub actor. Which one you choose depends on how much functionality that logical thread needs. If it’s just waiting for a response from the db, will forward it to your MHL, then exit, I’d say make it a helper loop. If instead it’s an abstraction of the database connection, I’d probably make it a full-blown actor.

Would you dynamically create an actor to handle each DB request and have it destroy itself after it completed and returned the results?

What if you wanted to share a DB connection, to avoid the expense of opening and closing the connection for each transaction? You could wrap the DB class in a DVR and then have each DB actor use that object. This would have the effect of serializing your DB calls (if you needed logic to do error retries and restore the connection if it goes bad). Would that be a bad implementation? (this could apply to any shared resource like a file or some hardware)

It seems to me that it might be best implemented as an actor or helper that has limited lifespan and is exclusive the caller. But, my only concern is cleanly shutting down the system if we try to exit while the DB actor/helper is in the middle of processing. If we are going to call it an actor, then it is already violating the principle that its MHL is always waiting, right?

Most of the time I design systems without having to use request-response messaging, but sometimes it is necessary. When I need a RR message I usually design them to be non-synchronous. Actor A sends the request to Actor B, optionally setting an internal flag indicating “I’m waiting for a specific response.” When a message arrives from Actor B, A checks the flag to see if it's the message he is waiting for and acts accordingly.I understand this but I am not sure I like it. I am thinking it might be better design for Actor B to send the data to Actor A along with the next step it should do. That way Actor A only knows two things: How to ask for data and how to process data when received. This sounds kinda like what you say below with your sequencer.

I can't tell you what you should do--what behavior you want that actor to have? You could discard the message and tell the sender to try again later. You could store the message in an internal buffer and process it when the first DB call returns. You can open another database connection (dynamic launching FTW) and have both queries going in parallel.

[Edit - If you don't know what you should do with the message then your system design is incomplete. Step away from the code and go back to your model. That's where you'll find the answer to your question.]

I want to design it such that an Actor is never asked to do something it is not ready to do. It seems like it might be best to divide the flow logic up between multiple actors.

You’re asking about a sequencer. Like implementing a flow chart with branching logic and whatnot, except you also want interrupts (which incidentally flow charts don’t allow) right? I’ll describe the basic approach I take to ensure there are no race conditions, but there could be alternative rule sets that are also thread safe.

Let’s say you have designed your process using a flow chart and decided it is correct. Create a sub actor with a message handlers for each process block on your flow chart. Do not create message handlers for decision blocks. Do not have message handlers queue up other message handlers. The sub actor is simply a concurrent thread capable of executing the individual processes required by your flow chart. It knows about each step in the process, but it has no idea how the steps are connected.

Every time a step is finished, the sub actor sends a StepCompleted message to the super actor along with any data that needs to be persisted or is required for branching logic. The super actor takes that information, figures out what the next step should be, and sends a message and data to the sub actor requesting it to do that step. Rinse and repeat until your process is complete.

The super actor is responsible for knowing what the next step should be; the sub actor is responsible for knowing how to do that step. Because the super actor’s queue is not clogged up with lengthy flow chart steps, it is free to receive messages from external entities requesting to change the normal sequence of steps. That’s about as close as you can get to implementing interrupts in a data flow language.

I like this concept. I am going to see if I can do something similar.

1. I don't understand what you mean by "data dependencies between messages." Can you give me a use case to help me understand?

2. Any message handling code can be inlined as long as it finishes before the next message arrives. (Helpful, huh?) As a rule of thumb, I inline data accessors and decision logic (the brainy stuff) in the MHL. Lengthy computations, continuous processes, periodic processes, etc. (the brawny stuff) gets pushed into helper loops and sub actors. Deciding between a helper loop or sub actor comes down to how much functionality you want that logical thread to have. Complex functionality requires an actor, simple functionality gets by with a helper loop.

3. I assume you mean behavioral state machine, and not queued state machine, yes? I implement BSMs by nesting message handling loops inside a simple state machine. (If you really meant queued state machine, then my response is "I don't." They behave badly, why would I want to replicate that?

)

)1. I think you answered this above already. I was referring to the case where Actor A needs data from Actor B before it can process a message from Actor C. Mainly, the point was some messages are acted on differently based on the current state of the actor. I think your discussion should have some example of this and how to deal with it.

2. All good points.

3. Your description of the sequencer answered this.

As to your overall presentation, I still feel you should avoid actual LabVIEW code examples and instead use pseudo code or pseudo-block diagrams (not 'G' block diagrams!). I would not waste your time on fancy animations. They rarely do much to communicate information and mainly just entertain the observer. (I'm not saying that your presentation should not be entertaining.) Just focus on putting up pictures with very few words. Maybe just add arrows between slides to emphasize portions of a diagram.

If you dont have a lot of text on your slide, then you cannot make the mistake of reading your slides aloud for the audience!

For making your diagrams, I recommend you check out yEd.

Updating a middle element of a variant tree

in Application Design & Architecture

Posted

where?