-

Posts

2,767 -

Days Won

17

Posts posted by Grampa_of_Oliva_n_Eden

-

-

Could someone please point me to a help file or tell me why I can't see any replies to anything I have posted?

What would be ideal is the "View unread" as it worked in version 1 of LAVA. The view unread link at the top right is only showing me threads I have never looked at. Once I have looked and replied, the new posts to those threads aren't showing up in a list.

Is there a setting or something that I need, just to be able to keep up on the new stuff?

Ben

I would not mind if someone PMed the hint to me, since I don't know where to look to see if anyone replied.

If these questions sound dumb, there is nothing wrong with your ears.

Ben

Forget my last. It was a setting somewhere under my profile or settings.

So where do I look to find the field that lets me edit the text under my name?

Is that option still available?

Still wondering,

Ben

-

I was wondering where you'd wandered off to.

Along with the "last 24 hours" link is the "View Unread Content" link that should be in the upper right hand corner of your screen.

Thank you.

I don't think the "View Unread" showed up until after I posted to this thread. I also did not see your replies show up when I was clicking the "View Unread...".

I'm still getting used to the new format, so give me time and I'll catch up.

I "had wandered off" because I had an opportunity to compete one-on-one with someone I respect on the Dark-Side. That deed is done so I can relax again. I had to get back here sooner or later for the sake of "humility" at the very least.

Ben

Humility was never my strong point.

-

What, you still use the 'standard' system?

Ton

Yes I'm late and just catching up...

I remember when the US was all geared up to do the switch, and then they figured out how much it would cost to replace all of the road signs that gave distances in miles, and the speed limit signs too. It was never funded, so it never happened, aside from the part where the government did not have to pay (labeling on packages). So to this day packages are marked in both standard and metric and serve as a ready reference when doing unit conversions during dinner.

Ben

-

Robert Clain and Miguel Salas Intro to Microcontroller Programming final project:

http://instruct1.cit...mos22/index.htm

The Effects of Hydrogen Sulfide table was an eye-opener

and the second patent referenced reaffirms my confidence in my government...

Thanks for the link. Three notes:

1) H2S is not nice. I have scars to prove it.

2) I recently delivered an app to characterize the methane concentrations in a coal mine (methane pours out of the rock face). My wife said "You could use that to figure out who did it, couldn't you?"

3) If you smell rotten eggs or pop-corn, it is time to take a break, preferably outside, in a strong wind.

Ben

-

I am transitioning from Lurker to non-lurker status now that the dust has settled on the new format of LAVA.

There used to be an option/link... that I would use that would just let me look at the new posts in all forums. Could someone provide a link to whatever the equivalent is now?

Thank you,

Ben

-

-

QUOTE (crelf @ May 22 2009, 03:37 PM)

...who's next?

The Evil CRELF, who creates havoc on all that come in contact with him, and his signature lines "Damn it Jim, I'm a software developer, not a brick-layer" and "We stayed in scope, shot to kill!" will soon be appearing on lunch-boxes near you (may the Schwartz be with you).

Ben

-

QUOTE (Gary Rubin @ May 22 2009, 08:13 AM)

<rant>So, as some regular readers may know from my previous posts, I spent a few days last week struggling to figure out whether my PCI-6601 Counter/Timer board was capable of performing the pulse generation that I was trying to do. After striking out in my attempts to find applicable examples or knowledge base articles, I tried posting here and at the NI forums. At the suggestion of Ben and our local field engineer, I called NI's application engineers. The application engineer just referred me to the same knowledge base articles that I'd already found. When I pointed out that none of these really did what I was looking for, he concluded that what I wanted to do might be possible, but it would be up to me to decide whether figuring it out would be a good use of my time.

Now, fast forward a week. I gave up on trying to make the 6601 do what I need. Instead, I'm using an external NAND gate to operate on the 6601 output and everything is working fine. This morning, I got an email from NI that starts as follows:

Does anyone else find that second sentence somewhat off-putting? Just because an AE spent 15 minutes talking to me, saying "Read this. Read that." doesn't mean that he solved my problem.

What rubs me the wrong way is that the implication in that email was that a) NI's documentation is so clear and b) the customer is so naive that all it takes to solve a problem is to politely tell someone to RTFM (or in this case "RTF Knowledge Base"). At least that's how I read it.

I think "we hope we helped", rather than "we presume we helped" would be a much less arrogant way of dealing with customers.

As far as I recall, this is my first question to an AE - maybe others have had better results.

</rant>

Hi Gary,

Your experience is one of the main reasons I have been such a nut-case about answering questions on the NI forum. I was ashamed of the type of support novice LV users were receiving and had it within my power to help, so... well, the rest is history.

I have been working with "support" from one company or another for about 30 years. Over that time I have learned that the quality of support I get is really up to me (no, I am not blaming you!). So here are some suggestions.

1) If you are not getting help from the AE, call the local rep. They can get your call escalated.

2) Do your research ahead of time and make it clear that none of the published info holds the answer.

3) Don't trust them to "get back to you". Let them put you on hold and listen to elevator music.

4) When you do need to let them off of the phone, make sure you know their name (this is psychological: if you know their name you have power) and the SR#.

5) Get them to commit to when they will get back to you and ask for it in writing. Tell them "Then if I do not hear from you by X, I will be calling".

6) Get them to read back to you the exact specs of your request before you let them go.

7) If they can't get an answer then ask "Can we please escalate this issue?". They don't like escalating but it gets the request in front of the appropriate product support for that product.

8) If they do not escalate, ask the local NI rep to get it escalated. They make money from you buying their stuff, so it is in their interest to get the stuff working.

9) If the AE you talk to does not know what they are talking about, just call back and log another service request (I call this Support roulette).

And to grease the skids going forward...

If you get good support from an AE, ask for their supervisor's name and e-mail and send an "atta-boy" or "atta-girl" letter. Thank you letters are rare these days, so your name will spread internally and eventually they will know not to feed you bovine-scat.

There are a couple of questions my fellow developers hear me ask every time I call NI for support:

A) Are you premiere support?

B) Have you been warned about me?

Again I am sorry you had that experience.

Your brother in wire,

Ben

-

-

QUOTE (jgcode @ May 16 2009, 05:58 AM)

[Cross posted to JKI] http://forums.jkisoft.com/index.php?showtopic=1104

Hi

What is the standard practice for testing source distribution code:

Should I write test cases for source code OR source distribution code OR both?

I can see issues with either choice:

Source only - you want to test source to pick up any errors before you make it into a source distribution but...

Distribution only - distribution may apply name changes and in case of an error with dependency code that meant the source was ok but the distribution isn't

Both - best coverage, but redundant test cases; changes mean updating code (tests) in two places (due to re-naming etc.)!

What do you guys do?

Cheers :beer:

JG

Both.

The earlier in the development cycle a problem is found, the easier it is to fix, so unit testing at the very least.

But testing on the final application tells me if it works or not.

Ben

-

QUOTE (scott123 @ May 21 2009, 08:20 AM)

I have two PXI systems with PXI 8106 controllers. My code is acquiring data, calculating information based off this data, running PID and open loop control, outputting values to hardware, and transferring data to a host PC via TCP. I am using LabVIEW 8.5.1 and RT 8.5.1.

I have not tried a simple VI, but this is a good idea. I will allow the getmemoryusage.vi to run all by itself on a test platform to see if I can recreate this situation.

I have not tried the execution trace tool kit.

The system seems to run fine after the increase happens, no freeze or crash. Therefore, this does not seem like a major concern, but if another spike were to occur after it has spiked to 60% then we may not be able to guarantee a shutdown to protect the device under test.

I saw something similar once back in LV-RT 6.

In my case I was getting some "out of range errors", so my error cluster, which usually had empty strings (source), now had data blah blah blah, ...

So I ended up changing my code to clear the "source" and just keep the numeric and the boolean.

The other situation was related to strings that were written to or read from file. When the jobs were small, the recipe and log files were small. When the job ran too long, the buffer that held the string for the small jobs was not large enough, so a new larger one was allocated and memory took a hit.

In my experience these bumps are often due to strings or arrays that are built.

Avoid both like the plague while working in RT.

Ben

-

Please see the "Clear as mud" posting in this thread by Greg McKaskle on the DarkSide.

Ben

-

QUOTE (crossrulz @ May 19 2009, 07:45 AM)

It sounds like the episode where they find the silicon life form on that mining planet, at the end when Bones is trying to heal the "mother". Cause then Bones pops up and says something like "Maybe I am a bricklayer" once he healed the "mother's" phaser injury. I couldn't tell you the episode name, but I remember it being in the first season.... and the nodules they kept finding were eggs of the "M..." creature. When they hatched they started doing the work of the miners. Can't recall the name though.

Aside:

I'm an old school Trekker that never accepted any of the new versions. Netflix offers a download service that we have set up to be able to watch any of the episodes on demand. As soon as it was set up we just had to watch the episode where Kirk makes gun powder to do battle with the Gorn. Boy, are those costumes cheesy in high def! There are few episodes where I remember the names, but my favorite was (I believe) "City on the Edge of Forever", where the phrase "Edith Keeler must die." was used more than once and Spock used that wonderful line about using stones and bearskins to enhance his tricorder.

Last tidbit:

I have a first edition Star Trek Technical Manual that includes a schematic of a communicator. I believe it was a superheterodyne receiver.

Ben

-

QUOTE (normandinf @ May 13 2009, 08:47 AM)

Of course... I was simply pointing out that a huge proportion of newbies' (first post) profiles mention that they don't use LabVIEW 8.x nor 7.x, but exclusively 2009.

I noticed this funny fact because when I want to post a reply, I generally look at the versions used by the author, to determine if I can upload some code snippet they could use.

If anyone out there has the time and inclination to Beta test 2009, I'd like to encourage you to sign up for the beta program in the hopes of 2009 being a killer version.

...

Ben

-

QUOTE (Gary Rubin @ May 12 2009, 10:27 AM)

Hello all,

I'm trying to get a counter-based application set up using a PCI-6601 counter board. I haven't done NI DAQ in years, and this is my first foray into DAQmx. I feel like I'm really fumbling around here. My current approach is to find an example that has part of what I want to do and cobble it together with parts of other examples. It's a lot of trial and error (mostly error). I'm not finding the DAQmx Help particularly helpful either. It explains how to call each function, but that's really only helpful if you know which function to use in the first place. Any advice on where to start?

In case anyone has any insight, here's what I'm trying to do:

1. Counter0 generates a pulse train at a constant rate. This represents the system trigger.

2. Counter1 generates a pulse train which is synchronous with Counter0 and delayed from it.

3. Counter1 uses the Pause Trigger Property so that it is suppressed when one of the DIO lines is high. When the DIO line returns to low, the Counter1 pulses should come back in the same place relative to Counter0.

I can get 1 and 2, but not 3, or 1 and 3, but not 2.

If I make Counter0 and Counter1 part of the same task, then they are synchronous, but the Pause Trigger property seems to only work on tasks, not channels, so I can't apply it to Counter1 without applying it to Counter0.

If I make Counter0 and Counter1 different tasks, so I can apply the pause to just Counter1, I lose synch between the two counters. I tried using DAQmx Start Trigger (Digital Edge).vi to sync the Counter1 task with Counter0, but get an error that I can't use both Pause and Start triggers in the same task. I then tried using the ArmStart property instead of the VI mentioned above. This worked fine, until I unpaused; Counter1's pulsetrain was then synchronous with the unpause, rather than Counter0.

Can anyone offer any advice, or at least point me toward some useful resources? If I remember right, it was this confusion of how to find the right tools that led me to use traditional DAQ for my last DAQ project, even though DAQmx was available at the time.

Thanks,

Gary

First let me suggest cheating.

If your I/O is something that can be configured and tested in MAX, then use the wizard to save the task. Then in LV drop a task constant, and one of the pop-up options will generate code that matches what MAX did. I don't think your situation can use that approach.

Next (still cheating)

I log a call with NI support and tell them what I am trying to do and ask them to provide an example.

Done cheating; now suggestions to help with the hard stuff.

When you are navigating for properties and methods, make sure you use output tasks on outputs etc. This caught me off-guard since they have the same names, but using the wrong version will return errors.

That basically gets me through most of my I/O challenges. I hope it helps you!

Ben

-

QUOTE (Antoine Châlons @ May 12 2009, 10:01 AM)

I would suspect you are going to have to hound NI for an answer to that Q. Between LV 7.1 and 8 they added the ability to zoom a picture (I think, don't quote me), so that change may be playing a part in what you are seeing.

Ben

-

QUOTE (Antoine Châlons @ May 12 2009, 09:17 AM)

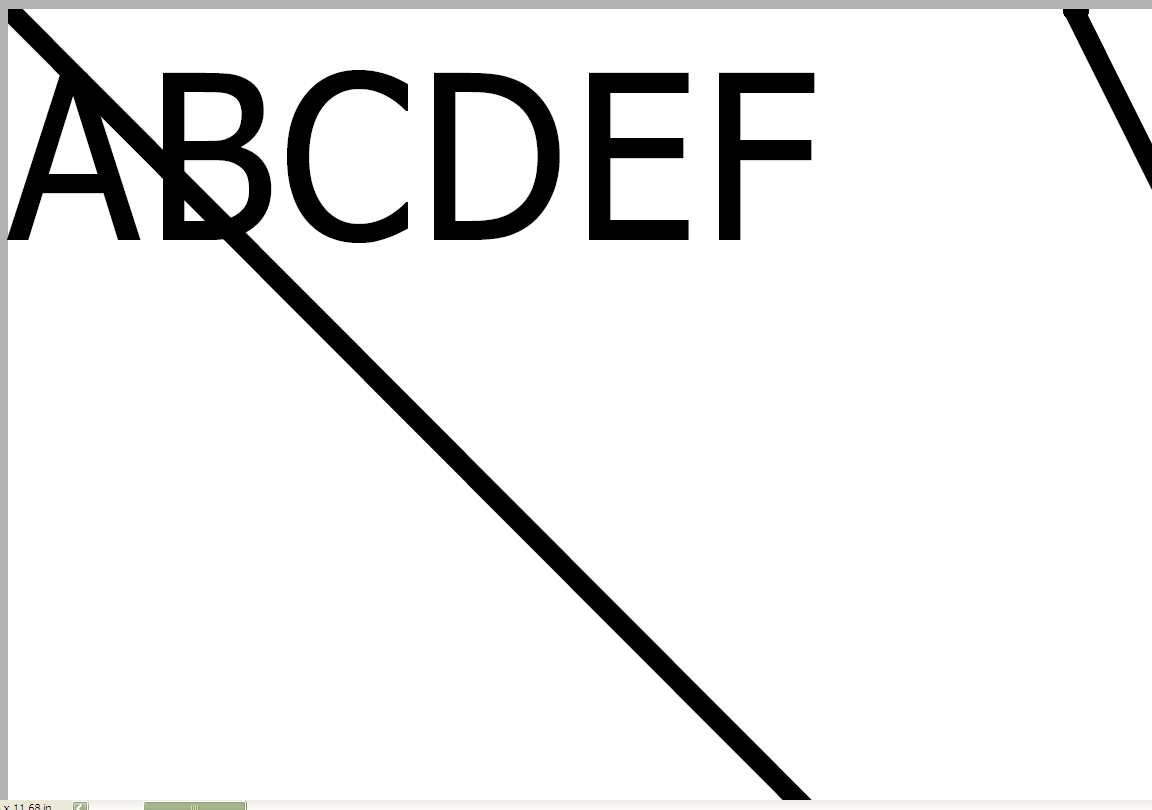

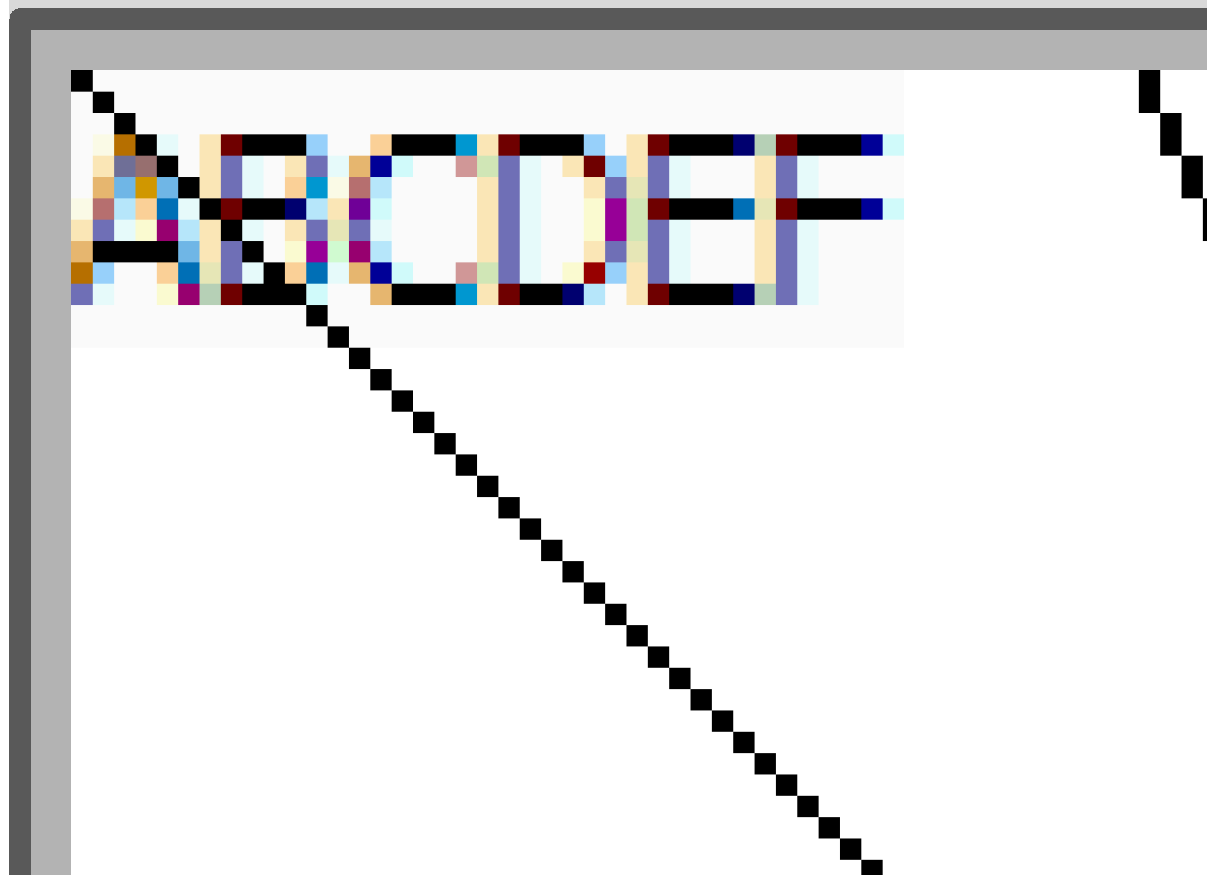

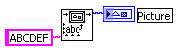

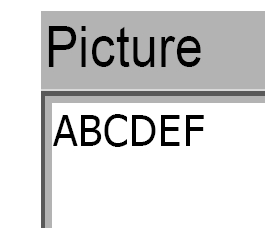

Hi all,

I'm upgrading some code from LV 7.1 to LV 8.6 and have an issue with text in a Picture Control.

will give this if I print to a pdf file and zoom on the pdf

In LV 8.6, the same code will give this...

If I zoom even more on the pdf generated with LV 7.1 it appears that the text is vectorial and always appears very nice, but it seems not to work in LV 8.6.

Does anyone know how I can fix that?

I checked my LV option for printing in 7.1 and 8.6, they are similar.

Q1) If you explicitly define the font in both cases, do you still see the same thing?

Q2) Do simple lines show up the same way when exported and zoomed?

Just trying to narrow it down,

Ben

-

NI is looking for feed-back about sorting options in the project when combined with SCC in this thread on the NI Community site.

If you care about this topic, go take a look.

Ben

-

QUOTE (Y-P @ May 10 2009, 03:05 AM)

Search the NI forum tags for "Find shared" (http://forums.ni.com/ni/board/message?board.id=170&view=by_date_ascending&message.id=343317#M343317).

Ben

-

-

QUOTE (Gary Rubin @ May 7 2009, 01:09 PM)

Why does the Boolean palette include both a True constant and a False constant?

Why does a numeric constant in the BD default to I32, while a control/indicator on the FP defaults to a double?

Thank you to whomever changed the Compound Arithmetic so that it defaults to a logical operation when selected from the Boolean palette, and an arithmetic operation when selected from the Numeric palette.

Amen to that!

Other subtle changes that I appreciate include:

When we wire an error cluster to a case, it puts the code in the No Error case.

When we create a sub-VI with an error cluster, the error in is optional while all other inputs are required.

Insert Node on a reference wire connects to the reference input instead of the error cluster...

These have all made my life easier.

RE: Why both True and False?

I can only guess that in version 1 of LV you could not click on a constant and change it.

Re: Philosophical...

Before LV will be able to realize true artificial intelligence, we will need a "Maybe Gate".

Ben

-

QUOTE (ShaunR @ May 6 2009, 03:51 PM)

...This is one of the aspects I really don't like about the "new" labview (far more I do like though). One of the reasons Labview was much faster in developing applications was because (unlike other languages) you didn't have to worry about memory management. As such, you could get on and code for function and didn't necessarily need an in-depth knowledge of how Labview operated under the hood. There used to be no such thing as a memory leak in Labview applications! Now you have to really make sure you close all references and are aware of when references are automatically created (like in queues, notifiers etc). It's good news for C++ converts because they like that sort of nitty gritty. But for new recruits and programming laymen (which was really where Labview made its ground) the learning curve is getting steeper and development times are getting longer.

There was an in-depth discussion that was started by Shane on the Dark-Side that can be found here.

What LVOOP does is make it easier to get yourself into these cluster related situations.

BUT at the same time as LVOOP made the streets (or there-abouts) NI released the inplace operations to let us "reach under the hood" when we have to.

I like the "NEW LabVIEW". If I could only learn it as fast as they release it....

Take care,

Ben

-

QUOTE (Neville D @ May 7 2009, 12:33 PM)

My guess is about 30% more time. In general it's quite easy. Develop the application for windows, try to minimise use of property nodes & too much UI flim-flam (pop-up config windows, subpanel VI's and such), and you can port the exact same application to RT with a minimum of fuss.

I have a vision application that started out windows-based and then was ported to RT. If you have a target with lots of memory (like a PXI controller) then it becomes easy to run the code directly on it for debugging (in development mode) without needing to build executables.

Also, you need to add additional code to log errors/state transitions etc. for debug, and specific code to check file paths for development mode and executables, but then I would assume you already did when you developed the windows version.

Neville.

I agree with Neville provided this is not your first RT app or if the RT app does not have to run 24 X 7 error free. I put that restriction on my estimate because RT is less forgiving than Windows when it comes to memory usage. In Windows you can walk off into Virtual Memory Land (using more memory than available physical memory) and you may not even notice. In RT it just dies.

If the app has to be deterministic, then throw in another fudge factor to learn how to get info into and out of a deterministic loop without blowing the timing.

The good thing about developing on RT is that when you come back to Windows you will have good habits that will make your Windows based apps even better.

Ben

-

QUOTE (Gavin Burnell @ May 7 2009, 05:00 AM)

I think it would be more fun to have a coding challenge to write the most obfuscated code that does some defined task that does not employ any 'graphical' code hiding techniques and instead relies on daft ways to achieve a trivial operation. One of my favourites is something like the following:

Of course this sort of challenge is also a good test of the LabVIEW compiler's ability to optimise the code...

About four years ago we started an "Obfuse Code" challenge in the Breakpoint on The Dark-Side where we were trying to implement the "Hello World" example from the world of C. In post #40 of that thread I posted an example (that is now probably dated) that has the binary of a sub-VI saved as a constant on the diagram. The constant was written to disk and the VI server was used to run the sub-VI... and I think I deleted it after the run. That method escapes the VI Analyzer. Check out that thread for some interesting obfuscated examples. Technically the competition is still open because we never agreed on the rules.

Ben

Nebulus is now Sir Ben

in LAVA Lounge

Posted

Thank you.

I was working on that on LAVA I. I seem to have lost about 400 posts, so give me some time to get back to where I was. Once I figure out how to navigate the new forum, I'll do what I can without disturbing the SNR.

Ben