dblk22vball

Members

Posts: 120

Days Won: 1


Everything posted by dblk22vball

-

I have watched several of the online videos on building PPLs and a plugin architecture, and on some of the build issues people have encountered. One thing I haven't seen covered is how to handle both 32-bit and 64-bit PPLs. We currently have both the 32-bit and 64-bit versions of LabVIEW on the same PC, and I am running into the question of how to properly deal with the PPL plugins.

We distribute the reuse PPLs via VIPM. The install process is typically:

- When built, package both bitnesses into the same VIP file
- Unpack to a temp folder
- Determine the bitness and move the matching PPL to the install folder (Ex: C:\ProgramData\PPL\DB Base\DB Base.lvlibp)
- Delete the temp folder with the "incorrect" bitness

This works when we only have one version of LabVIEW on a PC. But there may be times when we need both the 32-bit and 64-bit versions on a machine, depending on the programs installed. An example would be test software that needs 64-bit alongside another tool we developed in 32-bit, where both need to use DB Base.lvlibp. Having both try to access the same file (C:\ProgramData\PPL\DB Base\DB Base.lvlibp) won't work for one of the two bitnesses.

Is it better to append the bitness to the file name? (Ex: C:\ProgramData\PPL\DB Base\DB Base 32 bit.lvlibp) Or to the folder? (Ex: C:\ProgramData\PPL\DB Base 32 bit\DB Base.lvlibp)

In either case, I can see my build process getting more complicated for the plugin module (DB Plugin), which inherits from the DB Base PPL:

- Do I have a 32-bit project (DB Plugin 32 bit.lvproj) that points to C:\ProgramData\PPL\DB Base\DB Base 32 bit.lvlibp and a 64-bit project that points to the 64-bit base PPL (or folder)?
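To make the two naming options concrete, here is a minimal Python sketch (not LabVIEW) that builds both path variants from the example paths in the post. The function name `ppl_path` and the `suffix_on` parameter are hypothetical, and it does not say which option LabVIEW or VIPM handles better.

```python
from pathlib import PureWindowsPath

# Install root taken from the example paths in the post.
PPL_ROOT = PureWindowsPath(r"C:\ProgramData\PPL")


def ppl_path(library: str, bitness: int, suffix_on: str = "folder") -> PureWindowsPath:
    """Build the install path of a PPL for a given bitness (32 or 64).

    suffix_on="folder" -> ...\\DB Base 32 bit\\DB Base.lvlibp
    suffix_on="file"   -> ...\\DB Base\\DB Base 32 bit.lvlibp
    """
    if bitness not in (32, 64):
        raise ValueError("bitness must be 32 or 64")
    tag = f"{bitness} bit"
    if suffix_on == "folder":
        return PPL_ROOT / f"{library} {tag}" / f"{library}.lvlibp"
    if suffix_on == "file":
        return PPL_ROOT / library / f"{library} {tag}.lvlibp"
    raise ValueError("suffix_on must be 'folder' or 'file'")
```

With the folder variant the file name is identical for both bitnesses and only the parent folder differs; with the file variant the library file itself is named differently per bitness.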

-

I am trying to convert some JSON, but the data type changes based on the UUT response. Since I am using the stock "From JSONText" vi, I was using a type def cluster of a string and two doubles (as seen in Example 2). But if the device has not been configured yet, it responds with three strings (Example 1), which causes an error in the conversion because the data types don't match.

Example 1 (pressure and temperature are strings):

{"attributes": { "flow": "<unset>", "pressure": "<unset>", "temperature": "<unset>" } }

Example 2 (pressure and temperature are doubles):

{"attributes": { "flow": "100", "pressure": 5.235, "temperature": 25.5 } }

I can use two type def clusters, and if I get an error with one, try the other. But I figured there might be a more elegant way to handle it.
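One alternative to trying two clusters is to read each field loosely and convert it afterwards. The sketch below shows that idea in Python rather than JSONtext; the helper names `to_float` and `parse_attributes` are hypothetical, and it assumes `"<unset>"` is the only non-numeric value the device sends.

```python
import json
from typing import Optional

UNSET = "<unset>"  # value the UUT reports before it is configured (from the post)


def to_float(value) -> Optional[float]:
    """Return a float, or None when the device reports the unset marker."""
    if value == UNSET:
        return None
    return float(value)


def parse_attributes(text: str) -> dict:
    """Parse the response once, then normalise the fields that change type."""
    attrs = json.loads(text)["attributes"]
    return {
        "flow": attrs["flow"],  # a string in both examples
        "pressure": to_float(attrs["pressure"]),
        "temperature": to_float(attrs["temperature"]),
    }
```

Both example responses then go through the same code path, and an unconfigured device shows up as `None` values instead of a conversion error.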

-

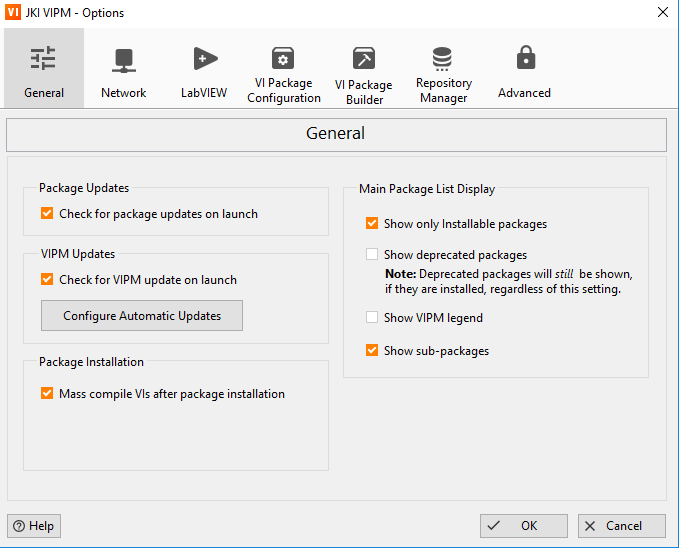

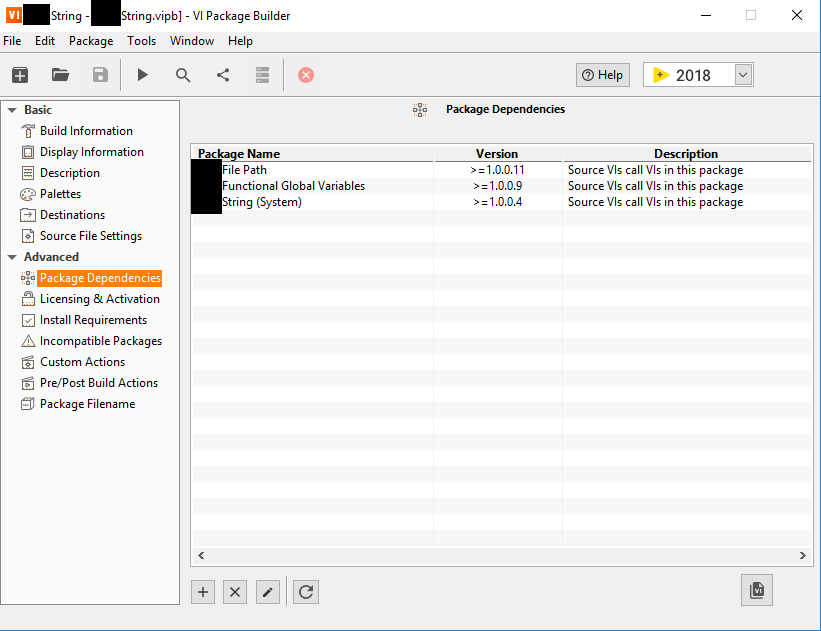

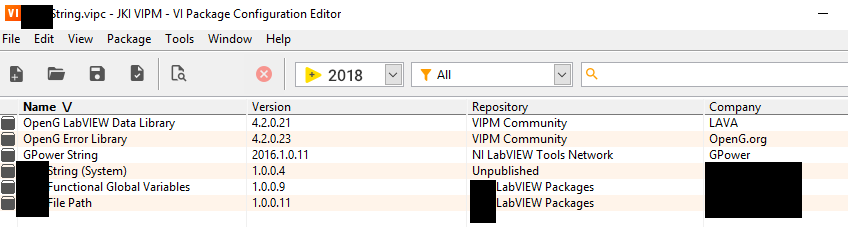

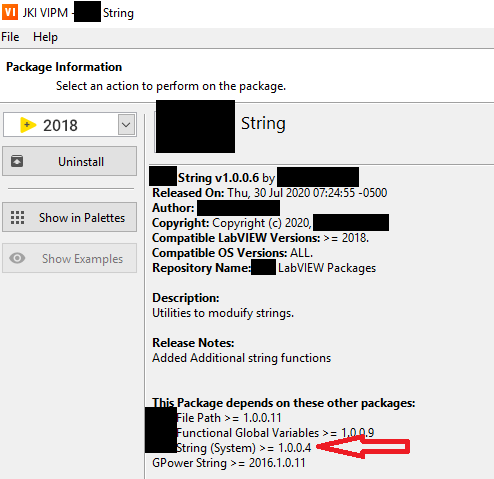

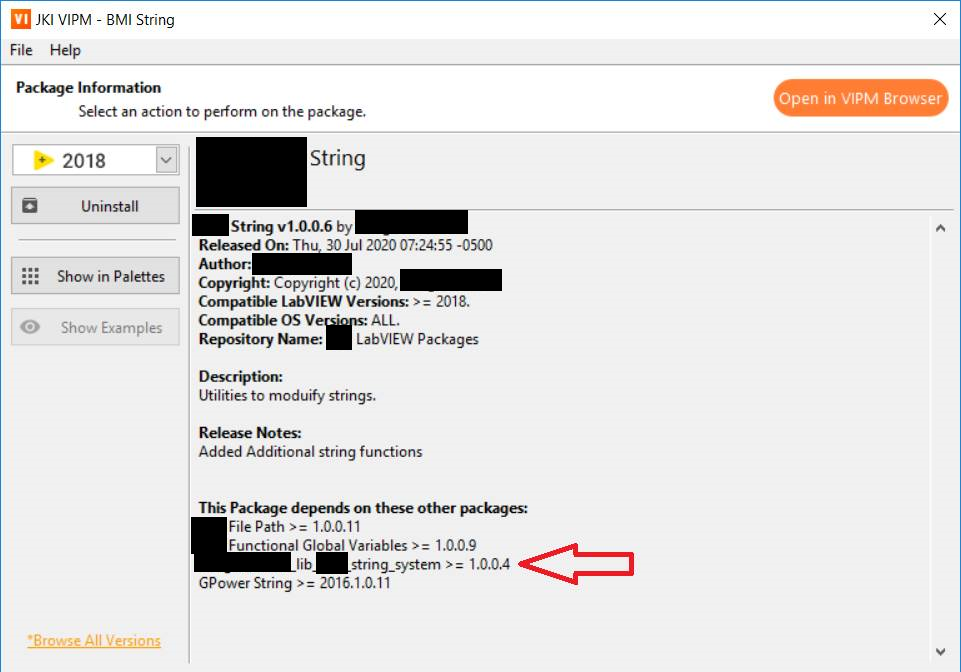

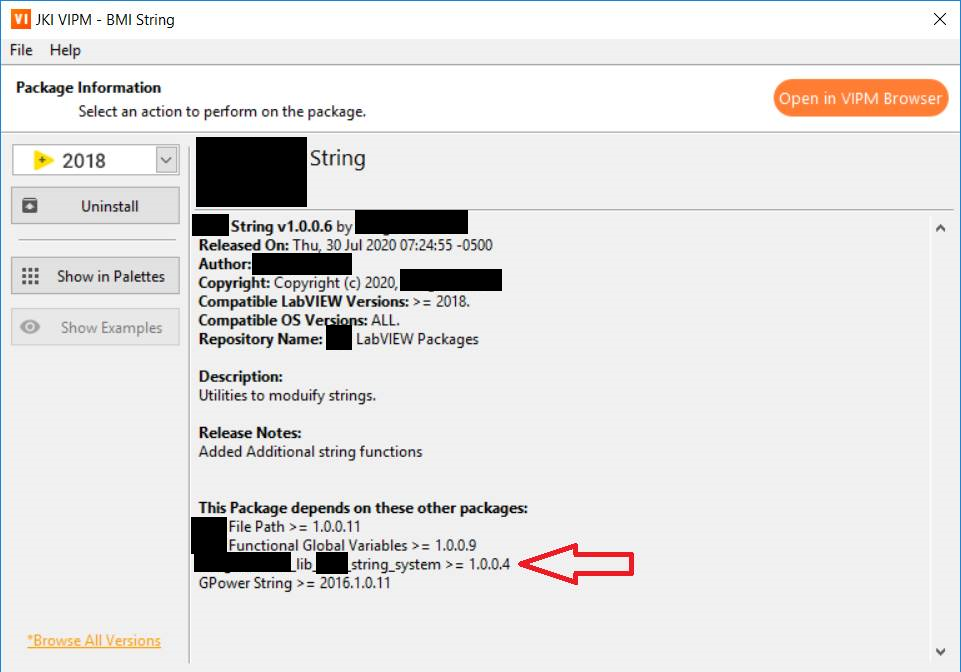

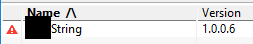

I am using the newest version of VIPM (2020.1), but I had this same issue with the initial 2020 release. For two of the packages that I have created for internal repos, VIPM has decided that I need a System Package. The posts I have seen from JKI indicate that this is a sub-package used internally by the main package, and that it should be included automatically. However, I am seeing issues on any PC other than the one where the package was built. Is there a setting I am missing?

Showing the (System) package as a dependency:

Package Configuration in the VI Package Configuration Editor

Package installed, on the PC where the package was initially created (no issues, no exclamation mark)

Package when installed on another PC (NOTICE: the System Package name has changed and there is a red exclamation mark, but no errors are shown when the package is installed).

-

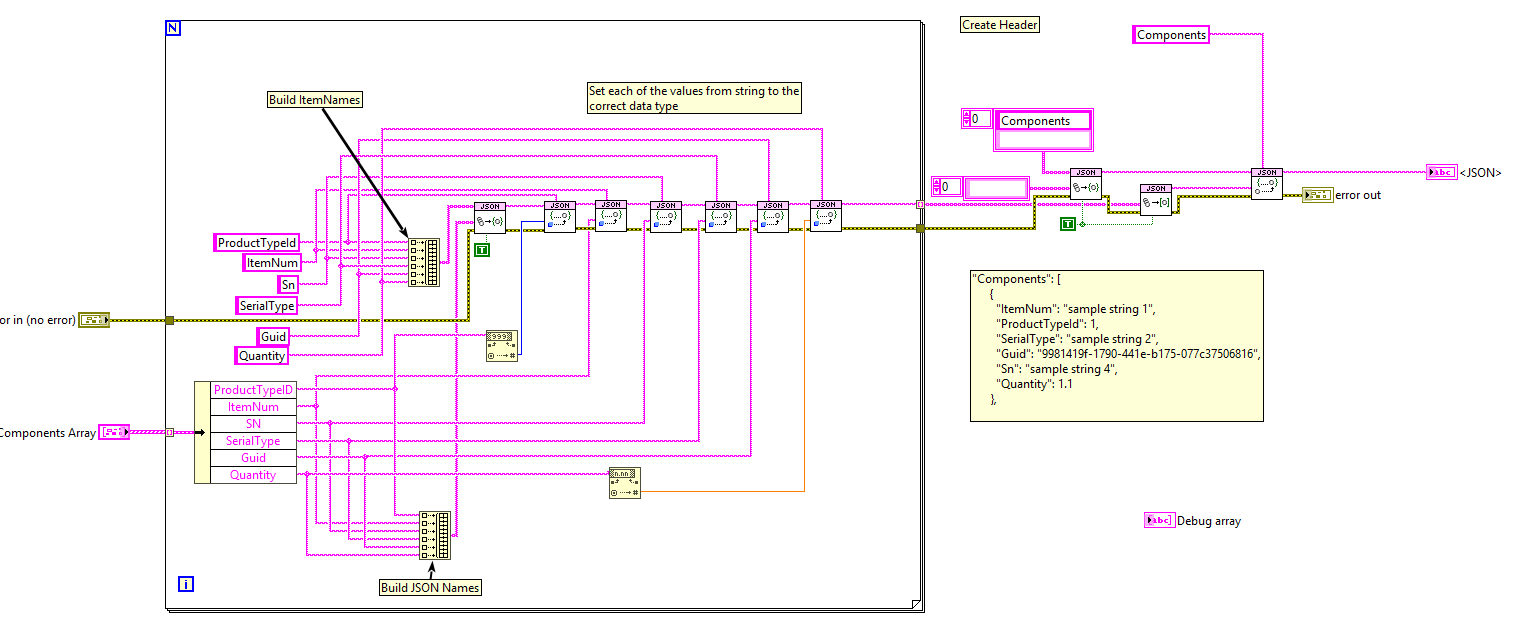

I am having an issue where the parser errors out while building my JSON string, and I cannot figure out why. I am trying to build a JSON structure used as part of our data logging process. The parser is giving me the following error:

JSONtext.lvlib:Find Object Item Locations by Names.vi<ERR> State: Parser Error Near: '{ "ProductTypeId":11, "ItemNum":"12345-111", "Sn":"132465", "SerialType":M' in '{ "ProductTypeId":11, "ItemNum":"12345-111", "Sn":"132465", "SerialType":MFG, "Guid":null, "Quantity":null }''

Make Components Array in JSON.vi
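The "Near:" text stops right at `"SerialType":M`, which suggests the parser is objecting to the unquoted `MFG`: JSON string values have to be wrapped in double quotes. A quick way to check that reading outside LabVIEW is Python's `json` module (a different parser than JSONtext, so this is only a sanity check); `is_valid_json` is a hypothetical helper.

```python
import json

# The string from the error message, with the unquoted MFG value.
bad = ('{ "ProductTypeId":11, "ItemNum":"12345-111", "Sn":"132465", '
       '"SerialType":MFG, "Guid":null, "Quantity":null }')

# Same string with the SerialType value quoted as a JSON string.
good = bad.replace('"SerialType":MFG', '"SerialType":"MFG"')


def is_valid_json(text: str) -> bool:
    """Return True when the text parses as JSON."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False
```

Here `is_valid_json(bad)` is false and `is_valid_json(good)` is true, so the value inserted for SerialType probably needs to be written as a quoted string.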

-

I was able to get it working. Basically, I need to do the "auto increment" myself and manually set the destination path. It appears that the API does not handle the [VersionNumber] tag properly, and no error is thrown. I have been in discussion with NI application engineering, so hopefully this will be fixed.
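The workaround amounts to two small string operations, sketched here in Python. The function names are hypothetical, the folder pattern ("EXE" followed by the version) is taken from the folder names in the original question, and the LabVIEW build API calls themselves are not shown.

```python
def next_version(version: str) -> str:
    """Increment the last field of a four-part version such as 1.0.0.5."""
    parts = [int(p) for p in version.split(".")]
    if len(parts) != 4:
        raise ValueError("expected a version like 1.0.0.5")
    parts[-1] += 1
    return ".".join(str(p) for p in parts)


def destination_folder(base_dir: str, build_name: str, version: str) -> str:
    """Build the destination path by hand instead of relying on [VersionNumber]."""
    return f"{base_dir}\\{build_name} {version}"
```

A build script would call `next_version` on the current version, write the result and the matching `destination_folder` value into the build specification, and then start the build.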

-

I am trying to get a build script going, and I use the destination folder format with the tag [VersionNumber] in the Primary Destination Path (http://digital.ni.com/public.nsf/allkb/617928B8E8DE3B6C86257CC400429194).

What is happening is that when I do my programmatic build, the LabVIEW project only "remembers" the last build I did manually through the project itself, not the programmatic build.

For example, say I right-click on my executable build specification and select "Build". My build will run and complete, and all the files will be stored in the folder builds\EXE 1.0.0.0. The "Auto Increment" flag is set to True.

Now I run my build script 5 times. I will have folders EXE 1.0.0.1 through EXE 1.0.0.5. I open my exe build specification in the LabVIEW project, navigate to the "Version Information" page, and the Version Number correctly shows 1.0.0.6 as the next build number. So my build script is updating the build specification with the correct auto increment, and I have verified that LabVIEW is creating the correct files in the correct folder.

But when I right-click the build specification and select "Explore", LabVIEW opens the EXE 1.0.0.0 folder instead of the EXE 1.0.0.5 folder. The consequence is that when I do an installer build, LabVIEW thinks that the 1.0.0.0 build was the last build, so the wrong exe is copied into the installer.

Has anyone run into this issue with programmatic builds?

-

Hrmm..... I have been getting access violation issues as well with my LV18f2 system. I will have to upgrade and see if the issues are resolved. NI R&D has been trying to figure out my issue and has not had success yet.

-

I am looking at NI SystemLink for remote deployment of our test programs. Part of the write-up justifying SystemLink (or an alternative) is that I need to identify some alternatives. But finding them is a struggle (which may help my justification, but I want to be sure I am not missing something).

Our company uses a mixture of LV and Visual Studio (C/.Net) test programs, so the solution can't be LV only. I think this would rule out Studio Bods BLT, since it appears to be restricted to LV.

I am aware that SystemLink is built on SALT, and that SALT is open source. Initial investigation suggests this could work, but it would require a lot of man-hours to get up and running. I can see there are alternatives to SALT, but all seem to be geared to IT, not MFG.

I have found maintainable.com, which looks similar to SystemLink. I am waiting for a salesperson to call me back to discuss the capabilities.

My Google-fu is failing me on finding other alternatives. I see tons of Windows management programs for IT departments (deploying Windows updates, company policies, etc.), but am struggling to find alternatives for the MFG side.

Requirements:

- We have a headquarters, where most of the engineering and test software comes from. It is then deployed to 10+ remote locations, with 100's of test fixtures.
- Windows-based machines (Windows 7, 10, or CE)
- None of the test fixtures are reachable from the internet; they are only on the local intranet (so a relay is needed to get outside the plant)

-

I am guessing this has been discussed on here before, but my search skills are failing me.

As we expand our company, we are starting to use multiple suppliers for building (and testing) the same product. Updating each test fixture by remotely logging in and copying over the new sequence files is going to become cumbersome.

We are discussing keeping the test sequences on a SQL server (in this instance because we already use SQL servers); the testers would then query the server by part # and maybe some other information to get the proper sequence. This way we just need to push the update from the master server at "headquarters" to the remote servers, and all the testers have the new sequence files.

Some features we are planning to have:

- Effective date (when the sequence went active, preserving all previous sequences).
- The test fixtures are on the same in-house network as the remote servers, so unless the building loses power, there should not be any significant latency or bad connections. (already in place)

Any big caveats to this method? Other ways people have managed multiple sites?

For those who are better searchers, feel free to point me to other topics already discussing this.
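The effective-date idea can be sketched as a table that never overwrites old rows plus a query that picks the newest row not later than the requested date. The example below uses Python's built-in `sqlite3` so it is self-contained; the table and column names are hypothetical, and on SQL Server the `LIMIT 1` would be written as `SELECT TOP 1`.

```python
import sqlite3
from typing import Optional


def create_schema(conn: sqlite3.Connection) -> None:
    """One row per (part number, effective date); old rows are never deleted."""
    conn.execute(
        "CREATE TABLE test_sequence ("
        " part_number TEXT NOT NULL,"
        " effective_date TEXT NOT NULL,"  # ISO date, sorts correctly as text
        " sequence TEXT NOT NULL,"
        " PRIMARY KEY (part_number, effective_date))"
    )


def active_sequence(conn: sqlite3.Connection, part_number: str, as_of: str) -> Optional[str]:
    """Return the sequence in effect for a part on the given ISO date, if any."""
    row = conn.execute(
        "SELECT sequence FROM test_sequence"
        " WHERE part_number = ? AND effective_date <= ?"
        " ORDER BY effective_date DESC LIMIT 1",
        (part_number, as_of),
    ).fetchone()
    return row[0] if row else None
```

Because earlier rows are kept, the same query can also answer which sequence was active when an older unit was tested.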

-

Update: It may be solved. I will continue testing next week (it is 7:15 PM where I am).

I had to uninstall all NI software and reinstall only the LV 2014 items. Then I ran a registry cleaner. I also updated the Agilent IO Suite from 16.7 to Keysight (the new Agilent name) IO Suite 17.1.

I also made the changes suggested above (Synchronous mode and a 1 ms wait in the loop).

Thank you for your help.

-

This is an actual serial port. I have already changed all the power settings for the USB devices to always be on, as that did cause issues. Thanks for the suggestion.

The manual says that you are supposed to send 0x00 for 1 sec, followed by the sync byte 0x95. The UUT will then acknowledge that it saw the "wakeup" string with a 0x4B + 4 other bytes. There are no termination characters in this protocol.

I added timing vi's to measure how long it took to send the 0x00's, and concluded that a FOR loop count of 960 worked out to ~1 sec (see the block diagram of the attached vi). I apologize that I forgot to put that in the first post.

NI has commented that the VISA timeout is "consistently inconsistent", i.e. it will vary. I have played around with different timeouts from 200 ms up to 10000 ms, and still get the same result.

BTW, the UUT will go back into sleep mode within 250 ms of a wakeup command if no other commands are received. So waiting 10 seconds for a response from the UUT would not work in the long run. If you look at the "works" vi I attached above, the timeout is initially set to 200 ms, and after the wakeup is received the timeout is reset to 10000 ms.
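The measured loop count of 960 can be cross-checked with simple arithmetic: bytes sent per second equal the baud rate divided by the bits per frame. The baud rate is not stated in the post; 9600 baud with 8N1 framing (10 bits per byte on the wire) is an assumption here that happens to reproduce 960. The function names are hypothetical.

```python
SYNC_BYTE = 0x95  # sync byte from the manual, as quoted in the post


def wakeup_byte_count(baud_rate: int, duration_s: float = 1.0, bits_per_frame: int = 10) -> int:
    """Number of bytes needed to keep the line busy for duration_s.

    bits_per_frame=10 assumes 8N1: 1 start bit + 8 data bits + 1 stop bit.
    """
    return round(baud_rate * duration_s / bits_per_frame)


def build_wakeup(baud_rate: int) -> bytes:
    """About 1 s of 0x00 bytes followed by the sync byte."""
    return bytes(wakeup_byte_count(baud_rate)) + bytes([SYNC_BYTE])
```

Under those assumptions `wakeup_byte_count(9600)` gives 960, matching the measured value. With a different baud rate or framing, the count would need to change to keep the 1 sec duration.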

-

I attached the files to the first post. COM1 is where the IR comms happen.

-

I am getting very frustrated trying to figure out what is causing my problems communicating with a device through a serial-to-IR head from LabVIEW. I am working with NI directly on this issue, but I thought someone here might have had a similar issue and been able to resolve it.

We are using a serial port (DB9) to IR head to communicate with devices in the test nest. We have a system that was originally done in LV 2009, and it works just fine. When we decided to add additional test racks, I wanted to re-use as much code as possible, but of course there were some new features we wanted to add.

I "copied" the IR communication vi (re-created the LV 2009 vi) in LV 2012 at the time. I tested the vi on the development machine (a laptop with a docking station, so it had a "real" serial port) and it worked great. I then built the .exe and put it on the test rack. We were unable to communicate with the UUT. But if we restarted the test program, we could then talk to the UUT just fine.

So then I replaced the 2012 IR vi in my program (no other changes) with the 2009 vi (exactly the same, just created in 2009 instead of 2012) and rebuilt the .exe. When tested on the rack, no program restart was required; IR comms were established right away. Huh???

I added all sorts of logging to see where (if anywhere) I was getting errors on the test rack (logging the error cluster after configure serial port, flush buffer, read, write), and the only error I am seeing is the VISA Read timeout error when it does not get a response from the UUT. I have made sure that the VISA and LV runtimes are the same on the test rack and my development machine.

- Windows 7 development laptop with docking station (development environment or running exe), with Serial IR head, USB-to-serial adapter to Serial IR head, or USB IR head: communication without a program restart using the 2012 vi.
- Windows 7 test rack touch panel PC (runtimes only, running exe), with Serial IR head: requires the program to be restarted when using the 2012 vi. With the 2009 vi, communication is established without a program restart.
- Windows 8 personal laptop (runtimes only, running exe), with USB-to-serial adapter to Serial IR head or USB IR head: communication works without a program restart using the 2012 vi.

There seems to be something different in the way VISA, or the code behind the vi, acted in 2009 vs 2012 (I have also tried 2014) with regard to the serial port. Running NI IO Trace has so far not yielded any "ah, ha!" differences.

EDIT: Attached files. Rev 242 is the version that does not work (2012/2014); Rev 243 is the version that used the 2009 IR vi.

IR_WakeupAndTransmit LV 2009.vi

serial trace files.zip

-

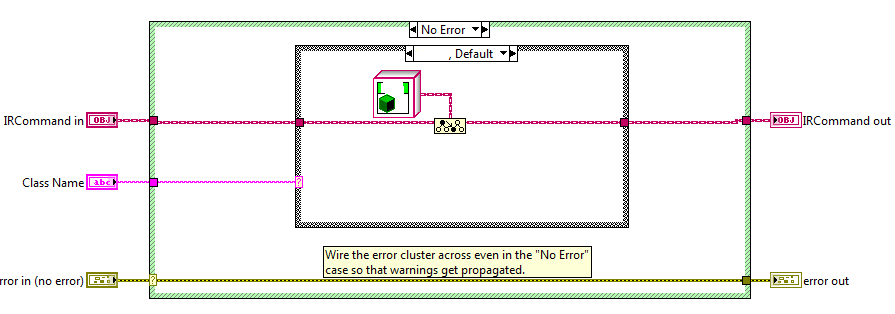

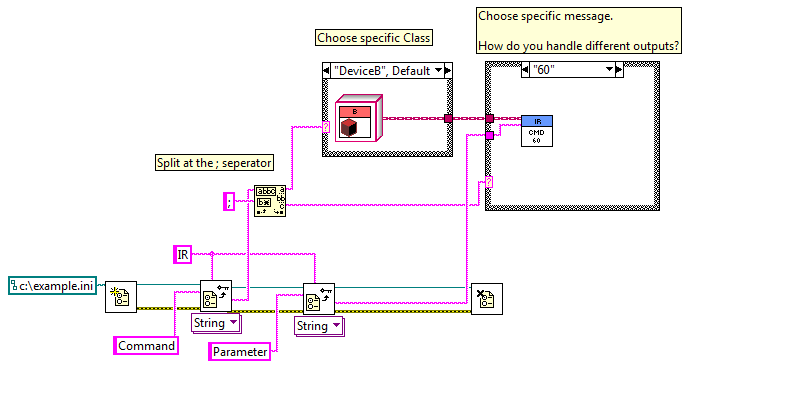

I am struggling with how to handle the situation in the title. We have a number of devices that use different IR protocols. I have a parent class (IR) and multiple child classes (DeviceA, DeviceB, etc).

I have a Test Sequence Editor that we use with our test program, and an option I want to add is IR Command selection. I would also add an IR Parameter section to allow for specific inputs based on the command selected. So the test step would have IRCommand: 'DeviceA,60' (for protocol 'DeviceA', sending command 60), and maybe IRParameter as a string 'ABCDEF'. Each command can have different inputs and outputs (string, numeric, etc).

I figured I could use 'To More Specific Class' to choose the child (see pic below), but I am not sure how to set up the message correctly.

I currently have it implemented with a case structure in my program for each command. By this I mean that case 60 uses the Command 60 implementation of the IR parent class, and each child is dynamically dispatched. However, I would prefer to move this into a class somehow, instead of copying a case structure into each program (which defeats the purpose of updating just the class and not each program that uses it).

I attached a simple example (please excuse the horrible wiring, it was a fast example).

Classes.zip
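One way to move the per-command case structure into the class hierarchy is to let each child publish a table of the commands it supports and have the parent do the lookup. The Python sketch below is only a text-language analogy for the LabVIEW classes; the `commands` method, the `PROTOCOLS` table, and the placeholder return string are all hypothetical.

```python
class IR:
    """Parent class: looks up a command number in the child's command table."""

    def commands(self) -> dict:
        """Children override this to map command numbers to handler methods."""
        return {}

    def execute(self, command: int, parameter: str) -> str:
        handler = self.commands().get(command)
        if handler is None:
            raise ValueError(f"{type(self).__name__} does not support command {command}")
        return handler(parameter)


class DeviceA(IR):
    def commands(self) -> dict:
        return {60: self._command_60}

    def _command_60(self, parameter: str) -> str:
        # Placeholder for building and sending the real IR message.
        return f"DeviceA cmd 60 <- {parameter}"


# Protocol name from the test step -> class to instantiate.
PROTOCOLS = {"DeviceA": DeviceA}


def run_step(ir_command: str, ir_parameter: str) -> str:
    """Handle a test step such as IRCommand 'DeviceA,60' with IRParameter 'ABCDEF'."""
    protocol, number = ir_command.split(",")
    device = PROTOCOLS[protocol.strip()]()
    return device.execute(int(number), ir_parameter)
```

The calling program only ever calls `run_step`, so adding a command or a device means changing the class library rather than every program that uses it.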

-

[CR] SET Localization Toolkit

dblk22vball replied to Porter's topic in Code Repository (Uncertified)

Thank you for making this toolkit free for us all to look at. I was looking into making a similar (but much less sophisticated) toolkit.

A possible update: the Add Language popup only lets you select from languages already populated by the developer, i.e. you cannot "add" a language by typing its name while using the toolkit. It looks like I would need to edit Language Selector.ctl to add more languages, then reopen the toolkit in LabVIEW to add the language to my project.

16 replies

Tagged with: translation, localization (and 2 more)

-

Or that I needed to start drinking at the end of the week.....

-

Annnnd I feel like an idiot. Thanks for your help.

-

Ok, I feel like I have missed something trivial, but I cannot figure out the cause. I have a front panel in LV2014 with 4 copies of a custom cluster. Inside the cluster is a Silver-type boolean button with an indicator LED called "Enable Cell". There are also the Start and Exit buttons.

In Edit mode, I can press the "Enable Cell" buttons just fine. But in Run mode, I can only press and toggle the Start and Exit buttons. When I press an Enable Cell button, nothing happens (the light on the control should change color, the button should appear to "depress", etc).

My event structure captures all the events from the Start and Exit buttons, but does not "see" the Enable Cell events.

Any idea what is "blocking" the buttons from being pressed?

-

I am going back and forth on how I should go about designing my next program. Whatever I use, I want to be able to re-use it for multiple projects. I am not in a particular rush at the moment to create this next program, so I do have a little time to "play" around.

Most of my low-level vi's are re-used in many different test programs (IR message builders, etc), but the high-level program seems to run into the "cluster of death" scenario a lot.

Our company currently has 3 test engineers, and I am the main LabVIEW programmer. One test engineer thinks LabVIEW is horrible and only writes in C, or .Net if required. The other test engineer is able to modify some code, but even queues and FGV's are not in his wheelhouse at this time.

I have done a little OOP (I do have a program using basic OOP for the instrumentation currently deployed), but I am still very much on the basic end of the spectrum with OOP.

Most of the projects I am involved in require about 5+ instruments (IR COM device, Sig Gen, Freq Counter, Spec Analyzer, DMM, PSU chassis, etc), but they have been 1 UUT fixtures. We would like to scale to multiple UUTs using the same hardware, with just an additional IR COM device (one for each UUT), and with the test hardware shared between the UUTs via switches if it does not have enough inputs.

Something similar to the Continuous Measurement and Logging (NI-DAQmx) example that ships with LV 2014 seems like a typical solution that has worked for many developers: a QSM with separate Logging, UI, and Acquisition loops. But I am not sure how easy it would be to scale, and how do you avoid trying to access the same hardware when running each UUT separately?

One solution is to use a For loop to cycle through each nest location at each test step. But this ends up being a serial process and does not save a lot of time.

I did a couple of the tutorials on the Actor Framework, and I am still wrapping my head around that. I also saw the MAL Plugin framework, which has hardware check-in and check-out. I thought this was a nice feature, as it would allow you to test many UUTs with limited hardware. But the learning curve seems pretty steep for me (for using the AF), and I am sure that my co-worker would have no idea how to support it if he ever had to.
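The check-in/check-out idea does not depend on any particular framework: each shared instrument gets one lock, and a UUT's test code holds that lock only while it is using the instrument. The Python sketch below illustrates the concept with threads; it is not the MAL Plugin framework's API, and `InstrumentPool` and `run_uut` are hypothetical names.

```python
import threading
from contextlib import contextmanager


class InstrumentPool:
    """One lock per shared instrument; UUT threads check instruments out and in."""

    def __init__(self, names):
        self._locks = {name: threading.Lock() for name in names}

    @contextmanager
    def checkout(self, name: str):
        lock = self._locks[name]
        lock.acquire()          # blocks until the instrument is free
        try:
            yield name
        finally:
            lock.release()      # check-in, even if the test step raised an error


def run_uut(pool: InstrumentPool, uut_id: int, log: list) -> None:
    """A test step that needs exclusive use of the shared DMM."""
    with pool.checkout("DMM"):
        log.append((uut_id, "start"))
        log.append((uut_id, "end"))
```

UUTs run in parallel wherever they need different instruments (for example their own IR COM device) and only wait on each other for the hardware they actually share.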

-

Hello all,

My company pays the annual dues for a professional organisation (IEEE, etc). I am an Electrical Engineer by degree, but I have worked most of my career as a Test Engineer/Software developer, working mostly in LabVIEW. As such, I work heavily in the manufacturing world, both in electronics manufacturing and in non-electronics manufacturing (mostly water-related products).

IEEE is obviously the biggest and most recognized organisation for EE's. But it seems to be focused on new product development and not much on manufacturing. SME seems to be focused on machining and more mechanical aspects.

There is the American Society of Test Engineers, but that does not seem to be very active. (http://www.astetest.org/)

There is also the International Test and Evaluation Association, which seems to be more active. (http://www.itea.org/)

Has anyone had any involvement with any of these groups? Are you part of any professional organisation?

-

Sorry, I guess I forgot to mention the SQL server in this case. If the SQL server is local, then a 30 day query will take maybe 30 seconds (not an outrageous wait time). But in this case, the SQL server is at a remote vendor's site, their manufacturing facility (in Malaysia), and they do not have a good internet connection. So we use a "Linked Server", which allows you to query the remote server through a local server. A 30 day query can take 4 minutes, and I guess they do not want to wait that long.

-

Our company uses SQL servers to log all the data for our test fixtures. Quality would like me to create a "Data Analysis" tool to monitor daily stats, with the option of looking at the past 30 days' worth of data.

Currently, I have been asked to start with just one line (3 test fixtures), which can have 1000 entries each day (some days there are none). In the future this can grow significantly to other fixtures.

IT has asked that I not run a 30 day query every day to update the rolling 30 day stats. A local cache is desired, and only the previous day's test data will be queried.

My current plan: have a folder in which each date is a unique file. I can list all the files in the folder, get any dates between the newest file and the current date via SQL query, then delete any files that are older than the "# of Days to Store" ini file value. This will save me from indexing through the date column of a huge file to delete old data.

To avoid running the analysis on each date every time the historical view is called, I was thinking I could create sections in each date file (what Quality has asked for on each date):

- FPY Stats
- All Data
- Failure Only
- SN that were tested more than once

Then when the Quality Engineer wants the past 30 days' FPY, I get the FPY section of each date in the folder.

Has anyone implemented anything like this before in their application? I am looking for ideas on how best to approach it, or confirmation that my current idea seems reasonable.
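The cache maintenance step in that plan (which dates to fetch, which files to delete) can be sketched as a pure function of the dates already on disk. This is a minimal Python sketch under the assumptions in the post: one file per date, only days after the newest file are fetched, and today itself is not queried. The name `plan_cache_update` is hypothetical.

```python
from datetime import date, timedelta


def plan_cache_update(cached: set, today: date, days_to_store: int):
    """Return (dates_to_query, dates_to_delete) for a one-file-per-date cache.

    cached        -- dates that already have a file in the cache folder
    days_to_store -- the "# of Days to Store" value from the ini file
    """
    oldest_kept = today - timedelta(days=days_to_store)
    to_delete = sorted(d for d in cached if d < oldest_kept)
    kept = {d for d in cached if d >= oldest_kept}
    # Start the day after the newest cached file, or at the window start if empty.
    start = max(kept) + timedelta(days=1) if kept else oldest_kept
    # Query every day from start up to and including yesterday.
    to_query = [start + timedelta(days=i) for i in range((today - start).days)]
    return to_query, to_delete
```

On a normal day this returns a single date to query (yesterday), so the 30 day query only happens when the cache is empty.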

-

Quick guess - is the buffer getting overrun/full? Your boolean control is set to a "latching" mechanical action, so when you ask it to send a command, you are most likely sending a LOT of commands (there is only a 5 ms wait in each loop). Also, you might want to wire the TCP reference through a shift register.