

Everything posted by Rolf Kalbermatter

-

Inelegant DLL Interface [long rant/question]

Rolf Kalbermatter replied to tkuiper's topic in Calling External Code

Why do you say this is inferior? It is not, because doing that is basically the only proper way to merge a C callback with LabVIEW dataflow programming. There are several possibilities, depending on your requirements.

1. By calling the PostLVUserEvent() function you can pass data back to LabVIEW, in fact any data you want, but you need to create LabVIEW-compatible native data for that (LabVIEW handles for strings and arrays). The event is then processed in the corresponding user event case of the event loop, so there is a possible problem with serialization of all the callbacks if they are processed in the same event structure.

2. You can use Occur() to trigger a LabVIEW occurrence. The advantage is that it is not serialized with other occurrences you might trigger from other callbacks, but the disadvantage is that you cannot pass data back with the occurrence trigger.

3. To solve the problem of the occurrence having no means to pass data, you can write your own queue code in C that holds elements the callback functions put in before they trigger the occurrence. When the occurrence is triggered in LabVIEW, you can read the queue for new data.

So it is definitely not inferior to do that. The only problem is that you do need to write some good and not very trivial C code, but that is no reason to call this inferior.

The main issue with this is not so much the fact that they need to access GOOP data or any LabVIEW data, but much more that it is a maintenance nightmare. As soon as those callback DLLs are not compiled in exactly the same version as the LabVIEW version you use to call them, those DLLs will execute in the corresponding LabVIEW runtime engine and as such are in fact almost out of process from the calling LabVIEW environment. This will indeed make sharing data between the DLL and the calling LabVIEW process impossible, but there are also other reasons why you would rather not do that.
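
Option 3 can be sketched in C roughly as follows. This is a minimal sketch, assuming POSIX threads for the lock; `CbQueue`, `cbq_push`, `cbq_pop` and the `notify` hook are hypothetical names. In a real DLL the hook is where the callback would call Occur() on an occurrence refnum handed in from LabVIEW; extcode.h is not included here, so that call only appears as a comment.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define CBQ_CAPACITY 64  /* hypothetical fixed queue depth */

/* One element produced by a C callback; the payload is hypothetical. */
typedef struct {
    int    channel;
    double value;
} CbItem;

typedef struct {
    pthread_mutex_t lock;
    CbItem items[CBQ_CAPACITY];
    size_t head;                /* index of the oldest element */
    size_t count;               /* number of stored elements */
    void (*notify)(void *ctx);  /* hypothetical stand-in for Occur(occurrence) */
    void *notify_ctx;
} CbQueue;

void cbq_init(CbQueue *q, void (*notify)(void *), void *ctx) {
    pthread_mutex_init(&q->lock, NULL);
    q->head = 0;
    q->count = 0;
    q->notify = notify;
    q->notify_ctx = ctx;
}

/* Called from the C callback: store the data first, then signal LabVIEW.
   Returns false if the queue is full and the element was dropped. */
bool cbq_push(CbQueue *q, CbItem item) {
    pthread_mutex_lock(&q->lock);
    bool stored = q->count < CBQ_CAPACITY;
    if (stored) {
        q->items[(q->head + q->count) % CBQ_CAPACITY] = item;
        q->count++;
    }
    pthread_mutex_unlock(&q->lock);
    if (stored && q->notify)
        q->notify(q->notify_ctx);  /* in a real DLL: Occur(occurrence); */
    return stored;
}

/* Called from LabVIEW (through a Call Library Node) after the occurrence
   fired: removes the oldest element, returns false if the queue is empty. */
bool cbq_pop(CbQueue *q, CbItem *out) {
    assert(out != NULL);
    pthread_mutex_lock(&q->lock);
    bool have = q->count > 0;
    if (have) {
        *out = q->items[q->head];
        q->head = (q->head + 1) % CBQ_CAPACITY;
        q->count--;
    }
    pthread_mutex_unlock(&q->lock);
    return have;
}
```

On the LabVIEW side the loop would wait on the occurrence and then call `cbq_pop` until it returns false, so elements posted by several callbacks between two wake-ups are all drained.
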
Actually, LabVIEW executables can serve as ActiveX Automation servers just as well as the LabVIEW development system. Check the build settings of your project for the place where you enable the ActiveX server and specify the name under which it will be registered. But the problem with ActiveX will probably be the same as with TCP/IP, since both are out-of-process technologies, so if TCP/IP won't work, ActiveX will likely be even worse.

Because ActiveX and .Net have a very strictly typed interface description from which LabVIEW can get all the information necessary to create the interfacing code for you. In comparison, direct C code interfaces have no formal description of the data types and calling interfaces at all. And no, a header file does not count as that by a very long stretch, since it is missing a lot of information that is sometimes buried somewhere in text documentation, but more often is simply the result of programmer intuition and trial and error with a good source-code-level debugger. These last three things are something LabVIEW is still several light years away from being able to do. Of course there could be something like the LabVIEW Call Library Configuration dialog to configure callback interfaces to VIs that could then be passed as function pointers to Call Library Nodes, but that dialog would likely be even more complicated than the Call Library Node dialog and, considering how much difficulty most LabVIEW users already have with the existing CLN, very hard to use for more than 99% of LabVIEW users.

Seems to me you got stuck between a rock and a hard place. Why even port 3.5 MB of C code to LabVIEW at all? From what I see, you actually need to decide things in the callback and return data from there to the caller of the callback, which means synchronous operation of the callback.
In that case you are right that the only possible solution would be to wrap LabVIEW VIs into a DLL, making sure this DLL is created in exactly the same LabVIEW version as your calling LabVIEW application, and then use GetProcAddress() to obtain those DLL function pointers and pass them to the Call Library Node as callback pointers. As I already said, this is going to be a rather messy maintenance nightmare, and there is another problem too! The C wrapper created for calling the actual VIs in those DLLs has to convert all variable-sized data from the C function pointer parameters into LabVIEW handles, and back into C pointers before returning. This can be a lengthy and performance-hungry process for the amount of data you seem to think would make a TCP/IP interface unfeasible. Rolf Kalbermatter -
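
To give an idea of the conversion cost mentioned above, here is a sketch of what such a wrapper has to do for every string parameter. It uses a hypothetical `MockLStr` type that only mimics the layout of a LabVIEW string handle (an int32 length followed by the bytes, no NUL terminator); real code would use `LStrHandle` from extcode.h and allocate through the LabVIEW memory manager (for example `NumericArrayResize()`), not `malloc()`.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical mock of the LabVIEW string layout: int32 length followed by
   the bytes, no NUL terminator. Not the real LStr type from extcode.h. */
typedef struct {
    int32_t cnt;
    unsigned char str[];  /* flexible array member holding cnt bytes */
} MockLStr;

typedef MockLStr **MockLStrHandle;  /* a handle is a pointer to a pointer */

/* C string -> handle: one allocation plus a copy on every call. */
MockLStrHandle c_to_handle(const char *s) {
    size_t n = strlen(s);
    MockLStrHandle h = malloc(sizeof *h);
    if (!h) return NULL;
    *h = malloc(sizeof(MockLStr) + n);
    if (!*h) { free(h); return NULL; }
    (*h)->cnt = (int32_t)n;
    memcpy((*h)->str, s, n);
    return h;
}

/* Handle -> caller-supplied C buffer (NUL-terminated, truncated to
   bufsize - 1 bytes); returns the number of bytes copied. */
size_t handle_to_c(MockLStrHandle h, char *buf, size_t bufsize) {
    assert(h && *h && buf && bufsize > 0);
    size_t n = (size_t)(*h)->cnt;
    if (n > bufsize - 1) n = bufsize - 1;
    memcpy(buf, (*h)->str, n);
    buf[n] = '\0';
    return n;
}

void handle_free(MockLStrHandle h) {
    if (h) { free(*h); free(h); }
}
```

Every call through such a callback pays for an allocation and a copy in each direction per string or array parameter, which is where the performance concern comes from.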

Sending an array of clusters to a C dll

Rolf Kalbermatter replied to c_w_k's topic in Calling External Code

Yes, you have to! Don't configure those as strings, since LabVIEW strings are neither C string pointers nor fixed-size strings. Leave them as byte clusters as you did before and convert them to strings after you get the data from the DLL. You need to set up the clusters just the same as before, but doing this with Adapt to Type and passing it as a C array pointer (only possible in LabVIEW 8.2 and above) will save you the hassle of typecasting/unflattening and byte and word swapping. Rolf Kalbermatter -
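
On the C side such a cluster typically corresponds to something like the following. The `Item` struct, `NAME_LEN` and `field_to_string` are hypothetical, assuming the DLL uses a NUL-padded fixed-size name field. It shows why a LabVIEW string does not fit (the characters live inside the struct, not behind a pointer) and what the conversion after the call amounts to.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define NAME_LEN 16  /* hypothetical fixed field size used by the DLL */

/* Hypothetical element of the array the DLL fills: the name is a byte
   field inside the struct, not a pointer to a string. */
typedef struct {
    int32_t id;
    char    name[NAME_LEN];  /* NUL-padded, no terminator if completely full */
} Item;

/* Convert the fixed-size field into a proper C string after the call,
   the counterpart of converting the byte cluster to a string in LabVIEW. */
size_t field_to_string(const char field[NAME_LEN], char out[NAME_LEN + 1]) {
    assert(field != NULL && out != NULL);
    const char *end = memchr(field, '\0', NAME_LEN);
    size_t n = end ? (size_t)(end - field) : NAME_LEN;
    memcpy(out, field, n);
    out[n] = '\0';
    return n;
}
```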

Installer image

Rolf Kalbermatter replied to cs_BOT's topic in Application Builder, Installers and code distribution

Very easy by using your own installer technology, like InnoSetup or InstallShield. Rolf Kalbermatter -

Documenting State Machines

Rolf Kalbermatter replied to Cat's topic in Development Environment (IDE)

Well, I do sometimes have a cluster, but it contains only things that are specific to the entire state machine, such as a flag to remember the previous state for cases where I need special handling depending on which state the current state was entered from. Of course this could be avoided entirely with a different state separation, but sometimes it is easier to add such special handling after the fact than to redesign several states more or less completely. Rolf Kalbermatter -

Documenting State Machines

Rolf Kalbermatter replied to Cat's topic in Development Environment (IDE)

I personally wouldn't do that either. I would tend to put those object references into functional globals too. (But that would be isolating and encapsulating the object itself into a sort of higher class, which I do tend to do in my current functional global design, so that might be why I can't see the additional benefit of getting LVOOP involved.) Rolf Kalbermatter -

How to rotate a control/indicator object

Rolf Kalbermatter replied to menghuihantang's topic in User Interface

If the clock hands do not need to look exactly like this, you could use a gauge. Otherwise it will not really work in LabVIEW anyhow. The hands would need to be imported as graphics, and doing that as vector graphics would be difficult to impossible in all versions of LabVIEW so far, while rotating bitmaps is a very ugly thing to do. Rolf Kalbermatter -

Documenting State Machines

Rolf Kalbermatter replied to Cat's topic in Development Environment (IDE)

It seems to me you are keeping all the data the states work on in this shift register. In most cases this is not a very good idea, as it ties the entire state machine implementation very strongly to the data of all states, even though most states will only work on some specific part of that data. There is also a possible performance problem if your state data cluster grows larger and larger over time. I have only a very limited state data cluster in my state machines, and it is limited to data that is important for most of the states and directly relevant to the state machine itself. The rest of my application data is stored in various functional variables (uninitialized shift registers with different methods). (And yes, I know it is a lot like doing LVOOP without the formal framework of LVOOP and I should be looking into using LVOOP, but I have not yet found the drive and time to do so.) The various VIs pull whatever data they need from those functional variables when and where they need it, and put the data back when needed. This keeps the state machine implementation very much decoupled from the application data itself and allows for much easier additions and modifications later on. The state machine itself will not document what data it uses, but I feel this information is not really part of the state machine design itself. It should be defined on a much higher level (application design specification, anyone?). In most cases the individual data should not really matter to the different states; what you want to know is which state transitions your state machine involves, and when, where and how. Rolf Kalbermatter -
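
For readers coming from text languages, a functional variable corresponds roughly to a function with static storage and an action selector. This is a hypothetical C sketch (`config_rate_fg` and its wrappers are illustrative names, and the default rate is made up), not LabVIEW code.

```c
#include <assert.h>

/* Hypothetical methods of the functional variable, like the enum input of an FGV. */
typedef enum { CFG_INIT, CFG_SET_RATE, CFG_GET_RATE } CfgAction;

/* Single entry point: the static variables play the role of the
   uninitialized shift register and persist between calls. */
double config_rate_fg(CfgAction action, double value) {
    static double rate;
    static int initialized;
    switch (action) {
    case CFG_INIT:     rate = 1000.0; initialized = 1; break;  /* made-up default */
    case CFG_SET_RATE: assert(initialized); rate = value; break;
    case CFG_GET_RATE: break;
    }
    return rate;
}

/* Thin wrappers, comparable to the small wrapper VIs the states call. */
void   config_init(void)  { config_rate_fg(CFG_INIT, 0.0); }
void   set_rate(double r) { config_rate_fg(CFG_SET_RATE, r); }
double get_rate(void)     { return config_rate_fg(CFG_GET_RATE, 0.0); }
```

One difference worth noting: a non-reentrant VI serializes all calls automatically, while this C version would need a mutex if it were called from several threads.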

Sending an array of clusters to a C dll

Rolf Kalbermatter replied to c_w_k's topic in Calling External Code

Well, Swap Words swaps the two 16-bit values in a 32-bit value. It has no effect whatsoever on 16-bit values (words) or 8-bit values (bytes). So you do not need to apply Swap Bytes and Swap Words to each individual value, but can simply apply them to the cluster wire itself. They will swap where necessary but no more. Rolf Kalbermatter -
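
The following C sketch mimics what the two primitives do, assuming the semantics described above. The function names and the `Cluster` type are hypothetical, chosen only to show that running a mixed-size cluster through both swaps reverses each element according to its own size.

```c
#include <assert.h>
#include <stdint.h>

/* "Swap Bytes" on a 16-bit value: exchange high and low byte. */
uint16_t swap_bytes16(uint16_t v) {
    return (uint16_t)((v << 8) | (v >> 8));
}

/* "Swap Bytes" on a 32-bit value: swap the bytes inside each 16-bit half. */
uint32_t swap_bytes32(uint32_t v) {
    return ((v & 0x00FF00FFu) << 8) | ((v & 0xFF00FF00u) >> 8);
}

/* "Swap Words" on a 32-bit value: exchange the two 16-bit halves.
   For 8- and 16-bit values there is nothing to do. */
uint32_t swap_words32(uint32_t v) {
    return (v << 16) | (v >> 16);
}

/* Hypothetical cluster with mixed element sizes. */
typedef struct {
    uint8_t  flags;
    uint16_t count;
    uint32_t value;
} Cluster;

/* Wiring the whole cluster through both primitives: each element is
   swapped only as far as its size requires. */
Cluster swap_cluster(Cluster c) {
    /* flags: 8 bit, left untouched by both primitives */
    c.count = swap_bytes16(c.count);               /* Swap Words: no-op on 16 bit */
    c.value = swap_words32(swap_bytes32(c.value)); /* full byte reversal */
    return c;
}
```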

Sending an array of clusters to a C dll

Rolf Kalbermatter replied to c_w_k's topic in Calling External Code

Adam, you missed in your previous post that the original problem was not about interfacing to a DLL specifically made for LabVIEW, but about interfacing to an already existing DLL. There you normally have no way to make it use LabVIEW data handles! Your last option is, however, a good one. I had missed that possibility, since Pass: Array Data Pointer for Adapt to Type is a fairly new feature of the Call Library Node; it seems it was introduced with LabVIEW 8.2. And no pragma is necessary (or even possible, since the DLL already exists), as the structure already uses filler bytes to align everything to the element size boundaries itself. The void arg[] datatype generated by LabVIEW is not really wrong, as it is fully valid C code; it is just not as perfect as LabVIEW could make it. I guess when Pass: Array Data Pointer was added to Adapt to Type, the developer did not feel like adding extra code to generate a perfect parameter list and instead took the shortcut of printing a generic void data pointer. Rolf Kalbermatter -
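
As an illustration of such a layout, here is a hypothetical record type with explicit filler bytes. The compile-time checks confirm that every member already sits on a boundary equal to its own size, so no #pragma pack is needed. `Record` and `fill_records` are made-up names standing in for the existing DLL's types and export; `fill_records` takes a typed pointer where the generated prototype uses a generic one.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical record of an existing DLL: the explicit filler byte keeps
   every member on a boundary equal to its own size. */
typedef struct {
    uint8_t  flag;       /* offset 0 */
    uint8_t  filler1;    /* offset 1: explicit filler */
    uint16_t channel;    /* offset 2 */
    uint32_t value;      /* offset 4 */
    double   timestamp;  /* offset 8 */
} Record;

/* No hidden padding: the offsets are what the filler bytes imply. */
_Static_assert(offsetof(Record, channel) == 2, "channel must sit at offset 2");
_Static_assert(offsetof(Record, value) == 4, "value must sit at offset 4");
_Static_assert(offsetof(Record, timestamp) == 8, "timestamp must sit at offset 8");
_Static_assert(sizeof(Record) == 16, "no trailing padding expected");

/* Hypothetical stand-in for the DLL export, with a typed array parameter. */
int32_t fill_records(Record *arg, int32_t count) {
    assert(arg != NULL || count == 0);
    for (int32_t i = 0; i < count; i++) {
        memset(&arg[i], 0, sizeof arg[i]);
        arg[i].channel = (uint16_t)i;
        arg[i].value = (uint32_t)(i * 10);
    }
    return count;
}
```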

I tried to report http://lavag.org/top...dpost__p__63616 as possible spam but got an error message. The same happened earlier in other subforums with other messages. Rolf Kalbermatter Admin edit: The report didn't make it through to the report center.

-

Flattened LVOOP Class

Rolf Kalbermatter replied to Eugen Graf's topic in Object-Oriented Programming

Hmm, looking at the data I wonder if you mixed up Little Endian and Big Endian here, especially since all other LabVIEW flattened formats use Big Endian. The first four bytes making up Num Levels look to me to be in MSB-first (most significant byte first) format, which has traditionally always been referred to as Big Endian. Of course, when adding a new datatype you guys would be free to decide to flatten its data differently, but the example suggests that you stayed with the predominant Big Endian flatten format used for all other flattened data in LabVIEW. Therefore byte reversing would by now be necessary on every platform except the PowerPC-based cRIOs. As to the padding of Pascal strings, they have traditionally been padded to a 16-bit boundary, but I guess that was in fact a remnant of LabVIEW's 68000 platform roots, where 16-bit padding was quite common to avoid accessing non-byte integers on odd byte addresses. Rolf Kalbermatter -
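
Reading such flattened data portably means assembling multi-byte values explicitly instead of casting the buffer. A minimal C sketch, assuming a 4-byte Big Endian count (like Num Levels) and assuming the Pascal string padding rule is 16-bit (2-byte) alignment, which is what avoiding odd addresses on the 68000 requires; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Read a 32-bit unsigned integer stored most significant byte first
   (Big Endian), independent of the host CPU's byte order. */
static uint32_t read_be32(const uint8_t *p) {
    assert(p != NULL);
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* Bytes occupied by a Pascal string (1 length byte + characters),
   assuming it is padded to an even (16-bit) byte count. */
static size_t padded_pstring_size(const uint8_t *p) {
    assert(p != NULL);
    size_t raw = 1u + p[0];           /* length byte plus payload */
    return (raw + 1u) & ~(size_t)1u;  /* round up to an even count */
}
```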

ActiveX container and Web browser

Rolf Kalbermatter replied to Bjarne Joergensen's topic in Calling External Code

You can certainly report it, but I'm not sure it would amount to a real bug. Most likely the ActiveX control does something with the container when it is activated, so the message you get is most likely technically correct. Rolf Kalbermatter -

No, the LabVIEW runtime does not stay in memory, but Windows does something called application caching. Applications and DLLs, once loaded, stay in a special cache from which they can be activated almost instantaneously on subsequent launches. Without this, Internet Explorer would be such a pain to use that nobody would use it anymore. In the case of IE, Microsoft cheats even more, as most of the IE engine is part of the shell that gets loaded when you start up Windows and the Explorer instance that displays the desktop. Rolf Kalbermatter

-

GOOP Developer free of charge for commercial use

Rolf Kalbermatter replied to Kurt Friday's topic in Announcements

I want to see an NI marketing announcement where they mention anything other than the advantages of their product over competitors (besides a feature list and one or more enthusiastic user comments)! Rolf Kalbermatter -

No scripting license from NI for LabVIEW < 8.6, and I don't really expect that to come. You can get it to work on non-Windows versions by copying some files and editing the INI file, but on Windows there is no way to get it to work without help from NI to get the right license file, and as I already said, I don't see that happening anytime soon. With the next version of LabVIEW just around the corner, the chances get even smaller. Rolf Kalbermatter

-

GOOP Developer free of charge for commercial use

Rolf Kalbermatter replied to Kurt Friday's topic in Announcements

It's obvious you are not a marketing guy. What marketing guy would post both advantages and disadvantages in the promotion of their product? But it is definitely a very likeable trait. Rolf Kalbermatter -

LabVIEW video/image toolkits at low price now!

Rolf Kalbermatter replied to Irene_he's topic in Announcements

Well, if you talk with them and really want to get 100 license packs or a multiple of that, I'm sure Irene would be more than happy to give you a substantial discount. Of course, claiming you will distribute 100 apps while really only wanting 2 runtime licenses now, but at the 100-license discount anyhow, always sounds a bit lame. Rolf Kalbermatter -

I'm the same! And I know I'm getting old, which is maybe the main reason for being that way! Rolf Kalbermatter

-

NI-Week Session: Advanced Error Handling in LabVIEW

Rolf Kalbermatter replied to crelf's topic in NIWeek

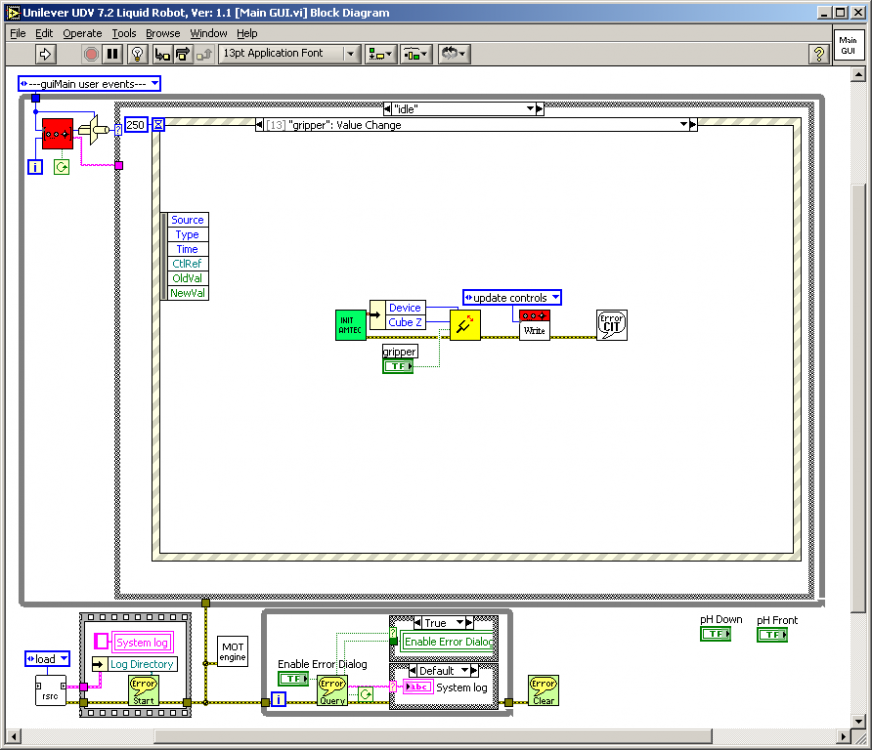

Yes, my error handling goes back to LabVIEW 5 or maybe even 4, so no events there. Instead I have an FGV that uses occurrences to do the event handling. The FGV has a method to initialize it, a method to post an event/error from anywhere, a method to poll the FGV, and a method to shut it down. All those methods are wrapped in easy-to-use wrapper VIs that completely hide the complexity of calling the FGV. A condensed overview of what each method does:

start: creates the occurrence, sets the log path where all events/errors get logged, and prepares the message buffer.

post: has two wrappers. The trivial one just logs system events, such as when the application was started or when a configuration was changed/saved, or anything else that might be interesting to record but does not indicate any form of difficulty or problem. The other wrapper is the most complex, as it actually does everything the NI error handler VI does, and on first execution it adds custom error codes from *.err files in a central location. The FGV method itself formats the message and adds it to the message buffer, does the logging to disk, and sets the occurrence.

query: its wrapper goes into its own loop on the main diagram and uses Wait on Occurrence to wait for an update. It also returns the contents of the message buffer as a string to display in a log string on the UI if desired. The most important thing it does is display a non-modal dialog for all errors if that option is enabled. The dialog contains a checkbox to disable that option, to prevent error dialog flooding. A boolean output indicates when the FGV has been shut down, so the error handler loop terminates too.

shutdown: sets the occurrence and the internal shutdown flag so the error handler loop will terminate properly.

clear: clears all internal buffers (optional and not really necessary).

Adding this to any application is fairly easy.
It just needs a tiny loop somewhere in the main VI, and the initialization VI called at application initialization. The event poster can be added anywhere, and the error poster goes everywhere one would otherwise use the NI Error Handler VI. On terminating, I call the Shutdown VI and the whole application terminates properly, including the error handler loop. Here is a use in a fairly simple application: Rolf Kalbermatter -
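
The structure of that FGV translates fairly directly into C. The following is a hypothetical sketch: static variables play the role of the uninitialized shift registers, a mutex provides the serialization a non-reentrant VI gets for free, and a POSIX condition variable stands in for the occurrence. Disk logging, the error dialog and loading of *.err files are left out, and `error_fgv` and the `EH_*` names are illustrative only.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

typedef enum { EH_START, EH_POST, EH_QUERY, EH_SHUTDOWN, EH_CLEAR } EhMethod;

#define EH_BUF_SIZE 1024

/* Returns true while the handler is running. EH_QUERY blocks until a post
   or shutdown happened, then copies the message buffer into out. */
bool error_fgv(EhMethod method, const char *msg, char *out, size_t outsize) {
    static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
    static pthread_cond_t  occurrence = PTHREAD_COND_INITIALIZER;
    static char buffer[EH_BUF_SIZE];
    static bool pending = false, stopped = false;

    pthread_mutex_lock(&lock);
    switch (method) {
    case EH_START:
        buffer[0] = '\0';
        pending = stopped = false;
        break;
    case EH_POST: {
        assert(msg != NULL);
        size_t used = strlen(buffer);
        /* append "msg\n", truncating when the buffer is full;
           a real implementation would also log to disk here */
        snprintf(buffer + used, sizeof buffer - used, "%s\n", msg);
        pending = true;
        pthread_cond_signal(&occurrence);     /* "set the occurrence" */
        break;
    }
    case EH_QUERY:
        while (!pending && !stopped)          /* "wait on occurrence" */
            pthread_cond_wait(&occurrence, &lock);
        pending = false;
        if (out && outsize > 0)
            snprintf(out, outsize, "%s", buffer);
        break;
    case EH_SHUTDOWN:
        stopped = true;
        pthread_cond_broadcast(&occurrence);  /* wake the handler loop */
        break;
    case EH_CLEAR:
        buffer[0] = '\0';
        break;
    }
    bool running = !stopped;
    pthread_mutex_unlock(&lock);
    return running;
}
```

The error handler loop would simply call `error_fgv(EH_QUERY, ...)` repeatedly and exit once it returns false, which happens after `EH_SHUTDOWN` has been called.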

If the application is an ActiveX or .Net component and can be used as a container object (as opposed to an ActiveX/.Net Automation interface, which only provides an interface that can be accessed through Property/Method Nodes), then you can do it through the corresponding container element in LabVIEW. Otherwise there is only an obscure possibility: making the main window of the process you want to embed a child window of the LabVIEW window. This in fact requires only a few Windows API calls, but it is a rather obscure way of doing business. There is typically no way to control the application at all; starting the application and embedding it into LabVIEW is an ugly process, as the application will first start up as an independent process through System Exec and then suddenly get forced into the LabVIEW front panel; and last but not least, depending on non-standard menu and window handling of such applications, it can result in strange visual artifacts or even inoperability of the (child) app. While it is theoretically possible, the most likely reaction you would get from your users is a big: WTF!!!!!!!! Rolf Kalbermatter

-

VIpreVIEW - Interactive VI preview

Rolf Kalbermatter replied to vugie's topic in Code In-Development

I don't think it is as much a legal problem as it is a technical one. Creating a file format is not something that can be legally prohibited. Only copying it could be, but IMHO this does not apply here. However, there are many aspects of a VI that are not accessible even through scripting, so you cannot create a fully descriptive alternate file format. And reverse engineering the original format, while IMO a futile exercise anyway, would violate the LabVIEW license you accept when installing it. Rolf Kalbermatter -

Based on what criteria? I guess one of the LabVIEW style guides would be a good read for you. There are also books like LabVIEW GUI: Essential Techniques by David Ritter, and many more on Amazon or at your favorite bookstore. Many posts can be found on the NI site too, like http://zone.ni.com/d.../tut/p/id/5319. A Google search for "LabVIEW (G)UI Design" will likely give you more hits than you can digest. And most importantly, while there are good basic rules, what makes a pleasant UI is also to some degree a matter of taste, and we all know that taste is something that can not always be argued about. Rolf Kalbermatter

-

Programmatically create axes for X-Y Graph?

Rolf Kalbermatter replied to Scatterplot's topic in User Interface

I think it is not even possible currently, as the number of available scales seems to be fixed at compile time. So there is no way you can do that on a running VI or in a built application. Rolf Kalbermatter