Posts posted by Gary Rubin

-

-

Does anyone know if it is possible in LV7.1 to rotate a string indicator to make it vertical, like the Y-axis labels on graphs/plots? I'm interested in modifying the cursor palette so that it's a long vertical strip, rather than a long horizontal strip. I can go in and customize the cluster so it is stacked vertically, but the width is still defined by the length of the text indicators.

Thanks,

Gary

-

Express VIs are to LabVIEW what crayons are to art: very few serious artists use them in their work, but they get a lot of people to try their hand at art, and a few of the people who try will become serious artists themselves. Those serious artists typically outgrow crayons, except where crayons really make sense in their work.

Nice analogy! :thumbup:

I never use Express VIs. I tend to use LabVIEW for data processing, or for data collection using third-party acquisition boards, so the DAQ Assistant that everyone else is using isn't very useful to me. Because I'm usually dealing with high data rates and/or very large data sets, I try to maximize my code's performance, which means that the extra overhead associated with Express VIs scares me.

Gary

-

I think this is the main difference between the two VIs. Maybe adding another case (-1) where the data just flows through would give you a better solution.

That did not change anything, which I guess I would expect based on bsvingen's explanation:

The indicator can have different dimension compared to the control, so a separate buffer for the control is set.

That's a good question.

Sorry, but I didn't see that at first. I think the answer is that the control and indicator have separate memory allocation rules, different from wires and subroutines. The indicator can have different dimension compared to the control, so a separate buffer for the control is set. I ALWAYS make sure that I do not change the array dimension in subroutines unless that is part of the functionality. I learned that from trial and error and from boards like this. I think your example shows the importance of this, although *exactly* what is going on inside the compiler is still a bit of a mystery to me.

Thanks,

I'll try Ton's suggestion too, although at this point, all of this is mostly academic, since I have the other version of the subvi that works more efficiently.

Michael A., if you happen to read this, what do you think of an Optimization forum?

Gary

-

Whenever you change the size of an array, memory needs to be allocated/deallocated. Therefore, if the array dimension entering the tunnel on one side is different from the dimension on the other side, LabVIEW creates a buffer. Replace Array Subset conserves the dimension, while Build Array changes it. This has to be so even though the dimension does not really change in your VI, because LabVIEW has no way of knowing that the dimension of the new data is the same as the dimension of the deleted data.

So that would explain the allocation at the tunnel, but why another allocation at the indicator?

-

Hmm....I get some strange results with that VI.

Can you define "strange results"?

Dumb question, but did you put anything in the for loops?

If I paste your nested loops into one of my for loops, and run it 10 times (all the patience I have right now), I get 490ms per iteration on my 2.4GHz WinXP desktop.

Gary

-

Not much of a bench test, really. But now I'm curious about the differences in speed I might see between the systems. Has anyone else noted differences in execution speeds between LV for Windows and LV for Linux?

I'm quite interested in this too.

I'm also attaching the benchmark VI I use for comparing the speed of two different routines, but it can of course be used for testing a single routine.

The higher the number of iterations, the better your answer will be.
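
For readers without the attachment, the same idea can be sketched in text form. This is only a rough Python analogue, not the attached VI: the function names and the two sample routines are made up for illustration. It times two routines over many iterations and reports the mean time per call.

```python
import time


def benchmark(routine, iterations):
    """Return the mean wall-clock time per call, in milliseconds."""
    start = time.perf_counter()
    for _ in range(iterations):
        routine()
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / iterations


def compare(routine_a, routine_b, iterations=1000):
    """Time two routines with the same iteration count."""
    return benchmark(routine_a, iterations), benchmark(routine_b, iterations)


# Two hypothetical routines that produce the same result in different ways.
def build_with_append():
    out = []
    for i in range(1000):
        out.append(i * i)
    return out


def build_preallocated():
    out = [0] * 1000
    for i in range(1000):
        out[i] = i * i
    return out


if __name__ == "__main__":
    a_ms, b_ms = compare(build_with_append, build_preallocated)
    print(f"append: {a_ms:.4f} ms/call, preallocated: {b_ms:.4f} ms/call")
```

Averaging over more iterations smooths out timer resolution and scheduling noise, which is why a larger iteration count gives a more trustworthy number.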

Gary

-

Hello all.

First some background: I want to essentially create an Intensity Plot that scrolls down instead of to the left. There are a couple of ways to implement this, and I wanted to determine the speed trade-off associated with using Delete from Array and Build Array vs. Replace Array Subsets. I've gotten it working with no problems, and found that the Replace Array Subset approach is much faster. Along the way, however, I discovered something that perplexed me a bit and was wondering if any of you gurus out there had any thoughts on this.

The attached subvi does the following:

If the graph is not "full" yet, it simply replaces a column in a preallocated array.

If the graph is full, it deletes the first column and tacks on a new one.

If I use "Show Buffer Allocations", I see an allocation at the output of the Build Array, at the tunnel, and at the indicator. The Replace Array Subset solution (also attached) does not have these buffer allocations, and therefore runs much faster, even in the False case in which the two subVIs are doing the same thing.

That leads to my question: Aren't some of those buffer allocations redundant? Why is there an allocation at the tunnel AND at the indicator, while there's no similar allocation in the Replace Array Subset implementation?
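
For anyone without LabVIEW handy, the two strategies can be sketched roughly in Python. This is only an analogy: Python's memory model is not LabVIEW's, the class names are invented, and the circular write index in the second class is an assumption about how an in-place version might scroll, not necessarily what the attached VI does. The first class is also simplified to always append while filling.

```python
class DeleteAndBuild:
    """Scroll by deleting the oldest column and appending the new one."""

    def __init__(self, width):
        self.width = width
        self.columns = []

    def push(self, column):
        if len(self.columns) == self.width:
            del self.columns[0]  # every remaining column shifts down
        self.columns.append(column)

    def view(self):
        return list(self.columns)


class ReplaceInPlace:
    """Scroll by overwriting the oldest slot of a preallocated buffer."""

    def __init__(self, width):
        self.width = width
        self.columns = [None] * width  # allocated once, never resized
        self.next_slot = 0
        self.count = 0

    def push(self, column):
        self.columns[self.next_slot] = column
        self.next_slot = (self.next_slot + 1) % self.width
        self.count = min(self.count + 1, self.width)

    def view(self):
        # Return columns oldest-first; this copy is only for display.
        if self.count < self.width:
            return self.columns[:self.count]
        return self.columns[self.next_slot:] + self.columns[:self.next_slot]
```

Both classes show the same columns in the same order; the difference is that `ReplaceInPlace.push` never changes the size of its buffer.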

Some day, maybe I'll actually understand and therefore not be surprised by some of Labview's allocations.

Thanks,

Gary

-

Does anyone know what sorting algorithm is used in Labview's native 1D sort function?

Thanks,

Gary

-

You could convert the 2D array into a 1D array of clusters.

The cluster would consist of as many scalars as you have columns in your 2D array. Build the clusters such that the primary sort column is the first element of the cluster, the secondary sort column is second, etc.

Sort the 1D array of clusters using Labview's 1D Array Sort.

Convert the Cluster back into a 2D array, putting the columns back in the correct order.

This may or may not be practical to implement, depending on the number of columns you have.
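
The steps above can be sketched in text form. This is a Python analogue rather than LabVIEW code: tuples stand in for clusters, and the function name is made up for illustration.

```python
def sort_rows_by_columns(rows, sort_columns):
    """Sort rows of a 2D list by the given columns (primary first)."""
    n_cols = len(rows[0]) if rows else 0
    # Key columns go first (primary, secondary, ...), then the rest.
    order = list(sort_columns) + [c for c in range(n_cols) if c not in sort_columns]

    # 2D array -> 1D array of "clusters" with the fields reordered.
    clusters = [tuple(row[c] for c in order) for row in rows]

    # Tuples compare element by element, so the first field dominates.
    clusters.sort()

    # Clusters -> 2D array, putting each column back where it came from.
    restored = []
    for cluster in clusters:
        row = [None] * n_cols
        for position, c in enumerate(order):
            row[c] = cluster[position]
        restored.append(row)
    return restored


rows = [[2, 9, 1], [1, 5, 3], [2, 4, 0], [1, 5, 2]]
# Sort by column 1 first, then by column 0.
print(sort_rows_by_columns(rows, [1, 0]))
# -> [[2, 4, 0], [1, 5, 2], [1, 5, 3], [2, 9, 1]]
```

Note that the non-key columns end up as tie-breakers, since they are still part of each cluster.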

-

Well, that's somewhat disappointing.

Thank you very much.

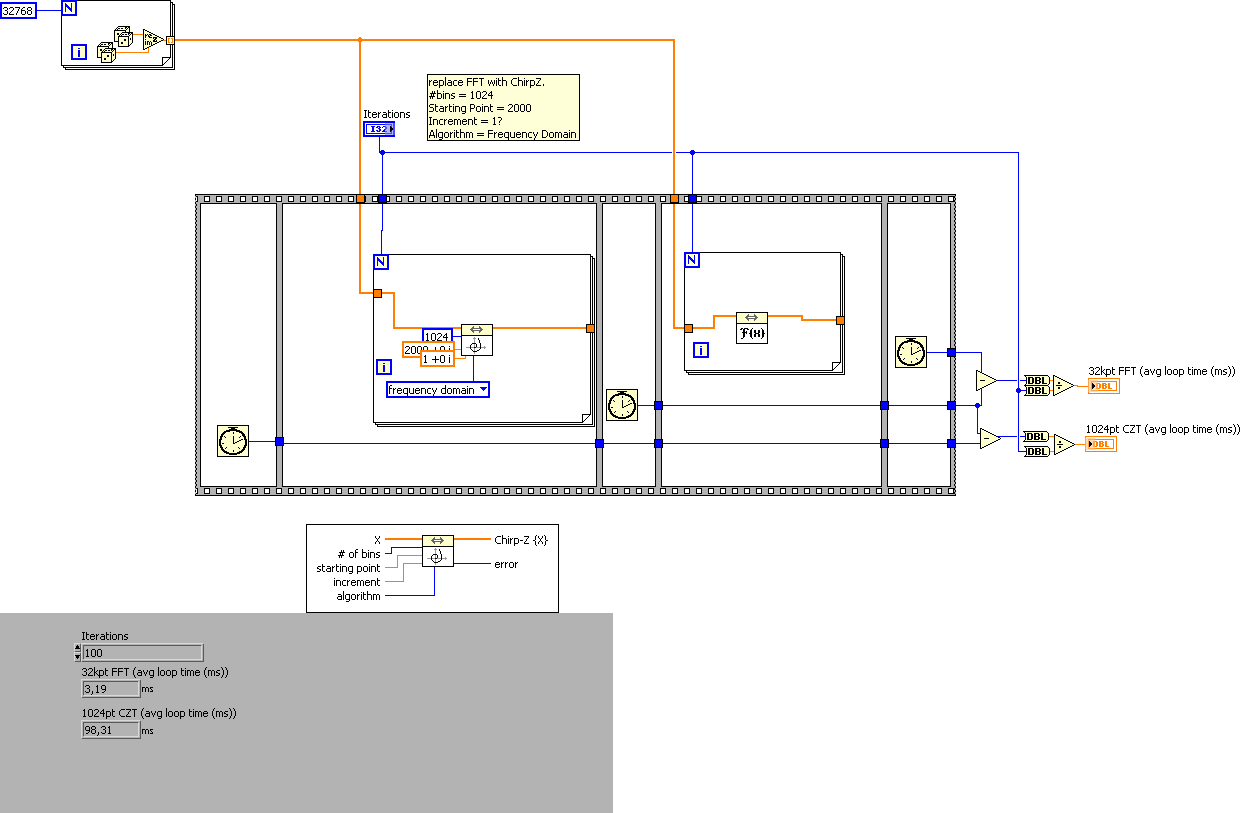

Interestingly, your FFT is approximately twice as fast as mine.

I'm running LV7.1 on a 2.4 GHz P4 w/ 1GB RAM. I wonder if it's all because of differences in machines, or whether NI has recoded their FFT between 7.1 and 8.

Thanks again,

Gary

-

I would like to evaluate the relative speeds of a 32k point FFT vs. a 1024 pt Chirp-Z transform. The Chirp-Z transform is available in the non-base version of LV8. I am still on LV7.1.

Is there anyone with LV8 who would be willing to insert the Chirp-Z subvi into the attached benchmarking vi, run it, and tell me what kind of speed results you get? I understand that the speeds will be dependent on implementation, and this will eventually be implemented in something other than Labview, but this will at least give me a ballpark estimate of the relative speeds.

Thanks!

Gary

-

Yes.

Great, thank you for the information.

Gary

-

That depends on whether the driver DLLs have the same interface on Linux as they do on Windows.

By that do you mean the same function names, parameters, etc.?
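
To make the question concrete, here is a small sketch of what I have in mind, written in Python with ctypes rather than a Call Library Function Node. The library name "mydriver" is hypothetical, and the file-naming rules shown are only the usual conventions; a vendor may name things differently.

```python
import ctypes
import platform


def library_file_name(base_name, system=None):
    """Return the conventional shared-library file name for a platform."""
    system = system or platform.system()
    if system == "Windows":
        return f"{base_name}.dll"
    if system == "Darwin":
        return f"lib{base_name}.dylib"
    return f"lib{base_name}.so"  # Linux and most other Unix-like systems


def load_driver(base_name):
    """Load the platform's copy of the driver library (hypothetical name)."""
    return ctypes.CDLL(library_file_name(base_name))
```

If the exported function names and parameters match on both platforms, only the file being loaded changes and the calling code stays the same.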

-

I'm looking to transition a project from Windows to Linux. The Windows version uses Call Library Function Nodes to make calls to dll's associated with hardware drivers. Given that all of my hardware has Linux drivers available, do the calls to the drivers work pretty much the same way in the Labview Linux environment as in Windows?

Thanks,

Gary

-

...FFTs of the data and I believe subtracting the mean value out will cause problems with these FFT programs.

If the data varies about a constant mean value, that value will only be represented in the 0-Hz bin of the FFT. Subtracting this mean value prior to calculating the FFT should only affect a very small number of points in the FFT. Again, that's assuming that the mean value is constant across the data, and therefore looks like a DC bias.
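
A quick numerical check of this, sketched in Python with a plain DFT instead of a library FFT. The test signal (a sine on top of a 2.5 offset) is made up, and no window is applied.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform, O(n^2), for illustration only."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def remove_mean(samples):
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]


# 32 samples of a 3-cycle sine riding on a constant offset of 2.5.
signal = [2.5 + math.sin(2 * math.pi * 3 * t / 32) for t in range(32)]

raw = dft(signal)
centered = dft(remove_mean(signal))

# Bins whose value changed when the mean was subtracted.
changed_bins = [k for k in range(32) if abs(raw[k] - centered[k]) > 1e-9]
print(changed_bins)  # -> [0]
```

With a window applied before the FFT, the DC term leaks into a few neighboring bins, which is why only a small number of points near 0 Hz would be affected in practice.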

-

A block diagram would help. I assume the problem has to do with shift registers and the placement of the indicator within the diagram, but nobody can say for certain without seeing the code.

Gary

-

Convolution can also be implemented MUCH faster in the frequency domain, using FFTs.

See here.
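
As a rough illustration of the idea, here is a minimal Python sketch: zero-pad both inputs, transform, multiply, and transform back. The small recursive radix-2 FFT is written out only to keep the example self-contained; it assumes non-empty inputs and is not tuned for speed.

```python
import cmath


def fft(values, inverse=False):
    """Recursive radix-2 FFT; len(values) must be a power of two."""
    n = len(values)
    if n == 1:
        return list(values)
    sign = 1 if inverse else -1
    even = fft(values[0::2], inverse)
    odd = fft(values[1::2], inverse)
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def fft_convolve(a, b):
    """Linear convolution of two real sequences via the frequency domain."""
    out_len = len(a) + len(b) - 1
    size = 1
    while size < out_len:  # pad to a power of two to avoid wrap-around
        size *= 2
    fa = fft([complex(x) for x in a] + [0j] * (size - len(a)))
    fb = fft([complex(x) for x in b] + [0j] * (size - len(b)))
    product = [x * y for x, y in zip(fa, fb)]
    result = fft(product, inverse=True)
    return [value.real / size for value in result[:out_len]]


def direct_convolve(a, b):
    """Time-domain convolution, O(len(a) * len(b)), for comparison."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out
```

The two functions agree to within rounding error. The speed advantage only shows up for long inputs, where the O(N log N) transforms beat the O(N^2) direct sum.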

-

Thanks again, Pete. I know some more about my hardware now. I thought of something, and my problem now turns into this: let's say I want to compare two successive signals, and if the first signal is zero and the second signal is not zero, I'll increase my count by 1. Would you help modify my program? I am sweating over here as the deadline is around the corner. I use a random number generator in place of my signal. Thanks in advance.

I might not completely understand what exactly you're trying to do, but this is one way to check to see if a number has crossed a threshold. You can make it more complex by adding more shift registers and more logic.
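
In text form, the logic looks roughly like the Python sketch below. It is not the attached VI; the function names are made up, the `previous` variable plays the role of the shift register, and the first sample is assumed to have no predecessor to compare against.

```python
def count_zero_to_nonzero(samples):
    """Count how often a zero sample is followed by a non-zero sample."""
    count = 0
    previous = None  # stands in for the shift register
    for current in samples:
        if previous is not None and previous == 0 and current != 0:
            count += 1
        previous = current  # carry this sample into the next iteration
    return count


def count_rising_crossings(samples, threshold):
    """Count how often the signal goes from at/below to above a threshold."""
    count = 0
    previous = None
    for current in samples:
        if previous is not None and previous <= threshold < current:
            count += 1
        previous = current
    return count


print(count_zero_to_nonzero([0, 0, 3, 0, 5, 5, 0, 0, 1]))         # -> 3
print(count_rising_crossings([0.1, 0.6, 0.7, 0.2, 0.9], 0.5))     # -> 2
```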

Gary

-

Labview happens to be my "glue" tool to get all the computers and instruments working together, although typically I see my final product as being the data I provide - not the LabView VI's that I develop.

I think I'm in the same boat as Pete on this.

I typically use LabVIEW for two types of applications. The first is to provide control and a user interface for high-speed data acquisition systems. These are typically non-NI A/Ds, so LabVIEW is just my language of choice -- the users don't care what language was used.

The second, and what I spend most of my time doing, is using Labview as a general programming language for developing tools for radar data processing. As in Pete's case, the consumers of the processed data, whether it be me or my coworkers, care much more about the data itself than what language I used to develop the tools. I just happen to prefer Labview because I'd much rather spend my time working on the processing algorithms than debugging parentheses and semicolons.

Gary

-

Here in my company, our Labview programmers are a physicist (me), a computer engineer, an EE, and a nuclear physicist.

I'm just curious - is this variety of backgrounds typical of Labview users? Are most engineers of some flavor or another?

Just wondering...

Gary

-

...as a physicist...

Speaking of which, what's the background of most people in the forum? I am also a physicist (at least that's what the diploma and business card say, although it's often easier to tell people I do software development).

Are most LAVA users engineers of some variety or another? EE's?

Gary

-

When was the last time you heard someone say "sy-mul-tain-e-us" (simultaneous)?

The "mul" has become "mo".

That's how I pronounce it. I guess I'm living in the 1940s (or 1850s)

-

How can I let it execute every fifth iteration?

There is a function that does integer division and returns an integer quotient and a remainder. It can be found with all of the other arithmetic operations and has an R and IQ on it. Use this to divide the loop iteration value (the little i in the lower left corner of the loop) by 5. Every 5th iteration, the remainder will equal zero. Use a case structure so that the part of the code inside the case structure only executes when the remainder is equal to zero.
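
The same logic in text form, as a small Python sketch: `divmod` plays the role of the Quotient & Remainder function, and the function name is made up for illustration.

```python
def iterations_that_run(total, every=5):
    """Return the loop indices on which the guarded code would execute."""
    ran = []
    for i in range(total):  # i is zero-based, like the loop's iteration terminal
        quotient, remainder = divmod(i, every)
        if remainder == 0:  # the case structure's "execute" case
            ran.append(i)
    return ran


print(iterations_that_run(12))  # -> [0, 5, 10]
```

Because the iteration count starts at zero, the code also runs on the very first iteration; compare against a different remainder if that is not wanted.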

Rotating string indicators? (in User Interface)

Sorry, I meant the Cursor Legend, where you can manipulate graph cursors.

EDIT: It's called a Cursor Palette in the property nodes.