rharmon@sandia.gov

Members | Posts: 91 | Days Won: 1

Everything posted by rharmon@sandia.gov

-

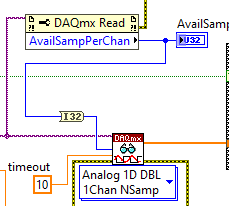

I've returned to continuous samples. I set my rate to 100000 and samples per channel to 1000, and on my DAQmx Read.vi I've hard-wired Number of Samples Per Channel = 10000 (1/10th of my rate). When I interject my own 90 usec pulse, I catch every pulse. But I'm worried: the only difference from my old setup, which could run for months before getting a buffer overflow error, is the hard-wired Number of Samples Per Channel = 10000. In the past I used the DAQmx Read Property Node to check Available Samples Per Channel and read that many samples instead of a hard-wired value. Does my current setup sound appropriate to catch the random 90 usec pulses?

Rate = 100000
Samples per Channel = 1000
Number of Samples Per Channel hard-wired into DAQmx Read.vi = 10000 (1/10th of my rate)

Thanks,
Bob
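The numbers in this post can be sanity-checked with a little arithmetic. Below is a Python sketch (plain arithmetic, no DAQmx calls): at 100000 S/s a 90 usec pulse spans about 9 samples, and a loop that always reads a fixed 10000 samples must finish everything else it does in under 0.1 s per iteration on average, or the backlog grows until the buffer overflows. The 100000-sample buffer size is an assumption: DAQmx may allocate a larger default buffer than the requested samples per channel at this rate, so the real buffer size of the task should be checked. `samples_per_pulse` and `seconds_until_overflow` are hypothetical helper names.

```python
import math


def samples_per_pulse(rate_hz, pulse_width_s):
    """Number of samples that land inside one pulse of the given width."""
    return rate_hz * pulse_width_s


def seconds_until_overflow(rate_hz, read_size, buffer_size, loop_time_s):
    """Rough time before a fixed-size read loop overflows the buffer.

    loop_time_s is the time one iteration spends outside the read call
    (processing, queueing, UI updates). If it is no longer than the time the
    hardware needs to produce read_size samples, the read just waits for data
    and the backlog never builds up.
    """
    read_period_s = read_size / rate_hz
    if loop_time_s <= read_period_s:
        return math.inf
    consumed_per_s = read_size / loop_time_s  # samples pulled out of the buffer per second
    growth_per_s = rate_hz - consumed_per_s   # net backlog growth, samples per second
    return buffer_size / growth_per_s         # starting from an empty buffer


if __name__ == "__main__":
    print(samples_per_pulse(100_000, 90e-6))  # roughly 9 samples per 90 us pulse
    for loop_time_s in (0.05, 0.10, 0.125):
        # buffer_size=100_000 is an assumption; check the real buffer size of your task
        print(loop_time_s, seconds_until_overflow(100_000, 10_000, 100_000, loop_time_s))
```

With a read of Available Samples Per Channel, the read size grows with the backlog, so a temporary slowdown is drained on the next iteration instead of accumulating; with a fixed read size, only the margin between the loop time and 0.1 s drains it.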

-

Using Continuous Samples, I had problems with buffer overflow errors (-200279).

-

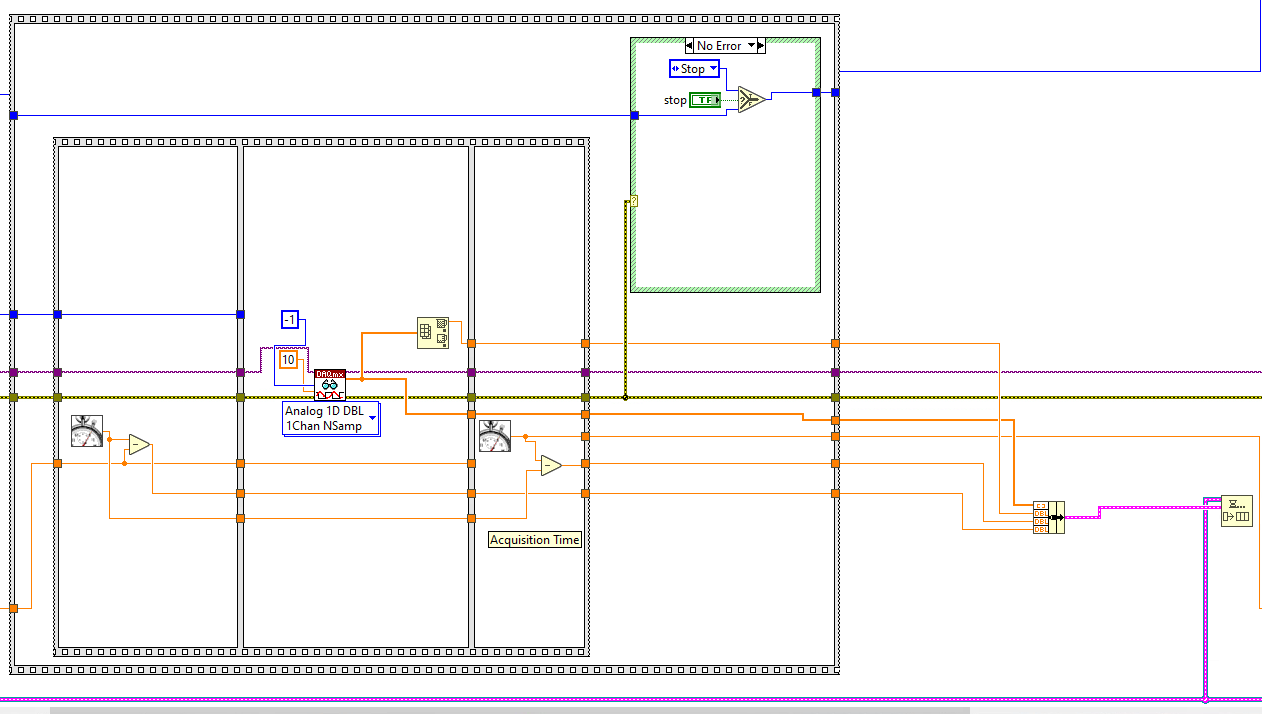

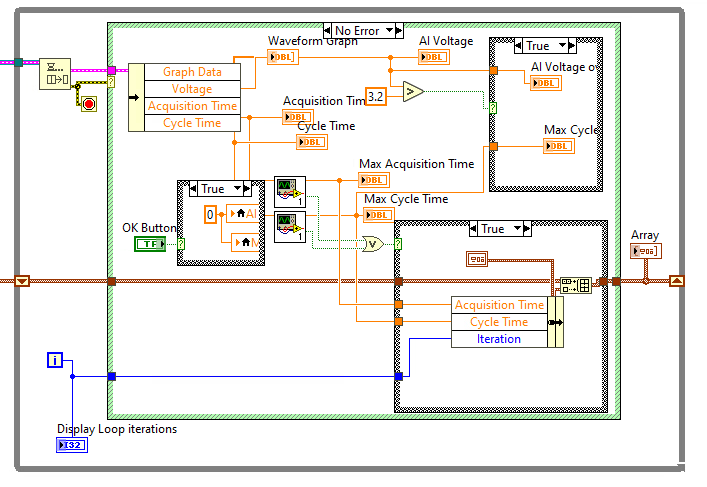

I'm trying to catch/count a random 90 usec pulse. I'll admit I'm just using brute force... I'm acquiring Finite Samples, 100000 samples per channel (just one channel), at a rate of 200000 on a PXIe-6341. When I'm confident this test is working, it will run for a year. Interjecting my own 90 usec pulse, I catch the pulse 100 times out of 100 attempts. That part I like...

I define Acquisition Time as the time it takes to acquire my huge acquisition, and Cycle Time as the time it takes to queue my acquisition and circle back for the next acquisition. There are other things happening on this computer, but this VI does nothing except this acquisition. Acquisition Time is .502 seconds and Cycle Time is .0000207 seconds, so if my pulse is 90 usec and my cycle time is 20 usec, I should always catch the pulse. I think.

Now for the part that concerns me... I've run this test for days, tracking the Max Cycle Time and Max Acquisition Time: every time a value is larger than the previous largest value, I add it to an array (I only have 28 elements in this array over a several-day period). My Max Acquisition Time is .5139955, but my Max Cycle Time is .0002743, considerably larger than my 90 usec pulse. I've moved almost everything out of the acquisition loop, and I just don't know what to try next. Is there a way to prioritize this? Any thoughts?

Thanks in advance...
Bob
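With Finite Samples, nothing is sampled between the end of one acquisition and the start of the next (what this post calls Cycle Time). A rough model, as a Python sketch: if pulses arrive at uniformly random times, a 90 usec pulse is missed only when it fits entirely inside that gap, so a gap shorter than the pulse can never hide it. The uniform-arrival assumption and the helper name `miss_fraction` are illustrative; sample-clock granularity (5 usec at 200 kS/s) is ignored.

```python
def miss_fraction(acq_time_s, gap_s, pulse_width_s):
    """Fraction of randomly timed pulses that fall entirely inside the dead time.

    A pulse is missed only if it starts and ends within the gap between two
    finite acquisitions, so the window of "bad" start times is gap - width.
    Sample-clock granularity is ignored.
    """
    miss_window_s = max(0.0, gap_s - pulse_width_s)
    return miss_window_s / (acq_time_s + gap_s)


if __name__ == "__main__":
    typical = miss_fraction(0.502, 20.7e-6, 90e-6)   # gap shorter than the pulse
    worst = miss_fraction(0.502, 274.3e-6, 90e-6)    # worst recorded gap
    print(f"typical gap: {typical:.2e}, worst gap: {worst:.2e}")
```

If every cycle had the worst recorded gap of 274.3 usec, roughly 0.037% of pulses could slip through. Since that value is the largest of only 28 new peaks over several days, the real rate should be far lower, but not zero. A continuous acquisition keeps sampling between reads, which removes the gap entirely.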

-

I'm missing something here

rharmon@sandia.gov replied to rharmon@sandia.gov's topic in LabVIEW General

ooth, thank you so much... That was the issue... I should have guessed... Right!!!! Really appreciate your help... Bob

-

I'm trying to modify this code I found online (Editing Individual Cells in an Excel File.vi), but whenever I right-click on a property node I get "No Properties" or "No Methods". I think it's because the ActiveX for Excel isn't loaded or enabled, but I'm not sure. I tried to select the ActiveX class; what comes up is Version 1.3, but I don't have a clue what to change it to... I tried ExcelTDM 1.0 Type Library Version 1.0, but that didn't work. Any ideas or help would be greatly appreciated... Thanks

Editing Individual Cells in an Excel File.vi

-

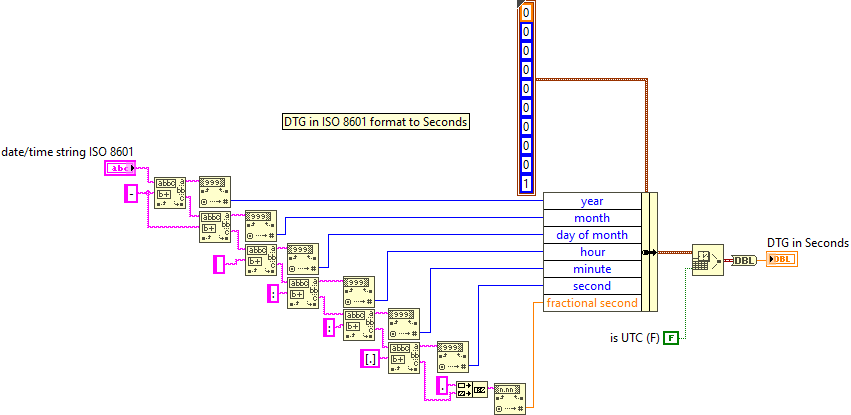

Thanks for your great explanation. As I look into modifying/correcting my issue, changing to UTC time would be very involved and would probably take more time than I have available. I'm leaning toward just changing DST to -1 and moving on. Just so I'm sure I understand: by changing DST to -1, the operating system will make the corrections for Daylight Savings... When we leap forward, my data will appear to be missing one hour. The data will still be taken, but the timestamp will jump ahead, making it appear I lost one hour of data. And when we fall back, my data will appear to cover the same hour twice. The data will still be correct; it will just appear I have taken two sets of data during that one hour... Does that make any sense at all???? My head is spinning. Thank you all again...
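That reading can be checked with a small Python model (not LabVIEW code): samples are taken every 30 minutes without interruption, and only their local wall-clock labels change. The zone is hypothetical, modeled on US Mountain Time with the 2024 transition instants hard-coded; `to_local` and `local_labels` are illustrative names. At spring forward the labels skip from 01:30 to 03:00; at fall back 01:00 and 01:30 each appear twice, even though no data is lost or duplicated.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical zone modeled on US Mountain Time: UTC-7 standard, UTC-6 daylight,
# with the 2024 transition instants hard-coded (in UTC) for illustration.
DST_START_UTC = datetime(2024, 3, 10, 9, 0, tzinfo=timezone.utc)  # 02:00 MST -> 03:00 MDT
DST_END_UTC = datetime(2024, 11, 3, 8, 0, tzinfo=timezone.utc)    # 02:00 MDT -> 01:00 MST


def to_local(utc_time):
    """Label a UTC instant with the local wall-clock time of the hypothetical zone."""
    in_dst = DST_START_UTC <= utc_time < DST_END_UTC
    offset = timedelta(hours=-6 if in_dst else -7)
    return (utc_time + offset).replace(tzinfo=None)


def local_labels(start_utc, count, step=timedelta(minutes=30)):
    """Local labels for samples taken every `step`, without any gaps in the data."""
    return [to_local(start_utc + i * step).strftime("%H:%M") for i in range(count)]


if __name__ == "__main__":
    # ['01:00', '01:30', '03:00', '03:30']
    print(local_labels(datetime(2024, 3, 10, 8, 0, tzinfo=timezone.utc), 4))
    # ['01:00', '01:30', '01:00', '01:30']
    print(local_labels(datetime(2024, 11, 3, 7, 0, tzinfo=timezone.utc), 4))
```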

-

Wow, I think I'm happy Daylight Savings hasn't just bitten me... Sorry guys, but I'm always happier in a full boat, even if it's taking on water!!! I think the overall consensus is to use UTC within the software and convert to a time-zone-corrected display for the user. I'm guessing UTC just keeps marching on and never changes for Daylight Savings Time, but my conversion for the user would need to change. I'm guessing that to do the conversion I would use the DST value (0 for standard time, 1 for Daylight Savings Time) to determine how I'm converting. I'm hoping this DST value changes right at the Daylight Savings Time transition. I think all this makes sense.

I'm finding it difficult to wrap my hard head around Dr. Powell's comment: "I would go with DST=-1. There will be one hour per year (Fall Back) where LabVIEW won't be able to know what the UTC time actually is (as there are two possibilities), but otherwise it would work. Note, though, that i never use that function that you are using." How are there two possibilities for the actual UTC time? I can't have one hour a year when the time isn't correct; with my luck, that one hour out of 8,760 would be the exact time I truly need. Dr. Powell says he never uses the Date/Time to Seconds function... What do you use? What makes your method stronger?

I'm sorry, I'm just crazy busy right now and I'm trying to get the answers I need to correct my issue, so when I get time I won't be asking you guys questions... That said, I haven't taken the time to look at your libraries yet. I will soon, I promise. Any extra thoughts, concerns, or knowledge would be greatly appreciated.

Thanks,
Bob
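The "two possibilities" come from the fall-back hour: the same local wall-clock reading happens twice, once on daylight time and once on standard time, so a local time alone cannot say which UTC instant it was. Converting UTC to local never has this problem. A Python sketch using a hypothetical zone (UTC-7 standard, UTC-6 daylight, US 2024 transition instants hard-coded), with illustrative function names; it models the ambiguity only and is not how LabVIEW resolves it.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical zone: UTC-7 standard, UTC-6 daylight, US 2024 transition instants.
DST_START_UTC = datetime(2024, 3, 10, 9, 0, tzinfo=timezone.utc)
DST_END_UTC = datetime(2024, 11, 3, 8, 0, tzinfo=timezone.utc)


def utc_to_local(utc_time):
    """UTC -> local is always unambiguous: exactly one offset applies to each instant."""
    in_dst = DST_START_UTC <= utc_time < DST_END_UTC
    return (utc_time + timedelta(hours=-6 if in_dst else -7)).replace(tzinfo=None)


def local_to_utc_candidates(wall_clock):
    """Local -> UTC: try both offsets and keep the ones that round-trip.

    Returns two instants during the repeated fall-back hour, none during the
    skipped spring-forward hour, and exactly one the rest of the year.
    """
    candidates = []
    for hours in (-6, -7):
        utc_time = (wall_clock - timedelta(hours=hours)).replace(tzinfo=timezone.utc)
        if utc_to_local(utc_time) == wall_clock:
            candidates.append(utc_time)
    return candidates


if __name__ == "__main__":
    for wall in (datetime(2024, 11, 3, 1, 30),   # repeated hour: two answers
                 datetime(2024, 3, 10, 2, 30),   # skipped hour: no answer
                 datetime(2024, 7, 1, 12, 0)):   # ordinary time: one answer
        print(wall, "->", local_to_utc_candidates(wall))
```

Storing UTC and converting only for display avoids this: the stored data is never ambiguous, only the labels shown to the user are.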

-

I just ran into a problem with my code... and I think I found the issue, I just don't understand the WHY. After the time change this last weekend, my elapsed time has been off by an hour: it added an hour to the actual elapsed time. My software grabs the time in seconds when something starts and uses Elapsed Time to calculate how long it's been running. This worked just fine until Daylight Savings Time hit me. My guess is that with DST set to 1 in the cluster constant, my elapsed time calculations were correct for the last several months because we actually were in Daylight Savings Time (1), but when Daylight Savings Time ended this last weekend, DST should have been 0.

The help file for the Date/Time to Seconds function states the following:

"DST indicates whether the time is standard (0) or daylight savings time (1). You also can set DST to -1 to have the VI determine the correct time automatically each time you run the VI. If is UTC is TRUE, the function ignores the DST setting and uses Universal Time."

This statement tells me I should have set DST to -1 in the cluster constant. My work computer won't allow me to change its date, so I can't think of an easy way to test this. I'm hoping your years of experience can tell me if I'm right. I know this works for now, but I don't want the problem to return when we spring forward next spring... Are there pitfalls to setting DST to -1 and just letting the OS take care of Daylight Savings Time?

Thanks in advance for your help... You guys always have the right answers...
Bob
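A Python sketch (not LabVIEW's Date/Time to Seconds) of how a fixed DST flag produces a one-hour error once the clocks change, while subtracting two absolute, UTC-based timestamps does not. The zone (UTC-7 standard, UTC-6 daylight), the dates, and `wall_clock_to_utc` are hypothetical. The sign of the error depends on which timestamp gets converted with the wrong flag, so it need not match the direction seen in this post.

```python
from datetime import datetime, timedelta, timezone

STANDARD_OFFSET = timedelta(hours=-7)  # hypothetical zone: UTC-7 standard, UTC-6 daylight


def wall_clock_to_utc(wall_clock, dst_flag):
    """Interpret a naive local time using an explicit DST flag (0 = standard, 1 = daylight)."""
    offset = STANDARD_OFFSET + timedelta(hours=dst_flag)
    return (wall_clock - offset).replace(tzinfo=timezone.utc)


if __name__ == "__main__":
    # Start during daylight time, "now" after the fall-back transition (hypothetical dates).
    start_utc = datetime(2024, 11, 1, 18, 0, tzinfo=timezone.utc)  # 12:00 local, daylight time
    now_utc = datetime(2024, 11, 5, 19, 0, tzinfo=timezone.utc)    # 12:00 local, standard time
    true_elapsed = now_utc - start_utc

    # Rebuild both times from their wall-clock readings with DST hard-coded to 1.
    flagged_elapsed = (wall_clock_to_utc(datetime(2024, 11, 5, 12, 0), 1)
                       - wall_clock_to_utc(datetime(2024, 11, 1, 12, 0), 1))

    print("elapsed from UTC seconds:   ", true_elapsed)
    print("elapsed with DST fixed at 1:", flagged_elapsed)
    print("error:", true_elapsed - flagged_elapsed)
```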

-

OK, I found my stupid... right after I posted. I had set up my event structure wrong... too fast on the mouse... When the values were wrong, I was getting the previous row/column... Not sure why I got -2 -2... Not going to worry about that now... Thanks for looking at my post and laughing... I'm sorta laughing too...

-

I can't seem to find a consistent way to retrieve the row and column from a mouse click inside a table cell. The "Edit Cell" and "Selection Start" property nodes seem to work sometimes... sometimes I get -2 and -2, and sometimes I even get the wrong row/column... It just doesn't make any sense to me... I'm probably doing something really stupid, but I'll be darned if I can figure out what it is???? Thanks

-

Does anyone know of a way to determine from a LabVIEW program if a PXIe-1085 is powered up? Maybe also if it can communicate via PXIe-8301?

-

Thought I'd give a quick update on where I'm at... But first, a HUGE thank you to everyone here... I can't express what a fantastic resource you guys are.

I decided to try TortoiseSVN, mostly because everyone seemed to say it was the easiest to use, and as a beginner in SCC I thought that was very important. So far so good... I created a repository on my computer and (terminology is going to bite me here) imported my project. It all appears to be fine so far... I know, small steps... This week has been crazy busy. I'm hoping to actually change some VIs today and see how that goes.

Next question... Where to keep the repository? I'm just far enough in that if I needed to start over with a new repository it wouldn't be so bad. I actually tried to start the repository on a TeamForge site but got an error saying something about TortoiseSVN not being supported, something to do with DAV, whatever that is. I plan to look into this a bit more today.

But back to the question... I've tried using a LabVIEW project that was on a network drive at work, and working from home was painful. LabVIEW would lock up looking for the network drive, sometimes for up to 20 seconds... Drove me crazy... This is going to surprise everyone, but I'm horrible at backing up my laptop, so keeping the repository on my laptop worries me a lot. If I decide to keep it on my laptop, I can set it up to sync to a networked drive at work. I don't think this would upset LabVIEW too much, but that is yet to be determined. Other than the obvious (I need to back up my laptop), are there any other concerns about keeping the repository on my laptop?

Thanks again to everyone... You are all amazing...

26 replies. Tagged with: source code control, subversion (and 3 more)

-

Eric, I think you are exactly right... My idea of a branch was this: I have a software package, and probably 90% of that code will be used in another project. I was thinking I would just branch off the second project and make the changes needed to make it work. It was never my intention to reunite the branches. Thank you for catching my mistake... You have probably saved me from a huge future headache...

-

Great information... Reading through these posts, I'm leaning toward Subversion or Mercurial... probably Mercurial, because the conversation that led me toward source control touched on the need to branch my software for another project. Next step... download the software on another computer (so I don't endanger my current projects) and give it a try. I can't express how helpful these posts have been; it would have taken me days to try all the different source control options available... I just read Crossrulz's reply, and it states "just don't branch and you will never have to worry about merging." Cool... I guess I'll just have to cross that bridge when the time comes. Thank you to everyone who replied... Now I'm going to go jump in the source control pool... It's cold outside; I hope the water is warm...

-

Yes... Very frightening... But toward the end it gets interesting... Maybe git is not so bad after all... Pretty sure it's not for me, though... If one of my LabVIEW heroes is having trouble using it, I'd better run away fast!!!

-

Great information packed post... Thank you

-

OK, I'm about to show my ignorance of source control software, but I've noticed a bunch of comments complaining about issues with merging files. I'm not quite sure what merging a file means. Could you explain a little for me? Thanks
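Merging means combining two sets of changes made independently to copies of the same file, using the version they both started from (the common ancestor) as the reference. Lines changed on only one side are taken automatically; lines changed differently on both sides are a conflict a person has to resolve. A minimal line-by-line Python illustration, simplified so all three versions have the same number of lines (real tools such as Subversion and Mercurial also handle inserted and deleted lines); `three_way_merge` is a hypothetical name. LabVIEW VIs are binary files, so a text merge like this does not apply to them directly, which is likely part of why merging comes up so often in LabVIEW source control discussions.

```python
def three_way_merge(base, mine, theirs):
    """Merge two edited copies of `base` line by line.

    Simplification: all three versions must have the same number of lines.
    Returns (merged_lines, conflict_line_numbers).
    """
    if not (len(base) == len(mine) == len(theirs)):
        raise ValueError("this sketch only handles same-length versions")
    merged, conflicts = [], []
    for i, (b, m, t) in enumerate(zip(base, mine, theirs)):
        if m == t:        # both sides agree (changed identically, or untouched)
            merged.append(m)
        elif m == b:      # only "theirs" changed this line
            merged.append(t)
        elif t == b:      # only "mine" changed this line
            merged.append(m)
        else:             # both changed it differently: a conflict for a person to resolve
            merged.append(f"<<<< {m} | {t} >>>>")
            conflicts.append(i + 1)
    return merged, conflicts


if __name__ == "__main__":
    base = ["rate = 1000", "samples = 100", "units = V"]
    mine = ["rate = 2000", "samples = 100", "units = V"]
    theirs = ["rate = 1000", "samples = 500", "units = mV"]
    # (['rate = 2000', 'samples = 500', 'units = mV'], [])
    print(three_way_merge(base, mine, theirs))
```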

-

So I spent much of the afternoon looking over postings here on source control software and LabVIEW. I must say I came away discouraged. I've been programming LabVIEW for over 20 years in a single-technician lab and never really needed any stinking source control. Well, now that's not the case. I thought I'd just read some posts, determine what everyone else likes and be done!!!!! That didn't work out for me either... It seems nobody really likes source control after all, or at least there are issues with just about every option.

So here is my situation. I'm still the only LabVIEW developer on a project, but the project requires a more structured source control environment. So I'm looking for source control that works flawlessly with LabVIEW and is easy to use. I'm using the latest versions of LabVIEW. Since most of the posts I read today are from years back, I'm hoping things have really improved in the last couple of years and you guys are happy as a lark with your source control environment. If you could take a minute and tell me:

1. What source control software are you using?
2. Do you love it or hate it?
3. Are you forced to use it because it's the method used in your company, when you would rather use something else?
4. What are the pros and cons of the source control you are using?
5. Just how often does your source control software screw up and cause you major pain?

Thank you in advance.
Bob Harmon

-

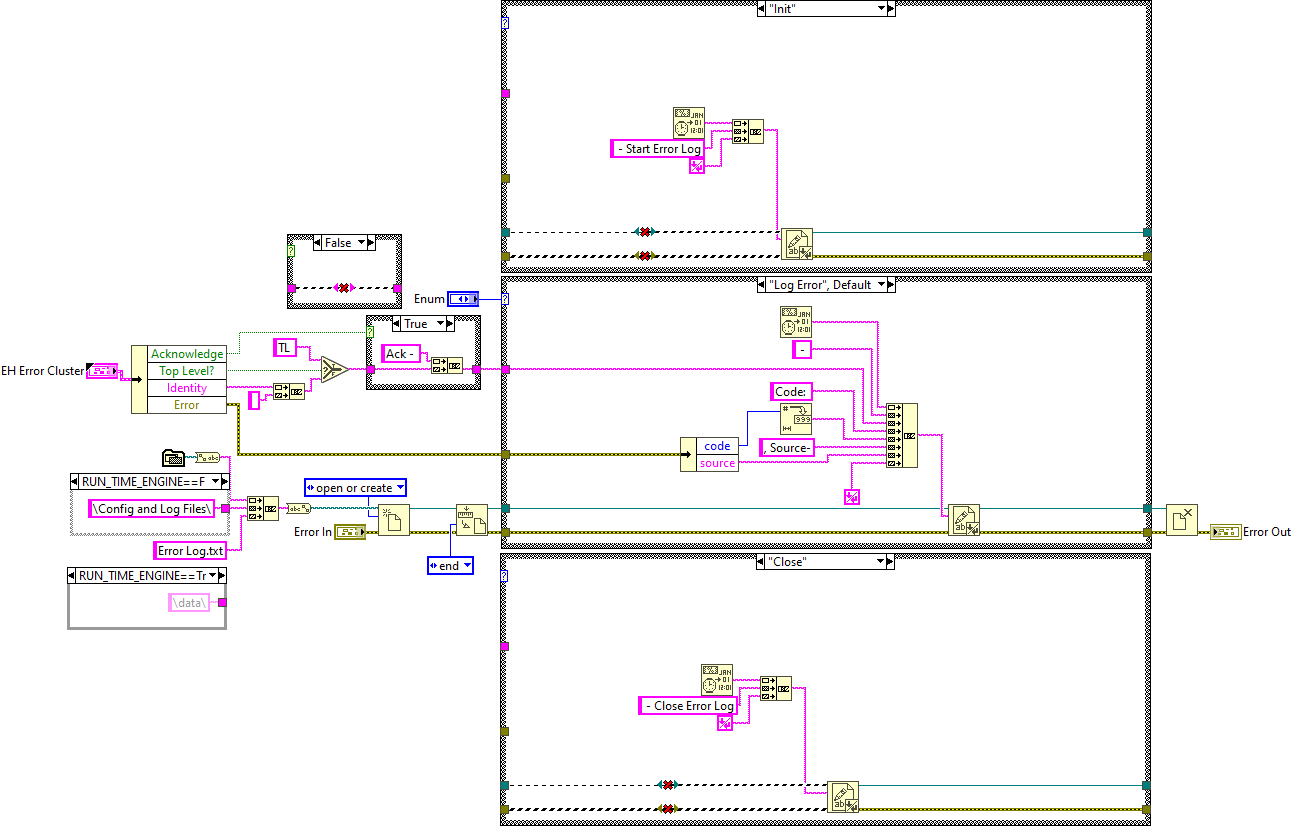

I've been using this VI for months to write error information to a log at this file path:
C:\LabVIEW Working Folder\Tester Software\Config and Log Files\Error Log.txt
and, as an executable, at this file path:
C:\LabVIEW Working Folder\Tester Software\data\Error Log.txt
Now, for some unknown reason, randomly but usually on close, I get a file dialog box asking which file to write... Any idea what I'm missing... what stupid mistake did I make? It just doesn't make sense...

-

Log File Structure

rharmon@sandia.gov replied to rharmon@sandia.gov's topic in Application Design & Architecture

You guys always have the best information/ideas... Thank you all... Since I really like new entries at the top of the log file, and my major worry is that the file gets too big over time and makes the log write take too long, I really like dhakkan's approach of checking the file size periodically, flushing the file, and saving the data to numbered files.
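A minimal Python sketch of the size-capped, newest-first log described here: before each write, if the log has grown past a limit it is renamed to a numbered archive and a fresh file is started, so the read-then-rewrite needed to prepend only ever touches a bounded amount of data. The numbering scheme (`Error Log.1.txt`, `Error Log.2.txt`, ...), the size limit, and the function names are assumptions for illustration, not dhakkan's actual implementation.

```python
import os
import tempfile


def rotate_if_large(path, max_bytes, keep=5):
    """If `path` is at least max_bytes, shift archives up one number and start fresh.

    Error Log.txt -> Error Log.1.txt, Error Log.1.txt -> Error Log.2.txt, ...
    The archive numbered `keep` is deleted to make room.
    """
    if not os.path.exists(path) or os.path.getsize(path) < max_bytes:
        return False
    stem, ext = os.path.splitext(path)
    oldest = f"{stem}.{keep}{ext}"
    if os.path.exists(oldest):
        os.remove(oldest)
    for n in range(keep - 1, 0, -1):  # shift from the highest number down so nothing is overwritten
        src = f"{stem}.{n}{ext}"
        if os.path.exists(src):
            os.replace(src, f"{stem}.{n + 1}{ext}")
    os.replace(path, f"{stem}.1{ext}")
    return True


def prepend_entry(path, line, max_bytes=1_000_000):
    """Write `line` at the top of the log; the rewrite cost is capped by max_bytes."""
    rotate_if_large(path, max_bytes)
    old = ""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            old = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(line + "\n" + old)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        log = os.path.join(folder, "Error Log.txt")
        for entry in ("first", "second", "third"):
            prepend_entry(log, entry, max_bytes=10)  # tiny limit to force a rotation
        print(sorted(os.listdir(folder)))  # ['Error Log.1.txt', 'Error Log.txt']
```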

I have an error log and a couple of other logs associated with a program I've written. My question is this: when you read a log file, do you expect the newest information at the beginning of the file or at the end? I think I prefer it at the beginning... That said, I worry about large log files. The only way I've figured out to do this is to read the entire file, add my new entry at the beginning, and then rewrite the entire file. That seems like a lot of overhead just for a style preference. Is there a better way to write new entries at the beginning? Or does everyone prefer new log entries at the end of the file? Thanks for your comments and ideas...
Bob Harmon
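A common alternative, sketched in Python: append new entries to the end of the file (cheap, since nothing already written is read or rewritten) and reverse the order only when the log is displayed. The display step still reads through the file, but it keeps only the last `max_entries` lines in memory and never rewrites anything. The function names are hypothetical.

```python
import os
import tempfile
from collections import deque


def append_entry(path, line):
    """Append at the end: the existing contents are never read or rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")


def newest_first(path, max_entries=100):
    """Return up to max_entries of the most recent entries, newest first."""
    with open(path, encoding="utf-8") as f:
        tail = deque(f, maxlen=max_entries)  # streams the file, keeping only the last lines
    return [entry.rstrip("\n") for entry in reversed(tail)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        log = os.path.join(folder, "Error Log.txt")
        for entry in ("first", "second", "third"):
            append_entry(log, entry)
        print(newest_first(log, max_entries=2))  # ['third', 'second']
```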

-

I have an executable that I install on new computers that don't have LabVIEW installed. This executable uses a PXIe chassis and several different cards. I refer to these cards in my software as "PXI1Slot2_AIO_UUT1" and "PXI1Slot11_SMU_UUT1". I realize that, the way things are currently set up, I need to configure these names in MAX. But my question is: how do you guys deal with this on a blank new computer? I would rather not install LabVIEW on these machines. Can I just install MAX? Are there other options? Thanks for your input...

-

I have a program where I launch a clone that runs in the background and updates the top-level VI. In normal operation the top-level VI tells the clone to stop and everything seems to be fine, but every once in a while the clone stops, or maybe the top level stops and leaves the clone running. Is there some way to monitor running clones, ideally with the ability to stop a running clone? This is how I launch the clones. Thanks for your help...
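As a language-neutral illustration of one heartbeat/stop-signal pattern, here is a Python sketch in which a background worker publishes a heartbeat and exits when asked, so a supervisor can tell a stalled or orphaned worker from a healthy one. Python threads stand in for asynchronously launched clones; the class and method names are hypothetical and are not a LabVIEW API.

```python
import threading
import time


class Worker:
    """A background loop that reports a heartbeat and stops on request."""

    def __init__(self, period_s=0.05):
        self.period_s = period_s
        self._stop = threading.Event()
        self._last_beat = time.monotonic()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        # wait() returns True as soon as stop is requested, otherwise times out after period_s
        while not self._stop.wait(self.period_s):
            self._last_beat = time.monotonic()  # "still alive"
            # ... the real background work would go here ...

    def start(self):
        self._thread.start()

    def stop(self, timeout_s=1.0):
        """Ask the worker to exit; returns True if it actually did."""
        self._stop.set()
        self._thread.join(timeout_s)
        return not self._thread.is_alive()

    def healthy(self, max_age_s=0.5):
        """Running, and has reported a heartbeat recently."""
        return self._thread.is_alive() and time.monotonic() - self._last_beat < max_age_s


if __name__ == "__main__":
    worker = Worker(period_s=0.01)
    worker.start()
    time.sleep(0.1)
    print("healthy:", worker.healthy())
    print("stopped cleanly:", worker.stop())
```

The same idea can run in the other direction to cover the case where the top level stops first: the clone watches a heartbeat from the top-level VI and shuts itself down when that heartbeat goes stale.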

-

USB to RS-232 Converters

rharmon@sandia.gov replied to rharmon@sandia.gov's topic in LabVIEW General

All,

Once again I want to thank everyone who chimed in to help me figure out this communications issue. I have attached a small project that communicates with the Tripp-Lite SmartOnline UPS. If Tripp-Lite tells you to use communications protocol 4001, then this software should work. To say it again, Tripp-Lite was not helpful. The protocol document they sent me in no way works. Not even close.

What was helpful:
1. Input from you guys who chimed in here. Huge help... Thanks.
2. https://github.com/networkupstools/nut/blob/master/drivers/tripplitesu.c Although I don't do C, reading the information here was very helpful.
3. The Tripp-Lite Programmable MODBUS Card Owner's Manual. A colleague at work found this document, and although it doesn't translate perfectly, it was helpful.
4. My bullheadedness and refusal to give up when I knew it should be possible.

Obviously, without input from Tripp-Lite I can give no guarantees on the accuracy of this code. I feel it's right. If anyone wants to try it and let me know how accurate you think it is... that would be helpful. Even better, if you find errors, please let me know.

Thanks again,
Bob Harmon

Tripp-Lite Project.zip