Posts posted by bbean

Posts: 264

Days Won: 10

-

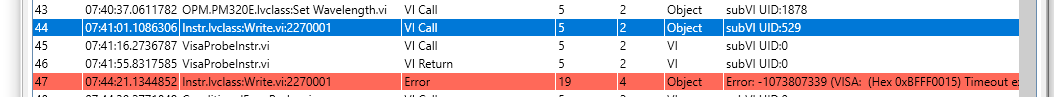

I have an issue where reading the VISA Instr Property "Intf Type" of a USB Instrument hangs for about 40 secs:

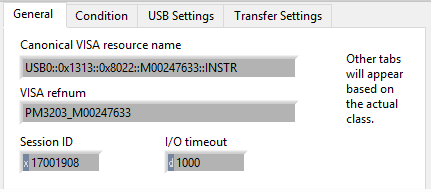

followed by an asynchronous VISA Write hang for 2+ minutes! The timeout on the VISA instr session is set to 1000ms. Here are the other details of the session:

and here's a snip of the VI:

Any idea why these long timeouts are occurring? or why the 1000ms timeout is being violated for both the Instr property call (no idea what goes on under the hood here) or the VISA write.

-

6 minutes ago, drjdpowell said:

By default, subVIs are set to the "Same as caller" execution system, but they can be set to a specific system instead. I suspect it might be just the subVI that does the TestStand call that needs to be in a different execution system, not the calling Actor.vi itself. So try changing just the subVI.

If that doesn't work, you may have to separate out the TestStand API calls. Are you using your TestStand actor as a GUI or user interface? If so, you may have to create another actor that takes over the TestStand API calls causing the logjam. That new actor should not have any property/invoke nodes, which would force its VI into the UI thread.

-

26 minutes ago, drjdpowell said:

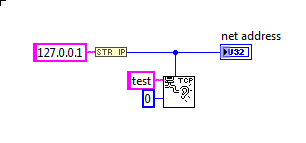

What number do I feed to "net address" to make it local host only? I wasn't aware of such a feature.

I would imagine the string 127.0.0.1 (or the u32 net address 2130706433).
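For reference, the u32 form is just the four octets of the dotted quad packed most-significant first, so 127.0.0.1 becomes 127 × 2^24 + 1 = 2130706433. (In LabVIEW itself, the String To IP function should do this conversion for you.) A small Python cross-check; the function names are only illustrative:

```python
import ipaddress


def dotted_to_u32(addr: str) -> int:
    """Pack a dotted-quad IPv4 string into one unsigned 32-bit integer."""
    a, b, c, d = (int(octet) for octet in addr.split("."))
    # First octet ends up in the most significant byte.
    return (a << 24) | (b << 16) | (c << 8) | d


def u32_to_dotted(value: int) -> str:
    """Inverse conversion, delegated to the standard library."""
    return str(ipaddress.IPv4Address(value))


print(dotted_to_u32("127.0.0.1"))  # 2130706433
print(u32_to_dotted(2130706433))   # 127.0.0.1
```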

-

I worked on an EtherCAT issue a few years back and remember that, at the time, the cRIO didn't support Beckhoff array data types, so we had to make individual I/O variables for each item in the array on the Beckhoff side. Were you able to import the XML file into the LabVIEW project OK?

-

Never mind, I found a workaround to my problem. I was getting runtime errors whenever I saved my VI with the ActiveX control, saying the control doesn't support persistence. I fixed it by writing a null variant to a connection property before exiting the VI, and the problem went away.

-

Did you have any luck with solving this issue? I'd like to do the same thing.

-

... we have covered the Client OS licensing part of things so it frees us up to create a VM per "project"

I'm curious how your company worked out (or works out) the OS licensing. I tried to go down this rabbit hole with MS a couple of years ago and ended up giving up because it was so difficult to figure out for a small business.

-

Yeah, I could see that being helpful at some point. What happens when you have more than one primitive/subVI on the diagram in the node? Does it just place another entry at the bottom of the unbundle?

-

If you don't need to do FPGA image processing, I would explore the other Camera Link cards that are not FPGA-based and see if they will work with PharLap.

With regard to the FPGA example, this may be a long shot if you haven't compiled FPGA code before, and I'm not sure it will work at all. I don't have time right now to fully explain, but to summarize:

- Open the PCIe-1477 Getting Started example project

- Save a copy of the project and all VIs to a new location (so you don't overwrite NI's working Windows target version)

- Close the off-the-shelf example project

- Open the copied project

- Create a new RT target in the project (right-click the project in the project tree, select new targets and devices, then select RT Desktop)

- Move the FPGA target from the Windows target to the RT Desktop target

- Move the host VI from the Windows target to the RT target

- Compile the FPGA target VI

- Open the host VI (now under the real-time target) and reconfigure the Open FPGA VI Reference to point to the newly compiled FPGA VI

-

What do you think of this solution?

I guess I would need to know your requirements, but I think that would be a road less traveled. Do you need Base, Medium, Full, or Extended Full? Do you need Power over Camera Link, etc.? Why do you need real-time?

In the future, I would recommend talking with Robert Eastland and purchasing all your vision-related hardware/software from Graftek. He has been extremely helpful to me in the past and knows his stuff. I have no affiliation with the company.

Did you try my suggestion to compile the example FPGA code and move the host example to the real-time target, to see if it's even a possibility?

-

According to the specification:

http://download.ni.com/support/softlib//vision/Vision Acquisition Software/18.5/readme_VAS.html

NI-IMAQ I/O is driver software for controlling reconfigurable input/output (RIO) on image acquisition devices and real-time targets. The following hardware is supported by NI-IMAQ I/O:

.......

- NI PCIe-1473R

- NI PCIe-1473R-LX110

- NI PCIe-1477

The frame grabbers should work under LabVIEW Real-Time. Do you see it that way?

NI (Munich) now states (after the purchase) that the PCIe-1477 frame grabber is not supposed to work under LabVIEW Real-Time. I also had the impression that NI was not really interested in solving the problem; for them, LabVIEW Real-Time is an obsolete product. My question to NI (Munich) about whether the PCIe-1473R frame grabber works under LabVIEW Real-Time has not been answered to this day.

Too bad that nobody else has experience with these frame grabbers under LabVIEW Real-Time.

Have a nice start to the week,

Jim

Unfortunately, the card probably does not work directly in LabVIEW Real-Time. NI's specifications and documentation are often vague, with hidden gotchas. I had a similar problem with an NI serial card years ago, when Real-Time and FPGA first debuted. I wanted to use the serial card directly in LabVIEW Real-Time with VISA, but I ended up having to code a serial FPGA program on the card because, early on, VISA did not recognize it as a serial port.

Is there any way you can try to compile the FPGA example and download it to the card?

C:\Program Files (x86)\National Instruments\LabVIEW 2018\examples\Vision-RIO\PCIe-1477\PCIe-1477 Getting Started\PCIe-1477 Getting Started.lvproj

After you compile and download the FPGA code to the 1477, I think you would have to move "PCIe-1477 Getting Started\Getting Started (Host).vi" from windows target to the Real-time target, open it up and see if it can be run.

-

The FPGA tool is also available. The frame grabber runs on the same PC under Windows 7 (on a different hard disk).

Does anyone have an idea why the frame grabber is not recognized in Real-Time?

Have a nice weekend,

Jim

At least for the 1473R, according to this:

"The NI PCIe-1473R Frame Grabber contains a reconfigurable FPGA in the image path enabling on-board image processing. This means that the full communication between the camera and the frame grabber goes through the FPGA. It is then a major difference compared to the other standard frame grabbers without FPGA.

It means also that the camera will not show up in Measurement & Automation Explorer."

I'm guessing here, but I think you have to create a new FlexRIO FPGA project with the option for the card:

https://forums.ni.com/t5/Machine-Vision/PCIe-1473R-fpga-project/td-p/2123826

Maybe look and see if you can compile an example from here:

https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000kIBdSAM&l=en-US

..\Program Files (x86)\National Instruments\LabVIEW 2018\examples\Vision-RIO\

-

TCP is not free of pain either, though. I've been on networks where the IT network traffic monitors automatically close TCP connections if no data flows across them, EVEN if TCP keep-alive packets flow across the connections. For whatever reason, the packet inspection policies effectively don't count keep-alive packets as legitimate traffic. We ended up having to send NO-OP packets with some dummy data in them every 5 minutes or so if no "real data" was flowing.
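The NO-OP workaround amounts to an application-level heartbeat: track when you last sent anything and, if the link has been idle longer than the interval, send a dummy message that the other end knows to discard. A minimal Python sketch, assuming a hypothetical `NOOP` payload that the receiver is written to ignore (the class and names are illustrative, not from any library). The clock is injectable so the logic can be checked without waiting five minutes:

```python
import socket
import time

HEARTBEAT_INTERVAL_S = 300.0  # roughly 5 minutes, as in the post
NOOP_PAYLOAD = b"NOOP\n"      # hypothetical dummy message; the receiver must discard it


class HeartbeatSender:
    """Wraps a connected TCP socket and injects NO-OPs when the link goes idle."""

    def __init__(self, sock: socket.socket, interval_s: float = HEARTBEAT_INTERVAL_S,
                 clock=time.monotonic):
        self.sock = sock
        self.interval_s = interval_s
        self.clock = clock
        self.last_send = clock()

    def send(self, data: bytes) -> None:
        """Send real data; any send resets the idle timer."""
        self.sock.sendall(data)
        self.last_send = self.clock()

    def poll(self) -> bool:
        """Call periodically from the main loop. Returns True if a NO-OP was sent."""
        if self.clock() - self.last_send >= self.interval_s:
            self.send(NOOP_PAYLOAD)
            return True
        return False
```

Because real traffic also resets the timer, NO-OPs only appear on an otherwise silent connection, which is exactly the case the packet inspectors were killing.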

-

So not sure how to do RUDP.

You would have to create the packet header(s) defined by RUDP and send them with each data packet from LabVIEW on the PharLap side, placing them before the data you send. Then, on the LabVIEW host side, you would have to send a response packet with the RUDP header(s) based on whether you received a packet out of sequence (or with an invalid checksum, etc.). You would effectively be creating your own slimmed-down version of TCP at the LabVIEW application layer. Quite a pain unless absolutely necessary.
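To make the "slimmed-down TCP" point concrete, here is a minimal Python sketch of the two halves. The header (u32 sequence number, u16 payload length, CRC-32 of the payload, big-endian) is a made-up layout for illustration, not the one defined in the RUDP draft. The receiver rejects anything out of sequence, duplicated, or corrupt and always reports the sequence number it still expects, which is what the sender would use to decide what to retransmit:

```python
import struct
import zlib

# Hypothetical header layout (NOT the RUDP draft's): seq u32, length u16, crc32 u32.
HEADER = struct.Struct(">IHI")


def make_packet(seq: int, payload: bytes) -> bytes:
    """Sender side: prepend the header to the data (seq must fit in 32 bits)."""
    return HEADER.pack(seq, len(payload), zlib.crc32(payload)) + payload


class Receiver:
    """Host side: accepts packets strictly in order and builds the 'ack' value."""

    def __init__(self) -> None:
        self.expected_seq = 0

    def handle(self, packet: bytes):
        """Return (next_expected_seq, payload or None) for the response packet."""
        if len(packet) < HEADER.size:
            return self.expected_seq, None          # truncated header
        seq, length, crc = HEADER.unpack_from(packet)
        payload = packet[HEADER.size:HEADER.size + length]
        if len(payload) != length or zlib.crc32(payload) != crc:
            return self.expected_seq, None          # corrupt: ask again
        if seq != self.expected_seq:
            return self.expected_seq, None          # out of order or duplicate
        self.expected_seq += 1
        return self.expected_seq, payload
```

Even this toy version omits timers, windowing, and connection setup, which is most of why it is usually easier to stay with TCP.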

-

You could try installing pyvisa-py (a partial replacement for the NI-VISA backend) on the Raspberry Pi and see if it can implement remote sessions, e.g. visa://hostname/ASRL1::INSTR. It doesn't look too promising based on this discussion:

https://github.com/pyvisa/pyvisa-py/issues/165

but it seems to indicate that if you know the address and don't rely on the pyvisa-py resource manager, it may work.

-

6 hours ago, Benoit said:

I think the biggest mistake from NI was to not add 20 years experienced user into their development team....... but no real user.

Benoit

This.

I tested NXG for the first time at a feedback session during the CLA Summit, so I was learning NXG on the spot in front of one of the NXG developers. When I got stuck trying to figure something out, the developer would ask how I would do it in legacy LabVIEW; I would tell him, and then he would show me how to do it in NXG. My understanding was that the NXG IDE was designed to make programming more "efficient" by reducing the number of steps. Unfortunately, this sometimes sacrifices many years of muscle memory from doing things in legacy LabVIEW. A bad analogy would be brushing your teeth with the opposite hand because studies have shown that ambidextrous tooth brushers clean teeth slightly better. It may be slightly better in theory, but the pain of learning outweighs the benefits. Some of the things I remember being (annoyingly) different:

- Quick Drop functionality

- Adding a terminal on the block diagram seemed more tedious and defaulted to not showing the control/indicator on the front panel. WTF.

While I'm sure the NXG team has received guidance, direction, development, and feedback from very experienced insiders at NI, I walked away feeling there was no way the experienced LabVIEW users inside NI were developing only with NXG on a daily basis by default. Otherwise, muscle-memory things like Quick Drop would work exactly like they did in legacy LabVIEW. I think what needs to happen is that Darren un-retires from the Fastest LabVIEW Programmer competition and competes next May at NIWeek using NXG.

That said, the NXG developers and team leads were very receptive to my feedback and seemed genuinely open to making changes. Whether that carries through to the end product, we will see. I also saw some new IDE features (new right-click options, for instance) that made me think "that makes sense", and I can see them helping speed up development once I get used to them.

If and when I use NXG, I would like to see a checkbox in the options that says "maintain legacy front panel, block diagram, and keyboard shortcut behavior as much as possible".

-

Thanks for all the feedback. It's why I love this site.

-

On 12/8/2018 at 3:21 PM, Michael Aivaliotis said:

From NIMax you can format a cRIO.

Is there any way to do this without MAX? Or is there a description of what happens when MAX executes the format?

Unfortunately, no Windows boxes are allowed in the previously mentioned "secure" area, so the wipe needs to be done without MAX. Once the cRIO is wiped, it can leave the secure area and all the normal NI stuff (MAX, RAD, Windows) can be used. As someone told me, "It's the security policy; it doesn't have to make sense."

-

5 hours ago, Michael Aivaliotis said:

Creating a cRIO image is a little different than changing the entire OS stack from Windows to Linux.

You can image a cRIO using the Replication and Deployment utility. I use this all the time. I think you would need to get the installation image NI uses to set up that specific Linux cRIO, plus some instructions. NI has the image, and they can choose to give it to you or not. If they can't provide it due to warranty or licensing issues, then they should offer a service where you send it in and they do it, for free or even for a fee. It's not unreasonable to ask for a service fee, since this is not a common request. However, considering the astronomical cost of the hardware, they should do this without question. In the past, when I've requested things from support that are out of the ordinary, they have tended to shrug it off. However, once I get a sales rep involved and explain the customer need and the criticality of the situation, the rep has the power to get support to do anything. NI should be doing this, not you.

I know about RAD, but I think my needs are similar to viSci's, because I actually need to "wipe" a cRIO before removing it from a "secure" area and then re-image it from clean media.

-

On 12/5/2018 at 10:12 AM, viSci said:

Has anyone had success doing this?

I need to image a few cRIOs in the near future. Have you had any luck finding details for restoring the cDAQ image?

-

Do you have ASRL1 open anywhere else, say in MAX or another part of your program?

Is it possible the CONSOLE OUT switch is enabled, which would then use the COM1 port?

https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000P9zxSAC&l=en-US

Edit: After finally finding a picture of the 9045 on the NI website 👾, I just noticed that it doesn't look like it has DIP switches for CONSOLE OUT. So I'm not sure, but maybe you can check in MAX to see if it's enabled.

<rant> It's impossible to find anything on the NI website nowadays. It's gotten so bad that I now wish they would just create one giant plain-text webpage for each product line (cRIO, PXI, etc.) listing every single PDF document for each piece of hardware in alphabetical order. This BS of clicking on something and then having the website perform a search of the website is ridiculous.

</rant>

-

21 hours ago, Aristos Queue said:

BBean: How bad is the limitation? Just annoying or is it actually blocking work? And was the error message sufficient to explain the workaround?

I've looked further at creating the mapping system -- it's a huge amount of effort with fairly high risk. I'm having a hard time convincing myself that the feature is worth implementing compared to other projects I have in the pipeline.

It's just a hassle. I understand the risk/reward decision. I just wanted to point out that Mads isn't a lone wolf.

-

On 8/15/2018 at 9:39 PM, Aristos Queue said:

So, I put the limitation in for private data access and waited to see how many people would object. One so far. :-)

Two

-

Currently exploring using some of these:

https://www.acromag.com/sites/default/files/TT334_996h.pdf

to condition the signal to an AI cDAQ module

-

LabVIEW hang on VISA instr property (in Hardware)

Thanks for the quick response. It's a rather annoying problem.

I think this may have been the problem, but I won't know until I test again on Monday. You are correct that it's a PM320E, and so far its driver has been a pain. The suspect command is the error query that the parent class implements by default, "SYST:ERR?", whereas the PM320E requires ":SYST:ERR?". A nuance (nuisance) that I failed to notice.

PS. I think I may have borked the instrument by upgrading the firmware too. Oh well, that's what you get for trying to get something done before a holiday weekend.
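One way to structure this kind of fix is to make the error-query string a per-class constant that a child class overrides, rather than hard-coding it inside the parent's method (in LabVIEW this would typically be a dynamic-dispatch VI or an overridable accessor). A Python analogue, with a fake transport standing in for the VISA session; the class names are hypothetical and not taken from any vendor driver:

```python
class FakeTransport:
    """Stand-in for a VISA session: records each command and returns a canned reply."""

    def __init__(self, reply: str = '0,"No error"'):
        self.reply = reply
        self.sent = []

    def query(self, command: str) -> str:
        self.sent.append(command)
        return self.reply


class ScpiInstrument:
    # Default error query used by the parent class.
    ERROR_QUERY = "SYST:ERR?"

    def __init__(self, transport):
        self.transport = transport

    def check_error(self):
        """Send this class's error query and parse a '<code>,"<message>"' reply."""
        reply = self.transport.query(self.ERROR_QUERY)
        code, _, message = reply.partition(",")
        return int(code), message.strip().strip('"')


class PM320E(ScpiInstrument):
    # Per the post above, this instrument needs the leading colon.
    ERROR_QUERY = ":SYST:ERR?"


t = FakeTransport()
print(PM320E(t).check_error(), t.sent)  # (0, 'No error') [':SYST:ERR?']
```

The parent's parsing logic stays shared; only the command string differs per instrument.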