Posts posted by bbean

-

-

One other comment after looking at the VIs a bit more. Why do you use different conventions for identifying the recipient/sender for MSG Get (the caller VI's name or name override) and MSG Send (parsed out of the Msg input string)? This is confusing. Why not just do the Msg Send the same way as Msg Get (using the caller VI name)?

-

- I would normally put both in a subVI (look inside the get VI) so that get refnum and Generate event is a single VI, a drop-in replacement for the Generate primitive. As I said before, the "Name" is preventing me from doing that, and I'm still umming and ahhing about it because I don't like that it is not a single VI. It annoys me enough that I keep the "Name" unbundle even though I could make it a separate terminal on the "type VI" to remove it. It keeps me thinking about how to get rid of it, as it is a blight in all the VIs I have to work on.

I have an idea regarding the "Name" issue. Would an Xnode be a better solution instead of a .vim file? I think you could use the AdaptToInputs and GetTerms3 functions to figure out the "Name" of the data input (at development time only, of course) when it is wired to a variant data terminal. Then in GenerateCode, wire up a static name to the SEQ input of your template VI. Not sure if it's possible, but you may also be able to dynamically (in the dev environment again) rename the "Data" input terminal in GetTerms3 to have the same name discovered during AdaptToInputs... potentially also changing the generic "Data" event in all event structures to something more meaningful.

-

Attached is a demo using the VIM events. It works pretty well, when it works, but the VIM technology is far from production ready, as we were warned.

Thanks for the example of VIM events and the hard work that went into that example. I noticed the VIM Event Test.vi ran a little choppy on the first run, hesitating every now and then. After stopping and restarting it seemed to be fine. Wonder what's happening here.

Attached are recompiled versions for 2012 / 2014 to save others the pain of manually re-linking the .vim file. Do .vim files work more reliably if they are placed in the user.lib/macros/ ?

Just a quick question... In your "subsystem" VIs (File and Sound), is there a reason for putting the srevent.vim file inside the while loop? Could it be outside the while loop to prevent unnecessary calls to the subVI just to return the reference? Or does it need to be in the while loop for some reason?

Edit - Updated 2014 zip file to have relinked Main.vi

-

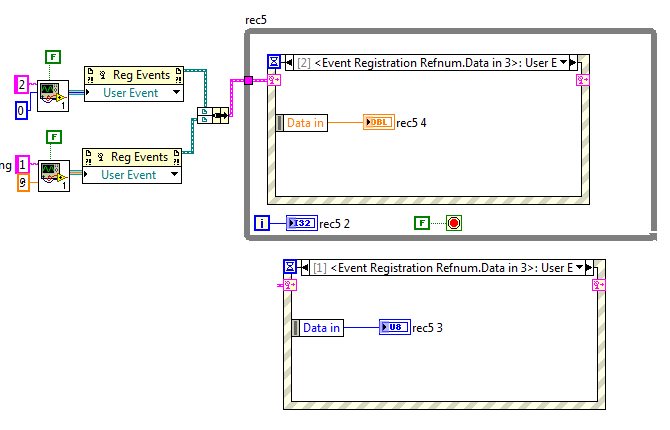

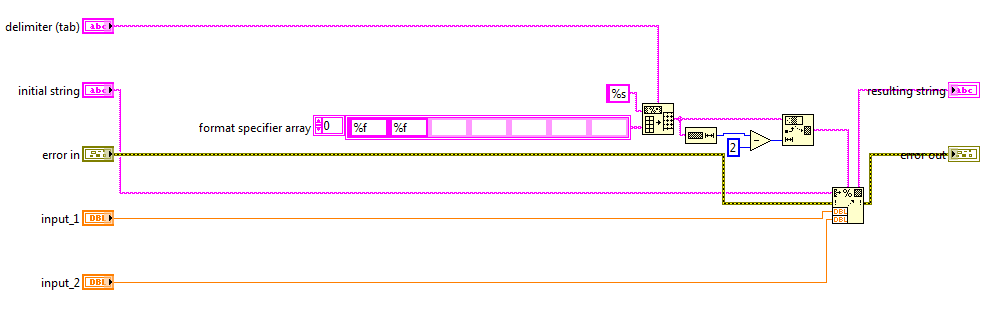

Played for a few hours with vim and event generation.

I wanted to create universal vi for creating and adding UE. It seems that we can create one vi for registering and sending UE data, but registering more than one UE will require xnode

I added one flag to decide when to register and when to generate.

vim needs to be extracted because I couldn't attach it.

Coffee hasn't kicked in yet and I feel a little slow this AM. Can you explain:

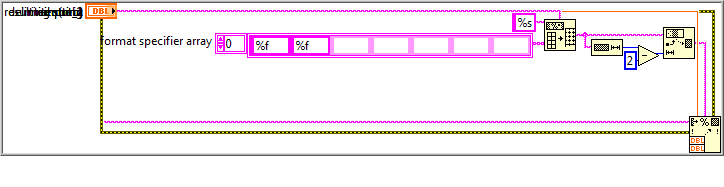

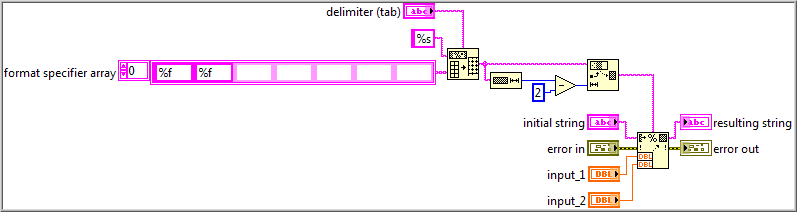

- the use of the queue primitives and what they are for in the .vim?

- why register flag doesn't get passed to the "created new" True case and wrap around the Reg Events Primitive?

And is the gist of the naming issue:

-

That being said, not being able to pass wireless data into or out of subVIs, or even structures, is pretty limiting. Thanks for sharing.

Still waiting on this front. LabVIEW needs to move to 3d diagrams like CAD programs.

Sorry but I just can't figure out what the picture represents on the 'receiver' side

-

- Popular Post

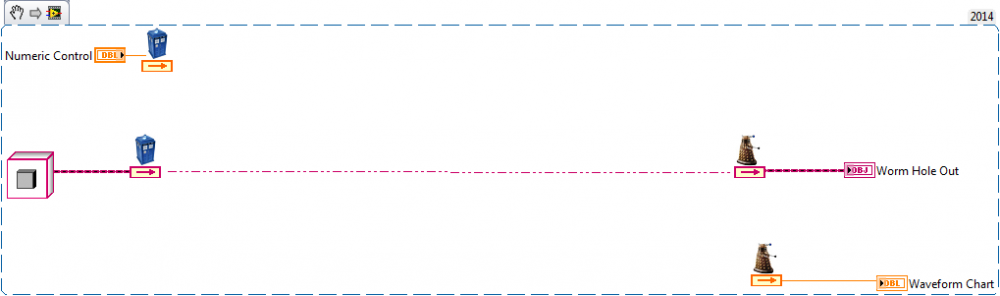

Asynchronous wires seem to be an extension of the wireless wires I was working on with the Wormhole xnode.

The wormhole xnode would have had the wireless cleanliness of a local variable with the speed of direct wire. Oh well.

Disclaimer: do not use this anywhere in your code... it's just for fun.

-

-

Why, for example does the "Return Settings" need file terminals? How could we tell the wizard to not wire and create those terminals for that enum?

"Return Settings" doesn't need file terminals. If you look at the code I added to delete terminals, I use a very rudimentary approach: I check whether the case selector tunnels are wired to anything in the case structure, and delete the controls that have the same name as the unwired tunnels. It's crude and probably not very robust at this point.

I agree with your concerns about code bloat, which is why I dislike polymorphic VIs. Would there be a generic way to do this with an Xnode FGV wrapper that goes through similar operations as hooovahh's script, but creates its own inputs and outputs based on the case (enum) you selected from the Xnode's menu item? Would it be worth it? Or do you just transfer the complexity to the scripting environment, where the potential for mistakes (and code errors) is more likely and less transparent?

-

I added a delete feature to your latest version that will delete the controls for each case if they are not wired inside the case structure of the FGV. It's not perfect, and I had to rename the initialization constant in the example FGV so it doesn't delete the input terminal (the constant had the same name). Anyway, thought I'd throw it out there.

-

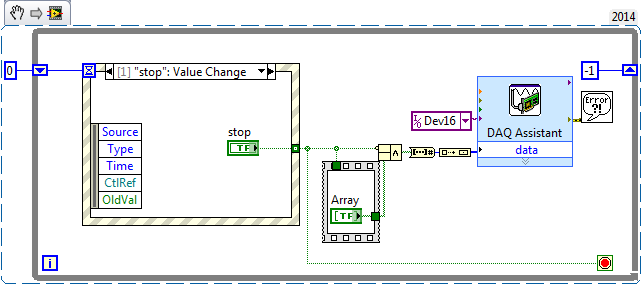

However, I think the reason why my code is inefficient is because I am stopping and clearing every time in my while loop.

Yes

And my stop button logic has way too many not gates. I could probably combine it into one not gate and send that out to multiple writes.

Is that something that would fix my inefficiency?

No

Use a DAQ Assistant (one for each device) in a loop. It's a beginner's friend (and sometimes an advanced user's too). It automatically sets things up for you so you don't have to worry about starting and stopping every loop. If possible, write to the whole port at the same time.

Hint:

-

-

-

Your "final" looks cleaned up to me. Sure the terminals aren't quite right, and wires are a bit long, probably indicating that the terminals are added after the code generation (just a guess not sure XNodes actually do this). But you don't have wires on top of each other like the first image.

Since this XNode lives in the VI that calls it, and is pretty much inlined as far as we can tell, the terminals are probably added when you right click the XNode to look at the generated code, and before then an interface to the XNode is this nebulous thing (at least to you and I).

That makes sense. Thanks for the help.

-

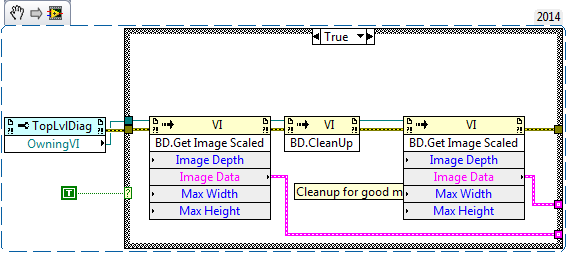

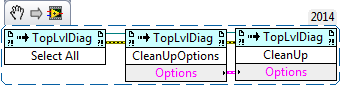

hooovahh, I implemented the same operation as your subVI and it did not appear to work when I looked at the generated code. So I went back and created some debugging code to get the block diagram image before and after cleanup to compare to the final code:

The result of probing these images is that it appears block diagram cleanup occurs but then gets lost:

Before BD.Cleanup:

After BD.Cleanup (just what I want):

Final "Generated Code" (somewhat crapified)

The only other thing that happens after the Generate Code Ability VI is that I do an "Update Image" (calls the Image Ability). Could this cause the diagram to get screwed up?

-

Rookie question here. I'm getting lazy and don't want to click the cleanup diagram button when I'm debugging generated code in the Xnode.

Is there a way to clean-up the Xnode diagram with scripting? I tried putting some cleanup commands on the diagram reference in generate code ability, but I'm guessing the code is not actually generated until after this VI is completed. Or maybe I'm doing something wrong. Is there another ability that can run after the code is actually generated where I can cleanup the diagram for debugging purposes?

-

-

-

Tnx for the reply

So if I understand correctly, I still use producer/consumer. In the producer there are only DAQ blocks that fill up the queue. In the consumer loop I can then use the circular buffer and later save the data?

You can still have a producer/consumer loop if you want. The nice thing about hooovahh's circular buffer is that it's DVR-based, so you could split the wire and read the data in parallel if you want.

I haven't seen your code and do not know how you want to trigger the save data. Do you need to analyze the whole buffer every iteration to determine whether to save or are you doing something like just triggering on a rising edge level and only need to look at each daq read chunk?

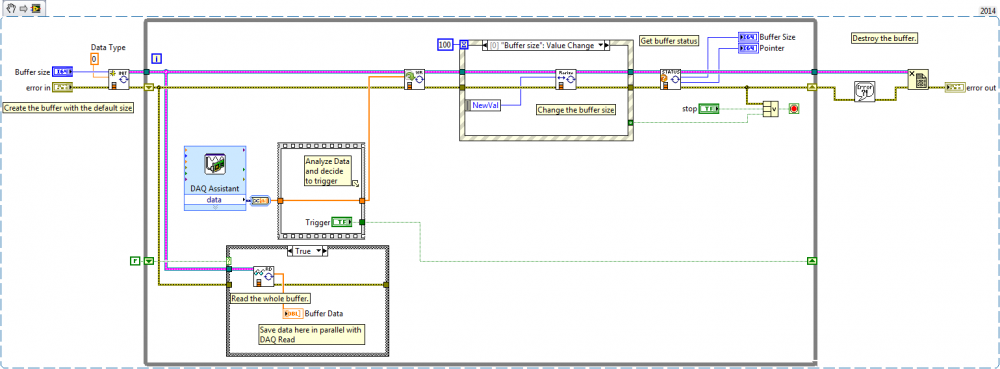

You can probably do all the DAQ reading/triggering/saving in a single loop if you use pipelining. But I can only speculate unless you attach code. A crude example using the Circular Buffer Demo.vi as a starting point:
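For readers without LabVIEW at hand, here is a rough Python analogy of the single-loop idea. It is not the Circular Buffer Demo.vi, just a sketch under assumptions: the chunk source, buffer length, and threshold are made-up placeholders, and the `carried` variable plays the role a shift register would play in a pipelined loop. Each iteration takes in a new chunk while handling the chunk carried over from the previous iteration, and only that chunk is checked for a rising edge.

```python
from collections import deque

def rising_edge(prev_last, chunk, threshold):
    """Return True if the signal crosses threshold upward inside chunk."""
    last = prev_last
    for sample in chunk:
        if last is not None and last < threshold <= sample:
            return True
        last = sample
    return False

def acquire(chunks, buffer_len, threshold):
    """Single loop: take in a chunk, then handle the one carried from the last iteration."""
    ring = deque(maxlen=buffer_len)  # circular buffer: oldest samples fall off the front
    saved = []                       # snapshots stand in for "save to file"
    carried = None                   # hypothetical stand-in for a shift register
    prev_last = None                 # last sample seen, so edges across chunks are caught
    for chunk in list(chunks) + [None]:  # one extra pass flushes the final carried chunk
        if carried:
            ring.extend(carried)
            if rising_edge(prev_last, carried, threshold):
                saved.append(list(ring))  # trigger: keep the buffer contents
            prev_last = carried[-1]
        carried = chunk
    return saved

snapshots = acquire([[0, 0, 0], [0, 1, 5], [5, 5, 5]], buffer_len=4, threshold=3)
```

In LabVIEW the read and the processing of the carried chunk would run in parallel within one iteration; plain Python runs them one after the other, so this only shows the data flow.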

-

-

The easiest way to do this is just to replace “States on back” with a User Event message to oneself, but with a little sophistication one can create reusable subVIs that either do a delayed User Event or set up a “trigger source” of periodic events. Here’s an example of a JKI with two timed triggers:

Very impressed with the simplicity and elegance of that timer solution.
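As a text-only analogy of the quoted idea (not the original LabVIEW code), the sketch below posts messages to a queue that a single loop drains, much as an event structure handles User Events. One helper fires once after a delay and the other acts as a periodic trigger source. The function names, event names, and timings are all hypothetical.

```python
import queue
import threading

def delayed_event(events, name, delay_s):
    """Post `name` to the event queue once, after delay_s seconds."""
    timer = threading.Timer(delay_s, events.put, args=(name,))
    timer.daemon = True
    timer.start()
    return timer

def periodic_trigger(events, name, period_s, stop):
    """Post `name` every period_s seconds until the stop flag is set."""
    def run():
        while not stop.wait(period_s):  # wait() returns False when it times out
            events.put(name)
    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread

def run_demo():
    events = queue.Queue()
    stop = threading.Event()
    periodic_trigger(events, "poll", 0.02, stop)
    delayed_event(events, "shutdown", 0.15)
    handled = []
    while True:
        name = events.get()  # blocks until some trigger posts an event
        handled.append(name)
        if name == "shutdown":
            stop.set()       # stop the periodic source before leaving
            break
    return handled
```

The point of the design is that the handling loop never sleeps or polls a clock itself; all timing lives in the trigger sources.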

<possible thread hijack>

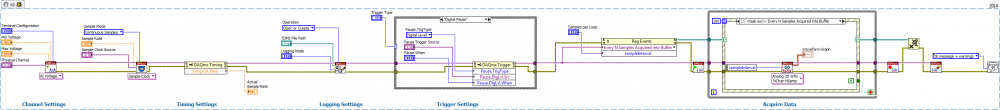

Along the same lines... do you guys ever use the DAQ Events functionality within the JKI state machine in the same manner? E.g., using the DAQmx events along with a DAQmx read in the event structure, similar to this LabVIEW example:

\examples\DAQmx\Analog Input\Voltage (with Events) - Continuous Input.vi

Or do the downsides of reading DAQ at high speed in an event loop outweigh the potential benefits?

-

Hi bbean,

Thank you for your reply. Quick question before I try this, wouldn't this not work since the data flow will be in the Wait to Scan Complete while loop? If I press the Stop button, the event structure will not operate because I am stuck in the while loop.

I would need to stop the while loop, prior to moving on to the event structure. Please correct me if I am wrong.

The event structure is in a parallel loop (just like your consumer loops) with a branch of the VISA session going to it after your initialize. The event loop will be waiting for an event (in this case the stop button press).

When you press the stop button, it will fire an event. In that event you would execute the VI I attached, which should kill the VISA session. That will cause an error in the Read VI (unlocking it) in your loop, where you currently have the stop button control wired up.
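The same arrangement can be sketched in Python as an analogy. `FakeSession` is a toy stand-in invented for this example, not a VISA API: its `read` blocks until another thread calls `terminate`, at which point the read fails with an error and the acquisition loop can exit.

```python
import threading

class FakeSession:
    """Toy stand-in for an instrument session with a blocking read (hypothetical)."""
    def __init__(self):
        self._cond = threading.Condition()
        self._terminated = False

    def read(self):
        # No data ever arrives in this demo, so read blocks until terminate().
        with self._cond:
            while not self._terminated:
                self._cond.wait()
        raise IOError("session terminated")

    def terminate(self):
        with self._cond:
            self._terminated = True
            self._cond.notify_all()

def run_demo():
    session = FakeSession()
    stop_pressed = threading.Event()
    log = []

    def acquisition_loop():
        try:
            session.read()       # stuck here, like the blocked Read VI
        except IOError as err:
            log.append(f"read aborted: {err}")

    def event_loop():
        stop_pressed.wait()      # like the event structure waiting for the stop button
        session.terminate()      # the resulting error unblocks the read in the other loop

    workers = [threading.Thread(target=acquisition_loop),
               threading.Thread(target=event_loop)]
    for worker in workers:
        worker.start()
    stop_pressed.set()           # simulate pressing Stop
    for worker in workers:
        worker.join(timeout=2)
    return log
```

The stop handler never touches the acquisition loop directly; it only invalidates the shared session, and the error does the rest.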

-

Not sure this will work, but try using the attached VI in a parallel while loop with an event structure and an event case for the stop button value change. It's just a wrapper around the viTerminate function in VISA.

Also put a sequence structure around the current stop indicator and wire the error wire from just above to the new sequence structure to enforce dataflow to the stop button. This way it will read the correct value after the event structure fires.

In terms of your idea with the timeout, I would like the hardware to do the counting of 15 minutes not the software.

Not sure why you would do this, but if it's your preference then go ahead. As you can see, it's causing you problems.

I am leaning towards modifying the subVI and constructing my own algorithm to read the buffer of the hardware.

Don't waste your time.

-

The last time I did data acquisition that required start triggering (ai/StartTrigger) was 5 years ago in a LV8.6 application. I seem to remember that the dataflow would pause at the slave's DAQmx Start Task.vi until the master arrived at its DAQmx Start Task.vi, then each task would proceed to their DAQmx Read VIs.

Fast forward to LV2014 where I'm trying to help someone on another project get DAQmx start triggering working. In LV2014, the process appears to operate differently and the slave's DAQmx Start Task.vi executes without pause and continues to the DAQmx Read.vi where it doesn't return data until the master AI input starts.

Did something change or am I growing old and developing dementia?

-

Recently I was having massive slowdowns opening VIs, including classes, but the culprit turned out to be an old link to an SCC Perforce server that was no longer valid. Not sure if that is your problem or not, but if you have source control enabled in LabVIEW, you could check that.

-

2nd this: Right-click on the read and write primitives and select "Synchronous I/O Mode>>Synchronous".

-

The manual says that you are supposed to send 0x00 for 1 sec,...

What if you decrease the frequency of the 0x00 sends, e.g., have a 1 ms wait between each 0x00 send and use a while loop that exits after 1 second of elapsed time (vs. a for loop)?
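In text form, the suggestion amounts to something like the Python sketch below. The `send` callable is a placeholder for whatever writes bytes to the port (an assumption, not part of the original code), and the 1 s duration and 1 ms gap are simply the values mentioned above.

```python
import time

def send_wakeup(send, duration_s=1.0, gap_s=0.001):
    """Send 0x00 repeatedly, waiting gap_s between sends, until duration_s has elapsed."""
    deadline = time.monotonic() + duration_s
    count = 0
    while time.monotonic() < deadline:  # exit on elapsed time, not on an iteration count
        send(b"\x00")
        count += 1
        time.sleep(gap_s)
    return count

# Example with a list standing in for the port, using a short duration:
sent = []
sends_made = send_wakeup(sent.append, duration_s=0.05)
```

Exiting on elapsed time keeps the total wakeup period close to the requested duration even if each write or wait takes longer than expected, which a fixed-count for loop cannot guarantee.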

-

I can't really tell from the NI IO Trace or the code. Do you have the manual that describes the wakeup protocol, and could you attach that as well?

Is the touch panel a "real" serial port or a USB to serial converter?

One problem I've had in the past with USB comm (or USB->serial com) was with the OS sending the USB ports to sleep. Not sure if it could/would be able to do this on a "real" COM port. Check the Advanced Power Settings in Control Panel\All Control Panel Items\Power Options\Edit Plan Settings and disable this everywhere. You may also have to disable something in the BIOS. The worst part about the USB sleep feature is that the more efficient you make your program the more likely the OS is to power down the USB port.

-

Can you attach your 2009/2012 VIs and NI IO Trace logs?

-

VIM Demo-HAL

in VI Scripting

Posted

This is a continuation of conversation from here https://lavag.org/topic/19163-vi-macros/?p=115771

So I decided to update ShaunR's VIM Event Demo with Xnodes. It's still rough around the edges, but I think it cleans up the diagram pretty well and adds the benefit of displaying relevant event names in the event structures. For now I got rid of the "name in" input on the event / event register Xnode entirely, so you have to name all the constants or controls that go into the Xnode or it won't work. I could put the "name in" input back and make it an override, but I don't have time right now as I'm preparing for more important things... vacation.

Take a look and tell me what you think. Hopefully I didn't leave out any VIs. Many thanks to hooovahh, as I used his Variant Write repository Xnode as a starting point and have learned a bunch of stuff from his Xnodes.

Xnode Event 2014.zip