Everything posted by Omar Mussa

-

I found the CLA-R to be basically as advertised in the prep materials (practice exam, etc.). I also found it helpful to take the free 'skills assessment' exam online (which is basically designed to steer you to NI Training - Basics?), as it helps to practice answering NI-style exam questions.

-

Congrats John! What, you don't want to take the whole exam again?

-

I'm trying to print a "Standard" text report using the Report Generation Toolkit - for some reason if I set the font type to 'Courier' (using "Set Report Font.vi") and print the report, it always prints at the same size (10 I think) no matter what I actually enter as a font size (trying to use a smaller size --> 8). However, if I set the font to 'Courier New' I can set the font size to 8 and it seems to work (the printout is smaller). I'm using LV2009 SP1. Anyone else see this before? Is it a LabVIEW bug in the Report Generation Toolkit (RGT)? Is it a printer driver bug on my machine? Also, the 'Font Output' always returns the default data - which makes it useless to me right now - I was hoping to see it update the font name/size to ensure that the data was set correctly. Another RGT bug?

-

Way to start the planning early this year! Looking forward to it!

-

LVOOP for 8.2 - any hidden problems?

Omar Mussa replied to mzu's topic in Object-Oriented Programming

I think 8.2 also had issues building EXEs that contained LVOOP classes that used dynamic dispatching (name collision issues) - I recall having to have my classes in specific destination folders outside of the exe (pretty sure that was in 8.2).

-

Good luck Deirdre! You've been an amazing LV Community Promoter!

-

LabVIEW Development System Has Stopped Working

Omar Mussa replied to BobHamburger's topic in LabVIEW General

When you get done with this trip, you should definitely consider using virtualization to prevent getting stuck in these situations. If you're already on Windows 7, I'd recommend installing VMware Workstation. Basically, it lets you support each customer project in isolation, which greatly reduces risk across your multiple projects/LV versions. Also, when really painfully annoying issues like this happen, you can generally revert a VM (via a backup or snapshots, etc.) a lot more easily than you can fix an issue on your physical machine.

-

Best Practices in LabVIEW

Omar Mussa replied to John Lokanis's topic in Application Design & Architecture

I agree with you that unit testing is not free, but am surprised to think it may be a common perception. Also, I would agree that it's not valuable to create a comprehensive unit test suite (testing all VIs for all possible inputs). What's most valuable is to identify the core things you care about, and test those things for the cases you care about. Unit tests can be a really useful investment if you plan on refactoring your existing code and you want to ensure that you haven't broken any critical functionality (granted, the tests themselves will most likely need to be refactored during the refactoring process). But they do add time to your project, so just make sure that you're testing the stuff that you really care about.

-

Non-zero default values for reference types

Omar Mussa replied to Tomi Maila's topic in Object-Oriented Programming

I can't think of a single instance where I'd want a refnum constant to have a default value other than a null refnum. There isn't really such a thing as 'refnum persistence' that I know of, so why should the data type even allow storing a value other than null for its default value?

-

I believe the proper usage is iSuck

-

Dynamic dispatch simulation subsystem

Omar Mussa replied to mzu's topic in Object-Oriented Programming

Sounds like a bug to me. If you had already set up your class hierarchy and are now 'adding' dynamic dispatching, make sure that all dynamic dispatch methods with the same name in your class hierarchy have dynamic dispatch terminals and the same conn pane.

-

Thank you everyone!

-

Happy holidays everyone! I'm happy to announce that our family has grown bigger! (Actually, the main event was about a month ago but life moves by quickly). The newest addition to my family is Ella Rose! Hope everyone has a happy and safe holiday break!

-

Suite -- I mean -- sweet! I plan on looking at what you've done later, but it sounds like you've got a more flexible pattern for creating/reusing your tests in different test environments now.

-

Yes, this is typically what I have done. My TestCase Setup methods are still useful for things that MUST be initialized for that specific test. For example, if I need to create a reference I can do it in the TestCase.Setup. It's really more of an art than a science for me right now, but my end goals are simple:

1) Easy to write tests
2) Easy to debug tests (alternatively, easy to maintain tests)

Usually, but not in this case, at least for me. Until I started working on the last round of VI Tester changes, where I added support for the same test in multiple TestSuites, I wasn't really using properties of the TestCase with accessor methods for configuring a test. But I find it is a powerful tool and intend to use it now that it is supported better. I also haven't really had a use case for creating a bunch of 'Setup' methods for each test case that is called in the TestSuite.New --> so I really am thinking that this is an interesting way to set up tests. I like your approach for this and right now I can't think of a better way to do this.

Just thinking out loud ... Since its inception, I've wanted to create a way for tests to inherit from other tests within VI Tester (which is not supported right now), and maybe that would make it easier to run the same tests against your child cases --> I can't recall where I got stuck trying to make this happen within VI Tester development, but I think it may be what would really be the best ultimate solution.

Glad that I could help!

-

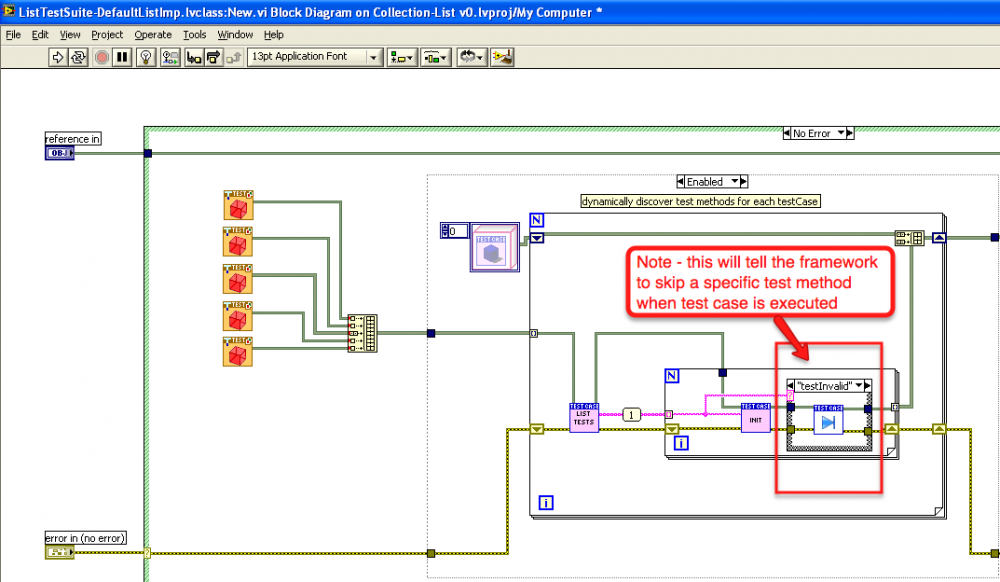

Actually, this is not true. You can call the TestCase.skip method to skip a test. You can do this in two different ways ... 1) You can call skip within the test itself which is what is how we show it being used in our shipping example via the diagram disable structure. 2) You can use it from within a TestSuite -- basically invoking the skip method during TestSuite.New will cause the execution engine to skip that test when the TestSuite is run. This is an undocumented feature and is not obvious. Here is a screenshot example of what I mean: I think your implementation is interesting. I think that it should let you scale well in that you can test various input conditions pretty easily based on your accessor methods in the TestSuite.New class. I typically have done it differently --> namely sticking all of the List methods in one testCase and then using multiple testSuites to test the different input environments. This causes my hierarchy to have more TestSuites than yours. However, I like what you are doing as it makes your tests very easy to read and you can easily reuse the test (by creating a new TestSuite for example) for a ListTest.Specialized child class in the future and your tests should all still work, which is nice. I think this is just a personal choice. You can code it this way so that you don't 'accidentally' misuse the mock object. Or you could have just included a Create/Destroy test in each of the other testCases and had a mock object available for all of those cases and just had each test choose whether or not to utilize the mock object. (Side note - I know this code was in progress but just want to point out that I think you forgot to set the mockObject in the TestSuite.New method for this test case --> it looks like the test will just run with default data right now where I think you intended to inject a mock object using the accessor methods). Yes, this is correct. 
And TestSuites can contain other TestSuites so it is possible for different parts of the test environment to be configured in different 'stages' essentially and the test will run in the combined test harness.
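VI Tester code is graphical, so the two ways of skipping and the nesting of suites can only be shown here by analogy. The sketch below uses Python's `unittest`, which has similar TestCase/TestSuite concepts. `ListTest`, its `skip` helper, and `build_suite` are hypothetical names, and the suite-side skip is hand-rolled for illustration; it is not how VI Tester implements it.

```python
import unittest


class ListTest(unittest.TestCase):
    """Hypothetical test case; not part of VI Tester."""

    _skip_reason = None  # set by a suite that wants this test skipped

    def skip(self, reason):
        # Hand-rolled helper. Its name does not start with "test",
        # so unittest never collects it as a test method.
        self._skip_reason = reason
        return self

    def setUp(self):
        if self._skip_reason is not None:
            self.skipTest(self._skip_reason)

    def test_append(self):
        items = []
        items.append(1)
        self.assertEqual(items, [1])

    def test_sort(self):
        self.assertEqual(sorted([2, 1]), [1, 2])

    def test_in_progress(self):
        # Way 1: the test skips itself.
        self.skipTest("not implemented yet")


def build_suite():
    inner = unittest.TestSuite()
    inner.addTest(ListTest("test_append"))
    # Way 2: the suite decides to skip a test while it is being assembled.
    inner.addTest(ListTest("test_sort").skip("not valid in this environment"))
    inner.addTest(ListTest("test_in_progress"))

    # Suites nest: the outer suite runs everything the inner one contains.
    outer = unittest.TestSuite()
    outer.addTest(inner)
    return outer


if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(build_suite())
```

Running the outer suite executes `test_append` and reports the other two tests as skipped, one by its own choice and one because the suite asked for it.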

-

Really cool! I'm happy to hear this!

Actually, while VI Tester is built using OO, it is intended that users without OO experience can use it. I'm glad to see that this is the case.

At JKI, this has saved us from deploying our products (including VIPM) with major bugs that would not have been caught through normal user testing. I am glad VI Tester has worked for you as well. I think of the tests as an investment against things breaking in the future without me knowing about it.

Awesome! That is how I tend to use VI Tester as well at the moment.

-

I haven't had enough time to really document VI Tester testing strategies properly yet, partly because the *best* patterns are still emerging/evolving. Here are some of the quick notes I can give that relate to strategies I've found -- I really intend to create more documentation or blog posts but I've had no time to really do this.

1) TestSuites are your friend. I haven't really documented these enough or given good examples of how to use them, but they are a powerful way to improve test reuse. TestSuites can do three things for you:

a) Group tests of similar purpose
b) Allow you to configure a test 'environment' before executing tests
c) Allow you to set TestCase properties (this is something I only recently started to do -- you can create a TestCase property 'MyProperty' and in the TestSuite Setup you can set 'MyProperty=SomeValue' --> Note that you'll need an accessor method in the TestCase, and it can't be prefixed with the word 'test' or it will be executed as a test when the TestCase executes).

2) A TestCase class can test a class or a VI --> In a truly ideal world, each TestCase would be for one VI under test and each testMethod in the TestCase would exercise different functionality of that VI. LVOOP doesn't really scale in a way that supports this (as far as I've used it so far), so I typically create a TestCase for each class and I design my testMethods to test the public API for that class.

3) It doesn't matter if you can't execute a TestCase method without it running within a TestSuite. From the VI Tester GUI, you can select a test method at any level in the tree hierarchy and press the 'Run Test' button, and it will execute all of the TestSuite setups that are needed to create the test harness. Similarly, if you execute a test from the API, as long as the test is called from the TestSuite, it will be executed correctly. For debugging these types of tests, I find 'Retain Wire Values' to be my best friend.

I hope this helps. I aim to look at your code at some point, but it won't happen until next week as I'm out of the office now.
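To make the idea of a suite setting TestCase properties concrete, here is a rough analogy in Python's `unittest` rather than VI Tester itself. `ParserTest`, `set_separator`, `suite_for`, and `all_environments` are made-up names for illustration. It also shows the naming rule in Python terms: only methods whose names start with `test` are collected as tests, so the accessor must not use that prefix.

```python
import unittest


class ParserTest(unittest.TestCase):
    """Hypothetical test case with a property that a suite can configure."""

    separator = ","  # default used when the test runs outside any suite

    def set_separator(self, value):
        # Accessor: deliberately not named test_*, otherwise the loader
        # would try to run it as a test.
        self.separator = value
        return self

    def test_split(self):
        line = self.separator.join(["a", "b", "c"])
        self.assertEqual(line.split(self.separator), ["a", "b", "c"])


def suite_for(separator):
    # One suite per test environment; the suite configures the test case.
    suite = unittest.TestSuite()
    suite.addTest(ParserTest("test_split").set_separator(separator))
    return suite


def all_environments():
    # The same test is reused in two differently configured suites.
    return unittest.TestSuite([suite_for(","), suite_for(";")])


if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(all_environments())
```

The test body stays the same while each suite supplies its own configuration, which is the reuse benefit described in note 1.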

-

Build Spec order does not take into account dependencies

Omar Mussa replied to John Lokanis's topic in LabVIEW Bugs

The best workaround I can think of would be to do an automated build and programmatically invoke the build specs in the correct order. Note - this won't help the novice user, but probably most novice users aren't building multiple target files.

-
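The ordering part of that workaround can be sketched outside LabVIEW. The following is a minimal Python sketch, assuming you maintain the dependency map between build specs yourself. The spec names are invented, and `invoke_build` is a placeholder callback for whatever actually triggers a build in your setup; it is not a LabVIEW API.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def build_order(dependencies):
    """Return build spec names so each spec comes after its dependencies.

    `dependencies` maps a build spec name to the set of names it depends on.
    A circular dependency raises graphlib.CycleError.
    """
    return list(TopologicalSorter(dependencies).static_order())


def build_all(dependencies, invoke_build):
    """Call `invoke_build(name)` for every spec, in dependency order."""
    for name in build_order(dependencies):
        invoke_build(name)


if __name__ == "__main__":
    # Hypothetical project: the installer needs the EXE,
    # and the EXE needs a library built first.
    specs = {
        "Installer": {"Main EXE"},
        "Main EXE": {"Core Library"},
        "Core Library": set(),
    }
    build_all(specs, invoke_build=lambda name: print("building", name))
```

Keeping the dependency map in one place means adding a new build spec only requires listing what it depends on; the order is then derived rather than maintained by hand.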

Congratulations to Aristos Queue on 10 years at NI

Omar Mussa replied to crelf's topic in LAVA Lounge

Congratulations and thank you!

-

I agree with all the sentiments above. Plus, the Bay Area LabVIEW User Group is a particularly good group, you should definitely go.

-

which Subversion client for MacOS X?

Omar Mussa replied to Antoine Chalons's topic in Source Code Control

I use SmartSVN because there's a free version and it does enough of what I want to make it useful (plus it's cross-platform). Earlier, I tried Versions and really liked it, but I don't use SVN from my Mac that often so I decided not to buy it.

-

I need to reply just so that I can get my post count up. Congrats JG!