Posts posted by Aristos Queue

-

Every wire of your class type is a separate object. Every object is an independent instance of a class.

Hi All

Is it possible to create different instances of the same class programmatically?

Suppose I want to have graphical displays: can I create a Graph class and create multiple instances of it?

Regards

Suneel

-

I agree with this analysis generally. Now, having said that, there is a concept of asynchronous dataflow, where data flows from one part of a program to another, and a queue that has exactly one enqueue point and exactly one dequeue point can be considered dataflow safe.

So that node (Dequeue Element) is executed under dataflow rules, like all LabVIEW nodes are, but what goes on inside that node is a non-dataflow operation, at least the way I see it. It's "event-driven" rather than "dataflow-driven" inside the node. Similarly, a refnum is a piece of data that can and should be handled with dataflow, but the fact that a refnum points to some other object is a non-dataflow component of the LabVIEW language.

Shared, local and global variables also violate dataflow for the same underlying reason as refnums. A variable "write" doesn't execute until its input value is available, but what it does under the hood depends on non-local effects.

So the best definition I can offer for "dataflow safe" is "all nodes execute when their inputs are available and, regardless of the behavior behind the scenes, no piece of data is simultaneously 'owned' by more than one node." I've been trying for some time to refine this definition.

Actually, NI is trying to encourage the use of "G" as a way to differentiate the language from the IDE. This is contrary to our earlier position, because we were trying to avoid confusion among customers, but as we have grown and our products have been used more in larger systems, the distinction has become more useful than harmful. We started making that distinction at the end of 2010, and it will take a while to permeate our communications, documentation, etc.

(we're not still calling it 'G', are we?)

-

I might buy this argument for the CLD, but not for the CLA. You don't have to fill in all the detailed code in the CLA, which means it's mostly "Create some basic classes and put empty VIs into those classes."

I've used OO in all my LV applications since 2000, but I agree, I won't use OO in the exam, since without a toolkit it would take me a bit too long.

Somewhere between 2 and 3 out of every 10 CLA exams submitted use LVOOP as their primary design orientation. I did both my CLD and CLA exams with "no VIs that weren't a member of some class", partly because I usually design that way and then prune back later if it seems prudent, so at the architecture stage it really is all classes, and partly to prove that it could be done within the time.

The exam doesn't care so much about the terminology as long as you explain what you're doing. The value of the terminology is that a shared vocabulary can save a lot of time. If you can just write down "put a standard producer/consumer loop pair here linked by a queue", that's faster than explaining all the bits and pieces. A common parlance develops around any technical field. The CLA exam does not require that you speak it, but it does give an advantage to those who do.

Yes! I kept reading over and over on LAVA about this wonderful thing called "plug-in architecture", finally researched it, and discovered it was something I'd been doing for quite some time -- I just didn't know the fancy name for it. I will admit to some concern over potentially being stuck by not knowing "correct" terminology when/if I ever get around to taking any of these certification exams.

And, for the record, since you are reading LAVA, you've picked up that bit of terminology. Anyone who keeps reading like that will eventually pick up the terms.
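For readers coming from text languages, the "standard producer/consumer loop pair linked by a queue" can be sketched in Python with two threads and `queue.Queue`. This is only an analogy, not LabVIEW code; the `STOP` sentinel and the function names are illustrative assumptions. It also shows the arrangement with exactly one enqueue point and exactly one dequeue point that the dataflow discussion earlier calls dataflow safe.

```python
import queue
import threading

STOP = object()  # sentinel that tells the consumer loop to shut down


def producer(q: queue.Queue, items) -> None:
    """The single enqueue point: pushes each item, then the stop sentinel."""
    for item in items:
        q.put(item)
    q.put(STOP)


def consumer(q: queue.Queue, results: list) -> None:
    """The single dequeue point: processes items until it sees the sentinel."""
    while True:
        item = q.get()  # blocks until the producer has sent something
        if item is STOP:
            break
        results.append(item * 2)  # stand-in for real processing


def run_pipeline(items) -> list:
    q: queue.Queue = queue.Queue()
    results: list = []
    t_prod = threading.Thread(target=producer, args=(q, items))
    t_cons = threading.Thread(target=consumer, args=(q, results))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return results


if __name__ == "__main__":
    print(run_pipeline([1, 2, 3]))  # [2, 4, 6]
```

Because the queue is FIFO and only one loop writes to it, the consumer sees items in the order they were produced, and only the consumer ever touches `results`.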

It would be worth asking whether the CLA graders would accept a VI that says, "Download standard toolkit XYZ from location MNO and instantiate the following template, then put that subVI here." ... and similar comments. Any of the tools like LapDog, or AMC, or ReX, or the Actor Framework, or the GOOP Toolkit might be usable then. After all, that's exactly the instructions I would give to a developer in some of these cases.

No, but I'd have to give enough details that an over-the-wall developer could implement it. That means describing the details of the MessageQueue class, the Message class, and the ErrorMessage class.

I seriously doubt that it would get you off the hook for actually doing the module design and layout of pieces, but if you can broadly mimic the pieces that you want those tools to generate, that might pass examination. Worth asking, IMHO.

My understanding of the intent of the CLA, and how it is used by managers who look for the certification when hiring, is that the CLA is a leader of CLDs, someone who works a bit faster than a CLD because of long practice, but more importantly, someone who can teach. Not every crackerjack developer can actually teach others how to develop. An architect has to have that skill. "This project is larger than what any single developer can build alone; I'm going to spec out the parts of that system and then farm them out to you guys. If you build them wrong, I'll show you why it doesn't fit with the rest of the system." Over time, a CLA should be able to create new CLDs from untrained new hires. That's my understanding, anyway, and I believe that's why documentation counts so much in the exam.

I suspect it is driven by business needs. Customers want some indication of whether a consultant is worth his salt or blowing smoke, so NI created certification. I'm not particularly fond of the way the CLA is structured, but perhaps that is driven by customers too. If customers are asking for architects to do designs so they can be implemented by lower cost labor elsewhere, then maybe that's what NI should give them.

-

Or don't, since the method reinitializes the value property to default and those things *are not* values. It would be really frustrating for apps that don't dynamically populate their tables, etc., if LV wiped out the content every time the value was reset. Rings shouldn't be wiped, and neither should tables.

Get that on the LabVIEW Idea Exchange! Unless it already is, and in that case: link me to it!

-

I wouldn't recommend the library refnums for anything other than editor support. They were never intended to be in the runtime engine -- they were added in LV 2009 to support TestStand talking to LabVIEW, and, honestly, I think including them is a bit misleading. The library refnums all go through the project interface. The functionality that they were designed to promote is not the runtime reflection that an OO programmer might be looking for, but instead the editor capabilities that a LV add-on author might be looking for. They require a swap to the UI thread, which is an obvious drawback.

I haven't found any place where I actually get the qualified name from an object. In the code where I thought I had used the "to variant" conversion trick, I apparently found a different workaround and ended up not needing the qualified name at all. So Flatten To String is still your best approach in all shipping versions of LV, as icky as that may be.

There just hasn't been much request for the reflection API, and for most APIs, the path has been sufficient since any API designed to be used in a running VI (as opposed to a scripting editor tool) doesn't have to worry about classes that have never been saved to disk.

-

Thanks, but that only gives the FQN for the class of the wire, not the info for the current actual class.

With the GetLVClassInfo.vi approach, I guess I could include a must-override VI in every lvclass in which GetLVClassInfo.vi itself gets called... but then I could just return a string constant since I know the value at edit time.

*head smack* I forgot about that. It's the type descriptor included in the variant, which is the wire... it doesn't delve into the object.

Somehow I did get the right type descriptor into the variant. Now, if only I could remember how I did that...

-

We couldn't find a VI or primitive in LV that gives us the Fully Qualified Name (FQN) for a given LVOOP object, so we came up with our own function to do this (see attachment, LV2010 SP1).

Ask and ye shall receive...

vi.lib\Utility\VariantDataType\GetLVClassInfo.vi

Just wire the class wire directly to the Variant input.

Shipped with LV 2010 at least, but I'm *mostly* sure it was in LV 2009. *May* have been earlier, but I *think* it was 2009.

The LabVIEW version of any library (including .lvclass) can be obtained with the App method "Application:Library:Get LabVIEW File Version" without having to load the library into memory. Class version is the same as library version... if you have a reference to the class itself, you can use the "Library:Version" method.

(LabVIEW Version is easy to get from a library reference. Class version I think we'd need AQ to weigh in on.)

-

This bug will be fixed in LV 2011. If you are part of the LV beta program, you should be able to confirm this fix in the second beta.

-

I believe dynamic dispatch (required for overrides) might mean that depending on how you are calling the methods, all classes may have to be loaded even if they are not actually used since it is not known at compile

Incorrect.

-

I forgot to check LV 2010 before the SP1 patch. Well, I'm sorry it broke, but I'm glad we fixed it.

I bet that you have SP1 installed... I haven't. Before tackling this issue with a piece of code in CR, I had not used LV2010 since last NI Week. So I guess you guys corrected the bug with the 2010SP1 release.

-

No one has ever mentioned this behavior before. I've certainly never seen it. I'll keep my eyes open. Obviously if you figure out what triggers it, please let us know.

-

Since LVOOP requires the entire class to be loaded into memory and this is a large project, hundreds and hundreds of unused VIs are loaded into memory and the application is not responsive.

I'm not going to comment on any design aspects. I assume your design is good and this is what it takes. So let's focus on load time.

1) Put all your VIs and classes into a .llb file. The .llb optimizes the contents of the file and significantly improves load speed when a block of VIs must load as one.

2) Try loading in a runtime engine and compare to the load time in a development environment. Are they significantly different? (There will be some difference, but it should be a second or two, at most.) If so, you're probably doing something in the dev environment that is causing the block diagrams and panels of all those VIs to load needlessly. There are various things that can cause this -- scripting tools running in the background, VIs that are saved broken and become good after they load because something else loaded their missing subVIs, etc.

3) If you have LV 2010, you can build packed libraries. DO NOT UNDERTAKE THIS WITHOUT READING DOCUMENTATION. The packed libraries can give you BLAZING FAST LOAD SPEEDS. But they are a power that comes with consequences. Notably, the classes in the packed library have a different qualified name than classes not in the packed library, so any caller VIs need to be written in terms of one or the other. The packed libraries are designed to be "I build this separate component, and then I go develop something that uses that built component", not "I build this monolith of source code and then I turn it into two different components." There are tools to make the latter approach work, but the former approach is what I'd recommend.

-

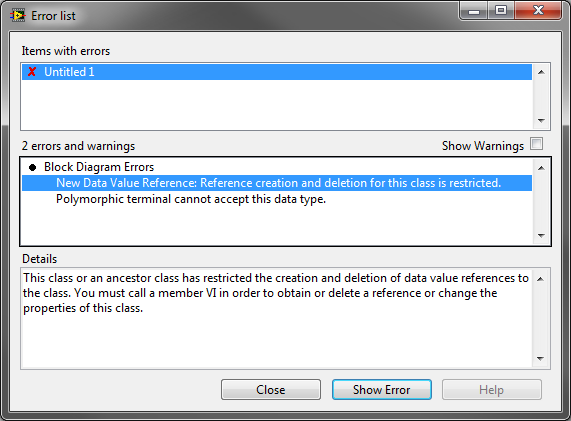

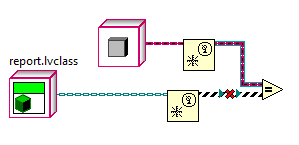

I cannot reproduce the good wire on the Equals prim in LV 2010. Here's what I get:

Do you see anything that I have different from what you were doing? I tried adding the other nodes on the wires (such as the Send Event node) and that didn't make a difference.

And in the VI you just sent, can you just use parent events and send the appropriate data later?

-

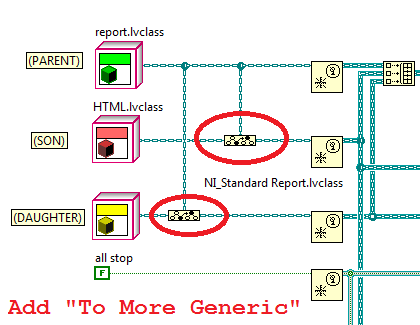

The wire that is now broken is correctly broken.

The wire that is now fixed should still be broken, but at least that one won't crash LV, whereas the other one will.

You should never be allowed to cast an event of child as an event of parent. Consider a hierarchy of Parent, which has two children named Son and Daughter. I create an event of Daughter. I register it with an Event Structure, which now has a Daughter type output. Then I cast the event refnum as an event of Parent. Then I use Send Event to fire the event, but the data I give it is of type Son. The Son data is transmitted and comes out of a Daughter terminal in the Event structure. This is a problem because the private data of Son will be incorrectly interpreted, and you may crash. Yes, this metaphor leads to some terrible jokes. But the crash that occurs is no joking matter.
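The Parent/Son/Daughter hazard can be mimicked in Python as a rough analogy. Python does not reinterpret raw memory the way a bad cast in LabVIEW can, so here the damage surfaces as an `AttributeError` instead of a crash. The class names follow the post; `UserEvent`, `send_unchecked`, `send`, and the fields `shoe_size` and `favorite_color` are hypothetical. `send_unchecked` models sending through a refnum that was cast to "event of Parent", while `send` models the type rule that forbidding the cast preserves.

```python
from dataclasses import dataclass


@dataclass
class Parent:
    name: str


@dataclass
class Son(Parent):
    shoe_size: int


@dataclass
class Daughter(Parent):
    favorite_color: str


class UserEvent:
    """Hypothetical stand-in for a LabVIEW user event: every registered
    handler assumes the payload has the event's declared type."""

    def __init__(self, payload_type):
        self.payload_type = payload_type
        self.handlers = []

    def register(self, handler):
        self.handlers.append(handler)

    def send_unchecked(self, payload):
        # Like sending through a refnum cast to "event of Parent":
        # nothing verifies that the payload is really a Daughter.
        return [handler(payload) for handler in self.handlers]

    def send(self, payload):
        # Only the declared type (or a subclass of it) may be transmitted.
        if not isinstance(payload, self.payload_type):
            raise TypeError(f"expected {self.payload_type.__name__}, "
                            f"got {type(payload).__name__}")
        return self.send_unchecked(payload)


def daughter_handler(d):
    # Trusts the declared type and reads a Daughter-only field.
    return d.favorite_color


if __name__ == "__main__":
    event = UserEvent(Daughter)
    event.register(daughter_handler)
    print(event.send(Daughter("Ann", "green")))  # ['green']
    try:
        event.send_unchecked(Son("Bob", 42))  # Son data reaches a Daughter handler
    except AttributeError as err:
        print("misinterpreted payload:", err)
```

Son and Daughter are siblings, so a Son is never an instance of Daughter; the checked path rejects it before any handler runs.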

I do not know why the Equals prim is allowing the comparison, but at least that comparison isn't actually trying to interpret the data, and it will always return False, since we've blocked the casting.

Now, having said all that, there's a back door to casting that I cannot ever close. Use the following at your own risk: If you have an event of Daughter, you can use the Type Cast node to cast it as an Int32. Then you can use a second Type Cast node to cast that Int32 as an event of Parent. You now face the same danger of crash that was there before. Your framework had better guarantee that the event of Parent is buried so that no one can transmit arbitrary data to that event.

I'll file a CAR about the Equals operation. Don't write code that relies on that comparison being legal -- it's going to close again now that I know about it.

[LATER] CAR 294872 filed.

-

And I will file a bug report to look at that specific error message and try to improve it.

[LATER] CAR 294353 filed.

-

If you want to use it elsewhere, you'll probably need to use the "Seconds To Date/Time" primitive and then write that cluster to the config file. I think that's the only way to get a portable version of the data. Even if you knew the encoding, you'd still need the other system to know the epoch.
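To make the epoch point concrete: LabVIEW timestamps count seconds from 00:00:00 UTC on 1 January 1904, so any other system reading raw seconds has to apply that offset. The Python sketch below is a receiving-side conversion under the assumption that the value arrives as a plain number of seconds (it ignores LabVIEW's full fixed-point timestamp format). Writing ISO 8601 text is an alternative to the cluster approach in the post, not the post's own recommendation, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# LabVIEW timestamps count seconds from 1904-01-01 00:00:00 UTC.
LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)

# Seconds between the LabVIEW epoch and the Unix epoch (1970-01-01 UTC):
# 66 years containing 17 leap days -> 24107 days * 86400 s.
LABVIEW_TO_UNIX_OFFSET = 2_082_844_800


def labview_seconds_to_datetime(seconds: float) -> datetime:
    """Convert LabVIEW-epoch seconds to a timezone-aware UTC datetime."""
    return LABVIEW_EPOCH + timedelta(seconds=seconds)


def labview_seconds_to_unix(seconds: float) -> float:
    """Re-base LabVIEW-epoch seconds onto the Unix epoch."""
    return seconds - LABVIEW_TO_UNIX_OFFSET


def to_portable_string(seconds: float) -> str:
    """ISO 8601 text that another system can parse without knowing the epoch."""
    return labview_seconds_to_datetime(seconds).isoformat()


if __name__ == "__main__":
    print(to_portable_string(LABVIEW_TO_UNIX_OFFSET))  # 1970-01-01T00:00:00+00:00
```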

-

The failure was not in the software developer who spec'd the device, but in the software manager (aka king) who poorly specified design requirements. The software developer actually spec'd out a really nice breakfast food processor. If that wasn't what the king wanted, he should have said so. I hope that the monarchy is overthrown by angry subjects a decade later when they find out that in the republic next door, food replicators are commonplace.

-

1

1

-

-

Or just paste an entire picture into your source code, like the flow chart, or hardware configuration...

Inability to write descriptive comments!

I'd like to answer: why don't you stupid use the VI Documentation? And why don't you use the text-tool in the BD?

Define "major release"... VS put out versions 2003, 2005, 2008, and 2010. Are these major upgrades? They completely keelhauled the user interface between each one. They've got a two-year cycle, most of the time. And the entire .NET language is revving once per year, a very fast cycle time.

These yearly major version updates really suck! If someone asked me, I'd say: a major release once in 5 years would be enough, like Microsoft does with Visual Studio.

LabVIEW specifically and NI generally try to strike a balance between putting out new features (which is the only thing users are really willing to shell out money for) and patching existing features, and between backward compatibility and forward innovation. I think we're doing a good job when I compare us against the other tools that I have to use. Yes, the speed is blazing and it is hard to keep up, but I think LV's front panel is a perfect example of what happens if we don't rev every single year. We took our eye off of that particular ball for a while, focusing on improving the compiler, making new toolkits, expanding diagram syntax, and in just a couple years, instead of ooching along with small differences annually, the panel looks really dated, and everyone comments on it. My theory is that LabVIEW as a whole would suffer that same rapid slippage if we didn't try to rev continuously, and the cost to catch back up -- as we're seeing with the panel -- is substantial.

-

Back in February, Daklu asked me to review this thread, but I missed seeing his private message. Sorry about that...

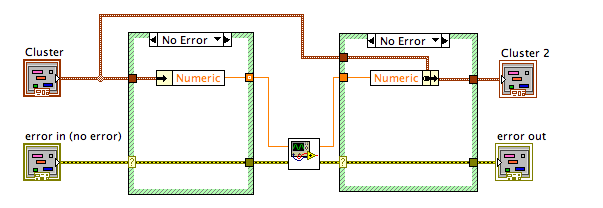

For good error handling, the "error case structure" (hereafter called ECS) only needs to be on the leaf subVIs. In other words, if your subVI is composed entirely of other subVIs or built-in nodes that already check for error, there's no reason for your subVI to check for error. Adding the ECS just adds an unneeded check. It improves performance only in the error case, which is the less common case, and costs a check in the common no-error case. Is the hit minor? Yes -- just a single "test and jump" assembly instruction. Can that hit be devastating? When replicated thousands of times, yes.
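A rough text-language analogy of the leaf-only rule, written in Python because G is graphical: `Error` stands in for the error cluster, and the two leaf functions play the role of subVIs that wrap their work in an ECS. All names, the error code 5000, and the arithmetic are hypothetical. The composite function has no check of its own, yet an upstream error still passes through unchanged because every leaf it calls already checks.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Error:
    """Hypothetical stand-in for LabVIEW's error cluster."""
    code: int
    source: str


def leaf_read(value: int, err: Optional[Error]) -> tuple[int, Optional[Error]]:
    # Leaf: does real work, so it owns the "error case structure" check.
    if err is not None:
        return 0, err  # upstream error: skip the work, pass the error on
    if value < 0:
        return 0, Error(5000, "leaf_read: negative input")
    return value * 10, None


def leaf_scale(value: int, err: Optional[Error]) -> tuple[int, Optional[Error]]:
    # Leaf: same pattern as leaf_read.
    if err is not None:
        return 0, err
    return value + 1, None


def composite(value: int, err: Optional[Error]) -> tuple[int, Optional[Error]]:
    # Built only from leaves that already check the error, so no check here.
    value, err = leaf_read(value, err)
    value, err = leaf_scale(value, err)
    return value, err
```

Adding an `if err is not None` test to `composite` would not change any result; it would only add one more branch on the common path.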

On the next topic, how many of you would consider this to be good code?

Having error terminals on data accessors is equivalent to this. It's a complete waste. This may make you ask, "So, if that's your opinion, and you wrote the templates for data accessors for LV classes, why do the templates have error terminals?" Well, my opinion isn't the only one that matters. :-) And I am the one who later added the options to the dialog to create accessors without error terminals.

At the moment, having the error terminals costs nothing beyond the case structure check. However, LV's compiler continues to improve, and in the not-too-distant future (maybe next year), the version without the case structure should see a significant performance boost over the version with the case structure. The compiler can do more to optimize code that always executes than code that executes conditionally.

-

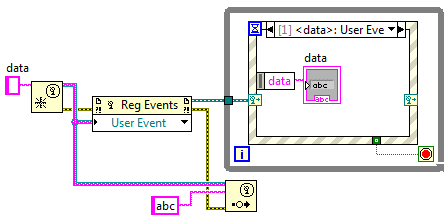

Basically, the Register For Events node should add items to the Edit Events dialog, and creating a case to handle the event should register for the event.

That would introduce a nasty race condition into user events.

Consider this block diagram. Under LV's current scheme, the Event Structure is guaranteed to hear the event. If you changed the behavior of the Register For Event node to not actually do the registration, you would miss the event. That safety net makes possible a number of event architectures that would not otherwise be possible. Moreover, the Event structure would have to redo its registration every time the while loop iterated, which would either be a performance penalty or another timing hole, depending upon how it was implemented.
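The safety net of registering up front can be illustrated with a small Python analogy: registration creates a mailbox immediately, so an event fired before the handler starts waiting is buffered rather than lost. This is a sketch of the timing argument only, not of how LabVIEW implements event registration internally; `UserEventSource`, `early_registration`, and `late_registration` are hypothetical names.

```python
import queue


class UserEventSource:
    """Hypothetical event source: each registration owns its own queue."""

    def __init__(self):
        self._registrations = []

    def register(self) -> queue.Queue:
        # The mailbox exists from this moment on, so anything fired later
        # is buffered even if nobody is waiting on it yet.
        q: queue.Queue = queue.Queue()
        self._registrations.append(q)
        return q

    def fire(self, data) -> None:
        for q in self._registrations:
            q.put(data)


def early_registration():
    src = UserEventSource()
    mailbox = src.register()  # register first (LabVIEW's current scheme)
    src.fire("hello")         # fired before the handler starts waiting
    return mailbox.get(timeout=1)  # still delivered


def late_registration():
    src = UserEventSource()
    src.fire("hello")         # fired while nobody is registered
    mailbox = src.register()  # registering only when the handler starts
    try:
        return mailbox.get(timeout=0.1)
    except queue.Empty:
        return None           # the event was missed
```

Re-registering on every loop iteration would reopen the same window between `fire` and `register` each time around.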

The view inside R&D seems to be moving toward, "If a user had found this during the LV 6.1 beta, we would definitely call this a bug and change LV to break the VI unless there was a case for every dynamic event. But it has been there for 9.5 years. So how the heck do we fix it now?" I don't know what, if anything, will happen with this CAR. It is getting a thorough debate. At the moment, it could be anything. Ideas I've heard include just updating the documentation, doing something special during Execution Highlighting to show that the event fired but nothing happened, mutating to have a default case, or mutating to add a no-op case for every dynamic event and *then* breaking any future VIs that violate the rule. We'll see what the future holds.

Given that this behavior is not likely to change, I do agree with having a default event case or generating an error if an event isn't explicitly handled.

-

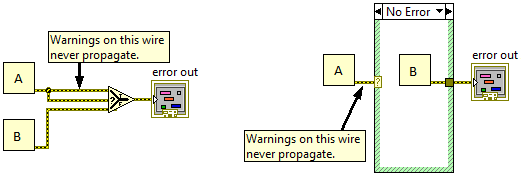

This thread is about generation of errors. Please do not tangent off into handling of errors, except insofar as generating one error to replace one from upstream is a form of handling. I'm asking this question partially as LV R&D, with an eye to the future, but more as a CLA user with an eye to writing code that is good style.

In the question about dropping warnings, this is the type of programming that I mean:

-

Step 1: Archive your entire application. If any of the following fixes the bug, I would still like the corrupt version archived if you can possibly share it with NI. I don't care how large your application is... if you can share it with us, we'll do the work of extracting and isolating.

Step 2: Load your corrupt VI, do ctrl+shift+click on the Run arrow. Then run your app again. Does the problem go away? If yes, proceed to Last Step.

Step 3: There's really only one way that this can happen -- the unbundle node is indexing the wrong memory address. So let's assume that node is actually corrupt and no amount of recompiling will fix it. Delete that unbundle node and drop a new one from the palettes. Run your app again. If that fixes the bug, proceed to Last Step.

Step 4: Still broken? In that case we have to look for something more exotic. Are you using class properties? They're brand new in LV 2010. I haven't heard of anything like this, but newest features are the most likely culprits, so move the accessors out of the property nodes, replace the property nodes with subVI nodes, and let's see if the problem goes away. If it does, proceed to Last Step.

Step 5: Proceed to Last Step, but despair of having a workaround because that's all that I can think of.

Last Step: If any of the previous actually fixed your bug, do File >> Save All and keep working. Please send the archive to NI if you possibly can.

-

I see FPGA as one of the more critical platforms to have OOP supported. I pushed really hard for it despite a lot of doubt from others. I understand that it isn't typically done, but as I see it, that's largely because in most languages, objects tend to be reference based and don't deploy to the FPGA, so FPGA programmers never get used to thinking about them.

It seems that using OOP on an FPGA would be counter-intuitive. The paradigm for programming FPGAs and their end use make it hard to understand why this would be done.

Class encapsulation provides protection for data. It allows a programmer to prove -- conclusively -- that a given value is definitely within a given range, or that two values always maintain a particular relationship. Most academics researching software engineering tell me that OOP improves debuggability and code maintainability as the code base increases in size, regardless of what the task of the software is. That to me means that the more important that verification and validation are to a platform, the more valuable OOP becomes. FPGA programming is often used for direct hardware control, often in some very high risk domains, situations where you want to be damn sure that the software is correct. OOP is one programming paradigm that helps assert correctness of code. You could pass raw numbers around through your program, but a little bit of class wrapping can help make sure that numbers representing Quantity X do not get routed to functions that are supposed to have numbers representing Quantity Y, and, more importantly, that only meaningful operations on X are ever performed.
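The "little bit of class wrapping" idea can be sketched in Python, purely as an illustration and not as FPGA code. `Volts`, `Amps`, and `scale_voltage` are hypothetical, and the ±10 V range is an arbitrary example invariant. The only way to build a `Volts` runs the range check, and the one meaningful operation re-validates its result. Python enforces this at run time; on a LabVIEW diagram, wiring the wrong class into a terminal breaks the wire at edit time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volts:
    """Hypothetical wrapper: construction enforces the +/-10 V invariant."""
    value: float

    def __post_init__(self):
        if not -10.0 <= self.value <= 10.0:
            raise ValueError(f"{self.value} V is outside the +/-10 V range")


@dataclass(frozen=True)
class Amps:
    """A different quantity that must never be routed into Volts-only code."""
    value: float


def scale_voltage(v: Volts, gain: float) -> Volts:
    """A meaningful operation on Volts; the result is re-validated."""
    if not isinstance(v, Volts):
        raise TypeError("scale_voltage expects Volts")
    return Volts(v.value * gain)
```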

Class inheritance provides better code reuse. It's true that dynamic dispatching at runtime cannot be done on the FPGA. The compile fails unless every dynamic dispatch node can be processed at compile time to figure out which subVI will actually be called. However, if you're writing many different FPGAs that each have a slight variation in some aspect of their data, it is a lot easier to change out the upstream class type and override one member VI than to "Save As" a copy of your entire VI hierarchy, change a cluster typedef, and rewrite a core function.

Also, most OOP code isn't parallelized to take advantage of the FPGA's parallel computing.

It can be. It just often isn't because so many folks think in references instead of in values. With LV's compiler, the classes are type-propagated through the entire VI hierarchy to statically determine every dynamic dispatch, then are reduced to clusters, and from there the rest of the FPGA compilation takes over, which allows parallel operations on multiple elements of the cluster. So OOP code written with LabVIEW classes often does get that parallelism kick. Even when it doesn't take full advantage of the parallelism, running on an FPGA is sometimes done for speed, and being able to deploy an OOP algorithm onto HW may be important to some folks.

-

Create Different Instances of a Class (posted in Object-Oriented Programming)

The use of OO in this case is irrelevant -- you may decide to use it or not. What is key is the use of reentrant VIs. They are a much MUCH better solution than template VIs. Use "Open VI Reference" on a VI that has been marked as Reentrant to display multiple copies of the function.
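As a loose Python analogy for the reentrant-VI suggestion: each reentrant clone gets its own data space, much as separate objects each carry their own state, while a non-reentrant VI has a single data space shared by every caller. `GraphDisplay` and `shared_plot` are hypothetical names, and the sketch says nothing about how Open VI Reference itself is called.

```python
from __future__ import annotations


class GraphDisplay:
    """Like one reentrant clone: every instance has its own data space."""

    def __init__(self, title: str):
        self.title = title
        self.points: list[float] = []

    def plot(self, y: float) -> None:
        self.points.append(y)


# Like a non-reentrant VI: one data space shared by every caller.
_shared_points: list[float] = []


def shared_plot(y: float) -> list[float]:
    _shared_points.append(y)
    return _shared_points
```

Two `GraphDisplay` instances never see each other's points, whereas every call to `shared_plot` accumulates into the same list.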