- Popular Post

-

Posts

3,183 -

Joined

-

Last visited

-

Days Won

204

Content Type

Profiles

Forums

Downloads

Gallery

Posts posted by Aristos Queue

-

-

Your post here prompted me to take another look... I'm now inclined to call this a bug.

I'm never sure how to read feedback nodes that are initialized... To my eyes, when the loop executes each iteration, that variant constant is going to generate something and send it to the initializer node. The fact that the initializer chooses to do nothing with it is its problem. It's one of the reasons that I don't like feedback nodes at all, especially inside loops. In LV2010, I know we got new optimizations to improve dead code elimination and loop unrolling, so I figured this was covered by those optimizations. But I tried a couple of other feedback nodes in a loop and didn't see the same slowdown, so maybe it is a bug that is variant specific.

-

Not quite fair to call it a bug in LV2009. We added new features to the compiler to do deeper optimizations in LV2010, which gave us better performance than we've had before. A bug would be something that got slower in a later version. This is just new research giving us new powers.6 hours later I've isolated a bug in LabVIEW 2009 SP1 regarding initialized feedback nodes of variant data .

.

-

No, it doesn't. And neither does the...The pre-11 Synchronous node forces an initial swap to the user interface thread on the receiver end to deliver input data as I understand it... -

Or, rather, the typedef is treated as a single element by the class. Since the class may or may not be in memory when the typedef is edited, the class can only know "this version of the typedef changed to this version" and it does the same "attempt to maintain value" that you get on a block diagram constant -- which sometimes means resetting to the default value.Mutation history is not maintained is this case.Except for enums, I pretty much avoid mixing typedefs into private data controls these days. It wasn't something I avoided when classes first entered LV, but these days, I find it easier to avoid them.

-

Allow me to introduce you to LabVIEW 2011. It... huh? Oh... I can't talk about that yet? Seventeen more days? Well, ok... but you're not going to keep a secret like the Asynch Call By Reference node quiet for ever. ... anyway, there's help coming.But what about the case of dynamically called code? An unnamed queue isn't going to work there.

I have. In the Actor Framework that I posted as part of my NI Week 2010 presentation. That's why we've made changes to the framework using LV 2011, where we have the Asynch *mmmffph mmmph oooph argh* No, really, I'll stop talking about it. I promise. Really. Just stop the beatings. I can wait 17 days...Personally I haven't run into situations in my code where the only solution is to use a named queue. -

The emphasis on architecture choice is more in the CLA, not the CLD. The CLD focuses on getting the app working and documenting what you did so clearly that someone else can pick up your app and figure out what you did. If two engineers reviewing the code missed the core of your app, I gotta say that does suggest some weakness in the documentation department. On the other hand, I've had days where I "just can't see the Matrix" and no amount of staring at the code makes it make sense. ;-)Yes watch out for submitted architectures that are more advanced than what is typically submitted.

For my CLD, I used a MVC architecture with a subpanel plugin Controller process with messaging to the 'HMI' View.

This seemed appropriate since the fictitious system described would in practice be designed with an embedded controller and HMI.

I initially failed and assumed, since 2 qualified engineers had reviewed my exam, that I had misjudged

the rigorousness of the exam. Despite the 'all grades are final' statement, I contacted NI and it turns out

that somehow they missed entirely the 'process' code that was in the solution project. I did finally pass, but

clearly understood that no credit was given for the more 'professional' i.e. robust and flexible approach.

That to me is misplaced emphasis. Obviously, if there was more time, anybody could add comments and

better vi descriptions, but understanding the best architecture to use is garnered from experience and skill.

-

It does change, regularly. But then we bring in a new crop of interns and new hires, and the process of untraining them from their C++/C#/JAVA faith and indoctrinating them with dataflow begins anew. Some never learn; others do. :-)But this isn't a point of view widely adopted I'd say? Not even inside NI it seems. That probably ought to change. -

Just write an empty array to clear the mutation history. But the version number is never allowed to be set backwards because even with the version number wiped out, there could still be data out there of the earlier version number, and that needs to return a "this data is too old to be inflated" error. Only "Save As >> Rename" sets the version number back to 1.0.0.0.Is something like this:

-

I strongly hope not, because the more it moves away from wires the less optimal and the more failure prone applications written in the language become.LabVIEW is evolving into a language more and more devoid of wires;

Your time table here is a bit messed up, but I understand your point. I don't think that bundling and dynamic dispatch belong in your list, however. There's a difference between representing the wire in an alternate form (bundle is several wires all routed together; dynamic dispatch is equivalent to a case structure around a number of subVI calls) and abandoning the dataflow syntax entirely (I'm looking at you, Shared Variable).First you bundled stuff together, then we magically wafted data off into the FP terminals of dynamically dispatched VIs, then we got events, and now we have all sorts of wire-less variables like Shared Variables.And, more important than all of that... my whole reason for asking for this thread to start in the first place... is that I think any drift away from wires is unnecessary, but it is seen by many as necessary because of the architectures we favor today. Those architectures are the problem that I'm wanting us to address.

Whether or not you can successfully use variables and DVRs is irrelevant to this conversation, IMHO, because I believe you can use wires equally as well or better if we structure them correctly and I know that the compilers, debuggers, and analyzers can definitely handle wires better, so it behooves us to prevent as much drift as possible.

-

1

1

-

-

Hmmm. If that is true. How is it reconciled with the events of front panel controls which surely (neck stretched far) must be in the UI thread. I could understand "User Events" being able to run in anything, but if bundled with a heap of front panel events; is it still true?

As I said before:

UI events are generated in the UI thread, obviously, but they're handled at the Event Structure. -

Neither have I. *pointed look at all of you*Maybe customers do exist who have well defined requirements and don't ask for changes partway through development. If so, I haven't encountered them.:-)

-

1

1

-

-

Yes. Each Event Structure has its own events queue, and it dequeues from that queue in the thread that it is executing that section of the VI. The default is "whatever execution thread happens to be available at the time", but the VI Properties can be set to pick a specific thread to execute the VI, and the Event Structure executes in that thread.Where do they run then? In the execution system of the vi properties?The "Generate User Events" node follows the same rules -- it runs in whatever thread is running that part of the VI. UI events are generated in the UI thread, obviously, but they're handled at the Event Structure.

A good prejudice to have, generally. But I have to ask... why wouldn't you use the same event structure to handle both? The Event Structure was certainly intended to mix dynamic user events and static UI events handling, and it works really well for keeping that behavior straight. And if the two event structures are handling completely disjoint sets of user events, that's one of the times when the prejudice can be relaxed without worry. Not that I've ever seen a reason to do this, but it would be ok.I think the thing that has put me off events is that it forces one to use an event structure as the only way to handle messages and then one might actually want an event structure somewhere else in the same VI and I have this prejudice against two event structures on the same diagram.. -

No, they don't.but I think they may also run the the UI thread

-

The question originally came up because it's hard to teach Notifiers to beginning LV programmers. It's a lot simpler to teach local variables, which do often work for stopping two loops on the same diagram, albeit with some difficulty around a button's mechanical action. Surely there must be a quick-to-program, easy-to-understand way to stop two parallel loops? It's a question I've asked myself for years, but only recently undertaken trying to identify why it is so frigging hard (compared with the expected level of difficulty) to see if a better pattern could be found.Isn't the two parallel loops exactly what notifiers were invented for ?

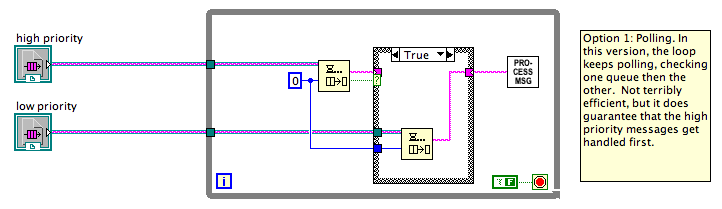

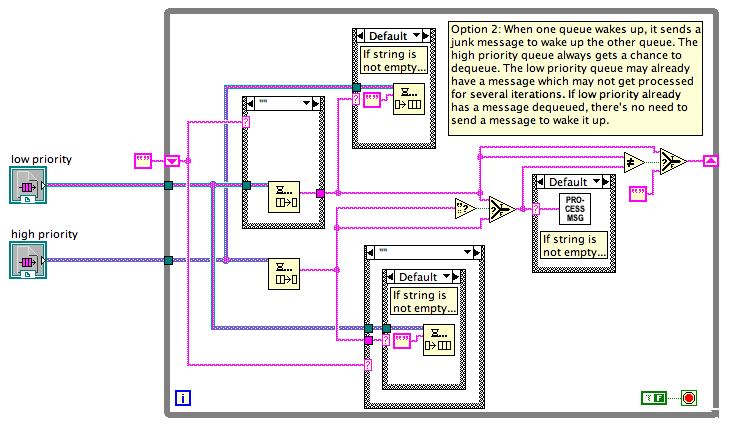

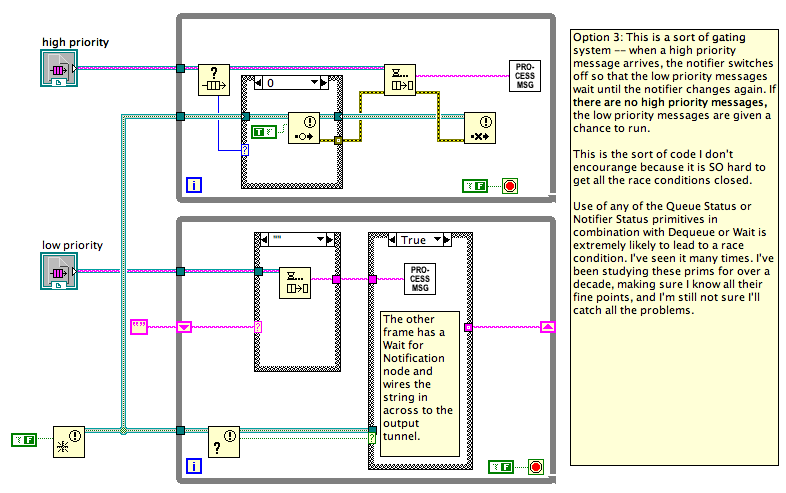

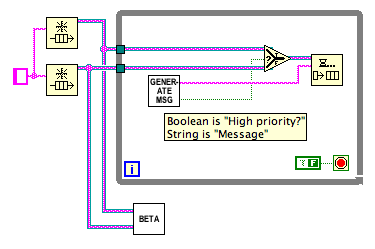

Ten points and a bowl of gruel to Shaun for hitting on the core point!And if a queue is already being used, it makes sense to try and incorporate an exit strategy using it.Here are three possible solutions to what Beta.vi could look like:

All solutions I've seen to this problem are either extremely messy or extremely inefficient (because they poll instead of sleep). In my experience, this sort of mess crops up whenever you try to mix multiple communications channels -- either you end up polling all of them, you end up having some complex "wake everyone up when one wakes up" scheme, or you have sentinel values to give time to each one (this last not being an option if all channels are equal priority). A good architecture rule appears to be "Between any two parallel loops Alpha and Beta, there should be one and only one communication channel from Alpha to Beta (there's a separate conversation to be had about whether communication from Beta to Alpha should use the same or a separate communications channel).

The rule "there should be only one communications channel from Alpha to Beta" implies that the right way to stop two loops is not a fixed answer. If you say, "Use notifiers to stop the loops", that would imply you should only ever use notifiers to communicate between loops, which is daft. Instead, the right way to stop two loops is to use whatever communication scheme you've already got between the two loops. If there isn't any then, yes, Notifiers are probably the simplest to set up and get right for all use cases (including the quite tricky "stop both loops and then restart the sender loop but only after you're sure that both loops stopped"). But if you have an existing queue/event/network stream/etc, then use that.

Using the same channel does not necessarily mean tainting your data with a sentinel value for stop. The pattern of "producer calls Release Queue and the consumer stops on error" is an example of using the channel.

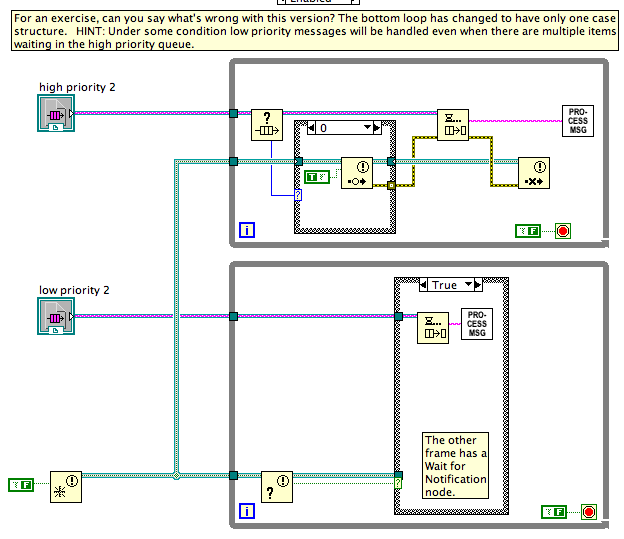

Complete and total tangent: Option 3, as I say in my comments, is the sort of code I generally discourage people from trying to write. Mixing the Status functions with the Wait functions (meaning Dequeue, Wait for Notifier, Event Structure, etc) is a perfect storm for race conditions and missed signals because the Status check and starting the Wait are not atomic operations. Here's a slight variant of Option 3, but this variant doesn't work... there are times when a low priority message will be handled even when there are multiple high priority messages waiting in the queue. This is my diagram, but it is based on some other code I've been shown that tried to do this, and it looked right to the author.

-

Use the example I provided a few years back for the Scrolling LED XControl. That gives you a pattern for forcing an update of an XControl based on timing.

-

1

1

-

-

Steen, thank you for posting this information.

I have held off replying in this thread... I'm still trying to take all of the content in and formulate a reply, but I can give some feedback at this point.

Your first post is a set of use cases. However, all three of them make assumptions about what the code needs to do, a bit too late in the design process from where I hope to intercept and redirect. Take the "stop parallel loops" for example. The way you've written the use case, there's an unspoken assumption that the code requires a special communications channel among the loops in order to communicate "stop". This particular problem is *exactly* the one that a group of us inside NI have spent over a year debating. We found a wide variety of techniques used in various applications to do this task. After a lot of analysis, where we were looking for ease of set up, correctness, inability of a stop signal to be accidentally missed, etc, we came to a conclusion.

I just typed that conclusion and then deleted it. I am unsure what the best way to approach this is, whether to bootstrap this conversation with some of the preliminary ideas of the small group inside NI or whether to let this LAVA post develop and see whether the results that develop here are the same as have developed in our small group. The problem is debating all of this over threads of LAVA is a LOT harder than doing it in person. I'm going to be presenting some portion of this content at NI Week this year -- I'm still working on the presentation, and at this point, I have no idea how much I'll pack into the limited time I've got.

Ok... let me try this and see how it goes.

Continuing to focus on "stop two parallel while loops." Suppose we have two loops, Alpha and Beta, both in the same app but not on the same block diagram. Let's ignore "stop" for a moment and focus on the normal communications between these two loops. Global VIs, functional global VIs, queues, notifiers, user events, local variables, TCP/IP, shared variables, etc... We've got a list of about 20 different communications technologies within LV that can all be used to do communications between two loops. Suppose for a moment that Alpha is going to send messages to Beta. Alpha was written to have two queues, one for high priority messages and one for low priority messages.

What does Beta.vi look like? Assume that it, like Alpha, is an infinite loop that does not error checking... what I want us to focus on for the moment is how it receives and processes the messages from Alpha. Assume that the goal is to call a subVI "Process Message.vi" for each message, but don't waste time processing messages in the low priority queue if there are messages in the high priority queue waiting. If it helps, feel free to assume that an empty string is never a valid message.

How efficient can you make Beta.vi? Does it sleep when there are no messages in either queue? How much wiring did it take to write Beta? Is it more or less wiring than you would expect to have to wire? How does the complexity of code scale if the architecture added a third queue for "middle priority", or even an array of queues where the first of the queue was highest priority for service and end of the queue was lowest? What are your overall thoughts on this communication scheme?

[LATER] I have now uploaded three different variations that work for Beta.vi in a later post. But before you skip ahead to that answer, I encourage you to try to write Beta.vi yourself. It's a really good LV exercise.

-

Nope, that's the only one.Other than not being able to load persisted objects from disk, are there any gotchas you know of that I should watch out for? -

Thanks for the tip. I discovered renaming the class doesn't clear the mutation history in 2009. There was still 46 or so entries in the list. However, I was able to use the Set Mutation History vi to clear the list and reduce the file size back down to where it should be.

Right... renaming still preserves a lot of the history because any child class that tries to unflatten its old version may need the mutation records of the parent as it was.

-

Ever get the sense that the scripting API is a hodge-podge of features that we built as we needed them for our own internal needs and then released to the public because users said having it was better than nothing? If you do, that's an accurate feeling. :-) If it's any consolation, we have a couple guys who have been spending the last couple years polishing on it, and they continue to work on it more.

-

A game is unfair when someone changes the rules.

Life is a game.

As a teenager, all the rules are changed by forces outside the player's control.

By that standard, being a teenager == unfair.

The biggest problem, of course, is that English allows you to stop after the word "unfair." We have transitive verbs which require a direct object. This is a situation that would benefit from some sort of "transitive adjective", where just speaking required you to fill in the blank "unfair between _____ and _____". Unfair, after all, requires an imbalance between two parties. There is no "unfair" in a game of solitare.

-

It's just the place where they manufacture the CDs/DVDs. No development or other manufacturing is done there to the best of my knowledge. There is a sales office there, but I doubt that's of interest to you.I've noticed in the past my LabVIEW software often comes from Ireland. It got me wondering how big the NI presence is there. If they're hiring developers.

-

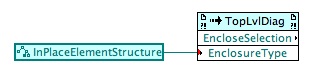

Thanks, but I'm missing some things, e.g. "In Place Element Structure" for one. Can you share where it's coming from?

Br, Mike

IPE may not be on that list. Doesn't look like anyone ever added scripting support for creating that node. If you want to script it, you may be able to work with having a template VI that has nothing on it except an IPE and use copy/paste to copy it to your target diagram.

I'll file the CAR to add IPE creation support.

As a workaround, use this:

-

There are VIs in vi.lib\utility\editlvlibs\lvclass to allow you to inspect the mutation history information.

-

1

1

-

-

Both Daklu and Black Pearl have some gist of it. But it isn't perhaps about eliminating one or the other. If I take Black Pearl's analogy and extend it, having a bathroom separate from a kitchen is healthy, and having one room that tried to support both activities would end up serving neither goal well. But notice that both the bathroom and the kitchen have lights. They both have running water. Air conditioning and heating ducts. Walls. Electrical sockets. Doors. Indeed, there's a whole set of "room" attributes that both things have. Bringing this analogy back to LabVIEW...

- We have a "conpane" for subVIs but we have "tunnels" for structures. Do we need two separate concepts? Maybe yes, maybe no.

- Both have a description and tip. Are there other settings that apply equally well to both? Could a structure node be meaningfully marked as password-protected? How about marking a structure as "UI Thread"? The execution settings page of VI Properties is the most intriguing point of congruence between VI and structure.

- What about a subVI that is marked as "inline" -- can we just show its contents directly? Could a user looking at the caller VI be able to edit the inlined subVI right there without having to switch windows? How bad would that be? How common are subVIs small enough for that to be even slightly useful?

- What if I had a subVI that took a single scalar input and produced a single scalar output... could I have "autoindexing" on the subVI and just wire an array to the input and get an array out?

- How much commonality is there between marking a subVI as "reentrant" and marking a for loop as "parallel"?

How far could we -- in theory -- go with unifying these two aspects of LabVIEW? These are two features that essentially developed in isolation from each other, and although I have no idea whether anything will come of it, it's been interesting to explore the design space around the commonalities. This is the part of my job they call "research". Just sitting around drawing pictures... :-)

Variant attribute list always sorted?

in LabVIEW General

Posted

The list should always be sorted. I have filed CAR 308287 to update the documentation accordingly.