Posts posted by dadreamer

-

-

3 hours ago, ShaunR said:

1.8 MB?

Looks like the Block Diagram Binary Heap (BDHb) resource took 1.21 MB and the rest is for the others. There are 120 Match Regular Expression XNodes on the diagram. If each XNode instance is approximately 10 KB, and they all get embedded into the VI, we get 10 * 120 = 1200 KB. The XNode's icon is copied many times as well (DSIM fork). So, the conclusion is that we shouldn't use XNodes for multiple parallel calls. The fewer, the better, right?

Ok. The load time seems to reduce with these tokens:

allowDeferredXNodeCodeGen=True

DisableLazyLoadXNode=False

ForceLazyLoadXNode=True

Still the editing is sluggish tho.

-

4. WinAPI version using ChooseColor function.

Far from ideal, don't kick too hard. 🙂 Determine Clicked Array Element Index is from here.

-

Well, I see no issues when running XNodes at run-time, when everything is generated and compiled. What I see is some noticeable lag at edit time. Say, I have 50 or even 100 instances of one or two XNodes in one VI, each set to its own parameters. When compiled, all is fine. But when I make some minor change (create a constant, for example), LabVIEW starts to regenerate code for all the XNodes in that VI. And it can take a minute or so! Even on a top-notch computer with NVMe SSD and loads of RAM. Anyone experienced this? I've never seen such behaviour when dealing with VIM's. Tried to reproduce this with a bunch of Match Regular Expression XNodes in a single VI. Not on such a large scale, but the issue remains. Moreover, the whole VI hierarchy opens super slowly, but this I had already noticed before, when dealing with third party XNodes.

-

I kind of liked this idea and wished VIM's could allow for such a backpropagation. Even had a thought of making an idea on the dark forums. But then I played a while with the Variant To Data node. It doesn't play well. It can't determine a sink, if a polymorphic VI is connected or even when a LV native (yellow) node is connected. Borders of structures are another issue, obviously. So, it'd require making two ideas at least: to implement VIM backpropagation and to enhance the Variant To Data node. (As a hack one could eliminate the Variant to Data in their code with coerceFromVariant=TRUE token, but then the diagram starts to look odd and no error handling is performed). If someone still wants the code, shown in the very first post, it's here: https://code.google.com/archive/p/party-licht-steuerung/source/default/source?page=3 (\trunk\PLS-Code\PLS Main.vi). And these are the papers to progress through the lessons: LabVIEW Intermediate I Successful Development Practices Course Manual. Nothing interesting there for an experienced LV'er though.

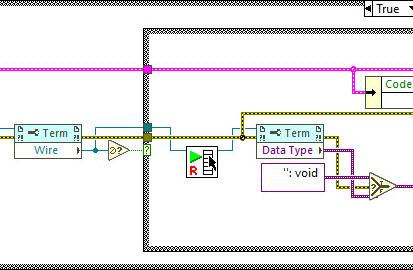

XNodes demonstrated here work way better, and could be a good alternative (if you're OK with unsupported features, of course). As I tried to adapt them for my own purposes, I decided to improve the sink search technique. It surprised me a bit that there's still no complete code to walk through all the nested structures to determine a source/sink by its wire. Maybe I didn't search well, but all I found was this popup plugin: Find Wire Source.llb. It stops on Case structures though. I have reversed its logic to search for a sink instead of a source and tried to apply recursion when it encounters a Case structure. Well, it's still not ideal, but now it works in most of my cases. There are some cases when it cannot find a sink, e.g. wire branches with void terms:

Too many scenarios to process them all. Nevertheless, this little VI might be useful for someone. You may use it as a popup plugin, of course, or may pull out that Execute Find Wire Destination (R).vi and use it in your XNodes. As an example:

Already tried such nodes in a work project. I must admit that not all the time back-propagation is suitable, so about 50/50. But when it's used, it works.

-

In addition to the LV native method, there are options with .NET and command prompt: Get Recently Modified Files.

-

I remember I even had an idea, that would make it easier to track such situations: Add Array Size(s) Indicator. In design time it would cost almost nothing. Although I admit, its use cases are quite rare.

-

Started playing with XNodes a bit and noticed the same behaviour as well. Really upsetting. But there is the solution. Just send FailTransaction reply in a Cancel case in the OnDoubleClick ability of your XNode and that 'dirty dot' never appears! That's exactly what the Timed Loop XNode does internally.

Quote:

FailTransaction: LabVIEW will abort the current transaction. This will avoid putting transactions in the undo list when the user just hit cancel.

Looking at this description I get the impression that this reply was invented precisely to overcome that bug (was even given its own CAR #571353).

Similar thread for cross-reference: LabVIEW Bug Report: Error Ring Edit + Cancel modifies the owning VI

-

On 8/30/2024 at 7:44 PM, Rolf Kalbermatter said:

This function exists in LabVIEW since about at least 5.0 or thereabout and likely has never been looked at again since, other than use it.

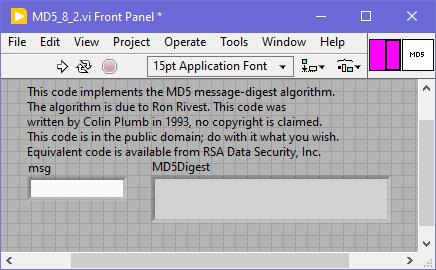

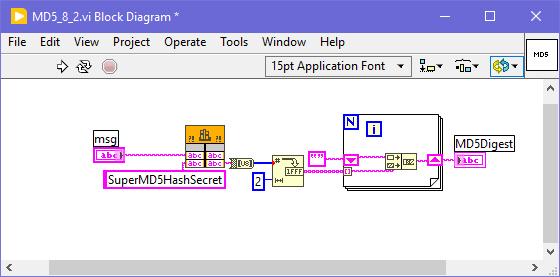

For an odd reason I don't see such a function in LabVIEW 5.x, 6.x and 7.x. It appears only in 8.2, maybe it's available since 8.0 too, can't check right now. Well, I ran a quick binary search for "GetMD5Digest" word and found, that such a function was being used in ...\LabVIEW 8.2\vi.lib\imath\engines\lvmath\Utility\MD5.vi. That VI was password-protected, the password was calvinthegorilla (😁) and, of course, was removed like 1-2-3. Here's the VI to play:

As shown, the prototype is still correct, just some extra "secret" / key was applied. Works well in LV 2023 Q3 64-bit.

-

The Create NI GUID.vi is using App.CreateGUID. On Windows App.CreateGUID private method calls CoCreateGuid function, which could be easily called directly from BD, as shown in that cross-posted link.

-

The task of intercepting the WM_DDE_EXECUTE message has been around for a while here and there. Besides that private OS Open Document event, there are two more ways to get this done.

1. Using Windows Message Queue Library

2. Using DDEML of WinAPI and self-written callback library

More details can be found in this thread. I now think that it could even be simplified a bit, if one could try to hack that internal LinkDdeCallback function and reuse it like LabVIEW does. But I feel too lazy to check it now.

-

4 hours ago, mcduff said:

Does this token need to be added to the INI file of a compiled exe or does it automatically get included?

It's just a cosmetic token to get "Run At Any Loop" option visible in the IDE mode. After the flag is set, it's sticky to VIs, on which it was activated. No need to add the token to the EXE's settings.

-

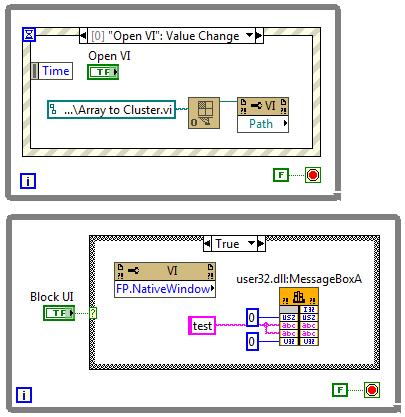

2 hours ago, Rolf Kalbermatter said:

The Open VI Reference will block on UI Rootloop even in the first (top) one.

There's an obscure "Run At Any Loop" option, activated with this ini token:

showRunAtAnyLoopMenuItem=True

First mentioned by @Sparkette in this thread:

I've just tested it with this quick-n-dirty sample, it works.

Also some (all?) property and invoke nodes get the "Run At Any Loop" option when you right-click them. But from what I remember, not all of them really support bypassing the UI thread, so it needs to be tested by trial and error before using it anywhere.

-

1 hour ago, eberaud said:

Only 17 years later 😅 ... Has the situation changed?

Is this what you are looking for?

Front Panel Window:Alignment Grid Size

VI class/Front Panel Window.Alignment Grid Size property

LabVIEW Idea Exchange: Programmatic Control of Grid Alignment Properties (Available in LabVIEW 2019 and later)

-

On 11/19/2023 at 9:24 AM, Robert Maskell said:

LabWindows 1 and 2

I have seen LabWindows 2.1 on old-dos website. Don't know if it's of any interest for you tho'. As to BridgeVIEW's, I still didn't find anything, neither the scene release nor the customer distro. Seems to be very rare.

On 11/19/2023 at 9:17 AM, Robert Maskell said:

I have all the old LabVIEW 1 floppies and the original LV1 demo disk set.

I'm sure some collectors out there would appreciate it if you archived them somewhere on the Wayback Machine. 🙂

-

52 minutes ago, viSci said:

Does lvserial actually support regex termination strings? How is that accomplished?

It's utilizing the PCRE library, which is incorporated into the code. It's the first incarnation of PCRE (8.x, not PCRE2): 8.35 for the 32-bit lvserial.dll and 8.45 for the 64-bit one. When configuring the serial port, you can choose between four variants of the termination:

    /* CommTermination2
     *
     * Configures the termiation characters for the serial port.
     *
     * parameter
     *   hComm              serial port handle
     *   lTerminationMode   specifies the termination mode, this can be one of the
     *                      following value:
     *                        COMM_TERM_NONE    no termination
     *                        COMM_TERM_CHAR    one or more termination characters
     *                        COMM_TERM_STRING  a single termination string
     *                        COMM_TERM_REGEX   a regular expression
     *   pcTermination      buffer containing the termination characters or string
     *   lNumberOfTermChar  number of characters in pcTermination
     *
     * return
     *   error code
     */

Now when you read the data from the port (lvCommRead -> CommRead function), it works this way:

    //if any of the termination modes are enabled, we should take care
    //of that. Otherwise, we can issue a single read operation (see below)
    if (pComm->lTeminationMode != COMM_TERM_NONE)
    {
        //Read one character after each other and test for termination.
        //So for each of these read operation we have to recalculate the
        //remaining total timeout.
        Finish = clock()
            + pComm->ulReadTotalTimeoutMultiplier*ulBytesToRead
            + pComm->ulReadTotalTimeoutConstant;

        //nothing received: initialize fTermReceived flag to false
        fTermReceived = FALSE;

        //read one byte after each other and test the termination
        //condition. This continues until the termination condition
        //matches, the maximum number bytes are received or an if
        //error occurred.
        do
        {
            //only for this iteration: no bytes received.
            ulBytesRead = 0;

            //calculate the remaining time out
            ulRemainingTime = Finish - clock();

            //read one byte from the serial port
            lFnkRes = __CommRead(
                pComm,
                pcBuffer+ulTotalBytesRead,
                1,
                &ulBytesRead,
                osReader,
                ulRemainingTime);

            //if we received a byte, we shold update the total number of
            //received bytes and test the termination condition.
            if (ulBytesRead > 0)
            {
                //update the total number of received bytes
                ulTotalBytesRead += ulBytesRead;

                //test the termination condition
                switch (pComm->lTeminationMode)
                {
                    case COMM_TERM_CHAR: //one or more termination characters
                        //search the received character in the buffer of the
                        //termination characters.
                        fTermReceived = memchr(
                            pComm->pcTermination,
                            *(pcBuffer+ulTotalBytesRead-1),
                            pComm->lNumberOfTermChar) != NULL;
                        break;

                    case COMM_TERM_STRING: //termination string
                        //there must be at least the number of bytes of
                        //the termination string.
                        if (ulTotalBytesRead >= (unsigned long)pComm->lNumberOfTermChar)
                        {
                            //we only test the last bytes of the receive buffer
                            fTermReceived = memcmp(
                                pcBuffer + ulTotalBytesRead - pComm->lNumberOfTermChar,
                                pComm->pcTermination,
                                pComm->lNumberOfTermChar) == 0;
                        }
                        break;

                    case COMM_TERM_REGEX: //regular expression
                        //execute the precompiled regular expression
                        fTermReceived = pcre_exec(
                            pComm->RegEx,
                            pComm->RegExExtra,
                            pcBuffer,
                            ulTotalBytesRead,
                            0,
                            PCRE_NOTEMPTY,
                            aiOffsets,
                            3) >= 0;
                        break;

                    default:
                        //huh ... unknown termination mode
                        _ASSERT(0);
                        fTermReceived = 1;
                }
            }

            //Repeat this until
            // - an error occurred or
            // - the termination condition is true or
            // - we timed out
        } while (!lFnkRes && !fTermReceived &&
                 ulTotalBytesRead < ulBytesToRead &&
                 Finish > clock());

        //adjust the result code according to the result of
        //the read operation.
        if (lFnkRes == COMM_SUCCESS)
        {
            if (!fTermReceived)
            {
                //termination condition not matched, so we test, if the max
                //number of bytes are received.
                if (ulTotalBytesRead == ulBytesToRead)
                    lFnkRes = COMM_WARN_NYBTES;
                else
                    lFnkRes = COMM_ERR_TERMCHAR;
            }
            else
                //termination condition matched
                lFnkRes = COMM_WARN_TERMCHAR;
        }
    }
    else
    {
        //The termination is not activated. So we can read all
        //requested bytes in a single step.
        lFnkRes = __CommRead(
            pComm,
            pcBuffer,
            ulBytesToRead,
            &ulTotalBytesRead,
            osReader,
            pComm->ulReadTotalTimeoutMultiplier*ulBytesToRead
                + pComm->ulReadTotalTimeoutConstant);
    }

1 hour ago, viSci said:

Is that capability built into an interrupt service routine or is it just simulated in a polling loop under the hood?

As shown in the code above, when the termination is activated, the library reads data one byte at a time in a do ... while loop and tests it against the term char / string / regular expression on every iteration. I can't say how good that PCRE engine is as I never really used it.
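To make the polling idea easier to see outside the library, here is a stripped-down sketch of the termination-string case only. It is not lvserial code: `read_until_term` is a made-up helper, and the "serial port" is just a source buffer consumed one byte per iteration, with no timeouts or error codes.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper (not part of lvserial): copies bytes from src to dst
 * one at a time, as if each were a separate one-byte port read, and stops
 * as soon as the last term_len bytes received equal the termination string.
 * Returns the number of bytes received; *term_received reports whether the
 * terminator was seen. */
size_t read_until_term(const char *src, size_t src_len,
                       char *dst, size_t dst_cap,
                       const char *term, size_t term_len,
                       int *term_received)
{
    size_t total = 0;

    assert(src != NULL && dst != NULL && term != NULL && term_received != NULL);
    *term_received = 0;

    while (total < src_len && total < dst_cap && !*term_received) {
        /* "read" exactly one byte */
        dst[total] = src[total];
        total++;

        /* only the tail of the receive buffer is compared */
        if (term_len > 0 && total >= term_len) {
            *term_received =
                memcmp(dst + total - term_len, term, term_len) == 0;
        }
    }
    return total;
}
```

Feeding it `"AB\r\nCD"` with the terminator `"\r\n"` stops after four bytes, leaving `CD` unread, which is the same behaviour the do ... while loop gives for COMM_TERM_STRING.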

-

7 hours ago, mooner said:

The pointer to the image in memory and the width of the image as well as the height are known

And what's the image data type (U8, U16, RGB U32, ...)? You need to know this as well to calculate the buffer size to receive the image into. Now, I assume, you first call the CaptureScreenshot function and get the image pointer, width and height. Second, you allocate the array of proper size and call MoveBlock function - take a look at Dereferencing Pointers from C/C++ DLLs in LabVIEW ("Special Case: Dereferencing Arrays" section). If everything is done right, your array will have data and you can do further processing.
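For what it's worth, the size calculation and the copy can be sketched in plain C like this. The function names are made up for illustration, and the sketch assumes tightly packed rows (no stride or padding), which the DLL in question may or may not guarantee. In LabVIEW the `memcpy` step corresponds to the MoveBlock call.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: bytes needed for a width x height image with the
 * given bytes per pixel (1 for U8, 2 for U16, 4 for RGB U32).
 * Returns 0 for empty dimensions or if the product would overflow. */
size_t image_buffer_size(size_t width, size_t height, size_t bytes_per_pixel)
{
    if (width == 0 || height == 0 || bytes_per_pixel == 0)
        return 0;
    if (width > SIZE_MAX / height)
        return 0;
    if (width * height > SIZE_MAX / bytes_per_pixel)
        return 0;
    return width * height * bytes_per_pixel;
}

/* Hypothetical helper: allocates a buffer of the proper size and copies the
 * pixels out of the pointer returned by the DLL. The caller frees the result.
 * Assumes the rows are tightly packed. */
uint8_t *copy_image(const void *src, size_t width, size_t height,
                    size_t bytes_per_pixel)
{
    size_t n = image_buffer_size(width, height, bytes_per_pixel);
    uint8_t *dst;

    assert(src != NULL);
    if (n == 0)
        return NULL;

    dst = malloc(n);
    if (dst != NULL)
        memcpy(dst, src, n);
    return dst;
}
```

So a 640 x 480 RGB U32 screenshot needs 640 * 480 * 4 = 1228800 bytes, while the same picture as U8 needs only 307200.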

-

7 hours ago, Jeffrey Oon said:

There's nothing special about these two VIs. They are just helpers for the higher level examples. You don't need to run them directly. In real life projects you won't need those VIs at all.

7 hours ago, Jeffrey Oon said:

For your information, i am a Labview beginner user so not so familiar with LABVIEW program.

What about taking some image processing and machine vision courses?

-

2 hours ago, Jeffrey Oon said:

Fusebox Inspection Example.llb

The whole examples folder from VDM for LabVIEW 2010.

2 hours ago, Jeffrey Oon said:

Another example is "Tile identification"

Couldn't find any VI with the same or similar name.

-

- Popular Post

14 hours ago, coco822 said:

If anyone has any idea how to detect the "Mouse Move" event when the mouse is pressed

As a workaround, what about using the .NET control's own events?

Mouse Event over .NET Controls.vi MouseDown CB.vi MouseMove CB.vi

-

Technically related question: Insert bytes into middle of a file (in windows filesystem) without reading entire file (using File Allocation Table)? (Or closer, but not that informative). The gist: theoretically possible, but so low-level and hacky that it's easy to mess something up and render the whole system inoperable. If this doesn't stop you, then you may try contacting Joakim Schicht, as he has made a bunch of NTFS tools incl. PowerMft for low level modifications and maybe he will give you some tips about how to proceed (or give it up and switch to traditional ways/workarounds).

-

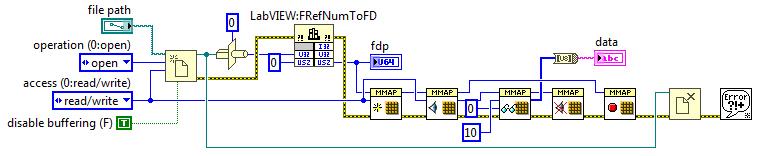

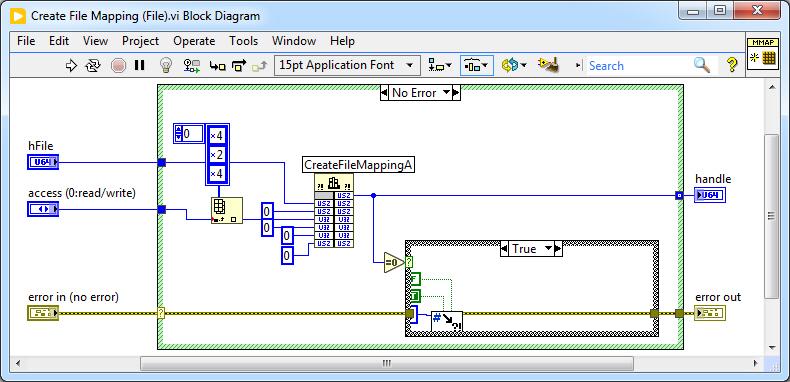

7 minutes ago, ShaunR said:

You can use it to copy chunks like mcduff suggested

That's what I was thinking of, except that with Memory-Mapped Files it should be much more efficient than with normal file operations. No need to load the entire file into RAM. I have a machine with 8 GB of RAM and 8 GB files are mmap'ed just fine. So, the general sequence is: Open a file (with CreateFileA or as shown above) -> Map it into memory -> Move the data in chunks with Read-Write operations -> Unmap the file -> SetFilePointer(Ex) -> SetEndOfFile -> Close the file.
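The "move the data in chunks" step could look roughly like the sketch below. It assumes the goal is to cut a byte range out of the middle (inserting would mean growing the file first and moving the tail from the end backwards). `close_gap` is a made-up name, and `view` stands for whatever pointer the mapping gives you. No WinAPI calls are shown here.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: removes gap_len bytes starting at gap_start from a
 * buffer of len bytes (e.g. a mapped view of the file) by moving the tail
 * down in pieces of at most `chunk` bytes.
 * Returns the new logical length, i.e. the offset to set as end of file. */
size_t close_gap(unsigned char *view, size_t len,
                 size_t gap_start, size_t gap_len, size_t chunk)
{
    size_t rd, wr;

    assert(view != NULL && chunk > 0);
    assert(gap_start <= len && gap_len <= len - gap_start);

    wr = gap_start;            /* where the next piece goes */
    rd = gap_start + gap_len;  /* where the next piece comes from */

    while (rd < len) {
        size_t n = (len - rd < chunk) ? (len - rd) : chunk;

        /* source and destination may overlap, so memmove, not memcpy */
        memmove(view + wr, view + rd, n);
        rd += n;
        wr += n;
    }
    return wr;
}
```

The returned length is what would go to SetFilePointer(Ex) before SetEndOfFile, once the file is unmapped.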

-

28 minutes ago, ShaunR said:

There is a limit to how much you can map into memory.

Not an issue for "100kB" views, I think. The files themselves may be quite big: a 7.40 GB one opened fine (just checked).

-

I would suggest Memory-Mapped Files, but I'm a bit unsure whether ready-made tools exist for such a task. There's @Rolf Kalbermatter's adaptation: https://forums.ni.com/t5/LabVIEW/Problem-Creating-File-Mapping-Object-in-Memory-Mapped-FIles/m-p/3753032#M1056761 But it seems to need some tweaks to work with common files instead of file mapping objects. Not that hard to do though.

A quick-n-dirty sample (reading 10 bytes only).

Yes, I know I should use CreateFileA instead of Open/Create/Replace VI + FRefNumToFD, just was lazy and short on time.

-

Not sure why this is still unanswered, but there is a bunch of similar threads, when googling something like string color array site:ni.com. The main verdict is "not possible, use Table/Listbox, Luke" (or any other suitable workaround of your choice).

XNode Execution Settings

in Development Environment (IDE)


About a minute for a change, that's what I've seen on a production machine. On my home computer it's as on yours though: a single edit takes 1-2 seconds. I'm still investigating it. I will try different options such as enabling those ini keys or disabling the auto-save feature. To me it looks like the whole recompilation is triggered sometimes. By the way, there are more keys to fine-tune XNodes:

I haven't yet figured out the details.