Posts by hooovahh

-

For personal use at home I use Acronis. I've only ever used it for whole-OS backups. It supports incremental backups and has a pretty decent scheduler, so my home computers all back up to a network location once a month. An incremental backup is much smaller because it only tracks the differences from one backup to the next. After 5 incremental backups it performs a full backup, and after a set number of backups it deletes the old ones. There was a 3-pack of the software that went on sale years ago with a mail-in rebate, basically making it free. It has a CD image you can boot to restore an image from outside the OS, or in most cases you can select the image to restore from within Windows; it then reboots and restores it. Again, this is more useful for OS backups and probably not what you want, but it likely does work with just a set of folders.

-

Not that I know of. You can email certification@ni.com for questions. They are pretty responsive.

-

Thanks for the video, I've definitely never seen that. I'd suspect it is an interaction with Parallels, like LabVIEW losing track of what is under the cursor as you transition between systems. No idea.

-

I don't follow you and may need a video to understand. Are you saying you can't drag an item from the palette onto the block diagram? I've never seen what it sounds like you are describing.

-

Just anecdotally, I'd say my 2020 RT is more stable than 2017 and 2018. I do use a couple of RT utility VIs, but I have separate projects for the Windows-only and the RT-only portions. In the past, opening things in the other target's context caused problems and long recompile times. With two separate projects, there is no code under the other target.

-

Sorry about the snippet thing; LAVA sometimes strips out the metadata for it. In any case, Crossrulz is right: there is a function for this already.

-

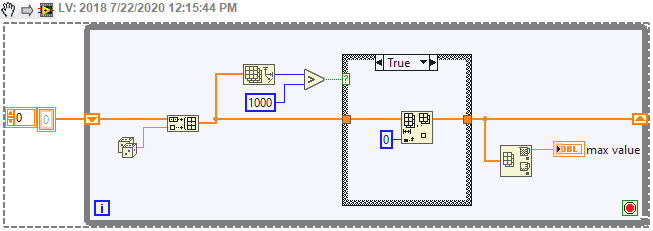

Your Fig 1 still has a couple of issues. First, the memory issue: you build the array, then put the result into a shift register, so it keeps growing. You need to perform the Array Subset first, and then put that into the shift register. Because adding elements at the start of an array was less efficient than adding them at the end (I'm not sure whether LabVIEW has improved this), I often put elements at the end, then perform an Array Size, and if it is greater than some value, I use Delete From Array with the index to delete set to 0. That's just a pointer that probably doesn't matter.

The other issue with Figure 1 is that you start with 1000 elements, then add elements one at a time. I suspect you either want to start with an empty array, or use Replace Array Subset, but then things like pointers and shifting might become an issue. If you are just trying to keep it simple, I'd suggest this. Also, if you preallocate the array with a bunch of data, Array Max & Min is going to include that preallocated data too, which probably isn't what you want. If you go with a preallocated method you can still use Array Max & Min, but you will have to keep track of how much of the array holds real data, and then do an Array Subset before the Max & Min. This seems easier and honestly isn't that bad.
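Since LabVIEW code can't be pasted as text, here is a rough Python sketch of the "append at the end, trim from the front" pattern described above, assuming a plain list stands in for the array in the shift register. The names `push_sample` and `window_min_max` are hypothetical; the point is that the buffer starts empty and Min/Max only ever sees real samples.

```python
from typing import List, Tuple


def push_sample(buffer: List[float], sample: float, max_len: int) -> None:
    """Append a sample at the end, then drop the oldest one if the buffer is too long.

    Roughly: Build Array (append), Array Size, then Delete From Array at index 0.
    """
    buffer.append(sample)
    if len(buffer) > max_len:
        del buffer[0]  # remove the oldest sample so the size stays bounded


def window_min_max(buffer: List[float]) -> Tuple[float, float]:
    """Min and max over only the real samples collected so far (no preallocated filler)."""
    return min(buffer), max(buffer)


if __name__ == "__main__":
    buf: List[float] = []  # start empty instead of with 1000 preallocated elements
    for s in [5.0, 1.0, 7.0, 3.0, 9.0]:
        push_sample(buf, s, max_len=3)
    print(buf, window_min_max(buf))
```

In Python specifically, `collections.deque(maxlen=N)` does this trimming for you; the explicit version is closer to what the LabVIEW diagram does.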

Also, you should be posting VI Snippets. These let you post pictures of your code as PNGs that can then be dragged onto the block diagram, so others can get your code more easily.

-

I was also a bit frustrated by this at times, so attached is a demo of some panel movement code. It has a few main functions.

The first: sometimes I'd have a dialog I wanted to pop up, but I wanted it centered on whichever monitor the mouse was on. My thought was that this was the monitor the user was using, and they had probably just clicked something, so put it there.

I also had times when I would pop up a dialog under the mouse, so I wanted the panel to be centered on the mouse as well as it can be while staying on the same monitor. This doesn't always work well with taskbars that can be hidden, or whose size and position aren't consistent, but it mostly works.
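The geometry behind these first two functions can be sketched in Python, assuming each monitor is just a rectangle in virtual-desktop pixels. In LabVIEW those bounds would come from an application property; here they are plain values, the function names are hypothetical, and taskbars are ignored entirely (which is exactly where the real thing gets messy).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rect:
    left: int
    top: int
    right: int   # exclusive
    bottom: int  # exclusive

    @property
    def width(self) -> int:
        return self.right - self.left

    @property
    def height(self) -> int:
        return self.bottom - self.top

    def contains(self, x: int, y: int) -> bool:
        return self.left <= x < self.right and self.top <= y < self.bottom


def monitor_under_mouse(monitors: List[Rect], mouse: Tuple[int, int]) -> Rect:
    """Return the monitor containing the mouse, falling back to the first one."""
    for m in monitors:
        if m.contains(*mouse):
            return m
    return monitors[0]


def center_on_monitor(monitor: Rect, width: int, height: int) -> Tuple[int, int]:
    """Top-left corner that centers a width x height panel on the monitor."""
    return (monitor.left + (monitor.width - width) // 2,
            monitor.top + (monitor.height - height) // 2)


def center_on_mouse(monitor: Rect, mouse: Tuple[int, int],
                    width: int, height: int) -> Tuple[int, int]:
    """Center the panel on the mouse, then clamp it so it stays on the same monitor."""
    x = mouse[0] - width // 2
    y = mouse[1] - height // 2
    # Keep the right/bottom edges on screen first, then the left/top edges,
    # so a panel larger than the monitor ends up pinned to the top-left.
    x = max(monitor.left, min(x, monitor.right - width))
    y = max(monitor.top, min(y, monitor.bottom - height))
    return x, y
```

For example, with two 1920x1080 monitors side by side and the mouse near the top-left of the second one, `center_on_mouse` pushes a 400x300 panel right and down until it is fully on that monitor.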

And lastly, I sometimes needed to maximize a panel on a specific monitor. We typically have 2 or 3 monitors side by side in the system, so this VI can maximize a panel on the "Primary", "Left", "Right", or "Second from Left" monitor, among a few others.
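Picking a monitor by name mostly comes down to sorting the monitors by their left edge. A hedged Python sketch, assuming the primary monitor is the one whose top-left corner is (0, 0) (the Windows convention); `pick_monitor` and the tuple layout are hypothetical, and "maximizing" here just means giving the panel the returned bounds.

```python
from typing import List, Tuple

# (left, top, right, bottom) in virtual-desktop pixels.
Bounds = Tuple[int, int, int, int]


def pick_monitor(monitors: List[Bounds], which: str) -> Bounds:
    """Return the bounds of the named monitor.

    Assumption: the primary monitor is the one whose top-left corner is (0, 0).
    """
    if not monitors:
        raise ValueError("no monitors")
    by_x = sorted(monitors, key=lambda b: b[0])  # left-to-right by left edge
    if which == "Primary":
        return next((b for b in monitors if b[0] == 0 and b[1] == 0), by_x[0])
    if which == "Left":
        return by_x[0]
    if which == "Right":
        return by_x[-1]
    if which == "Second from Left":
        return by_x[min(1, len(by_x) - 1)]  # fall back to the only monitor
    raise ValueError(f"unknown monitor name: {which!r}")
```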

As you noticed this won't fix LabVIEW native dialogs, and any solution I can think of for those will be a bit hacky.

-

The answer, like most things, is that it depends. Are the values from one loop iteration needed in the next for some comparison check? For example, are you going to check whether a value already exists in the array from a previous iteration? If not, I would suggest wiring the value to the loop's output and changing the tunnel to indexing. This has the added benefit of the conditional terminal option, which lets you choose which elements are passed out.

Another method that might be suggested is to preallocate the array with the Initialize Array function, then use Replace Array Subset inside the loop.
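The preallocate-then-replace idea can be sketched in Python, assuming a fixed-size list stands in for the output of Initialize Array. The function name is hypothetical; the important parts are the separate count of filled slots and the final subset, so later Min/Max doesn't see the preallocated zeros.

```python
from typing import List


def fill_preallocated(samples: List[float], capacity: int) -> List[float]:
    # Initialize Array equivalent: one fixed-size allocation before the loop.
    buffer = [0.0] * capacity
    count = 0  # how many slots hold real data
    for s in samples:
        if count >= capacity:
            break  # buffer is full; a real program would decide what to do here
        buffer[count] = s  # Replace Array Subset equivalent: no resizing
        count += 1
    # Array Subset equivalent: return only the part holding real data.
    return buffer[:count]
```

Python lists don't manage memory exactly like LabVIEW arrays, so treat this as a picture of the bookkeeping rather than a performance model.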

Building an array in a loop like this is discouraged because beginners often leave the array's size unchecked. For simple tests that don't run long this might be just fine: not optimal, but it will work. The larger problem is when this technique is used in a program that will run non-stop for months or years. Memory will grow forever, and it will eventually crash.

There is a performance hit from having to resize the array on every iteration, which in this example will be pretty small. If you are okay with that, and you have some way to limit the array size, then I'd be fine with this type of code. That might be something like checking the array size each iteration and, if it is greater than some value, taking an Array Subset. This can act as a type of circular buffer.

-

I don't fully understand the problem. I see that you press one button at a time, and that appends to the string, which is limited to 4 characters. That all seems to work fine. But what is the loop trying to do with the hard-coded pins? Are you trying to see if the pin matches any of the hard-coded ones? If so, you can just use the Equal function with the scalar string and the 1D array constant. This returns an array of Booleans saying whether each element is equal, and Or Array Elements can tell you if any match.
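In text form, the Equal-then-Or Array Elements approach is just an element-wise comparison followed by an "any". A small Python sketch (the function name is hypothetical):

```python
from typing import List


def pin_matches(entered: str, valid_pins: List[str]) -> bool:
    # Equal with a scalar and an array compares element-wise, giving a Boolean array...
    matches = [entered == pin for pin in valid_pins]
    # ...and Or Array Elements reduces it to True if any element is True.
    return any(matches)
```

No loop over the pins is needed on the diagram; the polymorphic Equal does the iteration for you.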

-

Also seeing this in 2020. I just thought it was me, and my remote environment.

-

That's a mighty fine VM you've got there. Almost like having a VM of this Linux RT target is a super useful tool that helps troubleshoot and debug features of the embedded UI that are at times "inconsistent", as you put it. If anyone else finds this useful, go vote on the idea and/or contribute to the conversation.

-

I'd like to see some probes just to make sure those are the actual values going in, since what's going in might not be what you think. Assuming that is fine, I'd check whether it works on a new file rather than one that already exists and has a different data type for that group/channel pair. Also, the reference is obviously valid, but was it opened as "Open Read-Only"? If this is a subVI, I'd unit test it by writing a quick test that creates a file and writes to the channel. Nothing is obviously wrong with the code you have shown, which makes me think the issue is somewhere else. Oh, and is your timestamp array empty? Or is an error being generated by that waveform function? I think the TDMS function should just pass the error through, but I'd probe it to make sure.

-

Did you log in with your NI account after the install?

-

Well, for me it would be a bit more complicated, since I have Pre/Post Install and Uninstall VIs, and some of those VIs depend on VIs in some of the packages. So install order matters: dependencies would need to be checked so the order is right, and the Pre/Post Install VIs need to run in the right order with the right inputs (Package Name, Install Files, etc.) put into their variant input. It's still possible, but it adds some complications, which is why to date I still just install VIPM and let it handle it.
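The ordering problem described above is a topological sort over the dependency graph. Here is a minimal Python sketch using the standard library's `graphlib.TopologicalSorter` (Python 3.9+), assuming the dependencies are already known as a dict. The package names and the pre/post-install steps are hypothetical placeholders; a real installer would run the package's VIs with the right inputs instead of appending log strings.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def install_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Order packages so each one comes after everything it depends on.

    deps maps a package name to the names it depends on.
    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


def install_all(deps: Dict[str, Set[str]]) -> List[str]:
    """Simulate running hypothetical pre-install, copy, and post-install steps per package."""
    log: List[str] = []
    for pkg in install_order(deps):
        log.append(f"pre-install {pkg}")
        log.append(f"copy files {pkg}")
        log.append(f"post-install {pkg}")
    return log
```

Because each package's post-install step finishes before any dependent package starts, a hook VI that relies on code from an earlier package will find it already installed.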

-

I believe this post by AQ is the one you are referring to.

-

Yeah, sorry for the confusion. I don't have an official release process in place for this community stuff, and I really should. I did make a newer version of this for the TDMS package and just included it there, because I wanted to make consuming my code as easy as possible for other developers. But with the efforts of VIPM and GCentral, I need to evaluate the best way to share, and hold myself to that process to avoid confusion in the future.

-

I think we're at the point where, to better help you, you need to post the actual VIs. I suspect lots of coding styles indicative of beginner LabVIEW programmers, and I can't make blanket statements like "Don't do this" without seeing more of what you are trying to do.

For instance, my initial reaction is that timed loops shouldn't be used except on RT or FPGA platforms. I also see lots of Booleans whose value changes I would suggest capturing with an event structure. Putting them all into a single cluster and capturing that cluster's value change might be even better.

Other suggestions are to use a state machine or a queued message handler. These usually carry a cluster of data between cases, which can be thought of as something akin to variables.
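To show the shape of a queued message handler in text, here is a rough Python sketch, assuming a `queue.Queue` plays the role of the LabVIEW queue and a dict plays the role of the cluster carried in a shift register between cases. The message names are hypothetical, and this sketch expects an "Exit" message, since `q.get()` blocks on an empty queue just like a dequeue with no timeout.

```python
import queue
from typing import Any, Dict, List, Tuple

Message = Tuple[str, Any]


def run_handler(messages: List[Message]) -> Dict[str, Any]:
    """A minimal queued message handler: one loop, one queue, one case per message."""
    q: "queue.Queue[Message]" = queue.Queue()
    for m in messages:
        q.put(m)
    # Data carried between cases, like a cluster in a shift register.
    state: Dict[str, Any] = {"count": 0, "log": []}
    while True:
        name, data = q.get()  # blocks until a message arrives
        if name == "Increment":
            state["count"] += data
        elif name == "Log":
            state["log"].append(f"count={state['count']}")
        elif name == "Exit":
            break
        else:
            state["log"].append(f"unhandled: {name}")
    return state
```

In a real program the Booleans' event structure would enqueue these messages, and cases could enqueue follow-up messages themselves.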

-

Years ago, as a proof of concept, I made a LabVIEW program that installed packages without needing VIPM installed. As Michael said, the files are zips with a specific structure, a spec file defining where the files should be placed, and optional pre/post VIs to run. Obviously VIPM can do more, like tracking down dependent packages from the network, resolving version information, and a bunch of other things. But if you just have a VIPC containing all the packages you need to install, it probably wouldn't be too hard to make a LabVIEW program that installs just that.
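The "zip plus a spec saying where files go" idea can be sketched in Python with the standard `zipfile` module. This is only an illustration: the `destinations` mapping is a hypothetical, already-parsed stand-in for the spec file and is not the real VIPM spec format, and pre/post VIs and dependency resolution are left out.

```python
import os
import tempfile
import zipfile
from typing import Dict, List


def install_from_zip(zip_path: str, destinations: Dict[str, str]) -> List[str]:
    """Extract files from a package zip using a prefix -> destination-folder mapping.

    `destinations` is hypothetical (NOT the real VIPM spec format).
    Prefixes should end with "/".
    """
    written: List[str] = []
    with zipfile.ZipFile(zip_path) as zf:
        for name in zf.namelist():
            if name.endswith("/"):
                continue  # skip directory entries
            for prefix, dest_dir in destinations.items():
                if not name.startswith(prefix):
                    continue
                parts = name[len(prefix):].split("/")
                if not parts[0] or ".." in parts:
                    break  # ignore empty or path-escaping names
                target = os.path.join(dest_dir, *parts)
                os.makedirs(os.path.dirname(target), exist_ok=True)
                with zf.open(name) as src, open(target, "wb") as dst:
                    dst.write(src.read())
                written.append(target)
                break  # first matching prefix wins
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pkg = os.path.join(tmp, "demo.zip")
        with zipfile.ZipFile(pkg, "w") as zf:
            zf.writestr("files/lib/a.txt", "hello")
            zf.writestr("spec", "ignored in this sketch")
        print(install_from_zip(pkg, {"files/": os.path.join(tmp, "user.lib")}))
```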

-

I've not used LabVIEW 64-bit for anything real, but looking at the package I believe it should be compatible. What error are you seeing exactly?

-

I have seen VIPM 2020 do nothing when launched. I looked in Task Manager and saw VIPM trying to start, so I killed it, and noticed a helper application that I think had "service" in its name. I killed that too, and then relaunching 2020 worked. This might be what you are running into too.

-

Antoine Chalons said:

I was wondering if anyone has seen similar situations?

I haven't, and my initial reaction is that I would quit a job like the one you described. The closest I came was when my boss sat me down to show me his 5-year plan for the group, since he had heard I was planning on quitting soon and hoped his new road map would inspire me to stick around. I thanked him profusely for showing me his plans, because it meant I knew I was making the right decision by leaving. I quit, he started steering toward an iceberg, and he was fired about a year later. Later a headhunter contacted me saying a job opportunity was a perfect fit for me. I had to inform him that I had already had that job, and listed the reasons why I wouldn't come back. Someone who was still there tried to get me to come back, only to realize they were one of the reasons I left. Things have not sounded all that great since.

-

So there are plenty of improvements I could suggest for readability, but overall I think the code isn't bad. A few comments would help, and I'd prefer fewer feedback nodes and instead code that calls it in a loop, but to be fair that might just have been how you demoed it. I think all that is needed is to try not to use the dynamic data type. It can do unexpected things and can hide some of what is going on inside those functions you need to open the configure dialog for, which makes the code less readable. So I'd get rid of the To Dynamic Data and instead use the Array Subset function on the 2D array of doubles. This should let you get just the two columns you want and write those to the TDMS file. You may have to mess with the decimation, and possibly transpose the 2D array. I can never remember how these functions work, so I'm often writing test code to see if the columns end up the way I want. This of course means you also use Array Subset on the 1D array of strings, which is the channel names.
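The bookkeeping here, picking two columns out of a row-major 2D array and the matching channel names, is easy to get backwards, so a quick text sketch may help. This Python version assumes each inner list is one row (one sample of every channel) and returns the chosen data transposed so each inner list is one channel; whether your TDMS write wants that orientation depends on how it is wired, so check it with a small test as described above. `select_columns` is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def select_columns(data: List[List[float]], names: List[str],
                   columns: Sequence[int]) -> Tuple[List[List[float]], List[str]]:
    """Keep only the chosen columns of a row-major 2D array, plus matching channel names.

    The result is transposed: each inner list holds one channel's samples.
    """
    per_channel = [[row[c] for row in data] for c in columns]
    return per_channel, [names[c] for c in columns]
```

Taking the names with the same column indices is the text equivalent of applying Array Subset to the 1D string array with the same index and length.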

As for the time entry, it looks like it works, but might I suggest a different string format? Here is a post that shows how to use Format Into String with the timestamp input to return an ISO standard string format. It's more standard, and if a log is moved from one time zone to another, it can still be clear when the log was made.
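For comparison, this is what an ISO 8601 timestamp with its UTC offset looks like when produced in Python with the standard `datetime` module. It is not the LabVIEW format string from the linked post, just an illustration of the target format and why the offset makes logs unambiguous across time zones.

```python
from datetime import datetime, timedelta, timezone


def iso_timestamp(t: datetime) -> str:
    """Format a timezone-aware datetime as ISO 8601, to the second, with its UTC offset."""
    return t.isoformat(timespec="seconds")


def log_time_now() -> str:
    # Local time with the machine's UTC offset attached.
    return iso_timestamp(datetime.now().astimezone())


if __name__ == "__main__":
    eastern_summer = timezone(timedelta(hours=-4))
    print(iso_timestamp(datetime(2020, 6, 1, 14, 3, 7, tzinfo=eastern_summer)))
```

The same instant written from a machine in UTC would read `2020-06-01T18:03:07+00:00`, and anyone reading either log can tell they refer to the same moment.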

-

Yeah, targeting upper management likely would result in more sales. But it may also result in fewer engineers willing to use NI and its products, having been force-fed the wrong, or a less appropriate, tool for the job. I worked with a group that had VeriStand 2009 forced down from upper management. NI came in with a dazzling presentation and convinced them to change their dyno control systems over to it and the PXI platform. They did so without consulting the engineers, and as a result there was a daily battle where I would repeat "Rewrite VeriStand" whenever some task that is trivial in LabVIEW couldn't be done in it. VeriStand 2009 ended up being somewhat of a flop, and from what I remember nothing ported to 2010, since NI made some major rewrites of it. We eventually got an apology from NI which basically amounted to: sorry we gave you the impression this product was more usable than it actually was.

Luckily, my current boss knows NI and its offerings, but trusts my opinion over theirs. They need to convince me something is a good fit before they can convince him. They have some new test platforms that directly align with our business (battery test in automotive), and so far I'm unimpressed except when it comes to hardware.

Efficient Real-Time Development Practices

in Real-Time

Since this thread I've been using separate projects for each target. Debugging isn't that difficult until the problem is some interaction between the two systems. I'll build my RTEXE, deploy it, then run my Windows VIs from the Host Only project. I might then realize the issue is on the RT side, so I build the Windows EXE, close the Host Only project, open the RT Only project, open and run my main RT VI, then run my Windows EXE. There is lots of building and deploying when debugging. But since most issues I encounter are clearly one target's bug or the other's, it isn't too bad: I just open that project, run the source, and debug the issue while the other target runs its binary. Multiple targets in one project really seems like one of those features that works in theory but, in the real world, falls short of expectations.