Posts posted by Neil Pate

-

2 hours ago, nagesh said:

Hi folks,

I need to know if this is possible?

If yes, then what are the possibilities?

1. The user should select the signals (by check box or similar).

2. My waveform chart should display only these signals on the front panel (the number of signals in the waveform chart must change based on this selection).

3. Ideally, clicking a button pops up a new front panel with the waveform chart.

The graphs have a built-in ability to allow the plots to be turned on/off at runtime. I think the option is hidden by default and might be called something like Visibility. That will probably solve your problems (1) and (2).

-

Check this out https://fabiensanglard.net/gebb/index.html

I just wish I had time (and the brainpower!) to do a deep dive.

-

4 hours ago, JKSH said:

I'm curious: What are some examples of Win32 API calls that have been most useful in LabVIEW programs?

I too have a sprinkling of Win32 calls I have wrapped up over the space of many years. Most are pretty simple helper stuff like bringing a window to the front or printing or getting/setting current directory etc. No rocket science here.

-

Obviously I don't know what I am talking about, but could this in the future be used to simplify configuring Win32 API DLL calls in LabVIEW?

-

18 hours ago, rharmon@sandia.gov said:

You guys always have the best information/ideas... Thank you all...

Since I really like the new entries at the top of the log file, and my major worry is that the file gets too big over time and causes the log write to consume too much time, I really like dhakkan's approach of checking the file size periodically and flushing the file and saving the data to numbered files.

I totally get you wanting to display newer entries first, but surely this is just a presentation issue and should not be solved at the file level? I really think you are going against the stream here by wanting newer entries first in the actual file on disk. It is almost free to append a bit of text to the end of a file, but constantly rewriting it to prepend seems like a lot of trouble to go to.

Rotating the log file is a good idea regardless though.

Notepad++ has a "watch" feature that autoreloads the file that is open. It is not without its warts though as I think the Notepad++ window needs focus.
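To make the append-then-present idea concrete, here is a minimal Python sketch (not LabVIEW, and not anyone's actual implementation from this thread). It appends to the end of the file, rotates to a numbered file once a size limit is reached, and reverses the order only when reading for display. The names `append_entry` and `newest_first` and the 1 MB limit are assumptions made up for illustration.

```python
from pathlib import Path

MAX_BYTES = 1_000_000  # hypothetical size limit before rotating


def append_entry(log_path: Path, line: str, max_bytes: int = MAX_BYTES) -> None:
    """Append one line; rotate to a numbered file first if the log is too big."""
    if log_path.exists() and log_path.stat().st_size >= max_bytes:
        # Find the first unused numbered name: app.log.1, app.log.2, ...
        n = 1
        while log_path.with_name(f"{log_path.name}.{n}").exists():
            n += 1
        log_path.rename(log_path.with_name(f"{log_path.name}.{n}"))
    # Appending is cheap: nothing already on disk is rewritten.
    with log_path.open("a", encoding="utf-8") as f:
        f.write(line.rstrip("\n") + "\n")


def newest_first(log_path: Path, count: int = 50) -> list[str]:
    """Return the last `count` lines, newest first, for display only."""
    lines = log_path.read_text(encoding="utf-8").splitlines()
    return lines[-count:][::-1]
```

The file on disk stays in chronological order; only the viewer flips it, so the cost of a write does not grow with the size of the log.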

-

1 hour ago, Antoine Chalons said:

NXG team will be re-assigned to other projects and a large part focusing on non-Windows OS support 😮

That is good to hear.

-

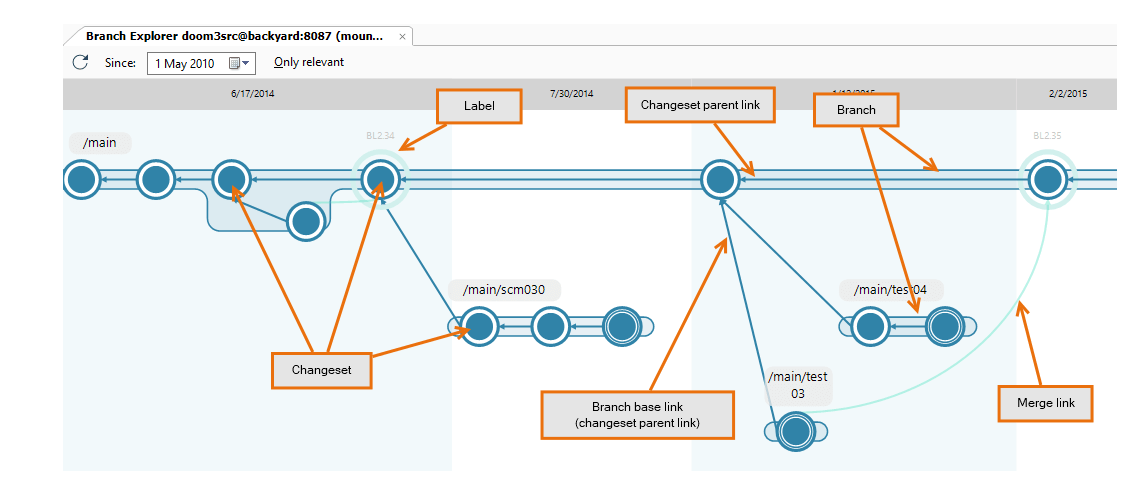

Tangentially, I use Plastic SCM at work (Unity/C# dev); it really hits the sweet spot of an easy-to-use but powerful DVCS. I believe it is modelled on Git but designed to trivially handle many branches.

-

OK, so deleting the branch on the remote only deletes it from being used in future, it still exists in the past and can be visualised? Sorry I misunderstood and thought that git did magic to actually remove the branch in the past (which would be a bad thing). I know about rebase but have never thought to use it.

-

23 hours ago, LogMAN said:

You got it right. "Delete branch" will delete the branch on your fork. It does not affect the clone on your computer. The idea is that every pull request has its own branch, which, once merged into master, can safely be deleted.

This can indeed be confusing if you are used to centralized VCSs.

I still don't really get this. I want to see the branches when I look in the past. If the branch on the remote is deleted then I lose a bit of the story of how the code got to that state don't I?

-

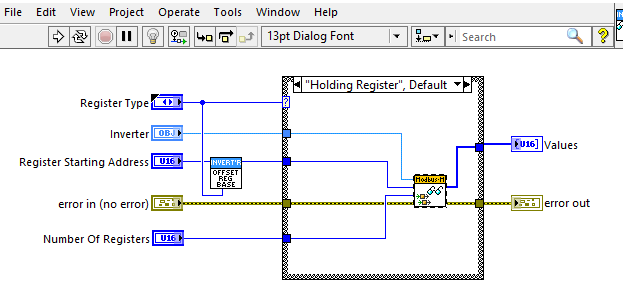

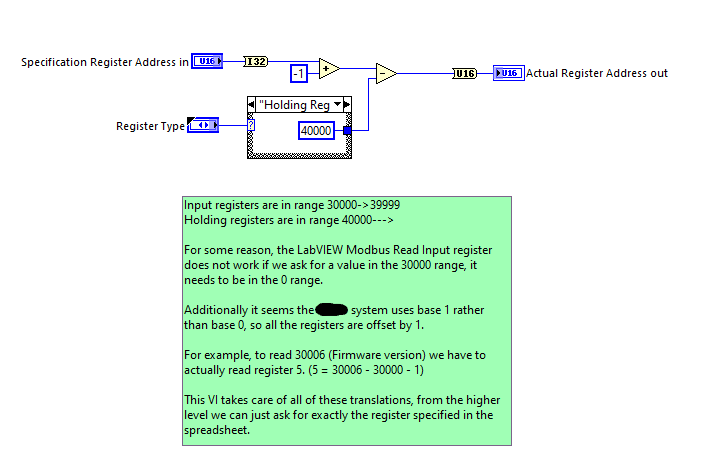

It's been a while but when using the NI Modbus library I found there was some weirdness regarding what the base of the system is. This might just be my misunderstanding of Modbus but for example to read a holding register that was at address 40001 I would actually need to use the Read Holding Register VI with an address of 1 (or 0).

This snippet works fine for reading multiple registers, but see the VI I had to write on the left to do the register address translation.
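For anyone hitting the same confusion, the translation can be sketched in a few lines of Python (this is not the VI from the screenshot, just an illustration). It assumes the traditional 5-digit reference convention, where the leading digit names the table and the remaining digits are a 1-based number, so 40001 is holding register offset 0. The function name `split_reference` is made up, and whether a given library wants the 0-based or 1-based value is something to check against its documentation.

```python
# Table selected by the leading digit of a traditional 5-digit reference.
TABLES = {
    0: "coil",
    1: "discrete input",
    3: "input register",
    4: "holding register",
}


def split_reference(reference: int) -> tuple[str, int]:
    """Translate a reference such as 40001 into (table, zero-based offset)."""
    prefix, number = divmod(reference, 10000)
    if prefix not in TABLES or number == 0:
        raise ValueError(f"not a valid 5-digit Modbus reference: {reference}")
    # References count from 1; the offset sent on the wire counts from 0.
    return TABLES[prefix], number - 1


print(split_reference(40001))  # ('holding register', 0)
```

If the library you are using expects 1-based addresses, pass `offset + 1` instead; the "1 (or 0)" uncertainty above is exactly this difference.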

-

So I am pretty new to GitHub and pull requests (I still am not sure I 100% understand the concept of local and remotes having totally different branches either!)

But what is this all about? I have done a bit of digging and it seems the current best practice is indeed to delete the branch when it is no longer needed. This is also a totally strange concept to me. I presume the branch they are talking about here is the remote branch?

Confused...

-

NI: "Engineer Ambitiously" "Change some fonts and colours, that will shut up the filthy unwashed masses who are not Fortune 500 companies"

-

So I am not a Modbus expert, but have definitely written information to a PLC in the past. As far as I know you cannot write to input registers, I presume you can write to output registers (are they called coils?). I don't have the toolkits installed on my PC right now so I cannot check.

This code below works fine for setting Holding Registers. Have you tried writing to the Holding Registers?

-


3 hours ago, mabe said:

Wow! Maybe next time they will default the VIs front panel to pure white too.

BTW, I don't understand why (after nearly 35 years) the block diagram color in LV is still part of the VI instead of an IDE preference. Even if I change my local setting to some gray for my VIs, I still get tons of VIs from other developers with the default burning pure white.

I do agree with you, but must confess that at least once in my dark past I have coloured a block diagram. It was a VI I hated and wanted to get rid of so I coloured the background an awful red colour to remind me every time I opened it.

-

1 hour ago, Ciro99 said:

Hi everyone, I need help with a project where I have a LOGO! Siemens 6ED1052-1HB08-0BA0

that communicates the states of inputs and outputs via Modbus/Ethernet with Labview,

I wanted to ask you if it is possible, using labview to check the state of a Merker,

then to virtually change the status of the Merker from 1 to 0. I have already tried Ethernet Modbus.

thanks in advance for the help.

I cannot recall doing this with a LOGO! but have done it quite a bit with plain old S7s. The Modbus module (now open source) works just fine. What difficulties are you having?

-

Just to chip in here, I recall hearing that NI is going to be moving away from the regular biannual releases of LabVIEW. I cannot remember where I heard or read this though.

-

Have you tried to mass compile?

-

Nice article also, part 1 of 7!

https://arstechnica.com/science/2021/01/the-curious-observers-guide-to-quantum-mechanics/

-

2 hours ago, Antoine Chalons said:

Actually - for me - Linux is always a big roadblock 😢

I've taken a similar approach to what you did with SQLite - finding the lib path once at connection, store it in the class private data and setting all the CLFN with a library path input.

Of course running that on Windows goes fine, but when trying to run it on a Linux RT target (I have an IC-3173), as soon as the running code gets to a VI that calls the libpq.so.5.7 the execution stops and I get a message that the target was disconnected.

I checked the access right for the file /usr/lib/libpq.so.5.7 and they look fine for the account "lvuser"

I'm now thinking the issue in libPQ version because I followed this tutorial to install PostgreSQL client on NI Linux RT, it installs version 9.4.17 (latest available on http://download.ni.com/ni-linux-rt/feeds/2019/x64/core2-64/) and the version used in the package is 9.6

How can I install PostgreSQL client 9.6 on NI Linux RT ?

Are you sure you burned the correct amount of sage during the incantation?

-

3 hours ago, Antoine Chalons said:

In reply to Neil's opening, here's my 2 cents about what I suggest NI should do :

From what I read in this thread

It seems that NI's NXG dev team was made of people who didn't have enough love for LV-CG, or enough experience using LV-CG for real world applications.

Well now that NI's plan is to move forward with LabVIEW, my suggestion is that they make every NXG dev spend 50% of their time contributing on LabVIEW related open source project.

There are a lot of great projects on GitHub, Bitbucket, GitLab, etc. started by passionate LabVIEW users who have the greatness to share their toolkits, frameworks, APIs, etc.

They have limited resources in most cases, so now that NI has a full team of developers with no real purpose and not enough understanding of what makes LV-CG great, let's make them contribute to growing the LV-CG eco-system.

edit :

if you agree >> https://forums.ni.com/t5/LabVIEW-Idea-Exchange/Make-NXG-dev-team-contribute-to-LV-related-open-source-projects/idi-p/4110996

I think the NXG team was outsourced to a C# dev house outside of the US. I suspect the contract has been terminated.

-

5 hours ago, Bryan said:

I agree, I have garnered great disdain for Winblows over the years as far as the negative impacts to our testers from updates mandated by IT departments, obsolescence, the pain to install unsigned drivers, just to name a few. I would hate to see NI stop support for Linux as it has been growing in popularity and getting more user friendly.

Linux is a great and stable platform, though not for the faint of heart. It takes more effort and time to build the same thing you could do in shorter time with Windows. However, if LabVIEW were open source and free, you could theoretically build systems for just the cost of time and hardware. I've been wishing over the years that they would support LabVIEW on Debian based systems as well.

I've created two Linux/LabVIEW based setups in the past and never had the issues I've run into with Windows. Yes, it took more time and effort, but as far as I know the one(s) I created (circa 2004-5) have been working reliably without issue and have not even required any troubleshooting or support since their release. One is running Mandrake and the other an early version of Fedora.

Maybe 2021 will be the year of Linux on the desktop, but given that this has been predicted every year since about 2003 I would not hold my breath.

LabVIEW will never be open source. It would not make sense, nobody outside of NI would be able to maintain it. LabVIEW is not the Linux kernel which is of huge interest to millions of others. The area of the intersection on the Venn diagram of skilled enthusiastic LabVIEW developers, ultra-skilled C++ developers, and those with enough spare time is approximately zero. The full complement of engineers at NI can barely make any progress into the CAR list, what hope is there for anyone else?

-

Another option is Alice. It combines a 3D environment with a language quite similar to some of the others mentioned. It can be fun for younger kids as they can ignore the programming bit and just construct a 3D scene with some props quite easily.

-

- Popular Post

Dear Santa NI

I am now in my 40s with youngish kids, so despite the fact that all I got for Christmas this year was a Pickle Rick cushion I am not actually complaining. However, I would like to get my order in to the Elves as early as possible. This is my wishlist, in no particular order. I expect this list will not be to everyone's taste; this is ok, this is just my opinion.

- Make LabVIEW free forever. The war is over, Python has won. If you want to be relevant in 5 to 10 years you need to embrace this. The community edition is a great start but it is probably not enough. Note: I accept it might be necessary to charge for FPGA stuff where I presume you license Xilinx tools. NI is and has always been a hardware company.

- Make all toolkits free. See the above point.

- Remove all third party licensing stuff. Nobody makes any money from this anyway. Encourage completely open sharing of code and lead by example.

- Take all the software engineering knowledge gained during the NXG experiment and start a deep refactor of the current gen IDE. Small changes here please though... we should not have to wait 10 years.

- Listen to the feedback of your most passionate users during this refactor. NXG failed because you ignored us and just assumed we would consume whatever was placed in front of us. I am talking about the people like those reading this post on Christmas day in their spare time because they are so deeply committed to LabVIEW.

- My eyes are not what they used to be, so please bring in the NXG style vector graphic support so I can adjust the zoom of my block diagram and front panel to suit accordingly

- As part of the deep refactor, the run-time GUI needs to be modernised. We need proper support for resizable GUIs that react sensibly to high DPI environments.

- Bring the best bits of NXG over to current gen. For example the dockable properties pane. (Sorry not much else comes to mind)

- Remove support for Linux and Mac and start to prune this cross compatibility from the codebase. I know this is going to get me flamed for eternity from 0.1 % of the users. (You pretty much made this decision for NXG already). Windows 10 is a great OS and has won the war here.

- Get rid of the 32-bit version and make RT 64-bit compatible. You are a decade overdue here.

- Add Unicode support. I have only needed this a few times, but it is mandatory for a multicultural language in 2021 and going forward.

- Port the Web Module to Current Gen. All the news I have heard is that the Web Module is going to become a standalone product. Please bring this into Current Gen. This has so much potential.

- Stop adding features for a few years. Spend the engineering effort polishing.

- Fix the random weirdness we get when deploying to RT

- Open source as many toolkits as you can.

- Move the Vision toolkit over to OpenCV and make it open source

- Sell your hardware a bit cheaper. We love your hardware and the integration with LabVIEW but when you are a big multiple more expensive than a competitor it is very hard to justify the cost.

- Allow people to source NI hardware through whatever channel makes most sense to them. Currently the rules on hardware purchasing across regions are ridiculous.

- Bring ni.com into the 21st century. The website is a dinosaur and makes me sad whenever I have to use it

- Re-engage with universities to inspire the next generation of young engineers and makers. This will be much easier if the price is zero

- Re-engage with the community of your most passionate supporters. Lately it has felt like there is a black hole when communicating with you

- Engineer ambitiously? What does this even mean? The people using your products are doing their best, please don't patronise us with this slogan.

- Take the hard work done in NXG and make VIs into a human-readable, non-binary format so that we can diff and merge with our choice of SCC tools.

- Remove all hurdles to hand-editing of these files (no more pointless hashes for "protection" of .lvlibs and VIs etc)

- Openly publish the file formats to allow advanced users to make toolkits. We have some seriously skilled users here who already know how to edit the binary versions! Embrace this, it can only help you.

- Introduce some kind of virtualenv à la Python, i.e. allow libraries and toolkits to be installed on a per-project basis. (I think this is something JKI are investigating with their new Project Dragon thing.)

- For the love of all that is holy do not integrate Git deeply into LabVIEW. Nobody wants to be locked into someone else's choice of SCC. (That said, I do think everyone should use Git anyway, this is another war that has been won).

That is about it for now.

All I want is for you guys to succeed so my career of nearly 20 years does not need to be flushed down the toilet like 2020.

Love you

Neil

(Edited: added a few more bullets)

-

12

12

-

3

3

-

1

1

-

Ditto to what Rolf said.

Selecting signals by user - Waveform chart

You can toggle it here:

Then each plot can be individually turned on or off