Posts: 1,156

Days Won: 102

Posts posted by Neil Pate

-

Another weird thing. When I close a project it often does not close the open VIs that are part of the project!

Maybe my installation is just busticated.

-

1 hour ago, X___ said:

I am refraining from upgrading without pressing reasons,

Unfortunately I really need the TLS feature of TCP/IP connections, so I am going to have to lump it. I agree, though, that 2020 feels like one of the "skip" releases.

-

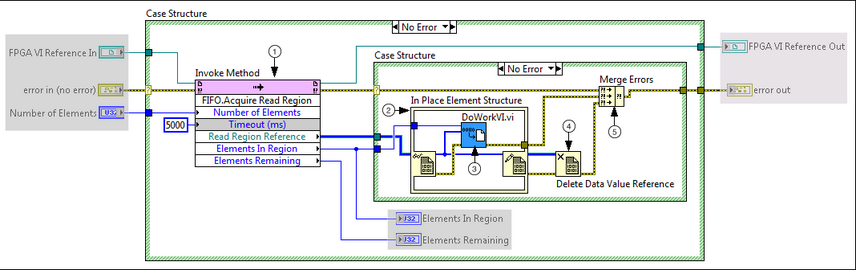

Can anyone shed some light on the best practices for the FIFO Acquire Read Region technique? I have never used it before. I have always just done the usual trick of reading zero elements to get the number of elements available in the buffer, and then reading once there are enough elements for my liking. To my knowledge this is a good technique, and I have used it quite a few times with no actual worries (including in some VST code with a ridiculous data rate).

This screenshot is taken from here.

Is this code really more efficient? Does the Read Region block with high CPU usage like the Read FIFO method does? (I don't want that)

Has anyone used this "new" technique successfully?

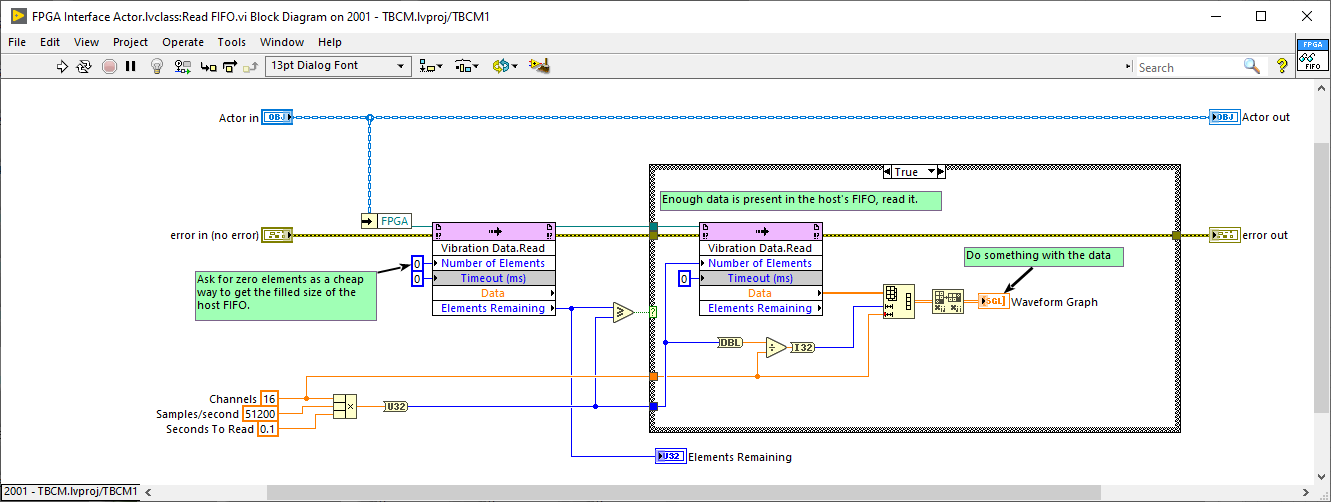

For reference this is my current technique:
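The zero-element polling pattern described in this post can be sketched in text form as follows. This is not the LabVIEW code from the screenshot, and it does not show Acquire Read Region. `SimulatedFifo` and `poll_read` are hypothetical stand-ins, where a read returns the data plus the number of elements still remaining, in the spirit of the host-side FIFO Read. Reading only whole multiples of `block_size` is an added assumption so that frames are never split.

```python
from collections import deque
from typing import Deque, List, Tuple


class SimulatedFifo:
    """Hypothetical stand-in for a host-side DMA FIFO; not an NI API."""

    def __init__(self) -> None:
        self._buffer: Deque[int] = deque()

    def write(self, samples: List[int]) -> None:
        """Simulates the FPGA side pushing samples into the FIFO."""
        self._buffer.extend(samples)

    def read(self, count: int) -> Tuple[List[int], int]:
        """Return (data, elements_remaining). count=0 only reports the backlog."""
        if count > len(self._buffer):
            raise ValueError("not enough elements (a real read would block or time out)")
        data = [self._buffer.popleft() for _ in range(count)]
        return data, len(self._buffer)


def poll_read(fifo: SimulatedFifo, block_size: int) -> List[int]:
    """Zero-element read to query the backlog, then read only whole blocks."""
    _, available = fifo.read(0)
    if available < block_size:
        return []  # not enough data yet; the caller waits briefly and polls again
    to_read = (available // block_size) * block_size
    data, _ = fifo.read(to_read)
    return data
```

In a real loop the caller would wait a little when `poll_read` returns an empty list instead of sitting in a blocking read. That is the property the question above is trying to preserve.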

-

Following up on some of the information here, this is the bug I am seeing regularly in LV2020:

When I close a project I never want to defer these changes; I am used to this option being "don't save changes". See the video, which clearly shows what is happening: if I re-open the same project from the Getting Started Window it opens instantaneously, which is further proof that it is not actually closed. I really hope this is not a new feature. This is really dangerous behaviour, as you think the project is closed and go off to commit files or whatever.

This has been reported to NI, no CAR as yet.

Anyone else seeing behaviour like this?

-

Never seen this kind of thing running on plain metal across many versions of LV. I do often lose my right Control key though if I use VirtualBox as I think that key is reserved for other stuff.

-

1 minute ago, MarkCG said:

I have seen this too -- looking for RT Get CPU loads.vi in the wrong place, breaking the VI. Not sure how LabVIEW gets into that state but it's been a bug for years. Deleting all RT utility VIs in your code, then adding them again seemed to fix it.

I am about to reimplement my System Status actor from scratch. This time, though, I am staying far away from RT Get CPU Loads and am going to try to read the load using the Linux command line (maybe "top" or similar). Urgh...
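Instead of scraping `top`, a common Linux alternative is to read the aggregate `cpu` line of `/proc/stat` twice and compare the idle and total tick counts. `top` derives its CPU percentages from the same counters. Below is a minimal Python sketch of that arithmetic. It assumes the NI Linux Real-Time target exposes the standard `/proc/stat`, which is not verified here, and the function names are hypothetical. On the cRIO the same logic would more likely be ported to a VI or run from a shell command.

```python
import time
from typing import Tuple


def parse_cpu_times(proc_stat_text: str) -> Tuple[int, int]:
    """Return (idle, total) ticks from the aggregate "cpu" line of /proc/stat."""
    for line in proc_stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":  # skip the per-core "cpu0", "cpu1", ... lines
            values = [int(v) for v in fields[1:]]
            # Column order: user nice system idle iowait irq softirq steal guest guest_nice
            idle = values[3] + (values[4] if len(values) > 4 else 0)  # idle + iowait
            # guest/guest_nice are already counted in user/nice, so sum only the first 8
            total = sum(values[:8])
            return idle, total
    raise ValueError("no aggregate 'cpu' line found")


def cpu_load_percent(before: str, after: str) -> float:
    """CPU load (0-100 %) between two /proc/stat snapshots."""
    idle0, total0 = parse_cpu_times(before)
    idle1, total1 = parse_cpu_times(after)
    delta_total = total1 - total0
    if delta_total <= 0:
        return 0.0  # no ticks elapsed between the snapshots
    return 100.0 * (1.0 - (idle1 - idle0) / delta_total)


def sample_cpu_load(interval_s: float = 1.0) -> float:
    """Take two snapshots interval_s apart on a live Linux system."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval_s)
    with open("/proc/stat") as f:
        after = f.read()
    return cpu_load_percent(before, after)
```

Counting iowait as idle is a design choice. Drop it from `idle` if time spent waiting on I/O should show up as load.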

-

How frequently? I must say I cannot recall seeing this, certainly not regularly enough for it to be a nuisance. Can you post a video?

-

22 hours ago, X___ said:

I suggest you split the thread, as it may become confusing if everyone refers to a different bug. Mine has been submitted and I will report the CAR when I get one.

Sorry, you are right. Bug report submitted.

-

More weirdness here. When I close a project it offers the option to defer, and now it only prompts me to save when I actually close the Getting Started Window. What I normally want is just to close the project immediately and ignore any changes (you know, like every other piece of software on the planet).

NI, is this really a new "feature"?

-

Seriously hate the class mutation history. What a terrible feature.

-

20 minutes ago, PiDi said:

Those RT utility VIs get broken whenever you try to open code in My Computer project context instead of RT. On which target do you develop your framework? Do you have any shared code between PC and RT? If yes - try to get rid of it.

Currently the only code I have in the My Computer bit is the helper stuff I have to reset the class mutation history. I did not think I could run that from the RT context.

It is so weird: I have VIs that have nothing to do with the RT Load VI that still try to load it when I save them. It is as if it were somehow in the mutation history (which it might be, as I did clone an actor that had some stuff like that).

-

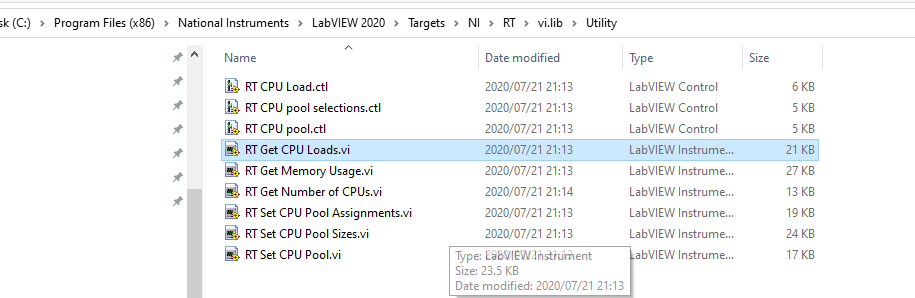

Urgh, I am working on a new framework for RT and LabVIEW has gotten itself so confused. I am getting really weird things when I try and duplicate some of my (homegrown) actor classes. LabVIEW is searching for some of the RT utilities in totally the wrong directory.

I have tried mass compiling and often that just crashes, but I did manage to get this log message:

Search failed to find "NI_Real-Time Target Support.lvlib:RT Get CPU Loads.vi" previously from "<vilib>:\Utility\RT Get CPU Loads.vi" +=+ Caller: "System Status Actor.lvclass:Update.vi"

Now, this library does not exist in that directory. Rather, there is another vi.lib in Targets\NI\RT, and the library lives in that vi.lib directory.

Now I am kinda stuck... My code runs sort of OK. Sometimes it just refuses to run on the cRIO (but I have seen this behaviour with RT for years now, so I have just gotten used to it), but if I try to mass compile it LabVIEW just crashes. I have tried resetting the class mutation history, but this also crashes LabVIEW 😞

Any ideas what I can try? This is a real pain. I do of course have my history in git, but I don't really feel like stepping back in time and figuring out what the heck actually happened.

-

Really good value and a real chance of not being vapourware!

-

On 7/16/2020 at 2:48 AM, JKSH said:

Voted ages ago.

Thanks for posting detailed instructions, @hooovahh: https://forums.ni.com/t5/NI-Linux-Real-Time-Discussions/NI-Linux-Real-Time-PXI-x86-VM/m-p/3561064/highlight/true?profile.language=en#M2107 That's what helped me get started in the first place!

Absolutely. This is such a good tool it is a bit sad NI does not officially endorse this.

By the way, I found this thread a bit more current.

In particular, this post gives step-by-step instructions on how to begin: https://forums.ni.com/t5/LabVIEW-Real-Time-Idea-Exchange/Provide-a-Virtual-Machine-VM-in-which-to-run-LV-RT-systems-on/idc-p/3953070/highlight/true#M598

and this one has some further instructions for getting the embedded GUI up and running: https://forums.ni.com/t5/LabVIEW-Real-Time-Idea-Exchange/Provide-a-Virtual-Machine-VM-in-which-to-run-LV-RT-systems-on/idc-p/3953394/highlight/true#M603

-

Update: OK, this does "just work". I did what @JKSH tried and ran with a VM image, and the results are good. In the attached video I have a re-entrant actor that I instantiate twice (just by dropping it on the BD twice).

I have not tried building this into an rtexe, but I presume it should also work. Edit: it works fine as an rtexe too 🙂

-

Thanks everyone.

Sorry, I should have made it a bit clearer: I am familiar with the embedded UI in the Linux-based RT environment, and I have used it on several projects.

My question is more around the inconsistency. I can get some windows to show up in the Windows host under some circumstances and this is what was confusing me.

I am designing a lightweight actor framework that runs on RT (nothing at all to do with the NI AF) and part of this is the ability to show or hide FP windows. I plan on mostly using this for debugging as the system will normally run headless but I want to see what it can do. I have a similar framework I have developed for "normal" Windows based LabVIEW applications and it works really nicely, but there of course I expect the user to interact with the GUI.
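Here is a rough text analogue of the message-driven show/hide idea. It is not the author's framework and not NI's Actor Framework. In this minimal Python sketch each actor instance owns its own inbox queue and state, much like separate clones of a re-entrant VI. The hypothetical messages `ShowFP` and `HideFP` just flip a `panel_visible` flag that stands in for the front panel window.

```python
import queue
import threading
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    name: str  # hypothetical message names: "ShowFP", "HideFP", "Stop"


class Actor:
    """Minimal message-loop actor; each instance has its own inbox and state."""

    def __init__(self, label: str) -> None:
        self.label = label
        self.panel_visible = False  # stands in for the front panel window state
        self.log: List[str] = []
        self._inbox: "queue.Queue[Message]" = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self) -> None:
        self._thread.start()

    def send(self, msg: Message) -> None:
        self._inbox.put(msg)

    def join(self, timeout: float = 5.0) -> None:
        self._thread.join(timeout)

    def _run(self) -> None:
        while True:
            msg = self._inbox.get()  # blocks until a message arrives
            if msg.name == "ShowFP":
                self.panel_visible = True
            elif msg.name == "HideFP":
                self.panel_visible = False
            elif msg.name == "Stop":
                self.log.append("stopped")
                return
            self.log.append(msg.name)
```

Two instances started side by side behave independently, which mirrors dropping the same re-entrant actor on a block diagram twice.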

@JKSH thanks for confirming this. This is a bit of a pity but not a dealbreaker. I don't have a screen attached as I don't have the right USB-C/DP cable that plugs into the cRIO. Will buy one ASAP!

-

Just now, Jordan Kuehn said:

I was getting this in LV2018. I get something similar still in LV2020, as well as projects that don't seem to leave memory even after they are all closed out to the main LV screen. They open back up instantly.

Yes I am seeing that too. The project window opens suspiciously fast after I have closed it in my LV2020 project.

-

Am I being dumb here?

I have a linux based cRIO and have two problems, surely related:

1. I want a VI to show its FP when called (just using the tick boxes in the SubVI Node Setup config).

2. I want to be able to programmatically show and hide front panel windows. I pass a message to one of my running VIs and tell it to open the FP window.

Neither of these seems to work. In (1) the SubVI is definitely running, it just does not show the FP window. In (2) the method to open the FP window executes with no error, but nothing visibly changes.

Now, this is not the kind of thing I have done in the past with a cRIO as I am used to running them headless.

Note: I do not actually have a display plugged into the cRIO, I was expecting the windows to open on my dev PC. Is this the mistake I am making?

Note2: if I move all this code out of the RT platform to the My Computer bit everything works perfectly as expected.

Note3: I probably should have mentioned that the VI I am trying to control is a preallocated re-entrant clone. If I change it to non-reentrant, (1) works correctly but (2) still does not.

-

Confirmed in LV2019 64-bit and LV2020 32-bit running on Windows.

Urgh this is horrible 😞 but I am actually kinda surprised I missed this as I am finishing up quite a large 2019 project and moving stuff around in the project is something I do quite regularly for housekeeping.

As you said, it was difficult before but possible. I am hoping this is just a bug and not an added "feature" that the "data" told NI that customers wanted.

My latest weird project bug with LV2020 is when I close the project it does not automatically close any windows I had open 🤬

-

By the way, this has also fixed an issue I forgot to mention: right-clicking in the project took a really long time to bring up the menu the first time.

-

2 minutes ago, Darren said:

I'm glad that helped. But that also tells us that your installed compiled cache for core LabVIEW was either missing or somehow broken. You may want to consider repairing your LabVIEW 2020 install, or doing a mass compile of the whole LabVIEW folder, to make sure your core compiled object cache is up to date.

Cool, I will do the full mass compile this evening.

-

2 hours ago, Darren said:

Try mass compiling the [LabVIEW 2020]\resource folder? LabVIEW should install the compiled cache for this already, but maybe something got messed up?

Nice tip, Darren, thanks. That did seem to work.

Got heaps of errors when mass compiling though! Will do it again sometime and log the results to disk.

-

24 minutes ago, LogMAN said:

Sounds like the compiler is busy figuring things out.

Just tested it on the QMH template and it is as fast as always (1-2 seconds). That said, the template is not very complex. Do you get better results with a simple project like that?

Just did a quick test. I started LV2020, did not create or open a project, and just created a new VI. I did some simple stuff and then created a SubVI from some of the diagram contents. Just doing this took nearly 30s 😞

-

I have been using LV2020 for a bit recently and have noticed that it is extremely slow to create a SubVI from a selection. This is the kind of thing I do quite often, and I am used to it happening almost instantaneously. In LV2020 this operation now makes the LabVIEW process ramp up CPU usage, my laptop fans start to spin noisily, and about 30s later I get my SubVI.

Anyone else seen this?

SHA256 Hash Algorithm

in LabVIEW General

Posted · Edited by Neil Pate

@gb119 I am trying to use your HMAC-SHA256 to generate a token that gives me access to an Azure IoT Hub. I have a snippet of C# code that works fine, and I am trying to translate it into LabVIEW but am not having much luck.

I don't really understand the HMAC stuff properly. Are all SHA256 HMAC implementations the same?

The LabVIEW code above is mostly correct. The decoding of the URI is not quite the same, but I manually change this to match the C# code.

I have verified that stringToSign is identical in both implementations, so something is happening after this. The string coming out of the HMAC SHA256 is completely different from the C# result and does not look like a Base64 string at all.

My knowledge of this kinda stuff is not good so I am probably missing something super obvious.

Any tips?

PS: apologies for resurrecting this old thread. I should certainly have started a new one.
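On the question of whether all implementations are the same: HMAC-SHA256 is standardized (HMAC per RFC 2104, with SHA-256). Any conforming implementation therefore produces the same 32 bytes for the same key bytes and message bytes. A mismatch like the one described is more likely to come from the bytes going in and out. Two usual suspects are:

- the shared access key is Base64 text and must be decoded to raw bytes before it is used as the HMAC key;
- the 32-byte digest must be Base64-encoded (not hex) and then URL-encoded inside the token.

Below is a minimal Python sketch of the commonly documented IoT Hub SAS token layout. Compare it against the working C# snippet rather than treating it as authoritative. The function name, parameters and example values are hypothetical.

```python
import base64
import hashlib
import hmac
from typing import Optional
from urllib.parse import quote_plus


def generate_sas_token(resource_uri: str, key_b64: str, expiry: int,
                       policy_name: Optional[str] = None) -> str:
    """Sketch of an IoT Hub style SAS token; expiry is seconds since the Unix epoch."""
    # 1. URL-encode the resource URI ("/" becomes "%2F").
    encoded_uri = quote_plus(resource_uri)
    # 2. String to sign: "<encoded uri>" + newline + "<expiry>".
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    # 3. The key is Base64 text; HMAC needs the decoded raw bytes, not the text.
    key_bytes = base64.b64decode(key_b64)
    # 4. HMAC-SHA256 yields 32 raw bytes; Base64-encode them (hex would not match a Base64 result).
    digest = hmac.new(key_bytes, string_to_sign, hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # 5. The Base64 signature can contain "+", "/" and "=", so URL-encode it too.
    token = (f"SharedAccessSignature sr={encoded_uri}"
             f"&sig={quote_plus(signature)}&se={expiry}")
    if policy_name is not None:
        token += f"&skn={policy_name}"
    return token
```

For example, `generate_sas_token("myhub.azure-devices.net/devices/mydevice", device_key, int(time.time()) + 3600)` would give a token valid for about an hour, where `device_key` is the Base64 key string. If the LabVIEW output looks like hex, step 4 is the first place to check. If it is Base64 but still different, step 3 is the next.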