-

Posts

1,824 -

Joined

-

Last visited

-

Days Won

83

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by Daklu

-

Mutation history only comes into play when your app encounters different versions of the same class at runtime. The classes don't mutate from version 8.5 -> 8.6 -> 2009 -> 2010 during the build. In principle clearing the mutation history might save you a little time during the build since you are eliminating the executable code required to perform the runtime mutations, but I'd be surprised if it was noticable and I'd be really surprised if it reduced your build time by 80%. As Jarod said, the easiest way to clear the mutation history of a large number of classes is to change their namespace. You don't need to rename the class to do that. Try this: 1. Add a new project library named "temp.lvlib" to your project. (I'm pretty sure you don't even have to save the library.) 2. In the project explorer, select all your source code and move it so it is a member of temp.lvlib. i.e. If all your code is in a virtual folder named "src," just drag that folder so it is now a member of the library. 3. Move the src folder out of temp.lvlib and back to it's original position in your project. 4. Remove temp.lvlib from your project. 5. Save all. Total time... ~20 seconds.

-

Results absolutely matter when applying the "hero" label. Suppose while driving the burning tanker away from the gas station it exploded next a school bus full of children? It's doubtful that person would be considered a hero, regardless of his good intentions. At the very least the results of the action must be no worse than the expected results had the action not been taken. Intent/motive is crucial when evaluating a person's character, but I don't think they play a significant role in determining heroism.

-

Unexpected Event Structure Non-Timeout Behavior

Daklu replied to Justin Goeres's topic in LabVIEW Bugs

I'll try to wrap it up and post a preview soon. So do I, but there are still implementation decisions that NI would need to make that will add complexity and/or limit their usefulness in some situations. Should the timer event be added to the front of the event queue or the back? Maybe it should be configurable? If so, how? Can timer events be turned on and off at run time? Can timer events be created or the timing parameters modified at run time? How do we configure multiple independent timer events on a single event struct? These are the kinds of functionalities people will want with automatic event timers. Perhaps not at first, but it won't be long before users discover the limitations of a simple timer event are too restrictive. Maybe the wizards at R&D already have an idea for how to cleanly implement timer events. Maybe it looks a lot like a user event. I don't know... I do know that events in general hide a lot of stuff from LV developers in an effort to keep them easy to use. It's that hidden stuff that causes the nasty surprises nobody likes. I'm not sure what you mean by, "other events we did not expect." If an event structure is registered for an event, it should expect to receive that event notification at any time. An event structure can't receive messages it didn't expect. My apologies Justin. I didn't intend it to be shot at all--cheap or otherwise. -

I've had jobs where I've almost died from boredom... does that count?

-

Unexpected Event Structure Non-Timeout Behavior

Daklu replied to Justin Goeres's topic in LabVIEW Bugs

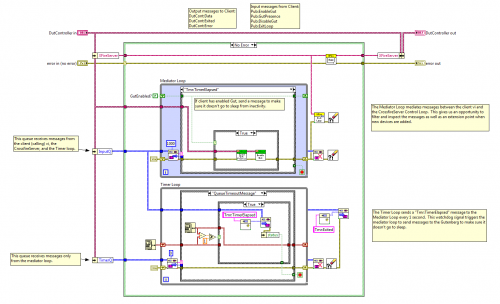

I didn't know about this behavior, but I agree with Chris. I also think that while configuration options do make it faster to write code, they also tend to make the code harder to understand and maintain. Requiring developers to explicitly define what they want to do by writing code isn't necessarily a bad thing. It *can* timeout, it just *doesn't* because it keeps receiving events before the timeout expires. It is a common use-case, but it is also (IMO) an overused and misguided use case. In principle, anything that is in the timeout case (case structure or event structure) should be code that is entirely optional to the correct operation of the component. The component has no control over when it receives messages/events so there is no way to guarantee the timeout case will execute; ergo, putting code in the timeout case that "needs" to be executed is a flawed approach. The "correct" way to handle the situation is to create a parallel loop that sends messages/user events at regular intervals to trigger those other tasks. Using a timeout condition for necessary processing is a convenient shortcut--and I sometimes use it--but it shouldn't be considered a robust solution. (Image taken from this post.) On a recent thread I think alluded to a TimerSlave component I have partially finished. It's essentially a version of the timer loop in the image above, encapsulated in a slave loop object and exposing the timing and message name parameters as part of the creator method. I've been thinking about releasing it as part of LapDog. Would something like that be useful to you? All the more reason to use queues instead of user events. -

Second CLD Practice - Object Oriented

Daklu replied to SteveChandler's topic in Certification and Training

Point of warning--I took the CLD about a year ago and the problems on the sample exams (at that time--I haven't looked to see if they have rewritten them) did't have as many requirements as the problem I was given for the exam. I was able to complete the sample exams in 2-3 hours each, but I didn't finish all the requirements on the real exam. There are lots of advantages to using OOP in real-world apps. Unfortunately, none of those advantages are relevant for the CLD and the disadvantages (namely the time it takes to recreate the frameworks from scratch) are very difficult to overcome. For the exam, I suggest sticking with the standard QSM implementation pattern. (Ignore the alarm bells going off in your head while you're writing your code.) -

Thoughts on Class Data default values

Daklu replied to blawson's topic in Object-Oriented Programming

To quote the teflon president, "I feel your pain." This is one of those design decisions R&D had to make that doesn't have a clear "correct" solution. Consider what happens if you save an object to disk with default values, update the .ctl with new default values, and load the saved object? Should the loaded object contain the old default values or new default values? The answer is, it depends. NI had to choose something, so they implemented it so the object loads using the new default values. Not all data types lend themselves to the default=null convention. Numbers are particularly problematic. Suppose you have a Sum class that adds two numbers and maintains the sum internally. When you load a Sum object from disk where sum=0, is that valid or not? Maybe it's the result of adding -3 and +3? If your serialized objects have data that could be "invalid," it's safer as a general practice to include a private "IsValid" flag. -

Thanks for the encouragement. On the positive side, I should have more time to work on LapDog.

-

Last Tuesday I learned my contract was going to end on Friday, so I lined up a LV dev position with another group that I was supposed to start today. On the way in to work this morning I checked my voice mail to discover the person who was planning on leaving (thus opening the position for me) decided to stay (thus closing the position to me.) Not exactly the kind of news that kicks off a great week. Here's hoping the economy picks up soon...

-

It isn't. I interpreted Black Pearl's suggestion to "Always wire the error wire to the SubVIs" as connecting to the error in terminals of all the sub vi's on a given block diagram, not connecting the error terminals *inside* the sub vi's block diagram. Could be I completely misunderstood... "Save the Pixels." We should get bumper stickers made... Same with me. Even my accessors have error terminals even though they don't contain an error case. Left to Right data flow? I'm thinking of writing an article describing my spiral data flow model... Absolutely agree. Expecting to tack on error handling when the functional code is already in place is asking for trouble.

-

THAT part I understood... everything beyond it is greek. Certain edits to the class data will make the data unretrievable. Example: 1. Put an numeric control in class, assign it the number 5, and save it to disk. 2. Load it from disk and verify you get the number 5. 3. Open the class .ctl, delete the numeric control, and apply the change. 4. Now add a numeric control back to the class .ctl, making sure it has the same name and apply the changes. 5. Load the object saved previously and read the number. Intuitively you think it should be 5, but it has reverted to the default value of 0. The saved data cannot be recovered unless you can revert to the class .ctl file to a version prior to the delete.** Why? Mutation history--it's a double-edged sword. The class remembers that you deleted the first control and then added the second one. As far as it's concerned the new numeric control is for a completely different data member. Since the saved object doesn't contain data for this "new" member it is assigned the default value of 0. Like the issue with propogating multiple typedef changes to unloaded vis, this isn't really a bug either. NI had to define some sort of default behavior and this is as good as any. (I do really wish there were a way to override the default behavior though.) Data persistence is one area where I think typedeffed clusters are easier to use than classes. They are much more transparent to the developer. If you know what to look out for you can be really careful with direct object persistence. Whether or not you can prevent other developers from messing it up is another story.

-

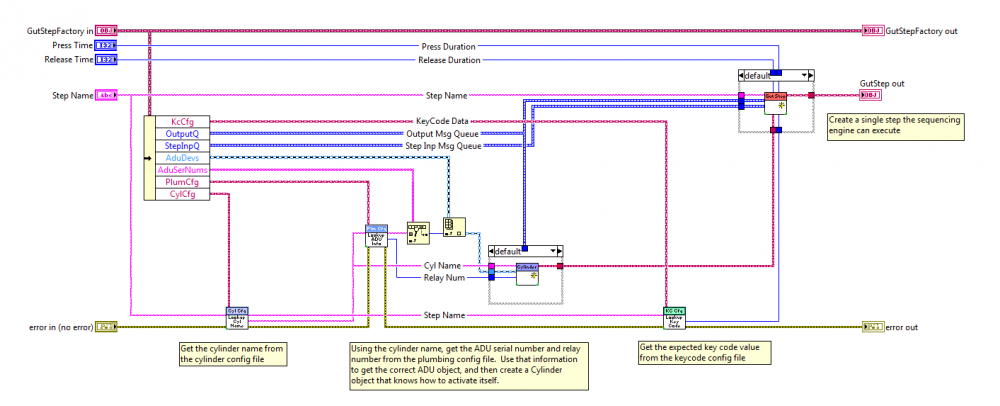

Depends on what you're doing in the sub vi. If all you're doing is calling methods sequentially on a single object, then yeah, the error wire isn't going to affect parallelism. Personally I find I don't do that very often. I don't know if that's good, or bad, or neither... just that I don't run into many situations where I end up doing that. Often in my code there are far more opportunities for parallelism by not making execution dependent on the error wire. If you look at the diagram posted above, LookupAduInfo must be called prior to LookupKeyCode only because of the error wire. In this particular case any potential savings are insignificant. If each sub vi on the diagram had multiple layers of additional sub vis to drill through and I allowed the data to dictate execution flow instead of the error wire, who knows? Just to clarify, I don't mean to imply that I think wrapping an entire sub VI block diagram in an error cluster is pointless. In fact, there specifically *is* a point in doing it--to ensure the sub vi code doesn't execute if there's an error. Turns out that's not a goal that contributes to my code quality or clarity when applied universally to my projects. *shrug*

-

I'd be very, very careful with that particular flavor of kool-aid. There are lots of things that can happen during normal development that can make your saved objects unreadable by the new source code. What makes it all the more dangerous is there's nothing in the LV dev environment warning you that a particular edit will change the way a persisted object is loaded, (or if it can be loaded at all.) I imagine those large documents will be pretty hard to recreate if they unexpectedly become incompatible with the software. Is there any way you can improve the speed of your custom serialization method? I've never done any benchmarking, but orders of magnitude difference between directly writing an object to disk and creating a method that unbundles the class data and writes it to disk? That raises the "something's not right" flag in my head...

-

By "instance" I'm guessing that you're referring to the memory allocated that stores the actual user data, right? Is it okay to obtain multiple pointers to the singleton? I found a couple different c++ singleton implementations (here, here, and here) and if I'm reading them right (big 'if,' it's been 15+ years since I've written a lick of c++ code) it looks like they're doing essentially the same thing AQ is doing--storing the data in some sort of static member (dvr, queue, fg...) and initializing it on the first call. You can split the wire, but in AQ's implementation there aren't going to be any copies. None of the class.ctl data is set to anything other than the default value... ever. Since all instances of the class always have default values, they all point back to the memory location set up for the default object. The first time a class method is called the DVR gets initialized and a second copy of the class.ctl data has to be allocated, but there will never be more than two, and only the second one will ever be accessable by the objects. I must have missed this earlier in the thread... I'll have to try and track down the reasoning tomorrow.

-

I agree... which leads me to believe your point has completely passed me by. If the LV implementation behaves the same way as singleton implementations in other languages, why isn't the LV version a singleton? Or maybe I'm missing some of the finer points of what it means to be a singleton? (I've not used them much in any language, and I'm certainly no authority on them.)

-

How hard was the CLD? -- Not technically difficult, just a lot of requirements in a very short amount of time. Did you pass on your first attempt? -- Yep. How much experience did you have prior to taking the test? -- About 3.5 years. Did you take the NI courses and if so which ones? -- Nope. Were you confident after the test that you passed? -- I was much less confident after the test than I was before the test. What was your score? -- Not as high as I expected prior to the exam. I think it was in the 80's but to be honest I don't remember. Did you finish with any time to spare? -- Heh... good one. Was the project complete? All requirements met? -- No. No. Did you fit everything into a 1024x768 diagram? -- Dunno... my monitors aren't that size. When did you take the exam? -- About a year ago. How did you document algorithms? VISIO or something else? Just pencil and paper state charts or does that have to be in the code? -- Comments on the block diagram. How much time did you spend on studying the requirements and planning before starting to code? -- I spent a little over 20 minutes. Then I realized it was taking too long and dove in.

-

I'm curious why you say it isn't really a singleton? It has all the same behaviors as a singleton. At most LV will allocate enough memory for two instances of the class type--one that never changes for default singleton objects and one that the DVR refnum points to that all the singleton objects read and write to. I'll have to agree with Jon on this. Any singleton is going to have to implement a lock/unlock mechanism if users are doing read-modify-write operations. IMO, exposing the DVR (or queue) refnum via accessors is leaking too much of the class' implementation. If you need to do read-modify-write operations on your singleton I think adding Lock and Unlock methods is a much more robust solution.

-

Something else to consider--by stringing the error wire through all sub vis you're probably imposing artifical data flow constraints on your software, limiting the compiler's ability to create parallel clumps. As far as I know, NI tweaks and improves the compiler with every major release. Adopting general coding practices that optimize compiled code for one release might turn around and bite you in the backside with the next release. Wouldn't a better overall strategy be to wire your code for clarity and logical soundness first, then go back and apply optimizations if they're needed? I'm curious about this... do you try to optimize the error execution path or do you mean you just skip unnecessary delays? I can understand skipping lengthy processes when they aren't needed for the current error condition, but I don't see why it would matter if it takes 100 ms or 75 ms to execute the error path. Doesn't the very nature of there being an error mean the system or component is in an unexpected state and all bets are off? It's not difficult to implement similar behavior in Labview. It's not practical on the vi level by any stretch of the imagination... but it's not difficult. All you have to do is create an ExceptionHandler object that contains an instance of itself and has a single HandleException method. As you progress down your call stack each vi wraps it's own ExceptionHandler object (if it has one) around the one it was given and passes it to the sub vi. See the attached example. (I first proposed the idea here.) Some differences: -LV doesn't inherently support exceptions so you have to check for errors, raise your own exceptions, and invoke the exception handling code yourself. The code shows one example of how to do it. -In other languages often execution continues at the procedure that handled the exception. In this example the exception bubbles up until it is handled and execution continues with the sub vi that generated it, not with the sub vi that handled it. I don't know if "resume where handled" behavior is possible with LV without brute force. I've not used this in a real app and it's clearly too heavy to implement for each vi, but it could be useful at an architectual level when errors occur at lower layer that you want to manage from a higher layer. ExceptionHandling.zip

-

Woo hoo! Hmm.... so define "operate independently" for me. (I'm not being pedantic, honest. These are the thoughts running through my head as I wonder exactly what you mean.) Does that mean you can build the core into an executable and it will execute without any errors, even with the UI layer removed? Is the lack of errors a good enough qualifier, or does it actually have to be useful? (i.e. Does it have be able to *do* some of the things that would normally be accomplished via UI interaction? Say... via the command line and batch files.) If useful functionality without a UI is a requirement, how much of the UI's usefulness has to be replicated at the command line? 50%? Why not 49%, or 51%? Any arbitrary cut off between 0% and 100% puts us in the purely subjective realm of figuring out exactly what should be considered "useful" and how useful it is relative to everything else. Since you're proposing a concrete standard, subjectivism isn't allowed. So we have to choose... Using the 0% bar reduces "decoupled" to mean the same thing as "no run-time dependency." Using the 100% bar makes "decoupling" an impossibility--no command line interface can offer all the same things as an event-driven gui. < Thinking out loud > Seems to me that in addition to different degrees of coupling, there are different kinds of coupling and its meaning is very context-dependent. Maybe I have co-worker who asks me if I've written any code to find prime numbers. "Sure, give me a couple hours to decouple it from my source and I'll send it to you." Clearly I'm referring to source code dependencies. Could that bit of code "operate independently?" Maybe... I probably could compile it into an executable but it's going to need more code before it will do anything useful. There's also run-time coupling. If component A (the core) and component B (the UI) collaborate at run-time to accomplish some task, they rely on each other to do certain things. Perhaps A could have collaborated with C (the command line prompt) instead of B. I don't think that matters. At that point in time A and B are collaborating--and coupled--while accomplishing that task. I can't see how "decoupled" can be anything but a relative and subjective term that, like "warm," resists a black and white definition. When is something decoupled? Here's my definition... Decoupled - A chunk of executable or source code separated enough from everything else so I can do what I want to do without requiring more effort than I want to put into it. When I create really thin UIs there ends up being a lot more messaging between the components as I try to keep the data on screen and the data in the core synchronized. That's not necessarily a bad thing but it does take longer to implement and can be harder to follow the execution path. I'm still trying to find the right balance. In my last project I recently switched from a thin UI to a slightly thicker UI. The UI code, not the core code, manages all on-screen data (test config, sequences, etc,) including loading and saving the files.** That data is passed to the core as parameters of the StartTest message. The result is much simpler; I was able to eliminate at least 2/3 of the messages between the two components. There's always tradeoffs and almost never a clear-cut answer. That's part of the fun, yeah? (**I have a class for each of the files that does that actual loading and saving, so it's not directly managed by the UI. The UI just retains those objects until the test is started.)

-

I have a few things to add but I'm keen to see others opinions on error checking. The "Step" class is part of a Sequencer library that I use for sequencing tests. GutStep is a child class written to execute a test on a specific product. Either that or it's a Nazi parade march. Can't remember which...

-

Kugr! Dropping by for your bi-yearly check in? If you want to start an error handling thread and copy over the content you think is worthwhile that's fine with me.

-

I understand what you're saying and agree with you in principle. What I'm struggling with is the implied idea that there is an arbitrary line you can cross where your UI transitions from coupled to decoupled. "Coupling" is a term we use to describe how much component A relies on component B to do something useful. Or to look at it another way, how extensively we can change component B without affecting component A. For any two components to work together to do something useful there has to be some degree of coupling. Even if the UI and core can each be on different computers they're still coupled. At the very least they have to agree on all the details of the communication interface--the network transport protocol, the set of accepted messages, the message format, etc. Taking a step back they also have to agree on who should implement the functionality required for each feature. Take loading a file as an example. The UI passes a path to the core of a file that needs to be loaded. Who is responsible for making sure the file exists and is in the correct format? (The answer isn't important. The point is they have to have reached an agreement.) Looking at the responsiblities a little more abstractly we could even say the core is coupled to the UI because it needs the UI to tell it what the user wants. Sure the core can execute without the UI, but can it do anything useful for the user? I think what you're describing is better characterized as source code dependency. Injecting UI references into core code definitely makes the core dependent on the UI's source code and creates a relatively high degree of coupling. Breaking source code dependencies is a huge step towards reducing the coupling, but strictly speaking, the only completely decoupled components are those that don't interact at all... anywhere... ever.

-

[General FYI to readers... I've added a SlaveLoop template class to the original post.]

-

There are a couple things I do that make your specific problem a non-issue for me. (Factory method image copied from my post above.) First, I've ditched the rule of wrapping all sub-vi code in an error case structure. (Blasphemy! Burn me at the stake!) About 9 months ago AQ and I had a discussion about this. He termed it, "hyper-reactionary error handling" and talked about the impact it has on the cpu's resources. He said, These days I almost never wrap an entire code block in an error case. Many of my sub vis don't have an error case at all. The questions I ask myself when deciding about an error case are: 1. Do I need the sub vi outputs to be different if there is an error on the input terminal? If so, then I'll use a switch prim or case structure somewhere on the block diagram, though it may not wrap the entire code block. 2. Will the sub vi exit significantly faster by skipping the code on the block diagram when an error is on the input terminal? Yes? Then I'll wrap at least that section of code in an error case. The code I'm looking at here are things like For Loops containing primitive operations that don't have error terminals. String operations, array operations, cpu intesive math, etc. If a sub vi or prim has an error input terminal I don't worry about it's processing time. It will respond to the error input terminal appropriately and I assume (for simplicity) that its execution time = 0 if there is an error in. In the diagram above I'm doing a lot of data compilation to gather the information necessary to create a GutStep object. However, the only primitive operations on this bd are Search Array and Index Array. Is the time saved in those rare instances where there is an error in worth the cost of error checking the other 99.9% of the time when there isn't? Nope, not in my opinion. In fact, this entire block diagram and all the sub vis execute exactly the same code regardless of the error in condition. (I know the sub vis ignore the error in terminal because of the green triangle in the lower left corner.) But low level performance isn't the main reason I've ditched it. I found it adds a lot of complexity and causes more problems than it solves, like the issue you've run into. You've wrapped your accessor method code in an error case because that's what we're "supposed" to do. Why does the accessor method care about the error in terminal? Is unbundling a cluster so expensive that it should be skipped? Nope. Is it the accessor method's responsibility to say, "I saw an error so I'm not returning a real queue refnum because some of you downstream vis might not handle it correctly?" Nope. Each sub vi is responsible for it's own actions when there is an error on its input terminal. In pratical terms that means my accessor methods never have an error case. They set/get what's been requested, each and every time. My object "creator" methods almost never have an error case, also passing the error wire through. Most of my "processing" method don't have an error case either. In the diagram I have 3 different "Lookup" methods. Each of those encapsulates some processing based on the inputs and returns an appropriate output. None of them change their behavior based on the error in terminal's state. However, each of the three Lookup methods are capable of raising an error on their own (I know this because the lower right corner doesn't have a colored triangle,) which would ulitmately result in an invalid GutStep object being sent out. Is it the factory method's responsibility to try and prevent downstream code from accidentally using an invalid GutStep object? Nope. It's the factory method's responsibility to create GutStep object to the best of it's ability. If an error occurs in this method or upstream of this method that might cause the data used to create the GutStep object to be something other than expected, this method lets the calling vi know via the error wire. It's the responsiblity of the calling code to check the error wire and respond according to it's own specific requirements. That's a little bit more about my general approach to error handling. It's more geared around responsibilities--who should be making the decision? (Responsibility-based thinking permeates all my dev work.) I've found that, in general, much of the time I don't really care if there's an error on the wire or not. Many of the primitive functions and structures already know how to handle errors. What do I gain by blindly adding more layers on top of it? Complexity in the form of more possible execution paths, each of which needs to be tested or inspected to verify it is working correctly. Thanks, but I'll pass. ------------ So, now that I've talked your ear off, it sounds like you can solve your immediate problem by removing the error case from your accessor method. All the above is justification for doing that. Of course, that change may cause problems in the rest of your code if you use the <Not a Refnum> output for branching execution flow.

-

Here's how I approach error handling... (hopefully I can explain in coherently.) I imagine each loop as a separate entity in my app. Each of these loops has a specific responsibility: mediator, data producer, data processor, etc. Then, within the context of that loop's responsibilities, I figure out how I want that loop to respond to errors. I try to make each loop self-recover as much as possible. Sometimes that means the loop will "fix" the error on it's own. (Got a bad filename for saving data? Create a temporary file name instead.) Lots of times the loop simply reports the error and goes into a known, predefined state, after which the app user can retry the process that caused the error. I know that's a pretty vague description. Here are some more specifics. They all tie together into a system that works well for me, but it's past the stupid hour and I can't figure out how to lay it out all nice and neat... --- a. All my loops support bi-directional communication. A single queue for receiving messages (input queue) and at least one queue for sending messages to other loops (output queue(s)). --- b. The preferred way to stop loops is by sending it an "ExitLoop" message. --- c. A loop automatically exits if it's input queue is dead. (Can't receive any more messages. Fatal error... Time to stop.) --- d. The loop automatically exits if it's output queue(s) is dead. (Can't send any more messages. Fatal error... Time to stop.) --- e. The loop always sends a "LoopExited" message on its output queue(s) when it exits to let whoever is listening know. If the output queue is already dead the error is swallowed. --- f. I don't connect a loop's error wire directly to the loop's stop terminal. I want the loop to stop when *I* tell it to stop, not because of some arbitrary unhandled error. (Cases c and d are safety nets.) LapDog's messaging library (especially the DequeueMessage method) is an integral part of my error handling. In fact, having the error wrap around the shift register and go back into the DequeueMessage method's error in terminal is the main way errors get handled. DequeueMessage reads the error on the error in terminal, packages the error cluster in an ErrorMessage object, and instead of dequeuing the next message it ejects a message named "QueueErrorInMessage." In that message handling case I'll check the error and decide what action to take. When an owning loop gets a message that a slave loop has exited, it decides whether it can continue operating correctly without the slave. If so, it will make adjustments and continue. If not, it shuts down its other loops and exits too. Hmm... do you mean to say the prior error on the queue accessor's error in terminal triggered the error case, which in turn returned 'not a refnum' and left the queue active?