Posts: 1,824

Days Won: 83

Everything posted by Daklu

-

Why people would trust so much personal information to an advertising company is beyond me.

-

This is not a case of one random scientist behaving badly. It is a case of several powerful scientists in the area of climate research conspiring to silence critics and manipulate data to achieve a political goal. The IPCC is not a scientific body; it is a political organization. If you read the links you will find many reports of obvious flaws in the IPCC's "peer review" process. Sustainable energy is a separate (though related) issue from AGW. I happen to agree we should be actively researching energy alternatives. The correct way to pursue that goal is NOT by feeding the public fear-based propaganda; it's by finding a real argument that changes the minds of your opponents. ----------------- Incidentally, AGW may be real, it may not be--but it's clear to me we need to step back and look at the issue more thoroughly and more transparently before we take action.

-

I've done some work with a Fanuc robot, though not nearly enough to consider myself an "expert" or even "fairly knowledgeable." More along the lines of "knows a few pitfalls and can usually avoid crashing into things." I don't know the model or payload of our robot but it has a range of ~6' end to end.

One thing to be aware of is "singularities." These are joint position combinations within the working area from which the robot controller cannot move because the math returns motion values of +/- infinity or there is an infinite number of solutions. (Such as if two axes are aligned concentrically and parallel.)

On the Fanuc robot there are two types of motion available: Joint motion and Coordinate motion. With joint motion you program the value you want each joint to take. When executed, the controller sets the speed of each joint's movement so that all joints reach their desired positions and stop at the same time. The path and orientation of the end effector are not directly controlled by you during the motion; they are a result of the combined motion of all the joints. With coordinate motion you define the motion in coordinate space. The controller directs the joints to move so the end effector travels in a straight line at a constant speed from point A to point B.

Singularities do not exist in joint motion. They do exist in coordinate motion and make it difficult to access certain regions of the working area. For example, our robot is installed on a wall. Trying to move through the region roughly defined by having the upper arm parallel to the floor and the lower arm pointing straight at the floor was problematic. We hit singularities often because the wrist twist would have to turn infinitely fast (or faster than the robot is capable of) to maintain orientation and speed of the end effector. Our solution was to move the device being tested so it was positioned under the upper arm instead of right below the elbow.

Other solutions could have included programming a coordinate motion path around the singularity or switching to joint motion to get through the singularity. So although a robot may be able to reach all of the locations in its range, it cannot always reach them all using the kind of control you want or need.
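To make the "math returns +/- infinity" part concrete, here is a minimal sketch using a planar two-link arm, which is a textbook toy and not the six-axis Fanuc described above. The function names and unit link lengths are assumptions made for illustration. For this arm the Jacobian determinant is `l1 * l2 * sin(theta2)`, so the arm is singular when the elbow is fully straight, and the joint rates needed to hold a fixed Cartesian velocity grow without bound as the elbow angle approaches zero.

```python
import math


def jacobian(theta1, theta2, l1=1.0, l2=1.0):
    """Jacobian of a planar two-link arm (angles in radians)."""
    s1, c1 = math.sin(theta1), math.cos(theta1)
    s12, c12 = math.sin(theta1 + theta2), math.cos(theta1 + theta2)
    return ((-l1 * s1 - l2 * s12, -l2 * s12),
            (l1 * c1 + l2 * c12, l2 * c12))


def determinant(theta1, theta2, l1=1.0, l2=1.0):
    """Determinant of the Jacobian; analytically l1 * l2 * sin(theta2)."""
    (a, b), (c, d) = jacobian(theta1, theta2, l1, l2)
    return a * d - b * c


def joint_rates(theta1, theta2, vx, vy, l1=1.0, l2=1.0, eps=1e-9):
    """Joint velocities that give the end effector Cartesian velocity (vx, vy)."""
    (a, b), (c, d) = jacobian(theta1, theta2, l1, l2)
    det = a * d - b * c
    if abs(det) < eps:
        # At a singularity the inverse does not exist: no finite joint rates work.
        raise ValueError("singular configuration")
    # Closed-form inverse of a 2x2 matrix applied to (vx, vy).
    return ((d * vx - b * vy) / det, (-c * vx + a * vy) / det)


if __name__ == "__main__":
    # Same straight-line request, with the elbow getting closer to straight.
    for elbow in (0.5, 0.1, 0.01, 0.001):
        rates = joint_rates(0.3, elbow, 1.0, 0.0)
        print(f"elbow={elbow:<6} max joint rate={max(abs(r) for r in rates):.1f}")
```

Joint motion sidesteps the problem because it never inverts the Jacobian: the joint targets are given directly, so there is nothing to blow up.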

-

So if I'm understanding this correctly, the action engine has a giant cluster input containing fields for every piece of data that ever needs to be passed into it. And then you have a sub vi for each unique action that the AE can perform. This sounds exactly like a class, except less flexible and more complicated. Why wouldn't you simply use a class?
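For readers outside LabVIEW, the comparison can be roughed out in a text language. This is only an analogy under stated assumptions: the closure variable stands in for the action engine's persistent state, the `params` dict stands in for the catch-all cluster input, and every name here (`Action`, `make_action_engine`, `Accumulator`) is hypothetical.

```python
from enum import Enum, auto


class Action(Enum):
    INIT = auto()
    ADD = auto()
    GET = auto()


def make_action_engine():
    """Action-engine style: one entry point, an action selector, one big input."""
    state = {"total": 0}  # persistent state hidden inside the engine

    def engine(action, params):
        # 'params' must carry every field that any action might ever need.
        if action is Action.INIT:
            state["total"] = params.get("start", 0)
        elif action is Action.ADD:
            state["total"] += params["amount"]
        # Action.GET changes nothing; every action returns the current total.
        return state["total"]

    return engine


class Accumulator:
    """Class style: each action is a method with only the inputs it needs."""

    def __init__(self, start=0):
        self._total = start

    def add(self, amount):
        self._total += amount

    @property
    def total(self):
        return self._total


if __name__ == "__main__":
    engine = make_action_engine()
    engine(Action.INIT, {"start": 10})
    engine(Action.ADD, {"amount": 5})
    print(engine(Action.GET, {}))

    acc = Accumulator(10)
    acc.add(5)
    print(acc.total)
```

Both versions hold the same state and expose the same operations. The difference the question points at is that the class gives each operation its own signature and allows more than one instance.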

-

You know, I've never been one to subscribe to the 'left-wing media conspiracy.' Sure, they tended to lean towards the left, but on the whole I figured they were just trying to do their job well. However, the complete absence of any major media outlet reporting on this story leaves me baffled and very disappointed. Why aren't they reporting on it? Fox News (which I neither condemn nor follow) has actually increased their credibility in my view. (Though I still won't watch it.) I know! I can hardly believe it myself!

-

Guns, abortion, and religion discussions tend to devolve into scorching hot flame fests. I don't hang out in the lounge much but from what I've seen the discussions here are much more civil than most places. I hope my disagreements don't come across as flames--they are not intended to be. My involvement in debates (both political and Labview based) has two goals: Testing my own ideas. By throwing my ideas out for public scrutiny others can challenge them and point out errors. (Maybe my opinions are based on bad data, maybe there's new information to support contrary opinions, maybe there's logical inconsistencies in my arguments, etc.) If the counter argument is strong enough I change my opinion. Digging to the root of others' ideas. By questioning others' ideas (sometimes pointedly, and often taking the role of Devil's advocate) and finding out what drives their opinion I can often uncover additional information or different ways of looking at the issue. Sometimes others change their ideas because of flaws in their argument. No intended flaming here. (In fact, I suspect you and I are probably fairly closely aligned.) I agree with you, and incidentally, so does the Supreme Court. The problem is "prudent" is a very subjective term. There are forces in the US who believe the only "prudent" thing to do is remove all firearms from citizens. Agreed, on all counts. The problem I have with it is the wording. It is too ambiguous. It is worded in a way that those who promote banning guns outright can use it to pursue their goals. There are many cases where laws that were intended for one thing ended up having unexpected side effects or were consciously used in ways that the authors didn't expect or necessarily approve of. If it were worded in a way that clearly prevented its use as a way to impose more restrictions on US citizens, I would support it. I'd prefer not to give the gun ban crowd that avenue of attack. I have heard this argument before and used to agree with it. 
The difference, in my mind, is that owning a gun is a right. Driving a car is not. It is (as my driving instructor pointed out many years ago) a privilege. Pretend there were a large, well-funded, vocal movement with the goal of banning all cars. One of their strategies is to consistently put more and more restrictions on the ability to get a driver's license and purchase cars, all in the name of public safety. After a while you have to draw a line in the sand and say, "no more." From a rights perspective (constitutional, not political) owning a gun is more like being able to vote. There are very few people who promote the idea of a competency test before we are allowed to exercise that right. Neither should we be forced to prove competency before we are allowed to exercise our right to own a firearm. As an aside, most people who purchase firearms are responsible enough to take some sort of safety training. Proving competency before being allowed to purchase a weapon will not prevent criminals from obtaining weapons and it will not prevent accidental shootings. As far as I can tell it serves no purpose other than to construct another obstacle for those who wish to own a firearm. Let me just point out that even though we have a competency test for driving a car, there are still many incompetent drivers on the road. How many auto accidents occur in the US every day? Barring some sort of unforeseen mechanical failure, every single one of those is due to a driver who was not operating his vehicle correctly. Rats... I have to go to work... *sigh*

-

After reading through several documents I'll have to disagree with you. The headline is exaggerated, but it's not complete horse manure. The potential clearly exists for gun-ban advocates to use this as a way to implement policies in the US that effectively restrict or eliminate citizens from owning and using guns. Hmm... what's the Programme of Action? Adequate laws? Who decides what's adequate? Regulating the transit or retransfer of weapons? Do I have to get permission from the government to buy a handgun from my neighbor or have a rifle in my trunk when I drive to a neighboring state? This is completely circular and makes no sense. By definition the "illegal manufacture, possession, stockpiling and trade of small arms" is, well... illegal. Suppose in the nation-state of Dakluland there are no laws whatsoever regarding the manufacturing, possession, stockpiling, and trade of small arms. Am I in compliance? This is an open ended clause that can be used to justify any imaginable restriction on gun owners. A national database of all gun owners? No. I'm sure this comes across as a rabidly right wing stance to take. Up until about a year and a half ago I held the opinion that having that information on record is not harmful. After all, it can help solve crimes and the government isn't going to go around and confiscate all our guns. Then I discovered this. My opinion changed that day. "Authenticated end-user certificates?" What exactly does that mean? I have to be "certified" to own a firearm? If I have to get permission and certified to do something, that doesn't sound like a right. Their wishes aside, the actions they are promoting (increased legislation, more restrictions, etc.) can be used to do just that. Do I believe there's an overriding UN conspiracy with the goal of disarming American citizens? Not really. 
But most European nations have vastly more restrictive gun laws than the US and personally I'm not interested in conforming to their idea of what's necessary and appropriate. ------------------------ There's a lot more I found that I haven't posted simply because of time constraints. The measures promoted may not (or may, I don't really know) be intended to be a global ban on guns, but they clearly provide an easy avenue of attack for anti-gun proponents to pursue their agenda. I see the NRA's activities in this matter as a proactive defense.

-

Thanks for the link Yair and the explanation Norm. I'll have to retrain my brain not to think of an "event queue" working behind the scenes as that leads to an expectation of ordered execution.

-

Or in the case of gun control, the Brady Campaign. I think the right side gets called out on it more, but I'm not convinced they do more of it. Why? This is pure speculation on my part but in general, people with more education tend to drift to the left and in general, they tend to be more politically active. That means more smart people are looking critically at the claims made by conservative organizations. ----------- Full disclosure: Politically I'm almost dead-nuts in the middle on any of the typical online quizzes. My left-leaning friends think I'm a conservative nut job, my right-leaning family thinks I'm a liberal moonbat. (Love that word.) Over the last 5 presidential elections I've voted for the 'D' twice, the 'R' twice, and the 'I' once. At heart I'm probably mostly libertarian but practically speaking I don't believe there's any way we can get there from here.

-

Congrats! A word of warning... #2 creates more than twice the work of #1. I have a theory that the total work involved in raising children is a function of the factorial of the number of children you have, though I admit I chickened out after #2 and didn't sufficiently test my hypothesis.

-

Do you really believe this phenomenon is restricted to right-wing organizations?

-

Aww, Chris... do you have to go? I only just came out to play and so enjoy healthy debate! What do you consider "reputable?" I'd hate to take the time to link sources only to have you dismiss them out of hand as not reputable enough. Unfortunately, "climate change" (and "global warming") is an ambiguous phrase. The precise meaning--is the climate changing?--is largely meaningless without constraints on the timeframe. Is the climate today different than it was 3 million years ago? Not really. 300 years ago? Yep. 30 years ago? Maybe. 3 years ago? No. On the other hand, the political meaning and common understanding of "climate change" generally refers to climate change caused by human activities, or more specifically, by human production of CO2. This is what Climategate is about and I believe this is what Paul is referring to as the scam. (That's what I'm referring to anyway...) So although I'd love to discuss this with you I need to know which definition you're working with. If you restate that as '10 years is a pretty short time to reach conclusions in this instance,' I'll agree with you. Data is, after all, information in itself and doesn't need to be turned into it. Your professor was wrong. Data can be bad. For example, a faulty sensor that continuously outputs a single value produces bad data. I don't see how any interpretation or corrections can make anything useful out of it. (Although I suppose you could claim that wouldn't be "data.") I do agree with what I believe the idea behind his statement is. Namely, statistics don't lie. They can't--they are simply math. The lie comes in manipulating data and analysis to produce an interpretation that supports your preconceived views. I have to admit I find this argument unconvincing on several counts: The data and processes used to reach the conclusions arrived at by the CRU have never been made available for objective scientists to review, much less the general public and commentators. 
Sometimes commentators DO have a personal stake in interpreting data a certain, less than honest, way. However, in your earlier posts you seem to completely discount the notion that a scientist may also have a personal stake in a certain interpretation. (i.e. How does one provide a reference to show that additional research grants generally depend on the research bearing fruit? Are grant decisions completely random?) Scientists are human too and subject to the same desires as everyone else: Money, power, prestige, pride, etc. Why should we take it as a given that commentators can succumb to those influences while scientists are above reproach? This issue is more than just a few scientists fiddling with some numbers. On the whole it's about deliberate, considered, and unethical actions taken by prominent scientists to ramrod a political agenda down the throats of governments. I disagree the issue is that both sides spin data. Extremists at either end of any issue will spin data the way that makes their cause look the most favorable. That, while extremely frustrating to me, is to be expected. The issue here is that in this particular instance the extremists (defined as such by their willingness to spin the information) have obtained very prominent positions with the ability to influence economics on a global scale. (Hmm... in rereading perhaps the "issue here" you are referring to was more narrowly referring to Daryl's comment on 10-year cooling rather than the larger topic of climategate in general. If so, I withdraw my disagreement, though I stand by the rest of the statement.) Naw... then there would just be arguments about what to do about it. Or whose fault it is.

-

Paul, I think it's a big leap to think that because a few scientists have deleted data (if these affirmations have not been taken out of context), that all the climate scientists are covering the truth. You find a rotten apple, you expose it and take it out of the basket. You certainly don't throw the whole basket away. Francois, I think you're missing a key point. From what I have read, it appears (though I am not certain) these scientists may have provided much of the data the climate science community based their findings on. It's not simply a matter of removing a bad apple, it's that the bad apple is held up as a model of what "good" apples should look like. At the very least their papers have had a major impact on public policy, both within the US and in the IPCC. The economic impact of the proposed solutions based on their research is huge, both in monetary and geographical terms. In that context, I have to agree that this may be the biggest scam in history. I'm not saying human-induced global warming isn't happening or that those who believe it is happening are engaged in a huge conspiracy. (Perhaps it is, perhaps it isn't. Having spent time reading through various arguments and critiques from both sides I personally haven't seen any compelling evidence that it is.) I am saying these scientists let their personal/political motivations influence their work to the extent the results can no longer be accurately labelled "scientific." Yet they published and promoted their work as though it were, and successfully influenced public policy with it. The more I read, the more disgusted I get. This is the religion of science at its worst, and religious ideology of any sort should not form the basis of governmental action.

-

I agree that this is the major issue that nobody is talking about.* Regardless of the ultimate cause of climate change, be it natural or man-made, or our specific political leanings, these scientists have clearly violated the public trust through their manipulation of the scientific process. From withholding data (in clear violation of the law) from people who might be critical of their work to threatening peer review publications as a way to prevent them from publishing contrarian viewpoints, these guys blatantly overstepped the bounds of good science. This is completely and totally inexcusable. I've long been on the fence regarding human-induced climate change, but these emails cast a long shadow across climate change proponents. They also, unfortunately, reflect very poorly on the entire scientific community. (*I did find one very good article where a climate researcher acknowledges the mistakes of the CRU.) [Later] After reading a bit more it appears the emails may not be the most condemning part of the hacked information. That would be what appears to be a readme file ("Harry_Read_Me") containing notes by a programmer working on the code of their mathematical model. There's a story on the CBS blog here.

-

This is new information for me as well. Can you expand on it? Under what circumstances does an event queue not execute events in the order they are received?

-

Is this your first?

-

@jgcode re: sequence structures I've always been under the impression stacked sequence structures are considered bad style but flat sequence structures are okay, even when using multiple frames. Did I miss the announcement?

-

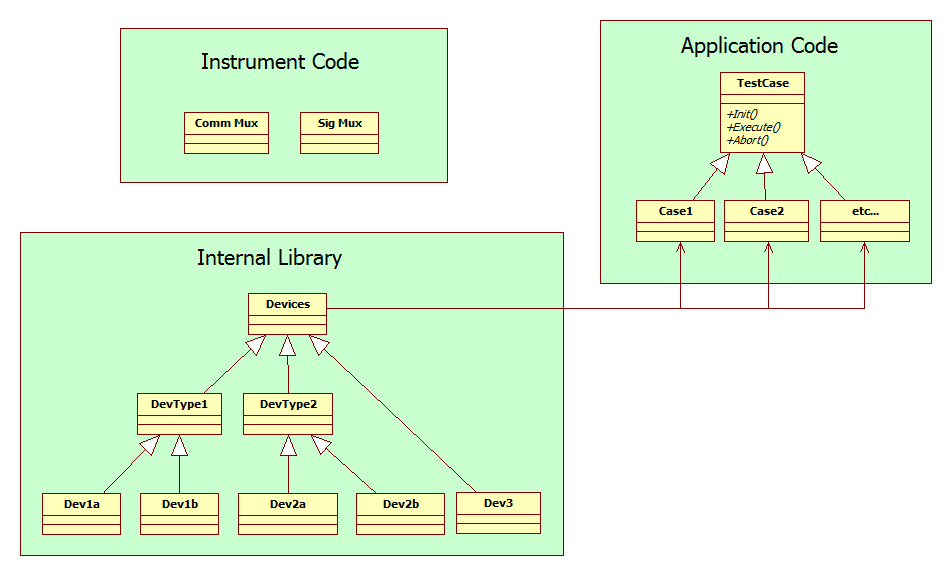

I've been thinking about starting an Object Oriented Design Challenge as a way to foster discussion about different ways to tackle problems in OOP applications. Lack of time has prevented me from contriving a simple problem to kick off the series, so I'll start with a fairly complex problem I'm facing.

Background: We have an (incomplete) test sequencer that by fortunate coincidence mostly works. Our application is set up with an abstract Test Case parent with the execution details encapsulated in child classes. We have an internal class library for our devices. The specific device to be tested (Dev1a, Dev2b, etc) is selected by the operator when setting up the test sequence. The device object is passed into each test case before executing it. The station, as designed, now needs to test devices in any one of four test positions. We have internally built switch boxes to control the communication path and signal path to each of the test positions. I wrote drivers (CommMux and SigMux) to control the switch boxes.

The problems:

1. When starting a test case, the initial switch box settings depend on the test case, the device being tested, and the test position. If any one of those changes it requires different settings for the switch boxes. Switch box settings may also be changed as part of the test case. (There is more to them than just a mux.) New test cases and new devices will be added in the future. No new test positions will be added to the station. (So they say...)
2. It turns out that we also have to communicate with the devices before any test cases are started, or have even been selected. This communication path can be determined based on the device and test position--it doesn't depend on the specific test case.

The questions:

1. With 37 test cases, 12 devices, and 4 test positions, how would you add this functionality into the application in a way that promotes the expected extendability, without creating a separate case for each combination?
2. How do you structure this in a way that the functionality is available to solve problem 1 and problem 2?

I have some thoughts on ways to go about this, but I'll withhold them for now so I don't pollute the idea space.
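For scale, 37 test cases x 12 devices x 4 positions is 1,776 combinations. As one possible direction only, and not the approach the post is holding back, the sketch below resolves settings from a small table of rules in which `None` acts as a wildcard and more specific rules override more general ones. The rule contents, route names, `TestX`, and the `resolve` function are all hypothetical; only `Dev1a` and `Dev2b` come from the post.

```python
# Each rule: ((test_case, device, position), partial settings). None = "any".
RULES = [
    ((None, None, 1), {"comm": "COM-P1", "sig": "SIG-P1"}),   # position default
    ((None, "Dev1a", 1), {"comm": "COM-P1-USB"}),             # device override
    (("TestX", "Dev1a", 1), {"sig": "SIG-P1-HV"}),            # test-case override
]


def resolve(rules, test_case, device, position):
    """Merge every matching rule, least specific first, so specific rules win."""
    key = (test_case, device, position)
    matches = [
        (pattern, settings)
        for pattern, settings in rules
        if all(p is None or p == k for p, k in zip(pattern, key))
    ]
    # Specificity = number of non-wildcard fields; the sort is stable, so
    # rules of equal specificity keep their table order.
    matches.sort(key=lambda m: sum(p is not None for p in m[0]))
    merged = {}
    for _, settings in matches:
        merged.update(settings)
    return merged


if __name__ == "__main__":
    print(37 * 12 * 4)                           # size of the full cross product
    print(resolve(RULES, "TestX", "Dev1a", 1))   # problem 1: all three known
    print(resolve(RULES, None, "Dev1a", 1))      # problem 2: no test case yet
```

Passing `None` for the test case means only rules that do not name a test case can match, which is how the same table covers the communication that happens before a test case is selected. Whether a table like this belongs in the device classes, the test case classes, or a separate collaborator is exactly the design question being asked.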

-

That's good to know. I'll have to keep my eyes open for a home learning kit. That's what I needed to know. Thanks for the feedback; now I won't waste a bunch of time experimenting with it. It's not that I want to do that specifically. I just recognize that is an available option but I had no idea what the pros/cons are of using that technique. You and rolf both indicated that using dll's in that way isn't a best practice. That's good enough for me. That being the case, if I want to have my executables dynamically link to reusable code libraries at runtime, what's the best way to distribute the libraries? Package them in .llb's? Any particular place they should go? Target computers may not have Labview installed so user.lib doesn't seem like a good option. If they're not in user.lib then the application developer needs to use the VI's from the runtime library, which takes me back to the original thought of distributing a package of components that are dynamically linked to from both the IDE and the executable, except that it's in a .llb instead of a dll. (I'm getting dizzy just thinking about it.) Or is it simply that dynamically linking to libraries at runtime is generally more trouble than it's worth? Maybe it's better to distribute reuse libraries as devtime tools and include all the necessary components as part of the .exe when compiling? It certainly is easier that way, but it would be nice to be able to update an application to take advantage of new library improvements without having to recompile the source code. My long-range motivation is that I need to design a reuse library to support testing our products during the development phases. Unfortunately the product map is quite complex. Each product has multiple revisions, which may or may not use the same firmware spec. We have multiple vendors implementing the firmware specs in IC's and each vendor puts their own unique spin on how (or if) they implement the commands. 
Some devices require USB connections, some require serial connections, some support both. Of those that support both, some commands must be sent via USB, some must be sent via serial, and some can be sent through either. Of course, the set of commands that can be sent on a given interface changes with every revision. (And this is only the start...) In short, it's a mess. I believe the original intent was to have a common set of functions for all revisions of a single device, but it has grown in such a way that now it is almost completely overwhelmed by exceptions to the core spec. I made sure I was using the call by ref prim for precisely that reason. My original experiments are on my computer at work. I'll post a quick example if I can repro them at home. Nope, no typedefs. I've learned that using typedefs across package distribution boundaries is often ill-advised. Exactly. The lack of readily available documentation of best practices means I have to spend a lot of time exploring nooks and crannies so I can develop my own list.

-

Maybe I've been approaching the idea of runtime libraries all wrong. I have always assumed the ability to create DLLs was used only as a way to make Labview code modules available to other languages. Labview seems to have built-in capabilities that make DLLs unnecessary when used with executables built in Labview. Am I wrong? Is it advantageous to create DLLs out of my Labview runtime libraries instead of dynamically linking to the VIs?

-

I didn't know that, and it's a pretty important bit of information if I'm building a framework to base all our applications on. (On the other hand, now I'm wondering why there's all the fuss over the non-palette vi's NI has made inaccessible. Those changes only prevent you from upgrading the source code to the next version--existing applications should work just fine.) Knowledge. I'm trying to understand the long term consequences of decisions I make today. I agree, but there is almost no information available describing best practices for creating reusable code modules or designing api's in Labview. So I have to turn to books talking about how to do these things in other languages and try to figure out which concepts do apply and how they translate into Labview. Hence the question about applying source compatibility vs binary compatibility concepts to Labview. [More thinking out loud] I think I agree with you. Getting back to what ned was talking about it makes more sense to me to approach it from the user's point of view. Source compatibility means a new version of the code doesn't break the run arrow in VI's using the code. Binary compatibility means a new version of the code doesn't break executables that link to the code. (Ned actually used the word binaries but I think that term is ambiguous in Labview due to the background compiling.) But there's potentially another type of compatibility that does not fall into either of those categories. Maybe it's possible to create changes that don't break the run arrow, don't break precompiled executables that link to the code, but do generate run-time errors when executed in the dev environment? If that is possible, what would it look like? Also, if we assume all our development is done in the same version of Labview, is it possible to create changes that cause source incompatibility or binary incompatibility, but not both? 
As I was poking around with this last night I did create a situation where a change to my "reuse code" allowed a precompiled executable to run fine but opening the executable's source code resulted in a broken run arrow. I have to play with it some more to nail down a repro case and figure out exactly what's happening, but I thought it was interesting nonetheless.

-

Doesn't matter. In principle binary compatibility, like source compatibility, only requires the entry and exit points to remain the same. What happens inside is irrelevant. (Assuming the behavior of the api has not changed.) If that's true then the following must also be true... The Labview RTE's aren't backwards compatible. (Since compiling only occurs in the dev environment.) If I distribute a code module that is intended to be dynamically called at runtime I have to distribute the correct RTE as well. If I have an executable created in 2009 that dynamically calls code modules created in 8.2, 8.5, and 8.6, when the executable is run I'll end up with four different run-time engines loaded into memory. (Either that or the application simply won't run, though I have no idea what error it would generate.) Uhh.... what? Since each vi saves its compiled code as part of the .vi file I'd be more inclined to think there is no source compatibility. (But that doesn't really work either...) Ugh... that gave me a headache.

-

Here's the relevant part from the book. I believe import statements in Java are simply an edit time shortcut and the compiled code would be fully namespaced. Wouldn't that make that snippet binary compatible but not source compatible? The book goes on to say trying to maintain binary compatibility is a useful goal but spending a lot of time on source compatibility doesn't make much sense in Java, which indicates binary compatibility can't be a subset of source compatibility. (Not in Java anyway.) Maybe source and binary compatibility are independent? I don't think that's correct. A recompile is done automatically if the vi is loaded in the dev environment, but in previous discussions I've been told the run-time engine doesn't do any compiling. Without a recompile the vis must be binary compatible. I have no idea what that means (maybe that's the point?)... but it makes for an interesting mental picture...

-

[Thinking out loud...] I've been reading a book on developing APIs (Practical API Design - Confessions of a Java Framework Architect) that touches on the difference between source compatible code and binary compatible code. In Java there are clear distinctions between the two. If I'm understanding correctly, in Java source compatibility is a subset of binary compatibility. If a new version of an API is source compatible with its previous version it is also binary compatible; however, it is possible for an API to be binary compatible without being source compatible. My initial reaction was that Labview's background compiling makes the distinction meaningless--anything that is source compatible is also binary compatible and anything that is binary compatible is also source compatible. In fact, I'm not even sure the terms themselves have any meaning in the context of Labview. After thinking about it for a while I'm not sure that's true. Is it possible to create a situation where a vi works if it is called dynamically but is broken if the BD is opened, or vice-versa? I'm thinking mainly about creating VI's in a previous version of Labview and calling them dynamically (so it is not recompiled) in a more recent version.