

Everything posted by Daklu

-

See... both of those comments reflect the prevailing opinion, one which I have long subscribed to and which has merit. Yet people do participate in the beta testing, and unless they spend an inordinate amount of time playing with Labview on the weekends (*cough cough*) they must be doing it at work, where there is the additional requirement of being productive. I'm just wondering what others do to minimize, not eliminate, the risk. I develop engineering test tools for internal customers, so my apps don't need the same level of polish you'd expect from hiring a third-party firm, and some risk may be acceptable. Does NI use their betas as a user research platform, or is it strictly for code verification? Is the beta feature set typically fairly well locked down? How successful have you been backsaving projects developed with the beta to previously released versions? If you compile a beta project into an executable, does it have the same instabilities you find in the IDE? If you've developed VIs using the beta, do you find you have to make lots of modifications when the final version is released, or is it usually a few minor tweaks here and there?

-

I'm looking for information on the differences between JKI's and NI's unit test frameworks. What advantages does NI's UTF offer over JKI's? What disadvantages? The BIG difference is one is free and the other costs $1500. I'm looking for other, not so obvious differences. I've used JKI's UTF enough to be comfortable with it; I've not used NI's UTF.

-

I know several users here participate in Labview betas when they come up. I typically do not, primarily because I'm worried the software I produce will be less stable or will have to be rewritten after I've used some beta feature that ends up getting cut. I can deal with design-time issues such as random crashes or incorrectly rendered graphics, but the software I deploy cannot be beta quality. (Unless, of course, it's a beta version of my software.) For those who do participate in the beta, how do you minimize risk to your projects while still adequately testing Labview's new beta functions?

-

It then goes on to assume that you will want to wash and dry resealable bags, which I personally have no interest in.* The product being researched is an "Easy-Wash" resealable bag, so the questionnaire focuses on those people who are willing to go to the added effort of doing that. The first questions are just to weed out the null data points. (I believe you've been weeded.)

*I do reuse them; I just don't put bread in the same bag I put my fish in.

-

It could be worse... you could be a balding game-show host from the '50s with a billboard forehead, a nose that would make Pinocchio jealous, and a grin that just reeks of, "Hey kid... want a piece of candy?" My daughter says she's "fun sized."

-

The author of Coders at Work commented on Joel's blog here.

-

These are the comments that summarize my thoughts: “duct tape programmers – sacrificing tomorrow’s productivity, today!” "Duct tape is a patch, not structure. You can’t build a house out of duct tape, but you can patch a hole until you have time to do the right thing."

-

Naming Conventions and Project Organization

Daklu replied to Daryl's topic in Application Design & Architecture

Check with the local NI sales team. Our local sales group has provided on-site "brown bag" lunch lessons designed to help developers get up and running quickly. They might be able to talk about the advantages of source control too.

-


Heck, I can't even settle on a naming convention for my own stuff. Sometimes my subVI names have spaces, sometimes I use PascalCase. Sometimes I'll use dot notation in the name to help describe the purpose of a given VI (VirtualNamespace.SubVi1). Sometimes my abstract base classes are appended with "Abstract," sometimes they aren't. Sometimes my base classes are appended with "Base," sometimes not. I just haven't found any general rule I'm completely comfortable with yet. About the only positive I can give is that my naming is generally consistent within a specific project. When comparing different projects, all bets are off.

-

I agree with you on this point. Adding unnecessary complexity is bad, and there certainly are software theorists in every company who tend to do this. (I suspect it's an unconscious attempt to keep their work interesting rather than intentional obfuscation for job security.) But there are times when complexity is both necessary and helpful, and I felt like Joel completely dismissed those cases. Duct tape on a go cart is fine. Duct tape on the wing of a 747 isn't. Ultimately the software should be as complex as it needs to be, no more and no less.

Two examples in particular really kind of bothered me:

"They were being extremely academic about their project. They were trying to approach it from the DOM/DTD side of things. ‘Oh, well, what we need to do is add another abstraction layer here, and have a delegate for this delegate for this delegate. And eventually a character will show up on the screen.’"

The article presents this as an example of the kind of overengineering duct tape programmers despise. What the article doesn't mention is that, in the overall architecture of the application, that may have been the best way to do it. The duct tape programmer, working in his little section of code, wants to call Widget D directly and sees calling Widget A, which delegates to Widget B, which delegates to Widget C, which in turn calls Widget D, as a complete waste of time. He's probably right if the software is going to be released and never touched again. For software such as Netscape, which is going to be maintained and built upon for quite some time, you're generally better off (IMO) taking the extra time up front to do it right. Every shortcut or hack you implement couples your code modules together that much more. After a while you end up with a big ball of mud that is impossible to understand or modify, and you're left needing to do a rewrite.

"...and you’re going to get paged at night to come in and try to figure it out because he’ll be at some goddamn “Design Patterns” meetup."

Yeah, this bothered me because it hit too close to home. I've been studying design patterns lately and I've found them extremely helpful. With this one sentence Joel dismisses design patterns and, by extension, the whole concept of architectural reuse. Maybe he likes reinventing the wheel all the time... I'd rather learn from what others have done.

I agree with the conclusion, but not the reason. I believe it is because a) LV users typically do not have any software engineering experience and thus don't know of any other way to program, and b) most users aren't asked to build complex systems where design patterns and architectural considerations come into play.

I've only read a bit on the demise of Netscape. Why did they make that decision?

I think it depends entirely on the specific situation. At one end of the spectrum, full-time employees definitely should be given training at company expense if the company is asking them to take on challenges they have not encountered. At the other end, I don't want to pay a consultant to spend two weeks learning LOOP. I expect him to already be an expert on the subject. Contract positions can fall anywhere between the two extremes, with short-term contracts leaning towards the consultant side and long-term or open-ended contracts leaning towards the full-time employee side.

-

Why is LV beeping at me when I try to edit a VI icon?

Daklu replied to Michael Aivaliotis's topic in LabVIEW Bugs

Nope. It beeps any time I try to edit the VI icon from the block diagram rather than the front panel.

-


I've noticed that too. I agree it's annoying, but I guess it's never bothered me enough to file a bug on it...

-

I usually like him too, but I didn't like that article. The only worthwhile message, "don't forget to ship your product," is buried under an avalanche of contempt for anyone who tries to methodically improve their software. Then he pulls the "mediocre programmer" card out of his back pocket, neatly preventing contrary opinions. Coercive journalism at its finest. I guess we should all aspire to be duct tape programmers and just wing it a little more... whether we're building a go cart or a Formula 1 racing car. (I did find it rather funny that he held up a Netscape programmer as an example of programming excellence. Maybe Netscape failed because it was over-engineered. On the other hand, maybe it failed because there were too many duct tape programmers flying by the seat of their pants.)

-

I don't have an issue with your approach as a matter of personal preference or coding style. I object to the proposal that NI remove the ability to set alternative default values for objects. IMO there are valid reasons to use it and disabling that feature because it can be misused is throwing out the baby with the bathwater. Funny... I was thinking the same thing about me. Here... try this Kool-Aid. It's really good. Can you expand on this comment?

-

I suppose that could be valid, though I don't think I would design an Interface that makes calls to itself. When I start implementing vertical API methods, the code that uses the API tends to get more complicated. I have much more success when I keep all my methods for a given API flat and on the same level of complexity. If I need something more complex than what is provided, I prefer to either wrap it in a subVI in the client code or build a higher-level API on top of the first.

This is rather vague and cryptic, but is this something that could be addressed with a relationship-based community scope? Something that allows you to specify exactly which VIs in one class can be called by which VIs in another class, rather than allowing all Friend VIs permission to call any community-scoped class VI? I'm not saying that's a good solution... just trying to understand the scenario you're describing.

Agreed. My little brain and I tried to stick as close to traditional Interfaces as possible. Not out of loyalty to the concept, but because it seems to be a well-proven design and I have no idea what the long-term consequences of breaking those rules would be. Still, I have asked myself many times what an Interface in Labview would look like and how it would behave. How does the principle "code to an interface, not an implementation" apply to Labview, where everything is an implementation?
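To make the "flat API plus a higher-level layer" idea concrete for readers coming from text languages, here is a hypothetical Python sketch. The `Motor` class and the `move_and_wait` helper are invented for illustration and are not part of any real driver. Each method sits at the same level of detail, and the more complex operation lives outside the class, built only from the public methods.

```python
class Motor:
    """Hypothetical flat API: every method works at the same level of detail."""

    def __init__(self):
        self.position = 0
        self.target = 0

    def set_target(self, position):
        self.target = position

    def step(self):
        # Move one unit toward the target (a stand-in for real hardware motion).
        if self.position < self.target:
            self.position += 1
        elif self.position > self.target:
            self.position -= 1

    def at_target(self):
        return self.position == self.target


def move_and_wait(motor, position, max_steps=1000):
    """Higher-level helper built on top of the flat API, outside the class itself."""
    motor.set_target(position)
    for _ in range(max_steps):
        if motor.at_target():
            return True
        motor.step()
    return motor.at_target()


m = Motor()
print(move_and_wait(m, 5), m.position)  # True 5
```

Because `move_and_wait` only uses public methods, it could be replaced or extended without touching `Motor`, which is roughly the "wrap it in a subVI in the client code" option.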

-

Why can't you modify it? Copy the constant to a new vi, wire up a few class methods, and you've got your new settings. It's only unknown until you probe it--just like every other object constant on the block diagram. Setting my own default values for an object constant doesn't make the information it contains any less available, it's just slightly more inconvenient to view and change. As far as being dangerous, I don't see how fixing an object constant in a known state is more dangerous than using a default object and setting all the properties at run time. In both cases you end up with an object in the same state. I'll point out that using an object with my own default values is less dangerous than using a default object and only setting those properties that need to be changed. The former stores the entire state of the object; the latter is effectively a diff against the class' default values, which you have no control over.
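The snapshot-versus-diff point can be illustrated with a small Python analogy. This is not how LabVIEW stores object data; `Settings`, `snapshot`, and `diff` are hypothetical names, and a class-level dictionary stands in for the class's default private data.

```python
# Analogy only: DEFAULTS plays the role of the class's default private data,
# which the class developer is free to change in a later release.
class Settings:
    DEFAULTS = {"gain": 1.0, "offset": 0.0, "channel": "ai0"}

    def __init__(self, **overrides):
        # Start from whatever the class defaults are at construction time.
        self.values = dict(self.DEFAULTS)
        self.values.update(overrides)


def snapshot(obj):
    """Keep the entire state, like an object constant with its own default values."""
    return dict(obj.values)


def diff(obj):
    """Keep only the values that differ from the current class defaults."""
    return {k: v for k, v in obj.values.items() if Settings.DEFAULTS.get(k) != v}


configured = Settings(gain=2.5)
saved_full = snapshot(configured)
saved_diff = diff(configured)

# Later, the class developer changes a default the user never touched.
Settings.DEFAULTS = {"gain": 1.0, "offset": 0.5, "channel": "ai0"}

print(Settings(**saved_full).values["offset"])  # 0.0: the saved state is intact
print(Settings(**saved_diff).values["offset"])  # 0.5: the state silently changed
```

Both restored objects have the same `gain`, but only the full snapshot is immune to a change in the class defaults.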

-

I don't see it. Interfaces, such as ISleepable, have no knowledge of the classes that will eventually expose them. How would you design a private method to do work on an object without knowing what the object is capable of? If you're talking about the Interface Implementations then I fully agree with you. Edit: Hmm... I see I wasn't very clear about differentiating between Interfaces and Interface Implementations in my previous post.

-

Yes, it really does eliminate the need for an init method and yes, it is by changing the default values of a specific object on the block diagram. I'm not sure why you consider that a "super-secret-implicit-sorta way" of avoiding the requirement to use an Init method. The default values of data containers on the block diagram are under the user's control.

That's why this is a problem. The class developer owns the class. When I drop it on the block diagram it's no longer a class, it's an object, and it belongs to me. Once an object has been instantiated it has a unique data space. In principle, changes to default data in the class definition would affect newly instantiated objects but not pre-existing objects. Labview kindly simplifies the workload by automatically updating an object's default data when the developer has not overridden the default values. That kindness just goes a little too far right now. Think about text languages. Suppose you write a program that creates an object, sets it up in some state, and saves that object to disk. Then the class designer modifies the default value of private data in the class definition. Wouldn't it strike you as incorrect behavior if, when you reloaded your object, the data you had specifically set and saved to disk had been overwritten? That's essentially what is happening.

Changing the data type is a different discussion. Tomi is talking about simply changing the value.

Class users never have direct access to private data. Labview doesn't allow it. Class users only have indirect access provided by methods the class developer implements.

I think you're getting too hung up on the private data angle. Look at it in terms of the object's state, or better yet, the object's publicly accessible state information. If I've put the object in a specific state, Labview shouldn't be overwriting my state behind the scenes.

-

You can also use User Events to fire the same event programmatically, though that requires a little more supporting framework. Or, if your events correctly issue messages to parallel loops, you can achieve the effect of firing the event by simply sending the command directly to the parallel loop.
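A minimal sketch of the second approach, using Python's standard `queue` and `threading` modules as stand-ins for a LabVIEW queue and a parallel loop. The command strings and function names are hypothetical. The point is that the worker loop does not care whether a command came from an event handler or was sent directly by other code.

```python
import queue
import threading

STOP = "stop"  # hypothetical shutdown command


def worker_loop(commands, log):
    """Parallel loop: acts on whatever commands arrive, regardless of who sent them."""
    while True:
        cmd = commands.get()
        if cmd == STOP:
            break
        log.append(f"handled {cmd}")


def on_button_click(commands):
    """Stand-in for an event-structure case: it only forwards a message."""
    commands.put("acquire")


commands = queue.Queue()
log = []
worker = threading.Thread(target=worker_loop, args=(commands, log))
worker.start()

on_button_click(commands)  # the "event" path
commands.put("acquire")    # programmatic path: send the same command directly
commands.put(STOP)
worker.join()

print(log)  # ['handled acquire', 'handled acquire']
```

Since the event case does nothing but enqueue a message, "firing the event" from code reduces to enqueueing the same message.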

-

Thanks. A couple of quick comments--I'm half asleep so hopefully they'll be coherent.

- The only reason ISleepableBaby:Wake is a friend of Baby is so the Interface can access the Baby:WakeUp method. Baby:WakeUp is implemented as a way to wake up the baby without pinching her and making her cry. By giving the method community scope, the only way to wake up the baby without pinching her is via the ISleepable interface. This case is trivial--it's intended to show how a developer can require that certain functions be called through an Interface.

- Baby shouldn't be a friend of ISleepableBaby. That's a mistake. You only make other classes or methods your friends if you want to give them access to your community-scoped methods. Conventional wisdom is that Interfaces should not have private or community methods, so there's no need for them to make any other classes friends. Maybe in the long run there will be a valid reason for having private or community methods in Interfaces. I haven't seen one yet, though.

------------

G'night, and I expect you to have solved the remaining problems by the time I wake up tomorrow.

-

You mean the InterfaceCollection class? This was a design decision I made in an attempt to keep things as simple as possible for class users at the cost of a little more complexity for the class developer. I did a lot of experimenting with putting the InterfaceCollection in the Interfaceable class. There are two main reasons why I ended up rejecting it.

The first reason is that if the InterfaceCollection is in the Interfaceable class, child classes would need to implement initialization code to populate the parent object with the correct Interfaces before the class would function correctly. I don't like building classes that require Init VIs. I find them counter-intuitive in Labview, and until NI provides us with customizable constructors (or rather, self-invoked run-time initialization routines) I'll do my best to avoid them. Also, Init VI requirements tend to propagate down through all descendant classes. If a parent class requires one, chances are the child classes will need them too. I wasn't too thrilled about putting an Init requirement at the base of what could potentially become a very large hierarchy.

The second reason is that Interfaces are not intended to be dynamically assigned at run-time. The set of Interfaces a class supports is determined and fixed during design. You cannot add support for new Interfaces to a class without modifying its source code. (This restriction maintains continuity with how Interfaces are implemented in other languages.) Since an Interfaceable class exposes a predetermined set of Interfaces, it's much easier in the long run to store the InterfaceImplementation objects (ISleepableBaby, etc.) with the Interfaceable class they are designed to work with.

On a scratch block diagram I add the appropriate InterfaceImplementation objects to an InterfaceCollection using the Add method. Then I make sure I wire an indicator to the output terminal of the last Add method. Run the VI. Convert the indicator to a constant, right click on the front panel indicator, select 'Make current values default,' and drag it over to my Interfaceable object's private data cluster. Done. I might put together a little tool that automates the process, but I'm not convinced it's necessary.

You're in luck. No OpenG stuff is in there. You do need VIPM to install the package. (That's not OpenG though... it's a reusable code management application.)

That's exactly what I was going to do when I mentioned refactoring out the CollectionFramework package. All you need to do is create an InterfaceCollection object with non-default values like I described above and replace the object in the Baby class.

_CreateDVR is a compromise. I'd prefer constructors (or rather, self-invoked run-time initialization routines), but alas, we don't have them and it's doubtful we ever will. Create methods do only need to be called once, but then you also need to create error handling in case the user tries to call a method without initializing the object. It's a trade-off. The complexity doesn't really go away; it just gets pushed off on a different developer. There's certainly nothing in the framework that requires one or the other.

Yep. Erm... wait... maybe not. I know I struggled with circular dependencies for a long time. (Labview doesn't allow them.) The DVR might just be a remnant from all the different things I tried. Or it might be required to break the circularity. To be honest I don't remember right now--it's late here and my brain is a little fried. I'll have to re-examine the code to see if I can figure it out.

Thanks for looking it over. The more eyes we can get on it, the better it will be. I honestly believe Interfaces in Labview will open up a whole range of possibilities that have previously been difficult or impossible to achieve. I'm not trying to toot my own horn; I'm just hoping others see the potential and get as excited about it as I am. I'm open to all feedback, positive and negative. I have been planning to post the code in the repository if enough people express interest. Kurt mentioned a collective effort towards developing a common framework. I'm all for that too. I'd rather work towards a consensus than impose my vision on others.
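A rough Python analogy of the arrangement described above, assuming a plain dictionary can stand in for the InterfaceCollection. ISleepable, ISleepableBaby, Baby, and Interfaceable are borrowed from the thread, but the method names and structure are a hypothetical sketch, not the actual LabVIEW framework. Python has no community scope, so the leading underscore on `_wake_up` is only a naming convention.

```python
from abc import ABC, abstractmethod


class ISleepable(ABC):
    """Interface: knows nothing about the classes that will eventually expose it."""

    @abstractmethod
    def sleep(self): ...

    @abstractmethod
    def wake(self): ...


class Interfaceable:
    """Base class: looks up interfaces but stores none of its own."""

    # Each subclass overrides this at design time; nothing is populated at run time.
    INTERFACES = {}

    def get_interface(self, interface):
        impl = self.INTERFACES.get(interface)
        if impl is None:
            raise TypeError(f"{type(self).__name__} does not support {interface.__name__}")
        return impl(self)


class ISleepableBaby(ISleepable):
    """Interface implementation written specifically to work with Baby."""

    def __init__(self, baby):
        self._baby = baby

    def sleep(self):
        self._baby.awake = False

    def wake(self):
        # Stand-in for the friend relationship: only this class is meant to call _wake_up.
        self._baby._wake_up()


class Baby(Interfaceable):
    # The fixed set of interfaces lives with the class it was designed for.
    INTERFACES = {ISleepable: ISleepableBaby}

    def __init__(self):
        self.awake = True
        self.crying = False

    def pinch(self):
        self.awake, self.crying = True, True

    def _wake_up(self):
        # "Community scope" by convention only: wakes her without making her cry.
        self.awake, self.crying = True, False


baby = Baby()
sleeper = baby.get_interface(ISleepable)
sleeper.sleep()
sleeper.wake()
print(baby.awake, baby.crying)  # True False
```

The collection is declared once, alongside `Baby`, so no caller has to run an initialization step before `get_interface` works.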

-

Can't happen without major shifts in Labview's design. LV doesn't offer a class type primitive we can use on block diagrams. All we have are instantiated objects from which LV extracts the necessary type information. Remove class constants from non-member block diagrams and everything breaks. Plus, if you take away class constants from block diagrams, every single class you ever wrote would need to have a factory "CreateNew" method implemented.

Private data defines the state of an object at any given time. The class user should not have to worry about how the state is represented internally, but it is perfectly valid for him to be concerned with its state--especially if a previously saved state can be unintentionally modified with no warning or indication to the class user.

Ultimately your argument boils down to the fundamental concepts of what it means to be a data-based language. Every constant you drop on the block diagram represents one or more real pieces of data. How do you justify allowing some constants, such as strings or integers, to have non-default values stored internally while object constants cannot?

From a more practical standpoint, saving non-default data in object constants is often very useful, as it can eliminate the need for Init methods. I find this extremely useful because if a class has an Init method that must be invoked before the object will work correctly, classes that inherit from it usually must also have an Init method.

-

Jim, I'm not sure if you noticed this, but what you suggested in post #2 is essentially the command pattern. (Though Paul did a much better job of explaining it than either of us!) The only difference between your suggestion and Paul's illustration is the level of resolution in the commands. Your commands encompass an entire program; Paul's commands are discrete. Either way works; it's just a matter of what the developer is trying to accomplish.
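A minimal Python sketch of the command pattern at both resolutions. SetVoltage, Measure, and RunSequence are hypothetical commands, not taken from Jim's or Paul's code. The invoker (`run`) only knows the `Command` interface, so it works the same whether it is handed several discrete commands or one coarse command that wraps an entire program.

```python
from abc import ABC, abstractmethod


class Command(ABC):
    @abstractmethod
    def execute(self, state): ...


class SetVoltage(Command):
    """A discrete command: one small, reusable action."""

    def __init__(self, volts):
        self.volts = volts

    def execute(self, state):
        state["voltage"] = self.volts


class Measure(Command):
    def execute(self, state):
        state.setdefault("readings", []).append(state.get("voltage", 0.0))


class RunSequence(Command):
    """A coarse command: a whole program, itself built from smaller commands."""

    def __init__(self, steps):
        self.steps = steps

    def execute(self, state):
        for step in self.steps:
            step.execute(state)


def run(commands):
    # The invoker never needs to know what each command actually does.
    state = {}
    for cmd in commands:
        cmd.execute(state)
    return state


steps = [SetVoltage(1.0), Measure(), SetVoltage(2.0), Measure()]
discrete = run(steps)
coarse = run([RunSequence(steps)])
print(discrete["readings"], coarse["readings"])  # [1.0, 2.0] [1.0, 2.0]
```

Both calls produce the same result; the choice of resolution only changes how much of the program each command object represents.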

-

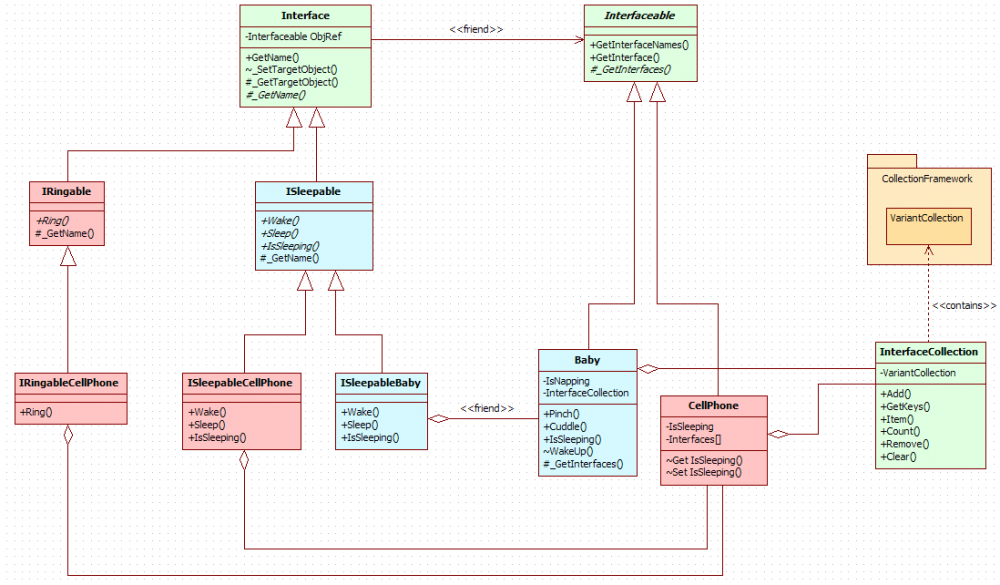

Here's an object diagram of my current Interface framework. Aside from some comments in the demo VI, this is all the documentation I have so far. I am curious what the pain points are for others who pick up the framework and try to implement Interfaces in their own code. (Or, for that matter, those who just try to understand it!)

Some points to note:

- The Interface framework classes are highlighted in green. Everything else is extra baggage for dev work.
- The framework currently depends on the CollectionFramework package, highlighted in orange. Eventually I'll refactor the functionality I need into the InterfaceCollection class and remove that dependency. For now the CollectionFramework package is included in the zip file as an OpenG package. Its VIs will show up under your User palette after installation.
- The blue classes have been implemented for demonstration purposes.
- The red classes are pending implementation to fill out other demonstration scenarios.
- LV2009 required.

Since the language of Interfaces is undefined in Labview, trying to describe things in text is difficult. I have terminology I use, but sharing it right now would confuse the issue since it's not quite consistent with the diagram or the demo project.

[Edit Aug 3, 2010 - Removed pre-release version. Get current version from the Code Repository.]

-

Not much to discuss... you've laid out your case very well. I can see how the current behavior would lead to very nasty bugs. Someone at NI must have read your comment and lit some fires.