Everything posted by Youssef Menjour

-

Hi everybody,

Does anyone know what options exist for distributing a library for LabVIEW? I mean, is VIPM the only tool?

I would also like to know where I can find a complete guide on licensing and licensing policy. If I want to put a license on my product, what are my options? How do the licensing tools work? What types of licenses are possible? I would like to make several versions of my HAIBAL project with different licensing policies (student, lab and pro), and I haven't found a document explaining all of this.

As this is our first software sale on the NI store (or another platform) and I don't have a lawyer on my team, I have a lot of questions: Is it complicated to sell my product online? What obligations are attached to each type of license? Does JKI offer a solution for all this? If so, how much does it cost? Is it only an acquisition cost, or do you then take a royalty on sales?

Can someone help me with this subject? Thanks!

Youssef

-

The idea is not only to offer a simple LabVIEW library but also to offer an alternative to Keras and PyTorch. HAIBAL is a powerful, modular library, and more practical than Python. Being able to run any deep learning graph on CUDA or on a Xilinx FPGA platform with no particular syntax is really good for AI users. We are convinced that doing artificial intelligence with LabVIEW is the way of the future. The graphical language is perfect for this.

The promotional video is not aimed at LabVIEW users. It is for those who want to do deep learning in a graphical language. We are addressing engineering students, professors, researchers and company R&D departments. LabVIEW users already know what drag and drop and dataflow are; they do not need this kind of video.

-

This video is meant to popularize our product. We took our inspiration from NI. It's marketing and popularization, because we are addressing the deep learning world, which is not really familiar with graphical languages.

-

It's a shame, because our library is really disruptive. Being able to easily integrate any deep learning model into LabVIEW architectures is a pure joy. One year to convince people that it is possible. One year of hard work to develop the graphical deep learning library. One year of difficulties. One year to do it.

-

Hello everyone,

It is now time for us to communicate about the project. First of all, thank you for the support that some of you gave us this summer. We didn't go on vacation and continued to work on the project. We didn't communicate much because we were so busy.

HAIBAL will be released soon; with some delay, but it will be released. We have solved many of our problems and are actively continuing development. Release 1 should be coming soon, and we are thinking of setting up a free beta version so that the community can give us feedback on the product. (What improvements would you like to see?)

The official release might be a little late because the graphic design part is not far along yet. We still have to make a website and a YouTube channel for the HAIBAL project, not to mention the design of the icons, which has not been started yet. In short, the designer has a lot of work.

In the meantime, here is the promotional video of HAIBAL!

See you soon, community! Be patient, the revolution is coming.

-

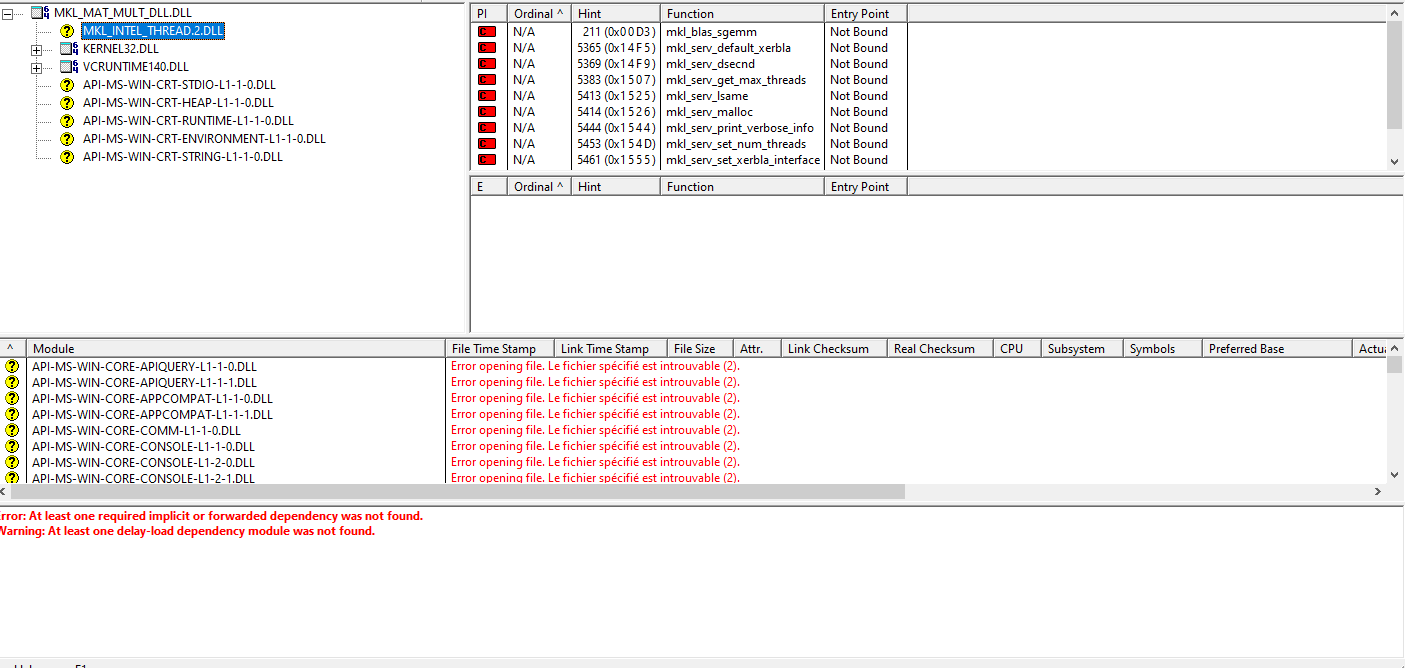

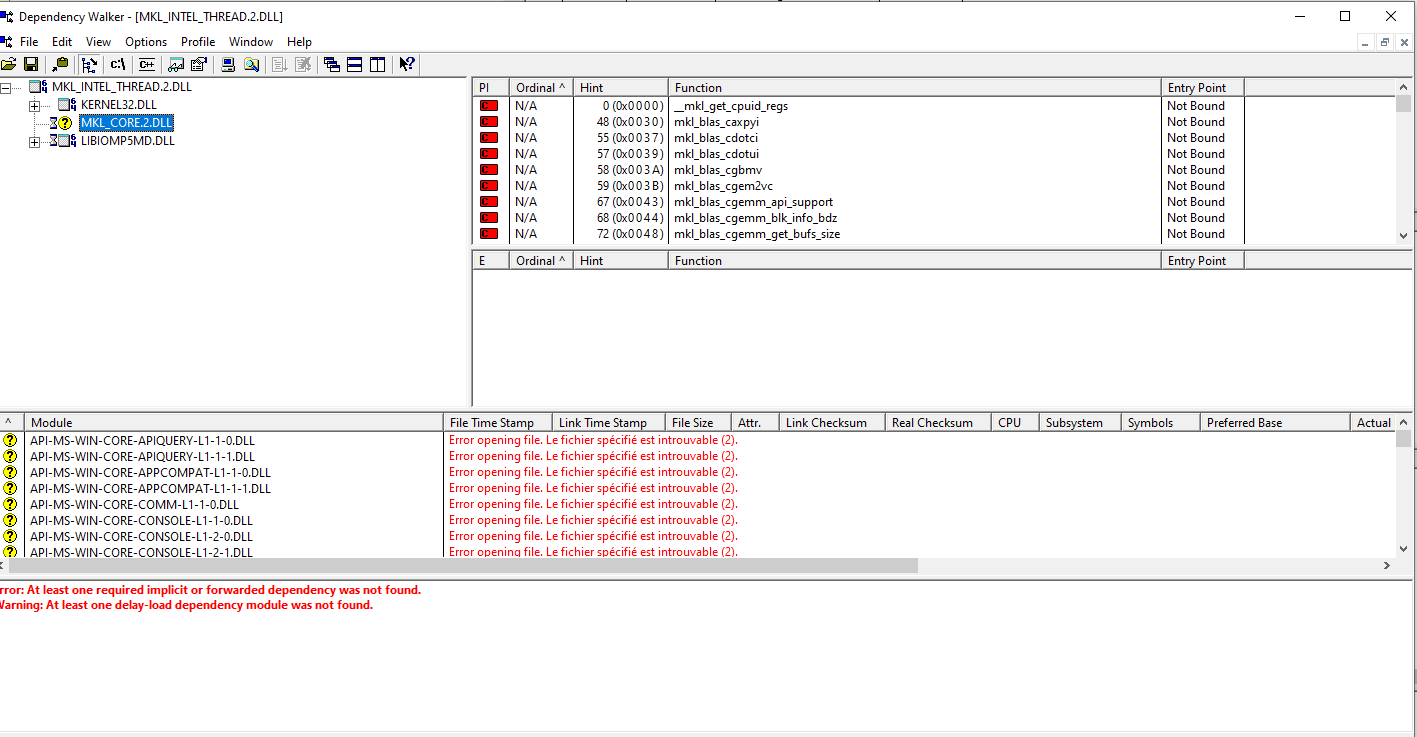

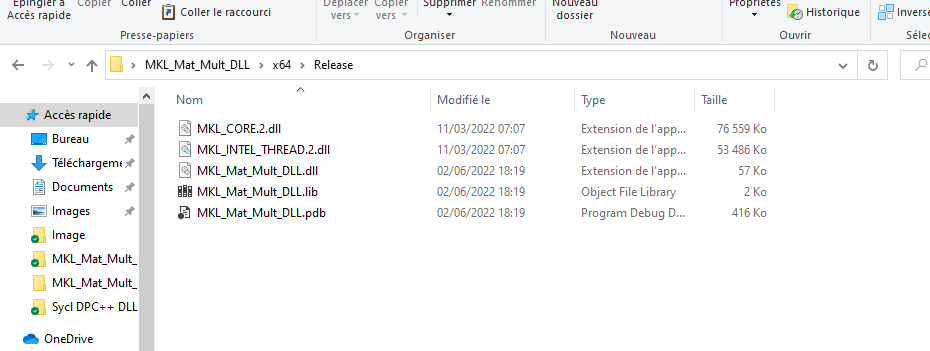

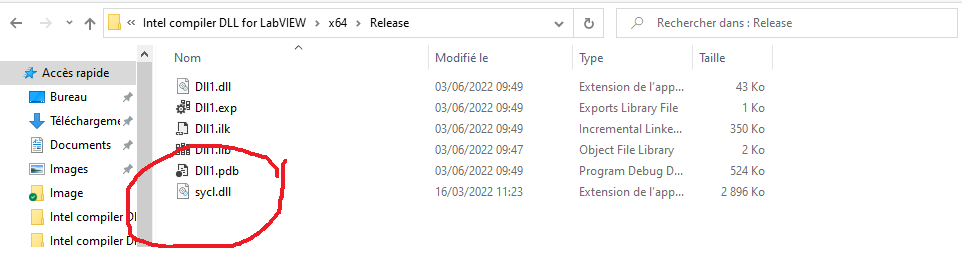

Thank you for your help, but I'm not sure that's the right way to solve this problem because, if I understood correctly, you propose modifying my machine configuration to make the VI work. That's not user friendly if I want to use MKL inside an exported library (or I would have to script it to automate the installation).

By the way, I also looked at the dependencies. I found MKL_intel_THREAD.2.DLL (C:\Program Files (x86)\Intel\oneAPI\mkl\2022.1.0\redist\intel64); unfortunately, moving this DLL didn't work. (Nice try!)

--> I suppose MKL_intel_THREAD.2.DLL calls other DLLs, so I have to scan that one to find its dependencies, and so on.

--> Maybe there is a better way to solve this (edit: done, see the image above 🤠 --> tried and failed).

Is it possible to script the PATH environment variable modification to make it more acceptable? (I already have the answer: yes, but my current knowledge of this subject is limited.)

We can take inspiration from the DNNL library (another library in the Intel package): Intel provides a script to set the environment variables, but when I launch it in my cmd console it does not seem to work. I suppose I am doing it wrong.

vars.bat

-

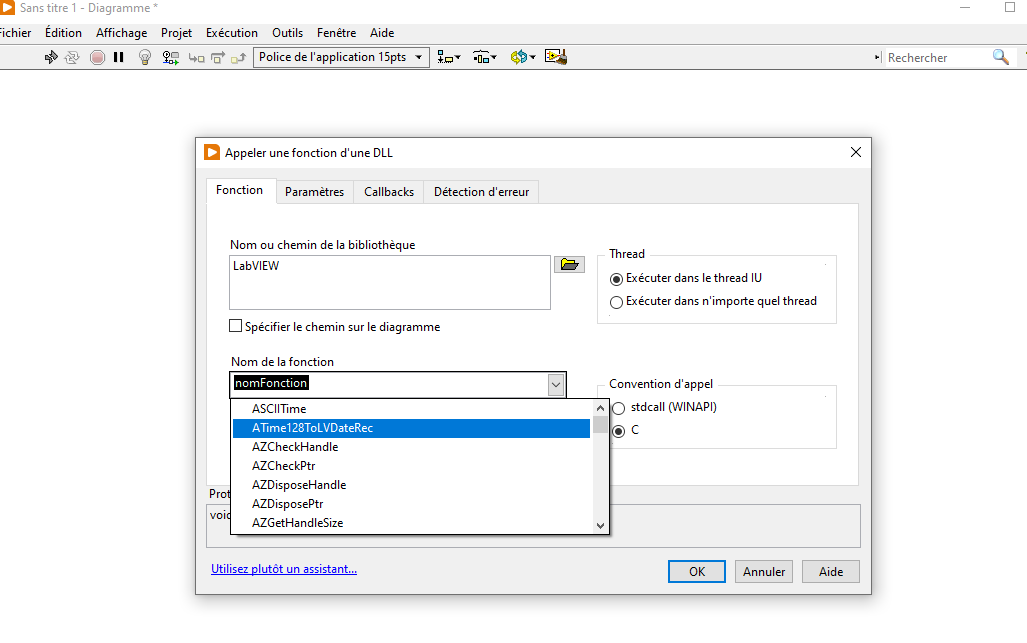

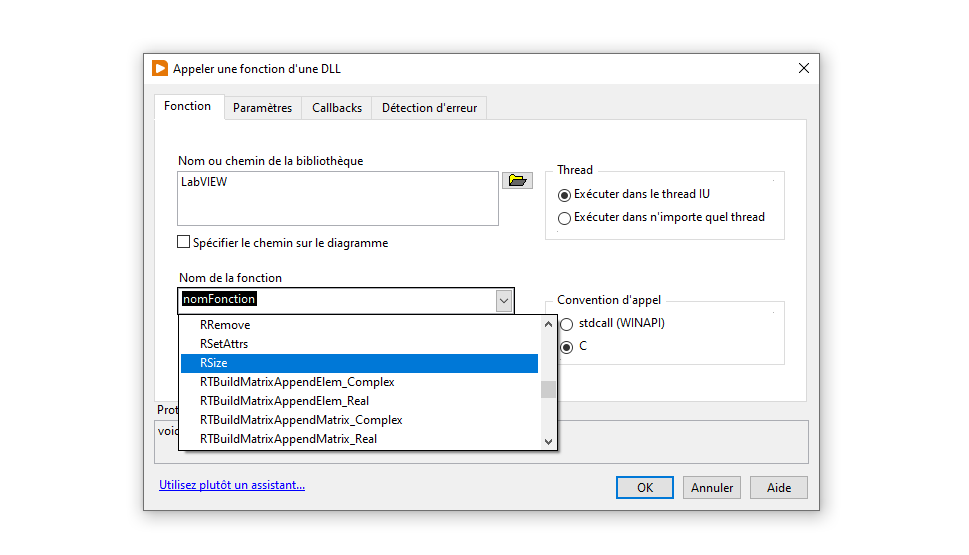

I have the same problem (LabVIEW error 13) when I use a function from the MKL library (Math Kernel Library). There must be a dependency that is not loaded at runtime along with the DLL. The question now is: how do I add these dependencies properly? 🤔

Another question comes to mind: logically, as things stand, my DLLs should not work either if I call them from C code (this is normally independent of LabVIEW). --> I will check (I need to find out how to call a DLL from C code). If that is the case, then the solution is to include all runtime dependencies (which is of course possible; you just have to know how to do it).

One thing is sure, I will have learned a lot!

-

It seems that you are right (which reassures me, because your reasoning is logical). Here is the screenshot from Dependency Walker. Are there routines to integrate into my code in order to remove these errors and warnings?

    Error: At least one required implicit or forwarded dependency was not found.
    Warning: At least one delay-load dependency module was not found.

As a reminder:

Header.h

    #pragma once

    #ifdef DPCPP_DLL_EXPORTS
    #define DPCPP_DLL_API __declspec(dllexport)
    #else
    #define DPCPP_DLL_API __declspec(dllimport)
    #endif

    extern "C" __declspec(dllexport) DPCPP_DLL_API int __stdcall Mult(int a);

dllmain.cpp

    #include "pch.h"
    #include "Header.h"

    __declspec(dllexport) int Mult(int a)
    {
        return a * 3;
    }

    BOOL APIENTRY DllMain(HMODULE hModule, DWORD ul_reason_for_call, LPVOID lpReserved)
    {
        switch (ul_reason_for_call)
        {
        case DLL_PROCESS_ATTACH:
        case DLL_THREAD_ATTACH:
        case DLL_THREAD_DETACH:
        case DLL_PROCESS_DETACH:
            break;
        }
        return TRUE;
    }

Attached files: dllmain.cpp, Header.h

-

Hi everybody,

Intel recently released a DPC++ (Data Parallel C++) compiler that optimizes speed on Intel CPUs and GPUs. My problem is that when I compile the functions with the normal Intel 2022 compiler (or the classic Visual Studio compiler) there is no problem, but when I use the new Intel DPC++ compiler, LabVIEW returns an error. Both Intel compilers work perfectly for C and C++ under Visual Studio.

As an example, I made a simple function that just multiplies an int32 by 3 and returns the result. The DPC++ compiler only targets the x64 architecture, and I use LabVIEW 2020.

I made a video to show the problem: LabVIEW DLL issue DPC++.mp4

The files are here: https://we.tl/t-9Iwkf1IGvr so you can compile it yourself and see the problem. I added the Visual Studio 2022, Intel compiler and Intel DPC++ compiler installers in the "install" directory. (In Visual Studio, Alt-F7 goes directly to the project properties to change the compiler; F5 compiles.)

Can someone tell me what's wrong? How can I make my DLL work with the DPC++ compiler?

-

LabVIEW memory management different from C ?

Youssef Menjour replied to Youssef Menjour's topic in LabVIEW General

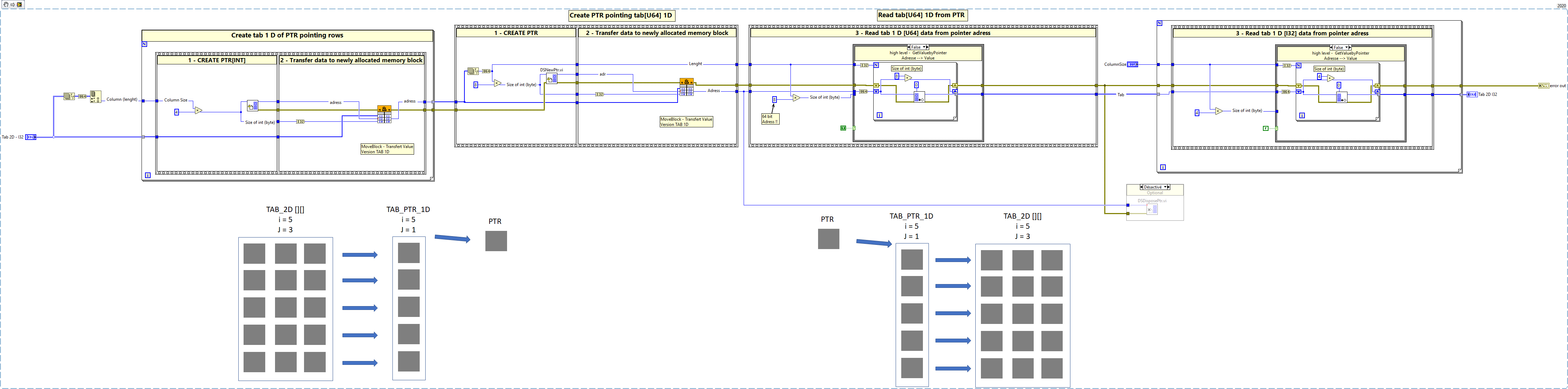

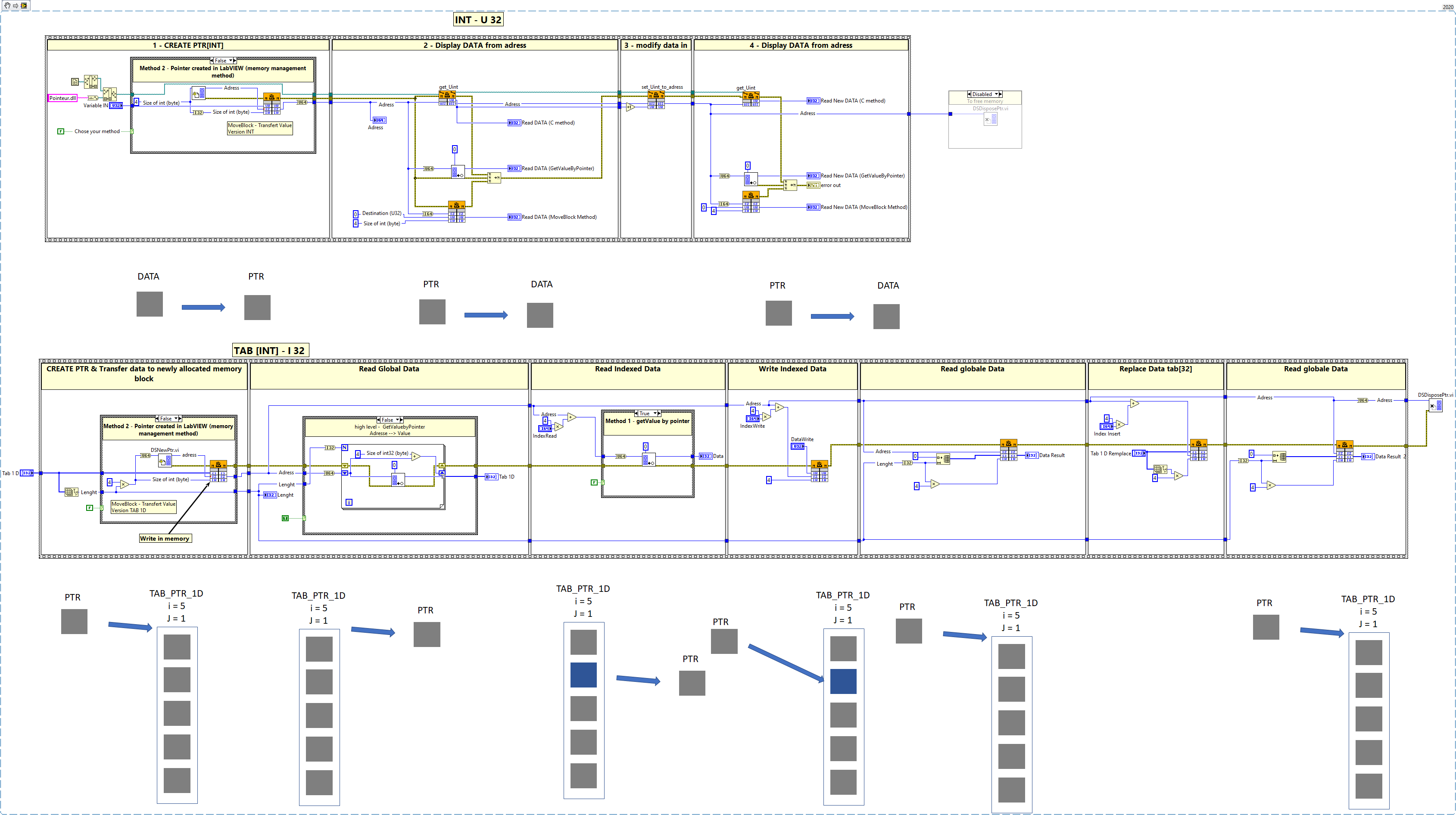

Thanks Rolf for the explanation (I still need to digest it all). The best way to gain experience is to experiment! Reading some of you, one would think we are operating a nuclear plant 😆 --> worst case, LabVIEW crashes (we'll survive, really!!).

I am working on a project where I need fast array operations on the CPU (read, calculate, write).

Good news: the arrays are fixed size (no pointer reallocation and no resizing).

Bad news: the arrays can be 1D, 2D, 3D or 4D, and the access times with the native LabVIEW function palette are not satisfactory for our application --> we need to find a better solution.

By analogy, we suppose that access to an array is limited on a PC as it is on an FPGA (there, the physical limit on array access is 2 read/write ports per clock cycle, whatever the size of the array). There is also the O(N) rule, which says that the access time (read/write) to array data is proportional to its size N --> I may be wrong here.

In any case, to reduce the access time (read/write) of an array, a simple solution is to organize the data ourselves: the array is split into several arrays (pointers) to multiply the access speed --> O(N) becomes, in theory, O(N/n), and the number of ports is multiplied by n. We navigate this "array" by addressing the right part (the right pointer).

Some will say: why not just split your array in LabVIEW and be done with it? --> Simply because navigating with pointers avoids unnecessary data copies at every level, copies that cost us processing time. We tested it and saw a noticeable difference! In theory, this approach is much more complex to manage, but it has the advantage of being faster for reading and writing data, which is in fact the main problem.

Now, why am I having fun with C/C++? Simply because, if we can't go fast enough on some operations, we transfer the data via pointers (as I said, a well-managed pointer is the best solution: no copy) and use C/C++ libraries like Boost, which are optimized for some operations. MoveBlock is a very interesting function!

So the next step is to code and test 3D and 4D arrays and, with only the base pointer address, to navigate very fast inside the arrays (recode replace, recode index, code the construction of the final array).

I found some old documentation and topics about memory management, and they helped me a lot. Thank you again, Rolf; I have seen many of your posts and they help a lot.
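For readers following the "navigate with only the base pointer" idea, here is a minimal sketch of the index arithmetic for a fixed-size 4D array. It assumes the data is one contiguous block with the last index varying fastest; `Shape4D`, `offset4d`, `read4d` and `write4d` are hypothetical names, not functions from HAIBAL or LabVIEW. Note that under this assumption reaching one element costs a fixed handful of multiplications and additions, whatever the size of the array.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical descriptor for a fixed-size 4D array stored contiguously,
// with the last index varying fastest. All names here are illustrative.
struct Shape4D {
    std::size_t d0, d1, d2, d3;
};

// Flat element offset of (i, j, k, l) from the base pointer.
inline std::size_t offset4d(const Shape4D& s, std::size_t i, std::size_t j,
                            std::size_t k, std::size_t l) {
    assert(i < s.d0 && j < s.d1 && k < s.d2 && l < s.d3);
    return ((i * s.d1 + j) * s.d2 + k) * s.d3 + l;
}

// Counterpart of an "index" operation: read one element in place, no copy.
inline float read4d(const float* base, const Shape4D& s, std::size_t i,
                    std::size_t j, std::size_t k, std::size_t l) {
    return base[offset4d(s, i, j, k, l)];
}

// Counterpart of a "replace" operation on one element: write in place.
inline void write4d(float* base, const Shape4D& s, std::size_t i,
                    std::size_t j, std::size_t k, std::size_t l, float v) {
    base[offset4d(s, i, j, k, l)] = v;
}
```

With a shape of 2 x 3 x 4 x 5, for instance, element (1, 2, 3, 4) lands at flat offset 119, the last of the 120 cells. The same arithmetic works for 2D and 3D arrays by fixing the leading dimensions to 1.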

LabVIEW memory management different from C ?

Youssef Menjour replied to Youssef Menjour's topic in LabVIEW General

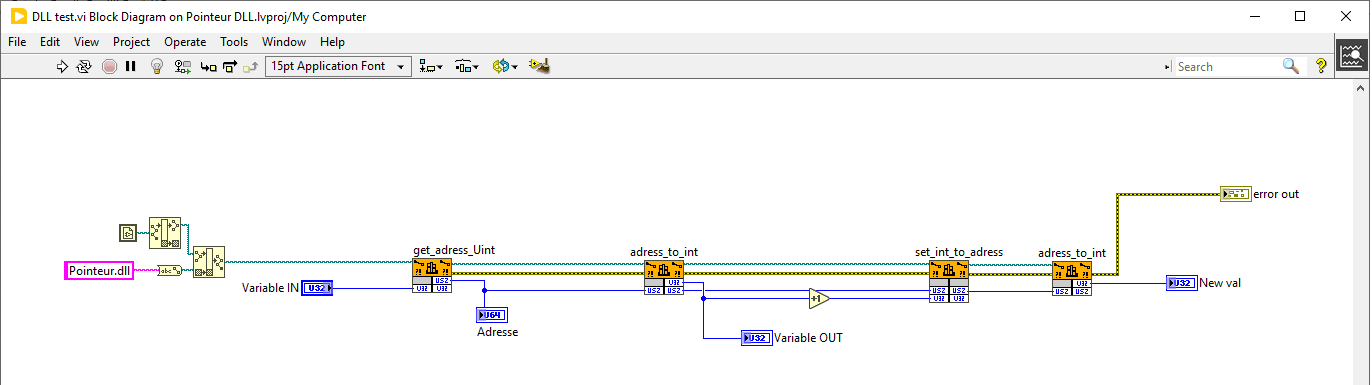

Rolf, if I understood you correctly: if I do the following with data coming from LabVIEW, the memory address could be released at any time by LabVIEW? (That's logical.)

    DLL_EXPORT unsigned int* Tab1D_int_Ptr(unsigned int* ptr){ return ptr; }

--> Method 2 is a solution in that case (pointer created in LabVIEW with DSNewPtr + MoveBlock).

I have another question: for arrays, what is the difference between passing by pointer and passing by handle? I mean, with the handle method a struct implicitly gives the array length to the C/C++ code, but is there any other difference? (Ugly structure syntax 🥵) (Many thanks for the example, cordm! 👍)

The image is a VI snippet.

ShaunR, I'm not far from doing what I want 😉

Pointeur.dll
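To make the pointer versus handle question concrete, here is a small sketch that can run outside LabVIEW. It assumes the usual layout of a 1D array handle (a 32-bit element count followed directly by the elements, reached through a pointer to a pointer); `U32Array`, `sum_by_pointer`, `sum_by_handle` and `make_array` are hypothetical names, and `make_array` only stands in for LabVIEW's own allocator so the example is testable. Beyond carrying the length, a handle is also what lets the LabVIEW memory manager resize the array, which a bare data pointer cannot express.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>

// Assumed layout of a LabVIEW 1D U32 array: a length followed by the data.
// Type and function names are illustrative, not taken from the thread.
struct U32Array {
    std::int32_t  dimSize;  // number of elements
    std::uint32_t elt[1];   // first element; the others follow in memory
};
using U32ArrayHdl = U32Array**;  // a handle is a pointer to a pointer

// Pointer style: the DLL only sees the data, so the length is a separate argument.
std::uint64_t sum_by_pointer(const std::uint32_t* data, std::int32_t len) {
    assert(data != nullptr || len == 0);
    std::uint64_t total = 0;
    for (std::int32_t i = 0; i < len; ++i) total += data[i];
    return total;
}

// Handle style: the length travels with the data inside the structure.
std::uint64_t sum_by_handle(U32ArrayHdl h) {
    if (h == nullptr || *h == nullptr) return 0;  // treat a null handle as empty
    return sum_by_pointer((*h)->elt, (*h)->dimSize);
}

// Stand-in allocator so the sketch can run outside LabVIEW (hypothetical helper).
U32Array* make_array(std::int32_t n) {
    assert(n >= 0);
    const std::size_t count = n > 0 ? static_cast<std::size_t>(n) : 1;
    auto* a = static_cast<U32Array*>(
        std::malloc(sizeof(std::int32_t) + count * sizeof(std::uint32_t)));
    assert(a != nullptr);
    a->dimSize = n;
    return a;
}
```

In both styles the DLL works on the caller's buffer without copying it; the difference shown here is only who is responsible for knowing the length.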

LabVIEW memory management different from C ?

Youssef Menjour replied to Youssef Menjour's topic in LabVIEW General

Thank you all for your information. I will be working sequentially and synchronously. There is a lot of interesting information in these posts! Now we'll have some fun for a while!

LabVIEW memory management different from C ?

Youssef Menjour replied to Youssef Menjour's topic in LabVIEW General

Hello ShaunR,

First of all, thank you for taking the time to answer me. In view of your answer, I will put the question back in context.

I agree that you have to initialize and delete a pointer correctly. This is an example meant to understand memory management under LabVIEW; I want to understand how it works. I don't agree with your answer forbidding pointer manipulation: once the subject is mastered, there is no reason to be afraid of it.

What I want to understand is how LabVIEW stores a variable in memory when it is declared. Is it strictly like in C/C++? Let's take an example with an array of U8. If the pointers are handled properly, it is interesting to declare an array in LabVIEW, pass its address to a DLL (first difficulty), manipulate it as needed in C/C++, then return to LabVIEW to continue the flow.

Why do I want to do this? Because it seems (I say "seems" because it may not be necessary) that LabVIEW operations are slow, too slow for my application! As you know, we are working on the development of a deep learning library, which is computationally greedy, so it is necessary to accelerate the computations with multithreaded C/C++ libraries (unless there is an equivalent in LabVIEW, but I doubt it for the moment). Just to give you a comparison: if we are content to use LabVIEW normally, we are 10 times slower than Python!!

Is it possible to pipeline a loop in LabVIEW? Is it possible to merge nested loops in LabVIEW?

Finally, about data transfer: I understand perfectly that, in terms of safety, copying data into the DLL, using it, then returning it to LabVIEW is tempting, but the concern is the data transfer delay. That's what we want to avoid! I think it's pointless to copy data that already exists in memory; why not use it directly (provided the subject is mastered)? The copy and transfer make us lose time.

Can you please give me some answers? Thank you very much.

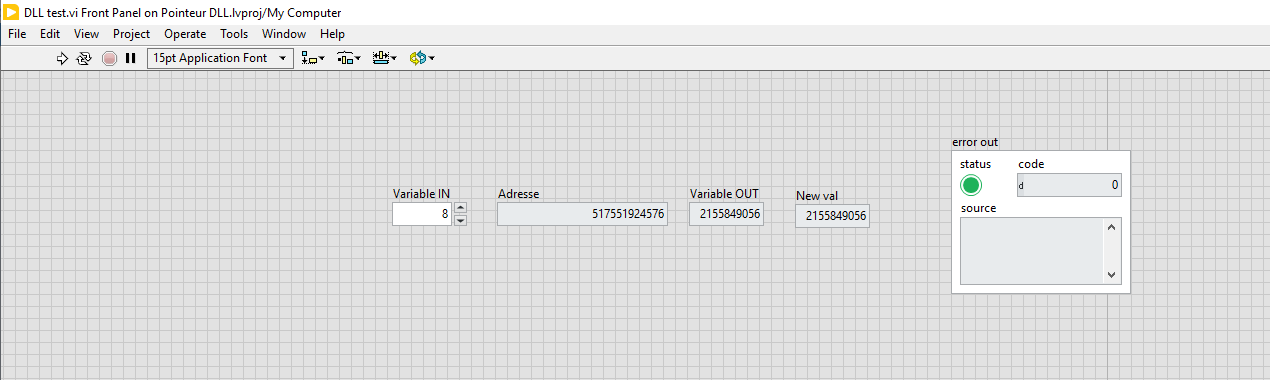

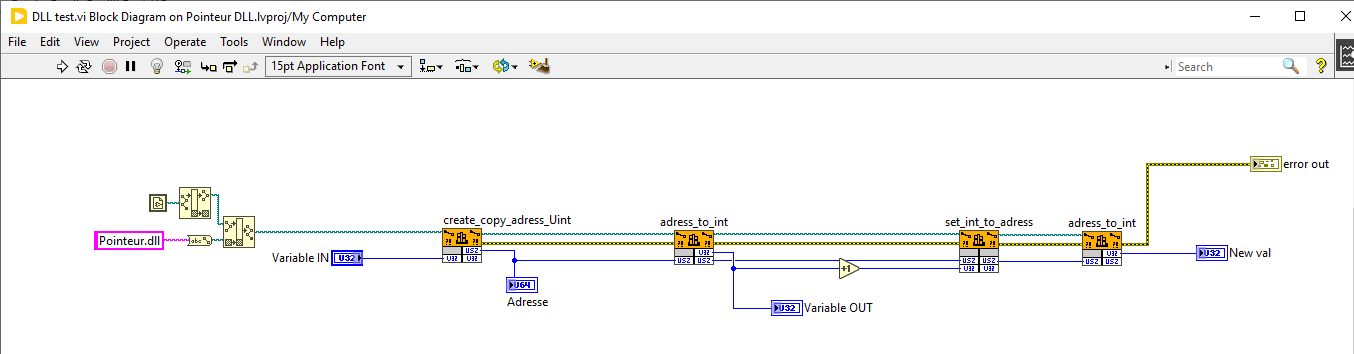

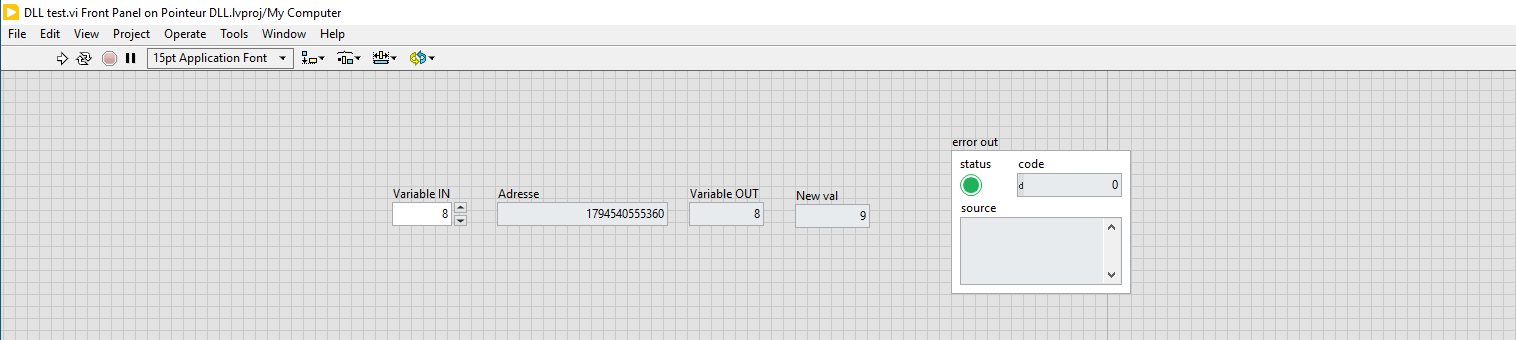

Hi everybody,

In our quest to optimize our code, we are trying to interface variables declared in LabVIEW with C/C++ processing (DLL).

1 - In my example, we had fun declaring a U32 variable in LabVIEW, then we created a pointer in C and assigned it the value we wanted (copy), then we restored the value in LabVIEW. In this case everything works correctly. Here is the code in C: Hence my question: am I racking my brain unnecessarily? Does my set of functions already exist in the LabVIEW DLL? (I have a feeling that one of you will tell me...)

2 - In our second experiment (more interesting), we assign the address of the U32 variable declared in LabVIEW to our pointer; this time the idea is to act directly, at the C level, on the variable declared in LabVIEW. We read this address, then we try to manipulate the value of the variable via the pointer in C, and it does not work! Why? Or did I make a mistake in my reasoning? This experiment aims at mastering, at the C level, the memory management of data declared in LabVIEW. The idea would then be to do the same thing with U32 or SGL arrays.

3 - When I declare a variable in LabVIEW, how is it managed in memory? Is it done like in C/C++?

4 - Last question: the MoveBlock function gives me the value behind a pointer (read); which function allows me to write to a pointed cell?

I put the source code in a zip file: DLL pointeur.zip
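As an illustration of questions 2 and 4, here is a minimal sketch of what the C side can look like. It assumes the Call Library Function Node passes the U32 as a pointer to the value, and that MoveBlock takes a source, a destination and a byte count; `triple_in_place`, `copy_block`, `read_cell` and `write_cell` are hypothetical names, and `copy_block` uses `std::memcpy` as a stand-in for MoveBlock so the example runs outside LabVIEW. Under those assumptions, reading and writing a pointed cell are the same copy with the two pointer arguments swapped.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Experiment 2: act on the caller's variable through its address, no copy.
// (Hypothetical function name; the caller must keep the variable alive.)
extern "C" void triple_in_place(std::uint32_t* value) {
    assert(value != nullptr);
    *value *= 3u;
}

// Stand-in for a MoveBlock-style copy: (source, destination, byte count).
inline void copy_block(const void* src, void* dst, std::size_t size) {
    std::memcpy(dst, src, size);
}

// Read: pointed cell -> local variable.
inline std::uint32_t read_cell(const std::uint32_t* cell) {
    std::uint32_t out = 0;
    copy_block(cell, &out, sizeof out);
    return out;
}

// Write: local variable -> pointed cell (same copy, arguments swapped).
inline void write_cell(std::uint32_t* cell, std::uint32_t v) {
    copy_block(&v, cell, sizeof v);
}
```

Whether the change is visible back in LabVIEW depends on how the node is wired: the modified value has to be read from the output side of the same parameter after the call.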

-

It seems your page is not working Rolf.

-

Thank you very much!! I will have a look; it will be very useful for optimizing our code execution!

-

Sorry guys if I sound like a newbie, but what are "CINs"? Another question: I remember seeing around 2011 that LabVIEW had a C code generator. Do you know why this option is no longer available?

-

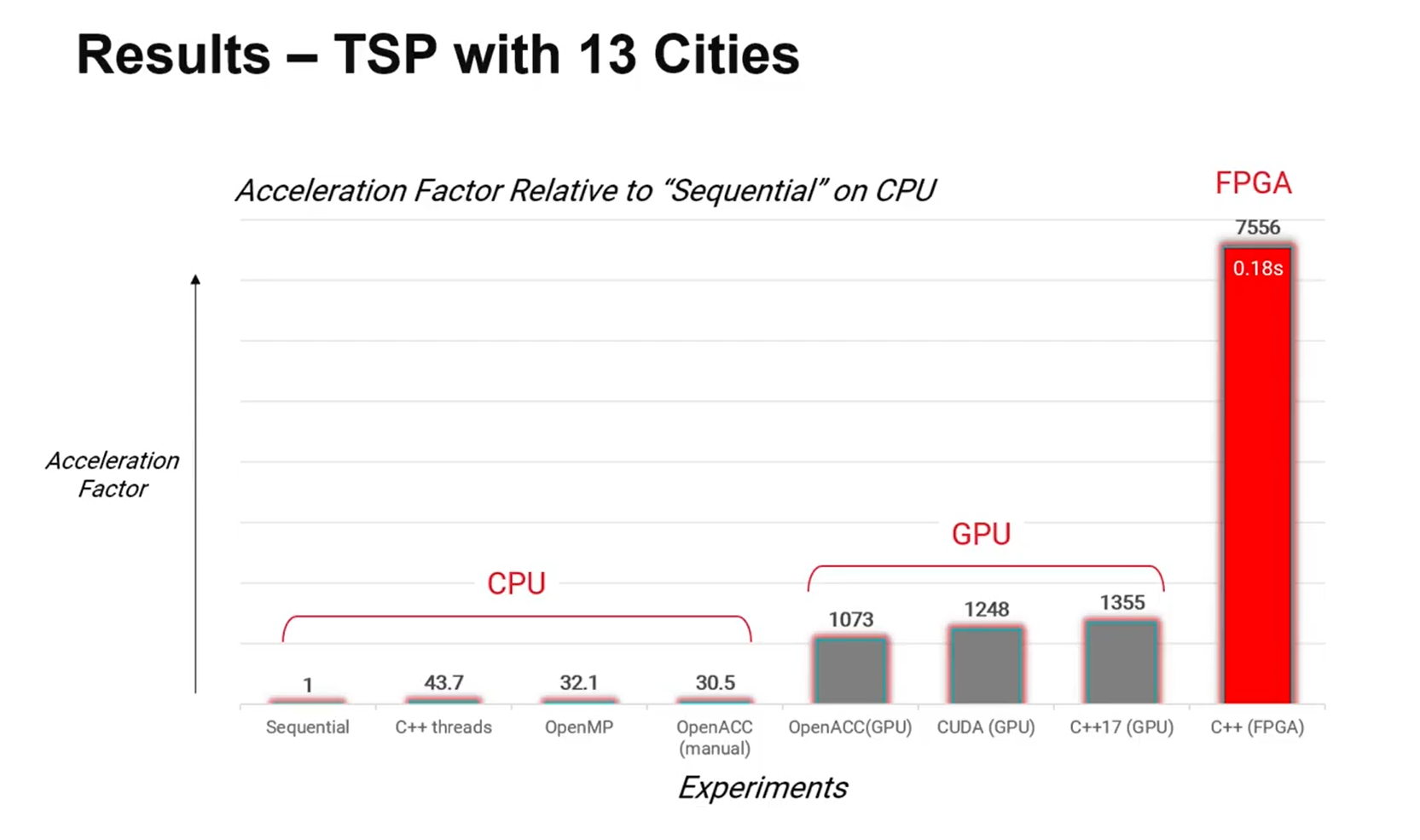

OK, thanks guys for all this feedback! It's for our HAIBAL project: we will soon start optimizing our code and I'm exploring different possibilities. We are continuing Hugo's work, still on our famous stride! Our dream now is to end up on a Xilinx FPGA platform. I want to prove that we can also be computationally efficient with LabVIEW. (Maybe we will have to precompile a large part of our code as DLLs to make it more efficient.)

-

Hello LabVIEW community,

Is there documentation about the LabVIEW DLL functions? Our team would like to have a global view in order to explore the available functions. (Maybe another topic has already covered this.) Thanks for your help.

-

The HAIBAL toolbox will let users build their own architecture, train it and run predictions natively in LabVIEW. Of course, we will natively provide numerous examples such as YOLO, MNIST, VGG... (which users can directly use and modify).

As our toolkit is new, we chose to also make it fully compatible with Keras. This means that if you already have a model trained with Keras, it will be possible to import it into HAIBAL. This will also open our library to the thousands of models available on the internet. (Every import will be translated into native HAIBAL LabVIEW code that users can edit.) In this case, you will have two choices:

1 - Use it in LabVIEW (predict / train).

2 - Generate the whole equivalent native HAIBAL architecture (as you can see in the video) in order to modify it as you wish.

HAIBAL is more than 3000 VIs; it represents a huge amount of work and we are not finished yet. We hope to release the first version this summer (with CUDA), and we hope to add NI FPGA optimization to speed up inference. (OpenCL and full Xilinx FPGA compatibility will also come during 2022/2023.)

We are currently building our website and our YouTube channel. The team will offer tutorials (YouTube/GitHub) and news (website) to keep users informed.

In this video we import a Keras VGG-16 model saved in HDF5 format into the HAIBAL LabVIEW deep learning library. Then, with our scripting, we can generate the graph so that users can modify any architecture for their own purpose before running it.
