-

Posts

817 -

Joined

-

Last visited

-

Days Won

15

Posts posted by Cat

-

-

Accessing the massive LAVA knowledge base...

I need a 1Gbit ethernet hub. Not a switch or a router, but a hub. I have searched and searched and cannot find such a beast and was hoping you all might know of one.

We're connecting our network to someone else's network -- there's 1 input pipe and we need two outputs -- and they freaked when they heard we were going to use a switch. A hub is okay, but not a switch. There are supposedly security as well as technical issues involved here, so if "they" say it's got to be a hub, it's got to be a hub.

And if all my wishes were to be granted, it would take SC multimode fiber inputs, but copper would be just fine. I have plenty of media converters.

-

Today's bug/feature:

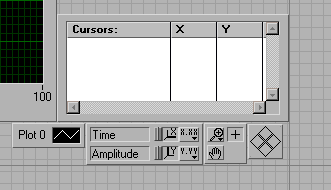

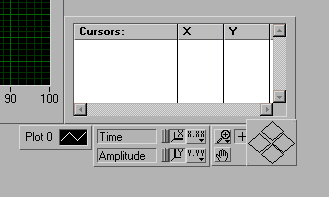

Drop a waveform graph on the FP. Make visible all the legends and the Graph Palette. When you're done it might look something like this (I've grouped all that stuff together to make a smaller pic):

Drop some other control on the FP (I suggest a numeric just because it's small). Group it with the graph. Right-click on the graph and select "Scale object with pane".

Expand/contract the window. Keep your eye on (what I think is called) the Cursor Movement tool. It's in the bottom right hand corner of the above pic -- the square thing with the 4 diamonds in it.

Unlike any of the other palette/legend objects, the cursor tool changes size along with the graph. Even setting the pane back to the original size does not get the diamonds the right size and lined up again in the box. Resize the window a few times and the tool is completely unusable.

This resizing of the cursor tool does not happen if the graph isn't grouped with another control. Unfortunately, I have to have the graph in a group so all controls resize together.

I can't find any way to access that box and the diamonds programmatically. My fallback is to program my own right/left cursor movement controls, which isn't difficult, it's just annoying when there are tools to do it built in to LV.

Is this a bug or a feature? All I know is it's bugging me.

-

Just received some more info from my customer.

Turning off the WiFi connection gives a startup time of less than a minute....

I used to have that problem in the development environment. No network - Quick loads. Network - it would hang for a looong time on startup.

It turns out there was a link to a vi that no longer existed in my project. For some reason LV went hunting for it even tho I didn't use it.

I'm not sure if this will help with a built application, but it might give you a place to start looking...

-

-

-

Annoying it is, but I don't think that's gonna be changed. Let's think of how it might affect appending to text file.

No no! I agree completely! I'm sure changing it now would result in a lot of broken code.

I've got the primitive wrapped in a vi with a switch to delete the last EOL if necessary. I wrote that after being bitten a couple times by it just like François was.
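For anyone reading along outside LabVIEW, the idea of that wrapper can be sketched in a few lines of Python. This is only an illustration: the function name `strip_last_eol` and its `enabled` switch are made up here, and the real thing is a block diagram around the primitive, not text code.

```python
def strip_last_eol(text: str, enabled: bool = True) -> str:
    """Remove at most one trailing end-of-line sequence when enabled.

    Hypothetical helper: mirrors the "switch to delete the last EOL"
    idea, not any actual LabVIEW API.
    """
    if not enabled:
        return text
    # Check the two-character Windows EOL first so "\r\n" is removed whole.
    for eol in ("\r\n", "\n", "\r"):
        if text.endswith(eol):
            return text[: -len(eol)]
    return text
```

Only one EOL is removed, so a deliberately blank last line survives, and the switch leaves the text untouched when the trailing EOL is actually wanted (appending to a text file, for example).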

-

-

I'd go nuts if I had to develop on the low end computers we use for test stations.

That might explain my mental state much of the time.

If you go this route, just make sure you test on the real platform early and often. My dual-core, dual-CPU laptop (obviously) runs circles around code that the target platform's single-core, single-CPU laptop really struggles with. I've gotten deep into some CPU-intensive thing and had to backtrack.

Also, if the target platform's screen isn't the same resolution as the development screen, there WILL be font size issues.

-

But LabVIEW is missing!

Which might, actually, be a good thing...

-

Woo-hoo! Congrats!

-

Hi, How are you I need your help in this project... It is becoming late. I need to complete this one as soon as possible. I am ready to pay the money for your work.... please make sure of this

You haven't even paid up yet for all the work that's already been done for you. $39.95 for a Premium Membership...

-

Ah, the evilness that is copies of data. Have you...

- Reduced the number of subVIs

- Tried using Request Deallocation

- (LV2009) tried passing by-reference data

- Attempted to use smaller chunks at once

- waved the dead chicken

Other than LV9, I've tried all of the above, especially waving the dead chicken.

FWIW, NI folks generally seem to poo-poo "Anxious Deallocation" as I've seen it be called. I still throw it in occasionally where it might theoretically have some effect, but I personally haven't seen it help too much myself.

Multiple channels at 64kS/s does not seem to be all that useful for display in real time

I actually display up to 4 channels of "real time" waveform data (or spectra or lofar). We use it for what we call "tap-out"; determining if a particular sensor is hooked up where it's supposed to be. Also to watch for transients. But as you suggest, in that case I can break it up into much smaller chunks and memory is not an issue.

-

I don't know anything about your application, but if it is the amount of contiguous memory that is the bottleneck I would start looking into using queues or RT-FIFOs to store data in smaller chunks.

Unfortunately, this is a post-run application where the users want to see the entire data set(s) at one time.

I also believe that the number of buffers of data LabVIEW holds on to can be minimized by using FIFOs; once allocated no more buffers should have to be added.

That is true, in a perfect world. In reality, LV often grabs much more memory than it actually needs. While I understand this is a good thing for performance, it is a bad thing for large data sets. I jump thru lots of hoops to *really* empty and remove queues in order to attempt to get back all the memory I possibly can.

-

Curious... what are you doing that you need 2GB chunk at once? Not that I haven't had Windows take down LV for exceeding 2GB...

Multi-minute runs at 64k sample rate * multi-channels to track transient noise as it travels around a test vessel.

The main problem is not the amount of memory my data takes, it's all the copies of my data that LabVIEW makes, and holds on to. If a data set is 750MB, that shouldn't be a problem, but LV will make multiple copies of it and blow up the memory.
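To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The channel count, run length, and 8 bytes per sample (double precision) are assumptions picked to land near the 750MB figure, and "64k" is taken as 64,000 S/s; none of those specifics come from the actual test setup.

```python
def dataset_bytes(sample_rate_hz: int, channels: int, seconds: int,
                  bytes_per_sample: int = 8) -> int:
    """Raw size of one acquisition, ignoring any per-array overhead."""
    return sample_rate_hz * channels * seconds * bytes_per_sample


def fits_with_copies(data_bytes: int, copies: int,
                     limit_bytes: int = 2 * 1024**3) -> bool:
    """True if `copies` full copies of the data fit under the limit.

    The default limit is the 2GB ceiling of a 32-bit process.
    """
    return data_bytes * copies <= limit_bytes


# Assumed example: 8 channels for 3 minutes at 64,000 S/s, 8-byte samples.
size = dataset_bytes(64_000, 8, 180)
print(size)                       # 737280000 bytes, roughly 737MB
print(fits_with_copies(size, 1))  # True: one copy is fine
print(fits_with_copies(size, 3))  # False: three copies exceed 2GB
```

This ignores the contiguous-memory requirement, which in practice makes the usable ceiling noticeably lower than 2GB.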

I currently decimate like crazy on the longer runs -- which of course slows everything down and is a matter of taking an educated guess (OK, a WAG) at how much contiguous memory is available and keeping my fingers crossed.
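The "guess a memory budget, then decimate to fit" approach might look something like this in Python. It is a minimal sketch, not the actual LabVIEW code: the function names and the budget value are hypothetical, and it uses plain every-Nth-sample decimation, which can skip short transients (a min/max-per-bin scheme would preserve them better).

```python
import math


def decimation_factor(total_samples: int, bytes_per_sample: int,
                      budget_bytes: int) -> int:
    """Smallest integer factor that squeezes the data into the budget."""
    max_samples = budget_bytes // bytes_per_sample
    if max_samples <= 0:
        raise ValueError("budget is smaller than a single sample")
    return max(1, math.ceil(total_samples / max_samples))


def decimate(samples: list, factor: int) -> list:
    """Keep every `factor`-th sample (naive stride decimation)."""
    return samples[::factor]


# Assumed example: 1000 8-byte samples, but only room for 800 bytes.
factor = decimation_factor(1000, 8, 800)
print(factor)                             # 10
print(len(decimate(list(range(1000)), factor)))  # 100
```

The budget itself is still the guess; the code only makes the arithmetic after the guess repeatable.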

-

So in theory, you could use the same source with 32 and 64-bit LabVIEW and your output will be as expected.

I'm more concerned about the input.

More specifically (maybe you've already answered this, but the caffeine just hasn't kicked in for me yet), if I build my 32-bit code using 64-bit LV, will 64-bit LV make my code 64-bit first? I.e., do I have to protect that code to keep it 32-bit during a 64-bit build?

I suspect it has to do with what your application will actually be doing...

Yeah, I admit it was a pretty open-ended question. I'm looking to run on a 64-bit box in order to be able to access more than the measly 2GB memory (or call it 1GB contiguous on a good day) LV can use on a 32-bit box.

-

I believe I can have both LV2009 32-bit and LV2009 64-bit installed on the same 64-bit machine. I believe using LV2009 32-bit I can create a 32-bit application on a 64-bit machine

I'm assuming I should NOT open and run my 32-bit code under 64-bit LV because that would recompile it into 64-bit.

I'm trying to wrap my head around the best way to write in 32-bit but be able to build both 32 and 64 bit applications out of the same code. Or would it just be easier to have a dedicated 64 bit machine, copy my 32-bit development code to it and build it there??

Last but not least, this very interesting article on the topic on the NI site says there is a performance hit running a 32-bit app on a 64-bit system. Anyone have any Real World LabVIEW experience with this and know what that hit might more-or-less be?

(BTW, try searching on "64-bit" on this site. Well, actually, don't. I got real frustrated and then went to Google.)

-

There was a momentary scare when HR noticed there was some food being served at NIWeek and therefore it must not fall under a training request, because one obviously can't eat and learn in the same 24 hour period, and therefore the approval was unapproved.

I had to write a justification letter (liberally plagiarized from the one on the NIWeek website), get 3 more levels of approval, and then the unapproved approval was finally reapproved. I just love working for Big Brother...

-

Is the issue here that you are trying to do a two stage process in 1 go? i.e read from a Solaris drive, process the data and save it to NTFS?

That was the hope...

The 25MB/s might just be the limitation of the solaris drive (ATA 33 perchance?) and you might not be able to overcome that.

All drives (whether NTFS or UFS) are the WD Caviar Black SATA 3Gb/s. I've transferred data from drive to drive on this system at upwards of 80MB/s.

The answer I got back from tech support at UFS Explorer was something along the lines that 25MB/s was as fast as USB drive could go. I reminded them this was a strictly SATA system, and not limited by USB, so any other ideas? I never heard back...

What about slapping a brand new 1TB NTFS formatted SATAII drive (they are cheap after all) in the Windows box alongside the UFS drive? Start a flavour of Linux (such as Debian) in a virtual machine or as a live CD. Install this Linux-NTFS in the virtual machine or live CD and just copy everything to the new drive. Throw away the old drive or take it home to build your own Solaris system.

Then run your software on the data, NTFS to NTFS.

This is still 2-step, right? Translate data from UFS drive to NTFS drive and then process data? And this assumes a Linux utility that reads Solaris 8 and 10 UFS disks on the Linux box. But I guess it would hopefully save me some time in the UFS to NTFS transfer stage. What I really need is LV for Linux, and then some way to run the data extraction at the same time. Maybe...

Ok, but back to the Real World. I am unfortunately working with quite a few restraints. I'm not the person who will be transferring the scads of data (thank goodness -- there was 86 TB of data from the last test). It has to run off of someone else's PC. Or actually several other PCs.
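For a sense of scale, here is a quick Python calculation of what those transfer rates mean for a data set that size. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes), a single drive-to-drive stream running nonstop, and that all 86 TB would go through the same path; none of that is stated above, so treat the results as ballpark only.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(total_bytes: float, rate_bytes_per_s: float) -> float:
    """Days needed to move total_bytes at a constant rate."""
    return total_bytes / rate_bytes_per_s / SECONDS_PER_DAY


total = 86e12  # 86 TB, decimal units assumed

print(round(transfer_days(total, 25e6), 1))  # 39.8 days at 25 MB/s
print(round(transfer_days(total, 80e6), 1))  # 12.4 days at 80 MB/s
```

Spreading the work across several PCs divides those numbers accordingly, which is presumably why it runs on more than one.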

At this point I've installed UFS Explorer on all those PCs. I've installed my LV app to extract data from the files. The user sticks the UFS disk in a disk tray, runs UFS Explorer on the files to be processed to transfer them to a Windows/NTFS disk then runs my app on the Windows/NTFS files to extract the data.

I also tried the UFS Explorer tech support again to see if there's some way to run it (or any other product they might have) from the command line. Then I could set up a GUI that would make it at least look like 1 step to the User, even tho it wouldn't speed it up any. No response...

Probably missing something as per usual

No, I'm very appreciative of any stabs anyone wants to take at this. Thanks!

-

I think I've got it!

Resize the window first, then reposition the plots, then resize the plot area. Seems to be working, so far. Now I just have to clean up all the miscellaneous controls/indicators.

Thanks to Yair, François, asbo, and especially Ton!

-

Do you by chance resize the graph in multiple locations?

What code do you have, does our snippet work?

The User resizes the entire window manually which resizes the group of graphs. The code only resizes programmatically in one place.

Yes, your snippet works.

Part of the issue, I think, is that not only am I resizing the graphs, I'm also moving them (back to their original positions). And to make it more complicated, all those graphs are in a group that resizes with the pane size. And of course, I'm also resizing the window to its original size...

I actually discovered just resizing the window to its original size gets at least the graphs fairly close. Unfortunately there are a lot of other controls/indicators on the window that end up in the wrong place, so I've got to tie them down. I also need it to be more than just fairly close, so I'll work some more with incorporating your snippet.

I think my main problem at the moment is doing things in the right order. And I would love to get a reference to my group of graphs so I could turn "resize with pane" off and on...

-

I tried it in 8.6 with a graph, xy-graph and chart and in all cases the PlotAreaSize property resizes the bounds as well.

Hmm. I obviously need to figure out why it's not working for me...

I was hoping it was something that would only work in LV9 and then I'd have an actual reason to upgrade.

-

I think you are looking for the 'PlotAreaSize' and 'PlotAreaPosition' properties; these seem to do what you want:

<Plug>:

have you looked at my Resize/Align/Distribute toolset?

</plug>

Ton

Love those humongous graphics...

PlotArea functions just resize the plot area (the black part) not the whole graph (the outside grey part).

I will take a look at your toolset now!

-

It's always the little things that getcha...

Short version: I need to be able to programmatically resize a graph at run time.

For the long version, go here. I've got 18 graphs, with anywhere from 1 (big graph) to 4 (quarter-sized graphs) of them visible at any one time. When one screen-full of graphs gets resized (by the User manipulating the window), they all have to be resized, even the ones that are hidden.

After resizing a few times, a lot of the other controls and indicators on the screen tend to get out of whack -- they move or stretch or whatever. I want to be able to hit a "reset" button that puts everything back to the baseline size/position. I thought I could use Position and Bounds to do this, but while Position works fine, Bounds is read-only. Using any of the "Plot Area" functions does just that -- resizes the plot area but the graph itself does not change size.

Tell me there's a simple solution to this that I haven't been able to come up with (or found doing a search here)...

-

Probably the best thing to do would be to test your system as early in the build as you can and measure the performance time.

Thanks for the info. I plan on hitting the run button today. Results over in the link I cited above...

-

I achieved an effect similar to what you are describing by placing all of my charts in an xcontrol with appropriate, draggable splitters. Each chart was set to "Fill Control to Pane" and then, as far as the top level VI was concerned there was only 1 control to resize (which seems to work MUCH nicer).

This was kind of the effect I was hoping to have using tabs.

I haven't used xcontrols much since they first came out. If you don't mind, take a look at the first paragraph of my original post. I need to show either 1 chart OR 2 charts OR 3 or 4 charts at one time. The user can switch back and forth between 2 different types of those sets, with only 1 type at a time on the screen. And the 3rd set is two charts of different sizes. Can I do this automagically with xcontrols?

-

Hazaah! See you at the NI-Week LAVA BBQ

You'll be the guy in black tie and tux with the smarmy-looking grin, right?

Needed: 1Gbit ethernet hub

in LAVA Lounge

Posted

While the details aren't exact, you have the general idea... I'm sure you all will visit me in prison, right? Right...?!?

And unfortunately, *they* don't need anything -- *their* network is set up and certified. We stoopidly let another group convince us they (a different *they*) were taking care of the network connections. The "no switches" bomb was just dropped on us last week, and we're loading the system at some undisclosable time in the very near future.

Oh well, the analysts always ask for more data than they really need. I'm sure just getting half of it will be fine...................