Posts posted by Cat

-

-

I don't want to split hairs, but it's worth mentioning that because these methods have to load the whole file into memory, they'll only work well for files up to, let's say, 100-200 MB.

Considering the 4 copies in memory that might happen, I'd be lucky to get that 200MB...

You are right; this method is definitely for handling small files. The vast majority of the time, I use it for editing configuration files.

-

Thanks, just want to know how people handle this.

BTW, how do you handle replacing the file contents? If you just write text to the file, it gets appended after the end of the original contents; if you write from the start of the file, a leftover line from the original remains at the end of the new file. I delete the original file and create a new one to avoid this.

I do the same thing Shaun suggested. It would be really nice if LV had a "Replace a Line in a String" function. Even better yet if it had a "replace last line in string" option. I've written my own vi for the latter. Sometimes it works and sometimes it doesn't.

I don't delete the original file, but use "Create or replace" when writing the file contents back.
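For readers outside LabVIEW, the read-modify-rewrite approach discussed here might look something like the following Python sketch. The function names (`replace_line`, `delete_line`) are made up for illustration, and the whole file is held in memory, so it is only meant for small files such as configuration files. Opening the file for writing truncates it first, which plays the same role as "Create or replace": no stale trailing line is left behind.

```python
from pathlib import Path


def _rewrite(path, lines):
    # write_text opens the file in "w" mode, which truncates it before
    # writing, so nothing from the old contents can be left at the end.
    Path(path).write_text("\n".join(lines) + ("\n" if lines else ""))


def replace_line(path, index, new_text):
    """Replace one line of a small text file (index -1 is the last line)."""
    lines = Path(path).read_text().splitlines()  # whole file in memory
    lines[index] = new_text
    _rewrite(path, lines)


def delete_line(path, index):
    """Delete one line of a small text file."""
    lines = Path(path).read_text().splitlines()
    del lines[index]
    _rewrite(path, lines)
```

Negative indices count from the end, so `replace_line(path, -1, "...")` covers the "replace last line" case mentioned above.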

-

OMG! It's a really really good thing I can't get those videos at work! I just got to LMAO in the privacy of my own home.

-

She's beautiful! Congrats!

-

I was hoping RTF might do it, but that only works in MSWord.

If your target platform is Excel 2007, that version uses a form of XML encoding. If you want to do this the hard way, Microsoft probably has file format info on their website. Otherwise, ActiveX and/or Excel macros are good suggestions.

-

How long are you usually out for?

Usually 5 days. The longest was a week. A really looong week. Electric Boat was running our system but didn't want us to touch it unless something went wrong. It was working fine so I had absolutely nothing to do and nowhere to go for a week. Oh, and that was also the trip where, due to oddities in the way federal employees get paid, I didn't get paid for the last two days.

The fun part was that it was a "Builder's Trial", the big test before the shipyard turns the boat over to the Navy, and only the second time the sub had been out to sea. On a Builder's Trial they take the boat out and "see what she can do." So we did lots of fast dives, hard turns, etc. It was kinda fun (I was watching stuff fly around the Crew's Mess at one point), as long as I didn't let myself think about what would happen if Something Important broke.

Meh... feelings are overrated.

I'll bring that point up to my boss.

-

I would be breaking things left and right.

"What!? I have to go out again?"

That's what I thought, too. Then I had to do it. Over and over.

If you like hot, noisy, smelly, confined, crowded spaces, it's definitely the place to be. Oh and if you don't mind being trapped in a tin can a few hundred feet deep in the ocean with no hope of survival if anything goes wrong.

I've managed to avoid riding for over a year but my number may be up this spring. I have a new system that's going on board for the first time and I (unfortunately) feel obligated to go with it and make sure it's working right on the actual test platform.

-

Suggestion from LV Architect: How about an XControl whose data type is a Data Value Reference of your array and you manage the display of the data that way...

AQ fills in details:

So basically, you read your data from disk, and then use the New Data Value Reference to stuff the data into the DVR. Then you write that to the FPTerminal of a custom XControl. That custom XControl has an array display with the array index hidden. Instead, you have a separate numeric control. You grab subsets of the array to display and change which subset you get each time the user changes the value in the numeric control. This should get you down to just 1 copy of the entire array with copies of some very small subset (however big you make the display on the FP of that XControl).

Unfortunately I have close to 2000 vis I have to retest every time I upgrade. If they don't all still work it may mean a million dollar test coming to a screeching halt. Or even worse, it may mean I have to ride a submarine again.

So The Big Boss is very hesitant to allow upgrading. I snuck 8.6.1 in by saying it was really just a minor mod to 8.2.....

I know nothing about DVRs (other than what little I've gleaned from LAVA), but I will take a look at them on the system I have upgraded to LV9. I'll need to output the data to a graph, so displaying a little bit at a time isn't really an option -- will this method still help cut down on data copies in that case?
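As a rough text-language analogy for the windowed display AQ describes (one full copy of the data, plus a small copy of whatever subset is on screen), here is a minimal Python sketch. It says nothing about how DVRs or XControls behave internally; `WindowedView` and its parameters are hypothetical names, and `memoryview` simply stands in for "a reference to the one real copy".

```python
from array import array


class WindowedView:
    """Hold a reference to one large buffer and copy out only small windows."""

    def __init__(self, data, window):
        self._view = memoryview(data)  # references the buffer, no copy made
        self.window = window

    def page(self, start):
        # Clamp so the window always stays inside the data.
        last_start = max(0, len(self._view) - self.window)
        start = max(0, min(start, last_start))
        # Only this small slice is copied (into a list for display).
        return self._view[start:start + self.window].tolist()


if __name__ == "__main__":
    big = array("d", range(1_000_000))  # the single full copy
    view = WindowedView(big, window=10)
    print(view.page(500))  # what the "numeric control" would select
```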

-

Can you try both examples with Synchronous display? I would guess that the reference forces the transfer copy and we have another copy still unknown.

Having "Synchronous display" checked or unchecked makes no difference to the number of data copies made. Unfortunately...

-

I don't get it. Why would I wash the food out of a bag that I'm just going to put food in?

If the food that was in the bag was moldy

If you were putting something dry (eg, crackers) into a bag that had had something wet (eg, spaghetti sauce) in it

-

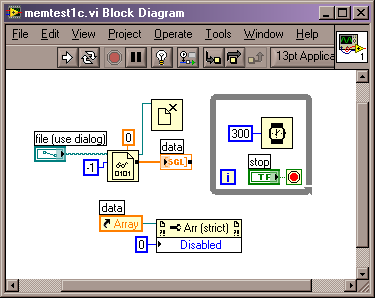

So, now that AQ has convinced me I'm going to have 3 copies of my data on any open and running vi no matter what I do, here's Q#2:

This should look familiar to those of you following the first discussion. It creates 3 copies of the data:

But when I add a reference to the code as in here:

It creates four copies of the data.

This is what really started me down the road of trying to figure this out. I was seeing 4 copies of the data in my (much larger) application. So I started deleting little parts of it, saving, exiting, and running to see when the number of copies would drop. The last thing to go (other than the read file and send to graph part) was a reference to the graph that was changing the label. Once I deleted that and ran it, the number of copies dropped to 3. As it turns out, you don't have to connect the reference to anything -- just dropping it on the BD and letting it hang there also creates that fourth data copy.

Should I expect this??

I know that if I use the Value property of the array a new copy may be made (I say "may" because if you connect the read file to a Value property of the array instead of the array terminal, the # of copies stays at 3). But should I expect a copy of the data to be made just by using some non-Value property of the array?

Cat

-

We had a similar problem with large images. Our solution was to view at different resolutions (i.e decimate) and load from disk only the sections of the image the user could see as he zoomed in rather than try to keep the whole image in memory.

Yeah, I've been bouncing something like this around in my head for a while. I haven't been able to do it with graphs I've decimated in the past, because they are drawn in "real time". There's no historical data buffer to go back to and zoom in on. But my current application is a static file, so it should be doable.
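One common way to decimate for display while keeping transients visible is min/max decimation: split the visible range into buckets and keep each bucket's minimum and maximum. The sketch below is only an illustration of that idea, not what either poster actually implemented; `decimate_minmax` and `n_buckets` are hypothetical names.

```python
def decimate_minmax(samples, n_buckets):
    """Reduce samples to at most 2 * n_buckets points, keeping extremes."""
    if n_buckets <= 0:
        raise ValueError("n_buckets must be positive")
    n = len(samples)
    if n <= 2 * n_buckets:
        # Already small enough: show every sample (sample-level resolution).
        return list(samples)
    out = []
    for b in range(n_buckets):
        lo = b * n // n_buckets
        hi = (b + 1) * n // n_buckets
        chunk = samples[lo:hi]
        # Keeping both extremes preserves narrow spikes in the plot.
        out.append(min(chunk))
        out.append(max(chunk))
    return out
```

Zooming in then amounts to calling the same function on a narrower slice, for example `decimate_minmax(samples[start:stop], n_buckets)`. Once the slice holds no more than `2 * n_buckets` samples, every sample is returned unchanged.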

-

You have the FP open when running this VI. That means that you have the buffer coming from the Read node, the Operate data and the Transfer data.

Do this: Close the VI so it leaves memory (that deallocates all the data). Open a new VI. Drop your current VI on the diagram as a subVI and then run the new VI. You should only see the one copy of the data that is coming from the Read node.

Yes, I agree, there is only 1 copy made of the data. Or 0 copies if the subvi deallocates memory. But attach the array in the subvi to a control in the main vi and 3 copies are made. Given no local variables, no references, no updating -- I'm still not sure where they are all coming from.

Because that one copy is the already allocated buffer for when you run the VI again. In other words, if you run the VI a second time, you won't take a performance penalty having to allocate that first buffer because LV will use the one already allocated, assuming it is the size needed. Running thousands of VIs you'll find that most of the time arrays and strings are the same size every time they go through a given block diagram. LV leaves the dataspace allocations allocated all the way through the diagram because for repeated runs, especially direct runs of the VI that happen when you're testing and debugging your VIs, the data is the same size and you don't have to do the allocation every time.

It sounds to me like what you're saying is that LV sees that 150MB worth of data has entered a vi from somewhere and it decides to make a 150MB buffer for that vi. Then when the data actually goes somewhere (ie, gets wired to a control) it passes thru that buffer, but that buffer is not used for making the 2 usual copies of the data for the control; 2 more copies are added. I've been saved the time of having to allocate 150MB of memory next time I run the program, but in the meantime, my 150MB of data is eating up almost a half a Gig of memory.

Am I getting close??

Do you agree that Aristos has answered the mysterious parts of the challenge and now you have to work with that limitation?

We may be getting close...

If yes, then you may want to consider Dr. Damien's paper on managing large data sets that can be found here.

Thanks for the suggestion, but I read that article before bugging you all with this. I actually do decimation on some of my graphs, but in this case I can't. It often gets used for transient analysis -- where 2 or more plots are compared in time -- and the user needs to get down to sample-level resolution.

-

Why can't you read the file in chunks and simply build up the data to pass to the graph? You could preallocate the array used for the graph data and replace it as you read from the file. Once all the data has been read pass the entire data set to the graph for display.

I was going to ask you if that's what you meant, but then I thought that wouldn't help any because I'd be making a copy (or more) for the array and a copy (or more) for the graph. At this point I'm not sure if that's not just going to make matters worse. But I can give it a try.

If I do the read in a subvi and deallocate, that works fine (1 copy made), it's just when that output gets put into the control on the calling vi that extra copies are made.
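In a text language, the "preallocate, then fill in chunks" suggestion could be sketched as below. This is only an analogy: it shows one destination buffer being filled in place, and says nothing about the extra copies LabVIEW makes when the data reaches a front panel control. The function name and the `chunk_bytes` default are assumptions.

```python
import os


def read_into_preallocated(path, chunk_bytes=1 << 20):
    """Read a file chunk by chunk into a single preallocated buffer."""
    size = os.path.getsize(path)
    buf = bytearray(size)        # allocated once, up front
    view = memoryview(buf)       # slicing a memoryview does not copy
    offset = 0
    with open(path, "rb") as f:
        while offset < size:
            # readinto writes directly into the destination slice.
            n = f.readinto(view[offset:offset + chunk_bytes])
            if not n:
                break            # file was shorter than expected
            offset += n
    return buf
```

If the file holds raw 8-byte floats, `memoryview(buf).cast("d")` gives a numeric view of the same memory without another copy, provided the size is a multiple of 8.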

-

When working with large files like this I generally try to read the file in chunks.

I agree, and if I wasn't outputting this to a graph, I would be reading in chunks.

This really all started because my users have been getting "out of memory" errors when running one of my analysis programs. I went into it to see if I could make it more efficient, and kept running into copies of data where I didn't think I was making any.

I'm going to have to break the files up anyway, in a macro sense. These files can get quite large and impossible to read on a generic laptop. However, I would like to break it up as little as possible for better usability.

-

If you drop a "deallocate" and let that code stop (to let the deallocate work), does the memory drop? I'm thinking the transfer buffer is the extra copy.

Ben

Whether I deallocate or not, when I stop the vi the memory drops by about ~150MB, ie, I'm down to two copies of the data in memory. In another version of this code, I took the loop out of the code I attached above, and turned the graph into a plain array. Then I called that vi from another vi and attached it to an array on the calling vi. I did this because I thought there might be something with the Read function that was holding on to extra data (I know, I was grasping at straws). I deallocated the memory in the subvi. It actually did deallocate -- I was pleasantly surprised, since I haven't had a whole lot of luck using that function in the past.

I put the call to the subvi in a sequence where I call it and wait 10 seconds after it returns -- passing the data wire thru that frame. Up to that point only 1 copy of the data is made. In the last frame, the wire is connected to the array terminal. As soon as program flow gets to that last frame, two more copies of the data are made. I understand LV makes 2 copies of FP controls, but why isn't it reusing the copy that's there not doing anything else?

-

Any control represents as many as 5 copies of the data.

5 copies. Yeesh.

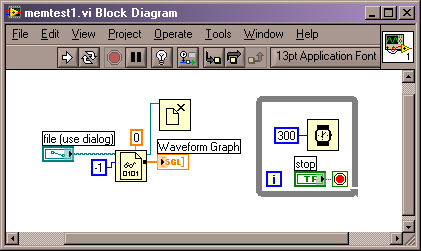

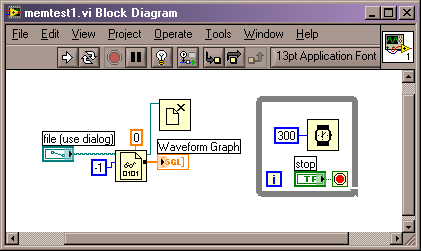

I'm attaching a pic of an example BD. All it does is read a file (~150MBytes) and send it to a waveform graph. The loop is there to keep the vi "alive". The default of all controls/indicators is "empty". There have been no edits done since the last save (in fact, I've been editing, saving, exiting LV, and starting the vi clean, just to make sure there are no residual memory or undo issues).

When the vi is opened, LabVIEW is showing 81MB of memory usage. After it runs and is in steady state, LabVIEW shows ~537MB of memory usage. This would be, I assume, three copies of the data. Where are they all coming from? I've got "Show Buffer Allocations" turned on for arrays in the pic, and there's only 1 little black box showing.
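The three-copy estimate follows from the reported numbers, taking the file size as roughly 150 MB:

$$
\frac{537\ \text{MB} - 81\ \text{MB}}{150\ \text{MB}} = \frac{456}{150} \approx 3.04
$$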

I'm running 8.6.1, if that makes a difference.

-

I have a vi that opens a file, reads it, and puts the data in a waveform graph. How many total copies of the data should be floating around after it does all that? I had thought it was 2, but it seems to be 3. This is not playing well with my Very Large data sets. And it's really bad when I need multiple data sets displayed at the same time.

I tried moving the read to a subvi, deallocating memory (which actually seems to work), and then passing the data to the waveform on the main vi. Still 3 copies.

Is my memory going along with my computer's?

Cat

-

Something horrific like c*l*r ?

Aha! Now I understand why it disappeared.

-

Netscape's failure is fascinating, and pretty well documented.

Among other things, they decided to re-write their browser from scratch, "the right way". It ended up more than a year late, during which time Microsoft took the entire market.

I was a devoted Netscape user until they did their browser "the right way". Then I gritted my teeth and switched to IE. And it had nothing to do with waiting for the new version. It was solely because to Netscape, "right way" = "bloatware". They tried to become everything to everybody and along the way lost their most important product -- a really decent web browser. So they lost me as a customer.

There's a lesson in there somewhere for software designers...

-

Out-of-town visitors appear to be surprised that Pittsburgh is no longer "hell with the lid off".

I heard that quote on the news last night.

I was pleasantly surprised by how Pittsburgh looked. Maybe next time I'm flying thru there, I'll stop and tour around for a while.

Cat

-

I've been playing around with this some more (I'm too sleepy to do any real work).

If you drop an I32 constant on the BD and manually change it to a SGL, it defaults to 6 digits of precision (enough to make 1.299999etc look like 1.3). If you drop an I32 constant on the BD and manually change it to a DBL, it sets it to 13 digits of precision. So when LV automatically converts the I32 constant to a DBL and then you convert it to a SGL, it must keep the 13 digits of precision, and your 1.3 now looks like 1.299999etc

All of this is how it works on my system anyway; YMMV.

Okay, time to do something useful for the Fleet...

Cat

-

Set your 1.3 constant to 10+ significant digits and it will display that it's actually equal to 1.29999995231...

Because of what Rolf said, 1.5 can be represented exactly and 1.3 cannot.

Cat
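The same effect can be reproduced outside LabVIEW. The Python sketch below rounds a value to IEEE 754 single precision (the equivalent of SGL) and prints it at 6 and 13 significant digits; `to_single` is a helper name invented for this example.

```python
import struct


def to_single(x):
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


if __name__ == "__main__":
    for value in (1.3, 1.5):
        s = to_single(value)
        # 6 significant digits hides the error; 13 digits exposes it.
        print(value, format(s, ".6g"), format(s, ".13g"), s == value)
```

1.5 is 1 + 1/2, so it has an exact binary representation and survives the round trip; 1.3 does not, and its nearest single-precision neighbour is about 1.29999995231.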

-

pop-up Advanced >>> customize

Go to tweezer mode and re-size.

Thanks, Ben!

I haven't had any reason to customize a control in years and completely forgot about that.

Delete a line in a file

in LabVIEW General

Posted

I've written and deleted a few rants...