Showing results for tags 'timestamp'.

-

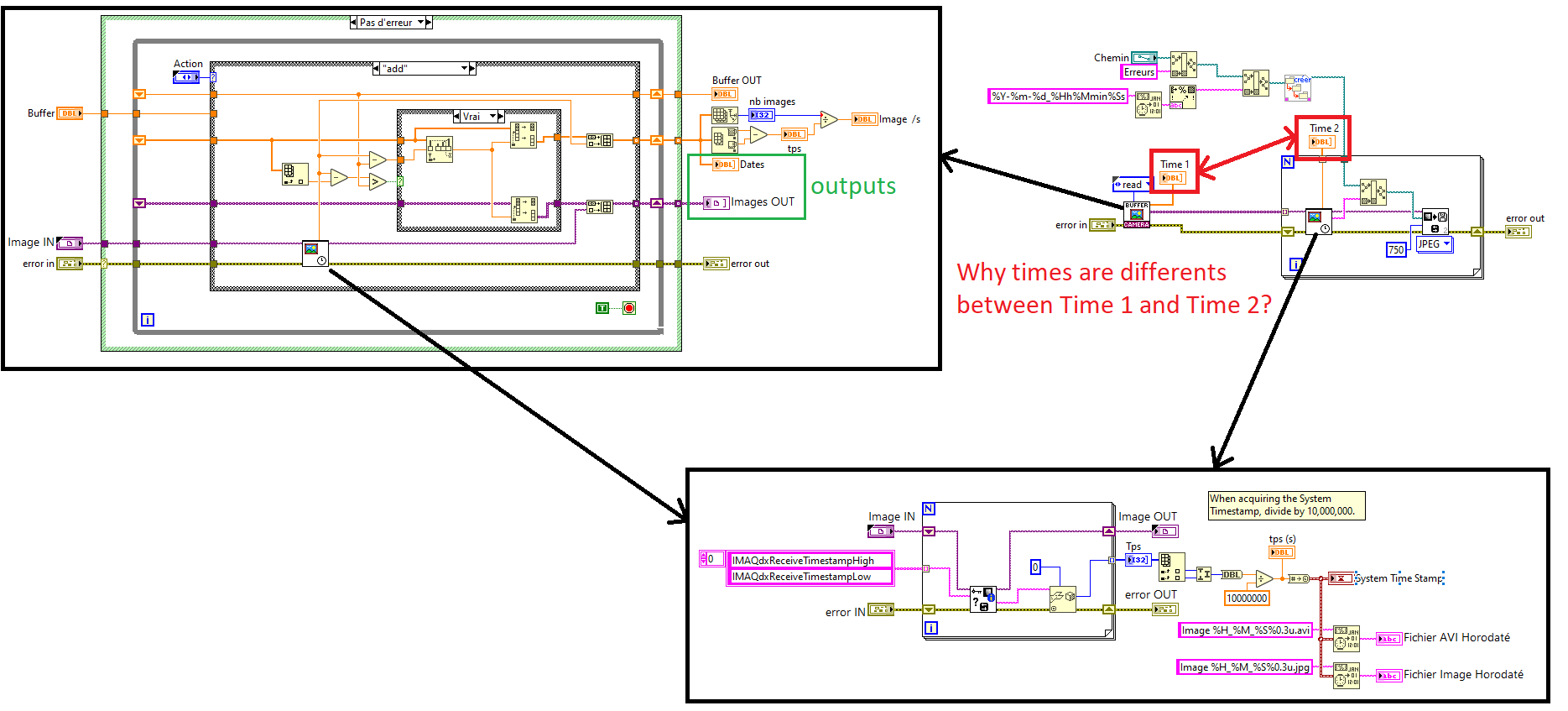

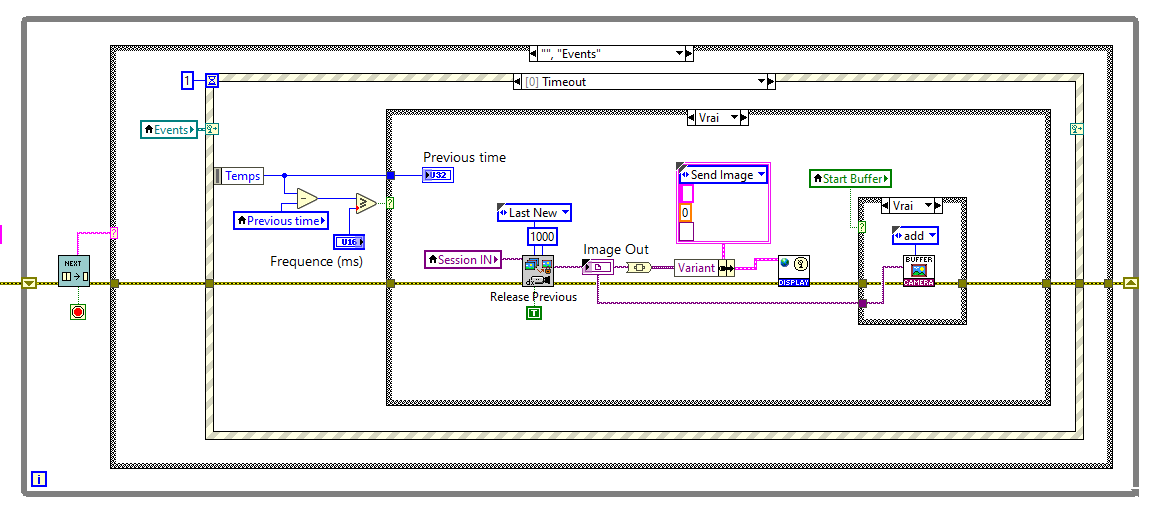

Hello, I have a Basler GigE camera. I have created a ring acquisition like the IMAQdx example with a circular buffer. I load an image every 15 ms and add it to an FGV-type VI (cf. Stock Images.png). In the FGV VI, I create two tables: one for the timestamps and the second for the images. I use the timestamp table just to keep the last 15 s. If my test crashes, I save the images table. If I use the VI to look at the timestamps of the images in my table, they are not the same timestamps as when I added the images to the table (cf. Save Images.png). Why? Are the properties IMAQdxReceiveTimestampHigh and IMAQdxReceiveTimestampLow not attached to the images? I hope you understand my English 🙂 (thank you deepl) François A.
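One way to keep each timestamp tied to its image is to store the two as a single record instead of in two parallel tables. The sketch below uses Python rather than LabVIEW, and the names `combine_timestamp` and `TimedRing` are hypothetical. It assumes the high and low values are the upper and lower 32 bits of one 64-bit counter, and that the window is expressed in the same units as that counter.

```python
from collections import deque


def combine_timestamp(high: int, low: int) -> int:
    """Join two 32-bit words into one 64-bit value (assumed layout)."""
    return ((high & 0xFFFFFFFF) << 32) | (low & 0xFFFFFFFF)


class TimedRing:
    """Keeps (timestamp, image) pairs no older than `window` time units."""

    def __init__(self, window: int):
        self.window = window
        self.items = deque()

    def add(self, timestamp: int, image) -> None:
        # Store the timestamp captured at acquisition time with the image.
        self.items.append((timestamp, image))
        # Drop entries that fall outside the window, oldest first.
        while self.items and timestamp - self.items[0][0] > self.window:
            self.items.popleft()

    def snapshot(self):
        """Return the stored pairs, e.g. for saving after a failure."""
        return list(self.items)
```

Because the timestamp is read once when the image arrives and then travels with it, a later save does not depend on what the image reference reports at that moment.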

- 3 replies

-

- imaqdxreceivetimestamplow

- imaqdxreceivetimestamphigh

-

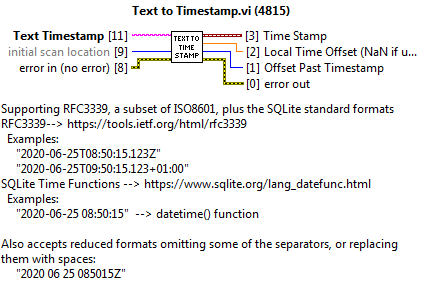

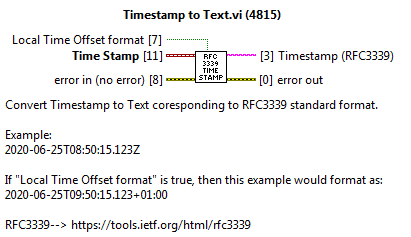

In an attempt to standardize how I format timestamps as text, I have added functions to "JDP Science Common Utilities" (a VI support package, on the Tools Network). These are used by SQLite Library (version just released) and JSONtext (next release), but they can also be used by themselves (LabVIEW 2013+). They follow RFC 3339 and support local-time offsets.
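For readers unfamiliar with the format, here is a minimal Python sketch of RFC 3339 text with a local-time offset. It is not the package's implementation; the function names `to_rfc3339` and `from_rfc3339` and the millisecond precision are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone


def to_rfc3339(dt: datetime) -> str:
    """Format an offset-aware datetime as RFC 3339 text with a numeric offset."""
    if dt.tzinfo is None:
        raise ValueError("an offset-aware datetime is required")
    return dt.isoformat(timespec="milliseconds")


def from_rfc3339(text: str) -> datetime:
    """Parse RFC 3339 text; a trailing "Z" is treated as +00:00."""
    # Older Python versions of fromisoformat() do not accept "Z" directly.
    return datetime.fromisoformat(text.replace("Z", "+00:00"))


local = datetime(2020, 5, 17, 14, 30, 15, 250000,
                 tzinfo=timezone(timedelta(hours=2)))
text = to_rfc3339(local)
print(text)  # 2020-05-17T14:30:15.250+02:00
```

The offset suffix is what lets a reader recover the same instant regardless of the time zone in which the text was written.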

-

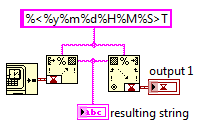

A part of my code converts a time stamp into a string. Another part will have to read this string and convert it back to a time stamp. However, when I run this quick example I've created, I get an error. Does anybody know what I'm missing? Thanks
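The cause of the error is not visible from the text alone. One common source of round-trip failures, offered here only as an assumption, is that the writer and the reader use different format strings. A minimal Python sketch of sharing a single format between both directions (the names `FMT`, `stamp_to_text`, and `text_to_stamp` are hypothetical):

```python
from datetime import datetime

# One format string shared by the writer and the reader.
FMT = "%Y-%m-%d %H:%M:%S.%f"


def stamp_to_text(dt: datetime) -> str:
    return dt.strftime(FMT)


def text_to_stamp(text: str) -> datetime:
    # Raises ValueError if the text does not match FMT exactly.
    return datetime.strptime(text, FMT)


original = datetime(2015, 3, 4, 9, 8, 7, 123456)
restored = text_to_stamp(stamp_to_text(original))
```

Text written with any other layout, such as `04/03/2015 09:08`, is rejected by `text_to_stamp` instead of being silently misread.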

-

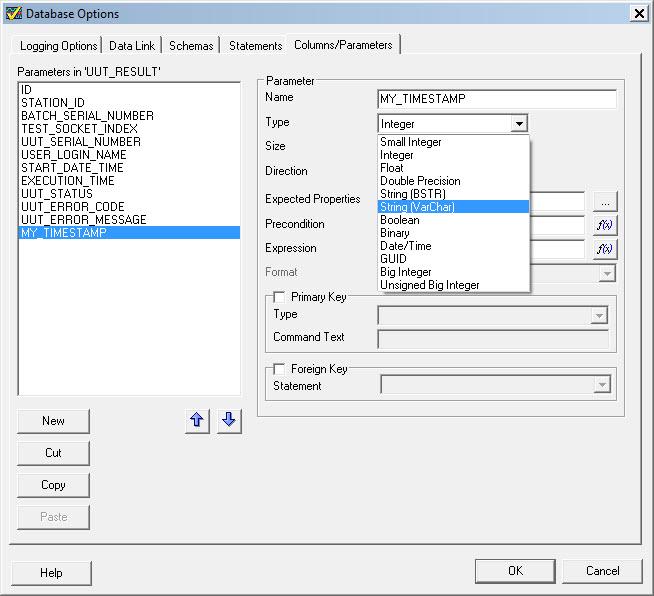

My new job has me starting from scratch DB-wise. I worked with a homegrown schema for many years, but it doesn't match my needs here and I want to make this supportable long term (for the next poor soul), so I was thinking of using the out-of-box schema with MySQL. I've got MySQL 5.1 and TS 2010 installed; the TS 2010 MySQL implementation uses INSERT statements which lack the one thing I really want, a timestamp data type. I think this may be because MySQL, at the time TS 2010 was developed, only had a date-time data type. Does anyone know if a newer version of TestStand includes support for a timestamp data type with the MySQL template?

-

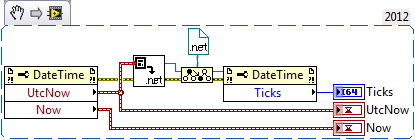

Hi, I need the UTC time and to convert it to ticks. I use this code (LV2012/DotNet 2.0): Why is the Now time (local) the same as the UTC time? There should be a difference of 2 hours! I made a program in C# and it shows the right time (2 hours between local and UTC). Regards, Bjarne
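For reference, a .NET tick is 100 ns, counted from 0001-01-01T00:00:00. The Python sketch below computes that count for an offset-aware time, so local and UTC representations of the same instant give the same number. It does not diagnose the LabVIEW code in question; `utc_ticks` is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone

DOTNET_EPOCH = datetime(1, 1, 1, tzinfo=timezone.utc)


def utc_ticks(dt: datetime) -> int:
    """Return 100 ns ticks since 0001-01-01T00:00:00Z for an aware datetime."""
    if dt.tzinfo is None:
        raise ValueError("naive datetime: local or UTC is ambiguous")
    delta = dt.astimezone(timezone.utc) - DOTNET_EPOCH
    # Integer division keeps the result exact; 1 microsecond = 10 ticks.
    return (delta // timedelta(microseconds=1)) * 10


local = datetime(2013, 6, 1, 14, 0, tzinfo=timezone(timedelta(hours=2)))
utc = datetime(2013, 6, 1, 12, 0, tzinfo=timezone.utc)
same_instant = utc_ticks(local) == utc_ticks(utc)
```

If the local wall-clock reading were mislabeled as UTC, the tick counts would differ by exactly two hours, that is 72,000,000,000 ticks.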

-

Greetings, I've been playing around with the timestamp recently and I'd like to throw out some of my findings to see if they're expected behavior or not.

One issue is that if you call Get Date/Time in Seconds, the minimum interval is ~1 ms. Now this is much better than ~2009, when the minimum interval was ~14 ms, if I recall correctly. Meaning, even if you call Get Date/Time in Seconds a million times in a second, you only get ~1000 updates.

The other oddities involve converting to double or extended and from double/extended back to timestamp. It seems that when converting to double, at least with a current-day timestamp, your resolution is cut off at ~5e-7 (0.5 us). This is expected given that you only have so many significant digits in a double and a lot of them are eaten up by the ~3.4 billion seconds that have elapsed since 7:00PM 12-31-1903 (any story behind that start date, btw?). However, when you convert to extended, you get that same double-level truncation; no extra resolution is gained.

Now, when you convert from a double to a timestamp, you have that same 0.5 us step size. When you convert an extended to a timestamp, you get an improved resolution of 2e-10 (0.2 ns). However, the internal representation of the timestamp (which, as far as I know, is a 128-bit floating point) allows down to ~5e-20 (this is all modern era with 3.4 billion to the left of the decimal).

One other oddity: if you convert a timestamp to a date-time record instead of an extended number (which gets treated like a double as noted above), you once again get the 2e-10 step size in the fractional second field.

I've tried a few different things to "crack the nut" on a timestamp, like flattening to string or changing to variant and back to extended, but I've been unable to figure out how to access the full resolution except via the control face itself. Attached is one of my experimentation VIs. You can vary the increment size and see where the arrays actually start recording changes.

Ah, one final thing I decided to try: if I take the difference of two timestamps, the resulting double will resolve a difference all the way down to 5e-20, which is about the step size I figured the internal representation would hold.

I would like to be able to uncover the full resolution of the timestamp for various timing functions and circular buffer lookups, and to resolve the math down to, say, 100 MHz or 1e-8. I guess I can work around this using the fractional part of the date-time record, but it would be nice to be able to just use the timestamps directly without a bunch of intermediary functions. Time Tester.vi
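The step sizes reported above line up with simple significand arithmetic. The Python sketch below reproduces them under stated assumptions: a double has a 53-bit significand, an x87-style extended has 64 bits, and the timestamp is assumed to carry a 64-bit fractional-second field. The helper name `step` is hypothetical.

```python
import math


def step(value: float, significand_bits: int) -> float:
    """Spacing between adjacent representable numbers near `value`."""
    # frexp gives value = m * 2**exponent with 0.5 <= m < 1.
    _, exponent = math.frexp(value)
    return 2.0 ** (exponent - significand_bits)


now_seconds = 3.4e9  # approximate seconds since the epoch, per the post

double_step = step(now_seconds, 53)     # about 4.8e-7 s
extended_step = step(now_seconds, 64)   # about 2.3e-10 s
fraction_step = 2.0 ** -64              # about 5.4e-20 s (assumed 64-bit fraction)

print(f"double:   {double_step:.2e}")
print(f"extended: {extended_step:.2e}")
print(f"fraction: {fraction_step:.2e}")
```

This also suggests why a difference of two timestamps resolves so finely: the large whole-second part cancels before the value is stored in a double, so the significand bits are spent on the small remainder.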

-

Hi, I'm plotting a bar graph which takes only double data as input. My X-axis should be the timestamp and my Y-axis is the double data. But I can't figure out any way to convert the timestamp to a double so that I can use it as an input. Any suggestions? Regards, Runjhun A.
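The general idea is that a timestamp can be represented as a count of seconds since a fixed epoch, which is an ordinary double. A minimal Python sketch, assuming the LabVIEW epoch of 1904-01-01 00:00:00 UTC (the names `LABVIEW_EPOCH` and `to_seconds` are hypothetical):

```python
from datetime import datetime, timezone

LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)


def to_seconds(dt: datetime) -> float:
    """Seconds elapsed since the epoch, usable as a numeric X value."""
    return (dt - LABVIEW_EPOCH).total_seconds()


samples = [
    (datetime(2014, 1, 1, 12, 0, 0, tzinfo=timezone.utc), 1.5),
    (datetime(2014, 1, 1, 12, 0, 10, tzinfo=timezone.utc), 2.5),
]
x_values = [to_seconds(t) for t, _ in samples]
y_values = [y for _, y in samples]
```

The X values are then plain doubles; the axis display format, not the data, determines whether they are shown as dates and times.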