Leaderboard

Popular Content

Showing content with the highest reputation on 07/18/2011 in all areas

-

The list should always be sorted. I have filed CAR 308287 to update the documentation accordingly.

2 points

-

If you want to draw with good performance, the LabVIEW 3D Picture control uses OpenGL, which should run on your machine's GPU. The learning curve looks a bit intimidating, but I think once you get going it won't be too bad.

1 point

-

There's a fairly key resource maintained on ni.com: http://www.ni.com/LargeApps

Lots of good info on that page, but the real gem for me is the link "Read technical series". Anyone writing any significant VI hierarchy should have at least browsed this encyclopedia, just so you know that it exists when you have a specific question.

1 point

-

It's quite a shame really, because Shared Variables held such promise, but we now use them as little as we can get away with. We've been burned repeatedly by their shortcomings, and I feel we've been let down by NI Support when we've tried to get issues resolved. These are issues that still carry several CARs around. For instance, every Shared Variable in an SV-lib inherits the error message from any member SV that has experienced a buffer overflow: the error emerges from all the SV nodes when any single node experiences it. NI have known about that for years now, but won't fix it. And the update rate performance specifications aren't transferable to real life.

SVs are quite limited with regard to dynamic IPs. We use DataSocket to connect to SVs on a target with a dynamic IP, but DataSocket holds its own bag of problems (it needs the root loop to execute), and it's not truly flexible enough anyway. A Real-Time application can't deploy SVs on its own programmatically, etc.

At first I also saw needing the DSC module for SV event support as a cheap shot, but there will always be differentiation in features between product package levels. Coupling SVs with events yourself isn't that hard, and even if you need the DSC module (the tag engine for instance), it's not that expensive. Many of our projects carry multi-million dollar budgets anyway, so a few bucks for the DSC module won't kill anybody. But losing data will.

We've for instance battled heavily with SVs undeploying themselves on our PXI targets under heavy load, without anyone being able to come up with an explanation. We've had NI application engineers look at it over the course of about 2 years, with no ideas. And then at this year's CLA Summit an NI guy (don't remember who) said out loud that Shared Variables were never designed for streaming and don't work reliably for that; use Network Streams instead. Well, thanks for that, except that message comes 3 years too late. And Network Streams use the SVE as well, so I can't trust them either.

I started TCPIP-Link because we can no longer live with SVs, but we need the functionality. Now TCPIP-Link will be used for all our network communication. If the distribution of TCPIP-Link follows the rest of CIM's toolset policy (with a select few exceptions), it will be available with full source code included. But I'm not sure that'll be the case. Even if NI don't adopt TCPIP-Link, and even if CIM never release it for external use (I can't see both not happening, but you never know), I can always publish a video on YouTube where I pit SVs and Network Streams against TCPIP-Link: who'll finish first when the task is to deploy the network data transfer functionality to a cRIO and get 10 gigs of data streamed off it without error?

But there isn't much debate coming off VIRegisters in this thread, is there? Did everybody go on holiday?

Cheers,
Steen

1 point

-

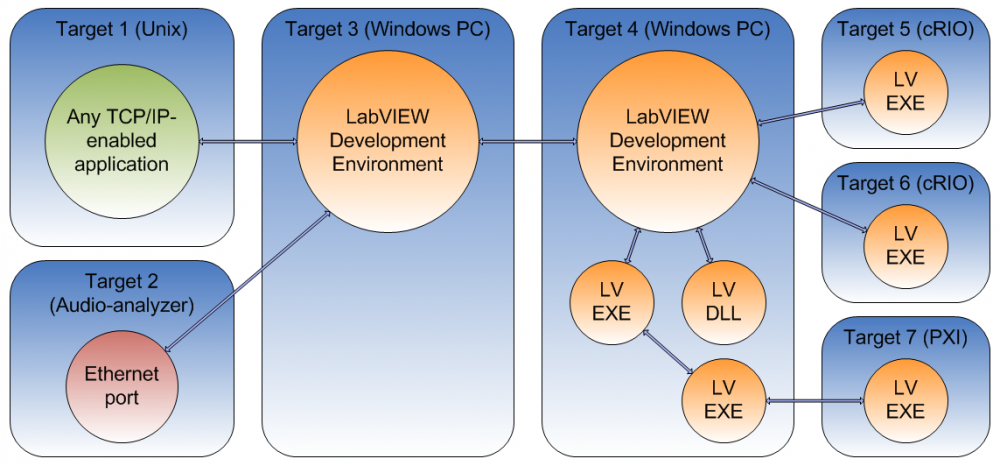

It is. It's basically a TCP/IP-based messaging toolset that I've spent the last 1½ years developing. I'm the architect and only developer on it, but my employer owns (most of) it. I spoke with Eli Kerry about it at the CLA Summit, and it might be presented to NI at NI Week if we manage to get our heads around what we want to do with it. But as it's not in the public domain, I unfortunately can't really share any code.

But this is what TCPIP-Link is (I'm probably forgetting some features):

A multi-connect server and single-connect client that maintain persistent connections with each other. That means they connect, and if the connection breaks they stay up and attempt to reconnect until the world ends (or until you stop one of the end-points). You can have any number of TCPIP-Link servers and clients running in your LabVIEW instance at a time.

Both server and client support TCP/IP connections with other TCPIP-Link parties (LabVIEW), as well as non-TCPIP-Link parties (LabVIEW or anything else, HW or SW). So you basically have a toolset for persistent connections with anything speaking TCP/IP.

Outgoing messages can be transmitted using one of four schemes: confirmation-of-transmission (no acknowledgement from the peer, just confirmation that the message went into the transmit buffer without error), confirmation-of-arrival (TCPIP-Link at the other end acknowledges the reception; this happens automatically), confirmation-of-delivery (you, in the receiving application, acknowledge reception; this is done with the TCPIP-Link API, and the message tells you if it needs a COD-ack), and a buffered streaming mode. The streaming mode works a bit like Shared Variables, but without the weight of the SVE.
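For anyone wanting to picture the "stay up and keep reconnecting" behaviour described above outside LabVIEW, here is a minimal Python sketch. It is not TCPIP-Link code (that isn't public). The function name `connect_persistently`, its parameters, and the exponential backoff between attempts are all assumptions made for illustration; the post only says the end-points keep retrying.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def connect_persistently(
    connect: Callable[[], T],
    initial_delay: float = 0.5,
    max_delay: float = 30.0,
    max_attempts: Optional[int] = None,   # None = retry forever
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call connect() until it succeeds, waiting between failed attempts.

    Hypothetical helper: the doubling backoff is an assumption, not
    something the original post describes.
    """
    delay = initial_delay
    attempt = 0
    while True:
        attempt += 1
        try:
            return connect()
        except OSError:
            # Refused, unreachable and timed-out connections are all OSError.
            if max_attempts is not None and attempt >= max_attempts:
                raise
            sleep(delay)
            delay = min(delay * 2, max_delay)
```

With a real socket this could be called as `connect_persistently(lambda: socket.create_connection(("192.168.1.10", 5000), timeout=5))`, where the address and port are placeholders. The same helper would be called again whenever an established link is found to be dead.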
The user can set up the following parameters per connection: Buffer expiration time (if the buffer doesn't fill, it'll be transmitted anyway after this period of time), Buffer size (the buffer will be transmitted when it reaches this size), Minimum packet gap (specifies the minimum idle time on the transmission line, especially useful if you send large packets and don't want to hog the line), Maximum packet size (packets are split into this size if they exceed it), and Purge timeout (how long the buffer is maintained after the connection is lost, before it's purged).

You transmit data through write-nodes, and receive data by subscribing to events. Subscribable system events are available to tell you about connects/disconnects etc.

A log is maintained for each connection; you can read the log when you want, or you can subscribe to log events. The log holds the last 500 system events for each connection (Connection, ConnectionAttempt, Disconnection, LinkLifeBegin, LinkLifeEnd, LinkStateChange, ModuleLifeBegin, ModuleLifeEnd, ModuleStateChange etc.) as well as the last 500 errors and warnings.

The underlying protocol, besides persistence, utilizes framing and byte-stuffing to ensure data integrity. 12 different telegram types are used, among which is a KeepAlive telegram that discovers congestion or disconnects that otherwise wouldn't propagate into LabVIEW. If an active network device exists between you and your peer, LabVIEW won't tell you if the peer disconnected by mistake. If you and your peer have a switch between you, for instance, your TCP/IP connection in LabVIEW stays valid even if the network cable is disconnected from your peer's NIC, but no messages will get through. TCPIP-Link will discover this scenario and notify you, close the sockets down, and go into reconnect mode.
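To illustrate the framing and byte-stuffing idea mentioned above, here is a small Python sketch. TCPIP-Link's actual wire format isn't described in this post, so this uses a generic HDLC-style scheme instead: the delimiter byte `0x7E`, the escape byte `0x7D`, the XOR mask, and the function names `stuff` and `unstuff_stream` are all assumptions for illustration only.

```python
from typing import List, Optional

FLAG = 0x7E  # assumed frame delimiter (HDLC-style, not TCPIP-Link's)
ESC = 0x7D   # assumed escape byte
MASK = 0x20  # escaped bytes are XORed with this value


def stuff(payload: bytes) -> bytes:
    """Wrap payload in FLAG bytes, escaping any FLAG/ESC inside it."""
    out = bytearray([FLAG])
    for b in payload:
        if b in (FLAG, ESC):
            out += bytes([ESC, b ^ MASK])
        else:
            out.append(b)
    out.append(FLAG)
    return bytes(out)


def unstuff_stream(data: bytes) -> List[bytes]:
    """Recover the payloads from a byte stream of stuffed frames.

    Bytes before the first FLAG are discarded and empty frames are
    skipped, so back-to-back frames need no special handling.
    """
    frames: List[bytes] = []
    current: Optional[bytearray] = None  # None = not inside a frame yet
    escaped = False
    for b in data:
        if b == FLAG:
            if current:                  # close a non-empty frame
                frames.append(bytes(current))
            current = bytearray()        # a FLAG also opens the next frame
            escaped = False
        elif current is None:
            continue                     # noise before the first delimiter
        elif escaped:
            current.append(b ^ MASK)
            escaped = False
        elif b == ESC:
            escaped = True
        else:
            current.append(b)
    return frames
```

Because the delimiter can never appear inside a stuffed payload, a receiver that joins mid-stream or loses sync only has to wait for the next `FLAG` to find a frame boundary again. Note that in this sketch an empty payload cannot be transmitted, since empty frames are dropped.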
TCPIP-Link of course works on localhost as well, but it's clever enough to skip TCP/IP if you communicate within the same LV-instance, in which case the events are generated directly (you can force TCPIP-Link to use the TCP/IP stack anyway in this case, if you want to).

Something like 20 or 30 networking- and application-related LabVIEW errors are handled transparently inside all components of TCPIP-Link, so it won't wimp out on all the small wrenches that TCP connections throw into your gears. You can read about most of what happens in the warning log if you care, though (error 42 anyone? Oh, we're hitting the driver too hard. Error 62? Wait, I thought it should be 66? No, not on Real-Time etc.).

The API will let you discover running TCPIP-Link parties on the network (UDP multicast to an InformationServer on each LV-instance, with configurable subnet time-to-live and timeout). Servers and clients can be configured individually as Hidden to stay out of this discovery, though.

Traffic data is available for each connection, mostly stuff like line load, payload ratio and such.

It's about 400 VIs, but when you get your connections up and running (which isn't harder than dropping a StartServer or StartClient node and wiring an IP address to it) the performance is 90-95% of the best you (I) can get through the most raw TCP/IP implementation in LabVIEW. And such a basic implementation (TCP Write and TCP Read) leaves a bit to be desired, if the above feature list is what you need.

We (CIM Industrial Systems) use TCPIP-Link in measurement networks to enable cRIOs to persistently stay connected to their host 24/7, for instance. I'm currently pondering implementing stuff like adapter teaming (bundling several NICs into one virtual connection for redundancy and higher bandwidth) as well as data encryption.

Here's a connection diagram example from the user guide (arrows are TCPIP-Link connections):

Cheers,
Steen

1 point